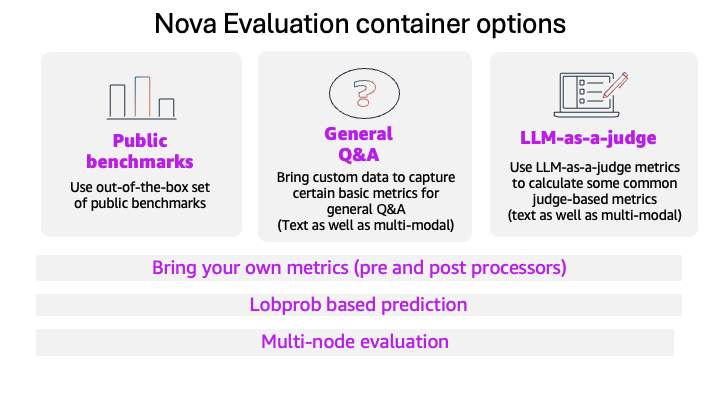

This blog post introduces the new Amazon Nova model evaluation features in Amazon SageMaker AI. This release adds custom metrics support, LLM-based preference testing, log probability capture, metadata analysis, and multi-node scaling for large evaluations.

The new features include:

- Custom metrics use bring your own metrics (BYOM) functions so you can control evaluation criteria for your use case.

- Nova LLM-as-a-Judge handles subjective evaluations through pairwise A/B comparisons, reporting win/tie/loss ratios and Bradley-Terry scores with explanations for each judgment.

- Token-level log probabilities reveal model confidence, which is useful for calibration and routing decisions.

- Metadata passthrough keeps per-row fields for analysis by customer segment, domain, difficulty, or priority level without extra processing.

- Multi-node execution distributes workloads while maintaining stable aggregation, scaling evaluation datasets from thousands to millions of examples.

In SageMaker AI, teams can define model evaluations using JSONL files in Amazon Simple Storage Service (Amazon S3), then run them as SageMaker training jobs with control over pre- and post-processing workflows. Results are delivered as structured JSONL with per-example and aggregated metrics and detailed metadata. Teams can then integrate the results with analytics tools like Amazon Athena and AWS Glue, or route them directly into existing observability stacks, with consistent results.

The rest of this post introduces the new features and then demonstrates, step by step, how to set up evaluations, run judge experiments, capture and analyze log probabilities, use metadata for analysis, and configure multi-node runs in an IT support ticket classification example.

Features for model evaluation using Amazon SageMaker AI

When choosing which models to bring into production, proper evaluation methodologies require testing multiple models, including customized versions in SageMaker AI. To do so effectively, teams need identical test conditions that pass the same prompts, metrics, and evaluation logic to different models. This makes sure score differences reflect model performance, not evaluation methods.

Amazon Nova models that are customized in SageMaker AI now inherit the same full evaluation infrastructure as base models, which makes for a fair comparison. Results land as structured JSONL in Amazon S3, ready for Athena queries or routing to your observability stack. Let's discuss some of the new features available for model evaluation.

Bring your own metrics (BYOM)

Standard metrics might not always fit your specific requirements. The custom metrics feature uses AWS Lambda functions to handle data preprocessing, output post-processing, and metric calculation. For instance, a customer service bot needs empathy and brand consistency metrics, while a medical assistant might require medical accuracy measures. With custom metrics, you can test what matters for your domain.

In this feature, the pre- and post-processor functions are encapsulated in a single Lambda function. The preprocessor runs before inference to normalize formats or inject context, and the post-processor calculates your custom metrics after the model responds. Finally, the results are aggregated using your choice of min, max, average, or sum, which offers greater flexibility when different test examples carry varying importance.

Multimodal LLM-as-a-judge evaluation

LLM-as-a-judge automates preference testing for text as well as multimodal tasks, using Amazon Nova LLM-as-a-Judge models for response comparison. The system implements pairwise evaluation: for each prompt, it compares baseline and challenger responses. It runs the comparison in both forward and backward passes, swapping the order in which the two responses are presented, to detect positional bias. The output includes Bradley-Terry probabilities (the likelihood that one response is preferred over another) with bootstrap-sampled confidence intervals, giving statistical confidence in preference results.
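To make the statistics concrete, here is a minimal Python sketch of a two-model Bradley-Terry estimate with a percentile bootstrap interval. It makes the following assumptions:

- Each prompt ends up with one verdict: "challenger", "baseline", or "tie".
- The hypothetical `reconcile` helper scores a disagreement between the forward and backward passes as a tie.
- Ties count as half a win for each side, which is one common convention.

This illustrates the idea only. It is not necessarily how Nova LLM-as-a-Judge computes its reported scores.

```python
import random

def reconcile(forward: str, backward: str) -> str:
    """Hypothetical rule: keep a verdict only when both presentation orders agree.

    Verdicts name the winning model ("challenger" or "baseline") or "tie"; a disagreement
    between the forward and backward passes is treated as positional bias and scored as a tie.
    """
    return forward if forward == backward else "tie"

def bt_preference(verdicts: list) -> float:
    """Two-model Bradley-Terry estimate of P(challenger preferred over baseline).

    With only two competitors, the maximum-likelihood Bradley-Terry probability is the
    challenger's share of wins; here ties count as half a win for each side.
    """
    wins = sum(v == "challenger" for v in verdicts)
    ties = sum(v == "tie" for v in verdicts)
    return (wins + 0.5 * ties) / len(verdicts)

def bootstrap_ci(verdicts: list, n_boot: int = 2000, alpha: float = 0.05, seed: int = 0):
    """Percentile bootstrap confidence interval for bt_preference, resampling prompts."""
    rng = random.Random(seed)
    stats = sorted(
        bt_preference(rng.choices(verdicts, k=len(verdicts))) for _ in range(n_boot)
    )
    return stats[int(alpha / 2 * n_boot)], stats[int((1 - alpha / 2) * n_boot) - 1]
```

With only two models, the Bradley-Terry probability reduces to the challenger's tie-adjusted win share. The bootstrap interval is what tells you whether a value such as 0.55 is distinguishable from 0.5.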

Nova LLM-as-a-Judge models are purpose-built for judging-related evaluation tasks. Each judgment includes a natural language rationale explaining why the judge preferred one response over the other. This supports targeted improvements rather than blind optimization. Nova LLM-as-a-Judge evaluates complex reasoning tasks like support ticket classification, where nuanced understanding matters more than simple keyword matching.

Tie detection is equally valuable, identifying where models have reached parity. Combined with standard error metrics, it lets you determine whether performance differences are statistically meaningful or within noise margins. This is crucial when deciding whether a model update justifies deployment.

Use log probabilities for model evaluation

Log probabilities provide model confidence for each generated token, revealing insights into model uncertainty and prediction quality. They support calibration studies, confidence routing, and hallucination detection beyond basic accuracy. Token-level confidence helps identify uncertain predictions, which leads to more reliable systems.

The Nova evaluation container used by SageMaker AI model evaluation now captures token-level log probabilities during inference for uncertainty-aware evaluation workflows. The feature integrates with evaluation pipelines and provides the foundation for advanced diagnostic capabilities. You can correlate model confidence with actual performance, implement quality gates based on uncertainty thresholds, and detect potential issues before they affect production systems. Enable log probability capture by adding the top_logprobs parameter to your evaluation configuration:
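The recipe is a YAML file. The following Python sketch applies the same change to a recipe that has already been loaded into a dictionary.

- It assumes top_logprobs belongs in the recipe's inference section, as described in Step 5.
- It enforces the documented range of 0 to 20.
- The function name `with_top_logprobs` is hypothetical.

```python
import copy

def with_top_logprobs(recipe: dict, k: int) -> dict:
    """Return a copy of an evaluation recipe with token log probability capture enabled.

    Assumes top_logprobs belongs in the recipe's "inference" section; check the layout
    of the recipe you start from.
    """
    if not isinstance(k, int) or not 0 <= k <= 20:
        raise ValueError("top_logprobs must be an integer from 0 to 20")
    updated = copy.deepcopy(recipe)  # leave the caller's recipe untouched
    updated.setdefault("inference", {})["top_logprobs"] = k
    return updated
```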

When combined with the metadata passthrough feature discussed in the next section, log probabilities support stratified confidence analysis across different data segments and use cases. This combination provides detailed insights into model behavior. Teams can understand not just where models fail, but why they fail and how confident they are in their predictions, which gives them more control over calibration.

Pass metadata information when using model evaluation

Custom datasets now support metadata fields when preparing the evaluation dataset. Metadata helps compare results across different models and datasets. The metadata field accepts any string for tagging and analysis alongside the input data and evaluation results. With the addition of the metadata field, the overall schema per data point in the JSONL file becomes the following:
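As a rough check before uploading, the following sketch validates one JSONL line against the layout assumed here:

- `query` and `response` are required strings.
- `system` and `metadata` are optional strings.

These field names are our assumption based on the gen_qa format; confirm them against the Nova custom dataset documentation. Because metadata is a string, structured tags can be stored as a JSON-encoded string.

```python
import json

def validate_gen_qa_line(line: str) -> dict:
    """Parse one JSONL line and check it against the assumed gen_qa layout.

    Assumed fields: "query" and "response" (required strings), plus optional
    "system" and "metadata" strings.
    """
    record = json.loads(line)
    for key in ("query", "response"):
        if not isinstance(record.get(key), str):
            raise ValueError(f"'{key}' is required and must be a string")
    for key in ("system", "metadata"):
        if key in record and not isinstance(record[key], str):
            raise ValueError(f"'{key}' must be a string when present")
    return record
```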

Enable multi-node evaluation

The evaluation container supports multi-node evaluation for faster processing. To enable it, set the replicas parameter to a value greater than one.
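A minimal sketch of this change in Python, applied to a recipe loaded as a dictionary. It assumes replicas sits under the recipe's run section, which you should confirm in the recipe you start from. The helper names are hypothetical.

```python
import copy

def with_replicas(recipe: dict, replicas: int) -> dict:
    """Return a copy of the recipe configured to run on `replicas` nodes.

    Assumes the setting lives under the recipe's "run" section.
    """
    if not isinstance(replicas, int) or replicas < 1:
        raise ValueError("replicas must be a positive integer")
    updated = copy.deepcopy(recipe)  # leave the caller's recipe untouched
    updated.setdefault("run", {})["replicas"] = replicas
    return updated

def is_multi_node(recipe: dict) -> bool:
    """Multi-node evaluation is enabled when replicas is greater than one."""
    return recipe.get("run", {}).get("replicas", 1) > 1
```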

Case study: IT support ticket classification assistant

The following case study demonstrates several of these new features using IT support ticket classification. In this use case, models classify tickets as hardware, software, network, or access issues while explaining their reasoning. This tests both accuracy and explanation quality. It also shows custom metrics, metadata passthrough, log probability analysis, and multi-node scaling in practice.

Dataset overview

The support ticket classification dataset contains IT support tickets spanning different priority levels and technical domains, each with structured metadata for detailed analysis. Each evaluation example includes:

- a support ticket query
- the system context
- a structured response containing the predicted category, the reasoning based on ticket content, and a natural language description

Amazon SageMaker Ground Truth responses include thoughtful explanations such as "Based on the error message mentioning network timeout and the user's description of intermittent connectivity, this appears to be a network infrastructure issue requiring escalation to the network team."

The dataset includes metadata tags for difficulty level (easy/medium/hard, based on technical complexity), priority (low/medium/high), and domain category. This demonstrates how metadata passthrough works for stratified analysis without post-processing joins.

Prerequisites

Before you run the notebook, make sure the provisioned environment has the following:

- An AWS account

- AWS Identity and Access Management (IAM) permissions to create a Lambda function, the ability to run SageMaker training jobs within the AWS account from the previous step, and read and write permissions to an S3 bucket

- A development environment with the SageMaker Python SDK and the Nova custom evaluation SDK (nova_custom_evaluation_sdk)

Step 1: Prepare the prompt

For our support ticket classification task, we need to assess whether the model:

- identifies the correct category
- provides coherent reasoning
- adheres to structured output formats

Together, these give the complete picture that production systems require. To craft the prompt, we use Nova prompting best practices.

System prompt design: We start by establishing the model's role and expected behavior through a focused system prompt:

This prompt sets clear expectations: the model should act as a domain expert, base its decisions on the evidence in the ticket, and prioritize accuracy. Framing the task as expert analysis rather than casual observation encourages more thoughtful, detailed responses.

Query structure: The query template requests both classification and justification:

The explicit request for reasoning is crucial because it forces the model to articulate its decision-making process. That makes it possible to evaluate explanation quality alongside classification accuracy. This mirrors real-world requirements, where model decisions often need to be interpretable for stakeholders or for regulatory compliance.
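The exact prompt text used in the notebook isn't reproduced here. The following is a hypothetical system prompt and query template, written only to match the description above:

- an expert role
- evidence-based decisions
- an explicit request for reasoning
- a JSON answer with class, thought, and description keys

```python
CATEGORIES = ("hardware", "software", "network", "access")

# Hypothetical wording that mirrors the described intent; not the notebook's actual prompt.
SYSTEM_PROMPT = (
    "You are an experienced IT support analyst. Classify each ticket using only the "
    "evidence it contains, and prioritize accuracy over speed."
)

QUERY_TEMPLATE = (
    "Classify the following IT support ticket as one of: {categories}.\n"
    "Explain the reasoning behind your classification, then answer only with a JSON "
    'object that has the keys "class", "thought", and "description".\n\n'
    "Ticket:\n{ticket}"
)

def build_messages(ticket: str) -> dict:
    """Assemble the system prompt and the filled-in query for one ticket."""
    query = QUERY_TEMPLATE.format(categories=", ".join(CATEGORIES), ticket=ticket)
    return {"system": SYSTEM_PROMPT, "query": query}
```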

Structured response format: We define the expected output as JSON with three components:

This structure supports the three-dimensional evaluation strategy we discuss later in this post:

- class field – Classification accuracy metrics (precision, recall, F1)

- thought field – Reasoning coherence evaluation

- description field – Natural language quality assessment

Defining the response as parseable JSON enables automated metric calculation through our custom Lambda functions while keeping human-readable explanations for model decisions. This prompt architecture turns evaluation from simple right/wrong classification into a complete assessment of model capabilities. Production AI systems need to be accurate, explainable, and reliable in their output formatting, and our prompt design explicitly tests all three dimensions. The structured format also facilitates the metadata-driven stratified analysis we use in later steps. There, we correlate reasoning quality with confidence scores and difficulty levels across different ticket categories.
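Because the response is JSON, schema adherence can be checked mechanically. This sketch does three things:

- extracts the outermost JSON object from a response, tolerating surrounding text
- checks for the three expected keys
- verifies that the class is one of the four categories

The error labels are hypothetical names chosen so they can feed later failure analysis.

```python
import json

EXPECTED_KEYS = {"class", "thought", "description"}
CATEGORIES = {"hardware", "software", "network", "access"}

def parse_response(text: str):
    """Return (parsed_dict, None) on success or (None, error_label) on failure."""
    start, end = text.find("{"), text.rfind("}")
    if start == -1 or end < start:
        return None, "no_json_object"
    try:
        parsed = json.loads(text[start : end + 1])
    except json.JSONDecodeError:
        return None, "invalid_json"
    missing = EXPECTED_KEYS.difference(parsed)
    if missing:
        return None, "missing_keys:" + ",".join(sorted(missing))
    if str(parsed["class"]).strip().lower() not in CATEGORIES:
        return None, "unknown_class"
    return parsed, None
```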

Step 2: Prepare the dataset for evaluation with metadata

In this step, we prepare our support ticket dataset with metadata to support stratified analysis across different categories and difficulty levels. The metadata passthrough feature keeps custom fields intact for detailed performance analysis without post-hoc joins. Let's review an example dataset.

Dataset schema with metadata

For our support ticket classification evaluation, we use the enhanced gen_qa format with structured metadata:

Let's examine this further: how do we automatically generate structured metadata for each evaluation example? This metadata enrichment process analyzes the content to classify task types, assess difficulty levels, and identify domains. That creates the foundation for stratified analysis in later steps. Embedding this contextual information directly in the dataset means the Nova evaluation pipeline keeps these insights intact. We can then understand model performance across different segments without complex post-processing joins.
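The post doesn't specify how the metadata is generated. As one illustrative approach, the following sketch derives domain and difficulty tags from ticket text with simple keyword heuristics.

- The keyword lists and thresholds are hypothetical.
- A production pipeline might use a trained classifier or an LLM instead.
- Priority is passed in, because it usually comes from the ticketing system.

```python
import re

# Hypothetical keyword lists used only for illustration.
DOMAIN_KEYWORDS = {
    "network": ("vpn", "wifi", "timeout", "dns", "latency"),
    "hardware": ("laptop", "printer", "monitor", "keyboard", "battery"),
    "software": ("install", "crash", "update", "license", "error code"),
    "access": ("password", "login", "permission", "mfa", "locked out"),
}

def enrich_metadata(ticket: str, priority: str = "medium") -> dict:
    """Derive illustrative domain and difficulty tags from ticket text."""
    text = ticket.lower()
    hits = {domain: sum(k in text for k in kws) for domain, kws in DOMAIN_KEYWORDS.items()}
    domain = max(hits, key=hits.get) if any(hits.values()) else "unknown"
    # Tickets that touch several domains, or are long, are treated as harder.
    n_domains = sum(1 for count in hits.values() if count)
    n_words = len(re.findall(r"\w+", text))
    if n_domains >= 2 or n_words > 120:
        difficulty = "hard"
    elif n_words > 40:
        difficulty = "medium"
    else:
        difficulty = "easy"
    return {"domain": domain, "difficulty": difficulty, "priority": priority}
```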

Once our dataset is enriched with metadata, we need to export it in the JSONL format required by the Nova evaluation container.

The following export function formats our prepared examples with embedded metadata so they're ready for the evaluation pipeline, maintaining the exact schema structure needed for the Amazon SageMaker processing workflow:
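The notebook's export function isn't reproduced here. The following sketch shows one way to write such a file, assuming the gen_qa field names system, query, response, and metadata. It serializes the structured answer and the metadata dictionary to JSON strings, because the metadata field accepts strings.

```python
import json
from pathlib import Path

def to_gen_qa_record(example: dict) -> dict:
    """Map a prepared example to the assumed gen_qa fields.

    The structured answer (class, thought, description) and the metadata dict are
    both serialized to JSON strings.
    """
    response = example["response"]
    return {
        "system": example["system"],
        "query": example["query"],
        "response": response if isinstance(response, str) else json.dumps(response),
        "metadata": json.dumps(example.get("metadata", {}), sort_keys=True),
    }

def export_jsonl(examples, path) -> int:
    """Write one JSON object per line (UTF-8) and return the number of records written."""
    count = 0
    with Path(path).open("w", encoding="utf-8") as f:
        for example in examples:
            f.write(json.dumps(to_gen_qa_record(example), ensure_ascii=False) + "\n")
            count += 1
    return count
```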

Step 3: Prepare custom metrics to evaluate custom models

After preparing your data and verifying that it adheres to the required schema, the next step is to develop evaluation metrics code that assesses your custom model's performance. Use the Nova evaluation container and the bring your own metrics (BYOM) workflow to control your model evaluation pipeline with custom metrics and data workflows.

Introduction to the BYOM workflow

With the BYOM feature, you can tailor your model evaluation workflow to your specific needs with fully customizable pre-processing, post-processing, and metrics functions. BYOM gives you control over the evaluation process, helping you fine-tune and improve your model's performance metrics according to your project's unique requirements.

Key tasks for this classification problem

- Define tasks and metrics: In this use case, model evaluation requires three tasks:

- Class prediction accuracy: This assesses how accurately the model predicts the correct class for given inputs. For this, we use standard metrics such as accuracy, precision, recall, and F1 score to quantify performance.

- Schema adherence: We also want to make sure that the model's outputs conform to the specified schema. This step is crucial for maintaining consistency and compatibility with downstream applications. For this, we use validation techniques to verify that the output format matches the required schema.

- Thought process coherence: Finally, we want to evaluate the coherence of the reasoning behind the model's decisions. This involves analyzing the model's thought process to help validate that predictions are logically sound. Techniques such as attention mechanisms, interpretability tools, and model explanations can provide insights into the model's decision-making process.
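For the class prediction accuracy task, the following sketch computes accuracy plus per-class and macro-averaged precision, recall, and F1, using only the standard library.

```python
from collections import Counter

def classification_report(y_true, y_pred, labels):
    """Accuracy plus per-class and macro-averaged precision, recall, and F1."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted label gets a false positive
            fn[truth] += 1  # true label gets a false negative
    per_class = {}
    for label in labels:
        p_den, r_den = tp[label] + fp[label], tp[label] + fn[label]
        precision = tp[label] / p_den if p_den else 0.0
        recall = tp[label] / r_den if r_den else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        per_class[label] = {"precision": precision, "recall": recall, "f1": f1}
    macro = {m: sum(c[m] for c in per_class.values()) / len(labels)
             for m in ("precision", "recall", "f1")}
    accuracy = sum(tp.values()) / len(y_true) if y_true else 0.0
    return {"accuracy": accuracy, "per_class": per_class, "macro": macro}
```

You can map unparseable outputs to a sentinel label such as "invalid", a convention chosen here for illustration. They then count as errors and lower recall for the true class.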

The BYOM feature for evaluating custom models requires building a Lambda function.

- Configure a custom layer for your Lambda function. In the GitHub release, find and download the pre-built nova-custom-eval-layer.zip file.

- Use the following command to upload the custom Lambda layer:

- Add the published layer and AWSLambdaPowertoolsPythonV3-python312-arm64 (or a comparable AWS layer, chosen for compatibility with your Python and runtime versions) to your Lambda function so that all necessary dependencies are installed.

- For development of the Lambda function, import two key dependencies: one for the preprocessor and postprocessor decorators, and one to build the lambda_handler:

- Add the preprocessor and postprocessor logic.

- Preprocessor logic: Implement functions that manipulate the data before it's passed to the inference server. This can include prompt manipulations or other data preprocessing steps. The preprocessor expects an event dictionary (dict), a collection of key-value pairs, as input:

Instance:

- Postprocessor logic: Implement functions that process the inference results. This can involve parsing fields, adding custom validations, or calculating specific metrics. The postprocessor expects an event dict as input, which has this format:


- Define the Lambda handler, where you add the preprocessor and postprocessor logic, which run before and after inference, respectively.
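The SDK decorators and the exact event formats aren't reproduced in this post. The following is therefore a plain-Python sketch under a hypothetical event shape:

- a `process_type` field set to "preprocess" or "postprocess"
- a `data` list of items with `prompt`, `inference_output`, `gold`, and `id` fields

The sketch illustrates the division of work (normalize before inference, score after) rather than the SDK's API. Replace the dispatch and field names with those documented for nova_custom_evaluation_sdk.

```python
import json

CATEGORIES = {"hardware", "software", "network", "access"}

def preprocess(item: dict) -> dict:
    """Hypothetical preprocessor: collapse runs of whitespace in the prompt before inference."""
    cleaned = dict(item)
    cleaned["prompt"] = " ".join(str(item.get("prompt", "")).split())
    return cleaned

def postprocess(item: dict) -> dict:
    """Hypothetical postprocessor: parse the JSON answer and emit per-example metrics."""
    try:
        answer = json.loads(item.get("inference_output") or "")
        valid = isinstance(answer, dict) and {"class", "thought", "description"}.issubset(answer)
    except json.JSONDecodeError:
        answer, valid = None, False
    predicted = str(answer.get("class", "")).strip().lower() if valid else ""
    expected = str(item.get("gold", "")).strip().lower()
    correct = valid and predicted in CATEGORIES and predicted == expected
    return {
        "id": item.get("id"),
        "metrics": [
            {"metric": "schema_adherence", "value": float(valid)},
            {"metric": "class_accuracy", "value": float(correct)},
        ],
    }

def lambda_handler(event, context):
    """Dispatch each item to the pre- or post-processor using a hypothetical process_type flag."""
    handlers = {"preprocess": preprocess, "postprocess": postprocess}
    step = event.get("process_type")
    if step not in handlers:
        return {"statusCode": 400, "body": f"unknown process_type: {step}"}
    return {"statusCode": 200, "body": [handlers[step](item) for item in event.get("data", [])]}
```

The per-example metric values are what the aggregation setting (min, max, average, or sum) later combines across the dataset.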

Step 4: Launch the evaluation job with custom metrics

Now that you have built your custom processors and encoded your evaluation metrics, you can choose a recipe and make the necessary adjustments so that the BYOM logic gets executed. First, choose one of the bring your own data recipes from the public repo, and make sure the following changes are made.

- Make sure that the processor key is added to the recipe with the correct details:

- lambda-arn: The Amazon Resource Name (ARN) of the customer Lambda function that handles pre-processing and post-processing

- preprocessing: Whether to add custom pre-processing operations

- post-processing: Whether to add custom post-processing operations

- aggregation: The built-in aggregation function to use: min, max, average, or sum

- Launch a training job with the evaluation container:
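The following sketch shows both steps in Python.

- `with_processor` adds the processor section to a recipe loaded as a dictionary, using the key names listed above. Confirm their exact spelling and nesting in the recipe you start from.
- `launch` assumes a SageMaker Python SDK version whose PyTorch estimator accepts a `training_recipe` argument.
- The image URI, instance type, and "train" channel name are placeholders to take from the Nova evaluation documentation.
- The function ARN in the test is made up.

Write the updated dictionary back to a YAML file before passing its path to `launch`.

```python
import copy

AGGREGATIONS = {"min", "max", "average", "sum"}

def with_processor(recipe: dict, lambda_arn: str, preprocessing: bool = True,
                   postprocessing: bool = True, aggregation: str = "average") -> dict:
    """Return a copy of the recipe with a BYOM processor section added.

    Key names follow the list in this step; confirm spelling and nesting against your recipe.
    """
    if aggregation not in AGGREGATIONS:
        raise ValueError(f"aggregation must be one of {sorted(AGGREGATIONS)}")
    if not (lambda_arn.startswith("arn:") and ":lambda:" in lambda_arn):
        raise ValueError("expected a Lambda function ARN")
    updated = copy.deepcopy(recipe)
    updated["processor"] = {
        "lambda-arn": lambda_arn,
        "preprocessing": preprocessing,
        "post-processing": postprocessing,
        "aggregation": aggregation,
    }
    return updated

def launch(recipe_path: str, role: str, instance_type: str, image_uri: str,
           eval_input_s3: str, output_s3: str):
    """Sketch: run the evaluation as a SageMaker training job (requires the sagemaker package)."""
    from sagemaker.pytorch import PyTorch  # lazy import so with_processor works without the SDK

    estimator = PyTorch(
        role=role,
        instance_type=instance_type,
        training_recipe=recipe_path,
        image_uri=image_uri,
        output_path=output_s3,
    )
    estimator.fit(inputs={"train": eval_input_s3})
    return estimator
```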

Step 5: Use metadata and log probabilities to calibrate the accuracy

You can also include log probabilities as an inference configuration variable to conduct logit-based evaluations. For this, pass top_logprobs under inference in the recipe:

top_logprobs indicates the number of most likely tokens to return at each token position, each with an associated log probability. This value must be an integer from 0 to 20. The returned logprobs contain the candidate output tokens and the log probability of each output token returned in the message content.
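Because top_logprobs returns several alternatives per position, you can also see how much probability the model assigned to competing labels. A minimal sketch, with two assumptions that depend on your data and tokenizer:

- the alternatives at one position are available as (token, log probability) pairs
- each category name is a single token

```python
import math

def label_distribution(top_alternatives, labels):
    """Renormalize the top-k alternatives at one output position over candidate labels.

    top_alternatives: (token, logprob) pairs for a single position.
    Labels that don't appear among the alternatives get probability 0.
    """
    mass = {label: 0.0 for label in labels}
    for token, logprob in top_alternatives:
        key = token.strip().lower()
        if key in mass:
            mass[key] += math.exp(logprob)  # log probability back to probability
    total = sum(mass.values())
    return {label: m / total for label, m in mass.items()} if total > 0 else mass
```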

Once the job runs successfully and you have the results, you can find the log probabilities under the field pred_logprobs, which holds the output tokens and their log probabilities. You can now use these log probabilities to calibrate your classification task. Converting them to probabilities lets you treat them as a confidence score for each prediction and adjust predictions accordingly.
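One way to check whether these confidence scores are calibrated is expected calibration error (ECE). ECE bins predictions by confidence and compares each bin's average confidence with its accuracy. We chose ECE for illustration; it is not something the Nova container computes for you.

```python
def expected_calibration_error(confidences, correct, n_bins: int = 10) -> float:
    """Weighted average gap between mean confidence and accuracy across equal-width bins."""
    if not confidences or len(confidences) != len(correct):
        raise ValueError("need non-empty inputs of equal length")
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        index = min(int(conf * n_bins), n_bins - 1)  # a confidence of 1.0 lands in the top bin
        bins[index].append((conf, ok))
    ece = 0.0
    for members in bins:
        if members:
            mean_conf = sum(c for c, _ in members) / len(members)
            accuracy = sum(ok for _, ok in members) / len(members)
            ece += len(members) / len(confidences) * abs(mean_conf - accuracy)
    return ece
```

A well-calibrated model has an ECE near 0. A large value suggests that raw token probabilities should be rescaled (for example, with temperature scaling) before you use them as routing thresholds.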

Step 6: Failure analysis on low-confidence predictions

After calibrating our model using metadata and log probabilities, we can identify and analyze failure patterns in low-confidence predictions. This analysis helps us understand where our model struggles and guides targeted improvements.

Loading results with log probabilities

Now, let's examine in detail how we combine the inference outputs with detailed log probability data from the Amazon Nova evaluation pipeline. Merging the prediction results with token-level uncertainty information lets us perform confidence-aware failure analysis.

Generate a confidence score from the log probabilities by converting them to probabilities and using the score of the first token in the classification response. We use only the first token because the subsequent tokens of the classification align with the class label. This step enables several downstream uses:

- quality gates that route low-confidence predictions to human review
- visibility into whether the model is "guessing"
- keeping hallucinations from reaching users
- stratified analysis later on
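A minimal sketch of this step, assuming the log probabilities of the classification tokens have already been extracted from pred_logprobs into a list. The `class_token_logprobs` key and the 0.7 threshold are hypothetical.

```python
import math

def first_token_confidence(token_logprobs: list) -> float:
    """Probability of the first classification token: exp of its log probability."""
    return math.exp(token_logprobs[0]) if token_logprobs else 0.0

def route(prediction: dict, threshold: float = 0.7) -> str:
    """Hypothetical quality gate: auto-accept confident predictions, send the rest to review."""
    confidence = first_token_confidence(prediction.get("class_token_logprobs", []))
    return "auto" if confidence >= threshold else "human_review"
```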

Initial analysis

Next, we perform stratified failure analysis, which combines confidence scores with metadata categories to identify specific failure patterns and root causes across task types, difficulty levels, and domains. The analysis proceeds in four steps:

- Filter the predictions that fall below the confidence threshold.
- Analyze those predictions across metadata categories to pinpoint where the model struggles most.
- Look for uncertainty language in the failed predictions, and categorize error types (JSON format issues, length problems, or content errors).
- Generate insights that tell teams exactly what to fix.
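The following sketch illustrates this analysis. It assumes each result row carries:

- a confidence score
- a correct flag
- the metadata string passed through from the dataset

The raw model output is passed to `error_type` and `uses_hedging` separately. The hedge phrases, the 2,000-character length cutoff, and the 0.7 threshold are hypothetical choices.

```python
import json
from collections import defaultdict

HEDGE_PHRASES = ("might", "possibly", "unclear", "not sure", "could be")

def stratified_failures(rows, threshold=0.7, keys=("difficulty", "domain", "priority")):
    """Count low-confidence predictions and their errors per metadata value.

    Each row needs "confidence" (float), "correct" (bool), and "metadata" (JSON string).
    """
    report = {key: defaultdict(lambda: {"low_conf": 0, "errors": 0}) for key in keys}
    for row in rows:
        if row["confidence"] >= threshold:
            continue  # only low-confidence predictions are analyzed here
        meta = json.loads(row.get("metadata") or "{}")
        for key in keys:
            bucket = report[key][meta.get(key, "unknown")]
            bucket["low_conf"] += 1
            bucket["errors"] += 0 if row["correct"] else 1
    return {key: dict(groups) for key, groups in report.items()}

def uses_hedging(text: str) -> bool:
    """Flag uncertainty language in the model's explanation."""
    lowered = text.lower()
    return any(phrase in lowered for phrase in HEDGE_PHRASES)

def error_type(raw_output: str, max_chars: int = 2000) -> str:
    """Rough taxonomy: JSON format issue, length problem, or content error."""
    try:
        json.loads(raw_output)
    except json.JSONDecodeError:
        return "json_format"
    return "length" if len(raw_output) > max_chars else "content"
```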

Preview initial results

Now let's review our initial results, showing what was parsed out.

Step 7: Scale the evaluations with multi-node prediction

After identifying failure patterns, we need to scale our evaluation to larger datasets for testing. Nova evaluation containers now support multi-node evaluation, which improves throughput and speed when you configure the number of replicas in the recipe.

The Nova evaluation container handles multi-node scaling automatically when you specify more than one replica in your evaluation recipe. Multi-node scaling distributes the workload across multiple nodes while maintaining the same evaluation quality and metadata passthrough capabilities.

Result aggregation and performance analysis

The Nova evaluation container automatically aggregates results from multiple replicas. We can still analyze the scaling effectiveness and apply metadata-based analysis to the distributed evaluation.
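To quantify scaling effectiveness, you can compare wall-clock times from runs with different replica counts on the same dataset. This sketch computes three values per replica count:

- throughput
- speedup over a single-replica baseline
- parallel efficiency (speedup divided by replica count)

The timings you pass in come from your own jobs.

```python
def scaling_summary(n_examples: int, baseline_seconds: float, runs: dict) -> dict:
    """Throughput, speedup, and parallel efficiency for each replica count.

    runs maps replica count -> wall-clock seconds on the same dataset;
    baseline_seconds is the single-replica wall-clock time.
    """
    summary = {}
    for replicas, seconds in sorted(runs.items()):
        speedup = baseline_seconds / seconds
        summary[replicas] = {
            "throughput": n_examples / seconds,  # examples per second
            "speedup": speedup,
            "efficiency": speedup / replicas,  # 1.0 means perfect linear scaling
        }
    return summary
```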

Multi-node evaluation uses the Nova evaluation container's built-in capabilities through the replicas parameter. It distributes workloads automatically and aggregates results while preserving all metadata-based stratified analysis capabilities. The container handles the complexity of distributed processing, so teams can scale from thousands to millions of examples by increasing the replica count.

Conclusion

This example demonstrated Nova model evaluation fundamentals and the capabilities of the new feature releases for the Nova evaluation container. We showed how custom metrics (BYOM) with domain-specific assessments can drive deep insights. We then explained how to extract and use log probabilities to reveal model uncertainty, which eases the implementation of quality gates and confidence-based routing. Next, we showed how the metadata passthrough capability is used downstream for stratified analysis, pinpointing where models struggle and where to focus improvements. Finally, we showed a simple way to scale these techniques with multi-node evaluation. Including these features in your evaluation pipeline can help you make informed decisions about which models to adopt and where to apply customization.

Get started with the Nova evaluation demo notebook. It has detailed executable code for each step above, from dataset preparation through failure analysis, and gives you a baseline to modify for your own use case.

Check out the Amazon Nova Samples repository for complete code examples across a variety of use cases.

About the authors

Tony Santiago is a Worldwide Partner Solutions Architect at AWS, dedicated to scaling generative AI adoption across Global Systems Integrators. He specializes in solution building, technical go-to-market alignment, and capability development, enabling tens of thousands of developers at GSI partners to deliver AI-powered solutions for their customers. Drawing on more than 20 years of global technology experience and a decade with AWS, Tony champions practical technologies that drive measurable business outcomes. Outside of work, he's passionate about learning new things and spending time with family.


Akhil Ramaswamy is a Worldwide Specialist Solutions Architect at AWS, specializing in advanced model customization and inference on SageMaker AI. He partners with global enterprises across various industries to solve complex business problems using the AWS generative AI stack. With expertise in building production-grade agentic systems, Akhil focuses on developing scalable go-to-market solutions that help enterprises drive innovation while maximizing ROI. Outside of work, you can find him traveling, working out, or enjoying a nice book.


Anupam Dewan is a Senior Solutions Architect on the Amazon Nova team with a passion for generative AI and its real-world applications. He focuses on building, enabling, and benchmarking AI applications for generative AI customers at Amazon. With a background in AI/ML, data science, and analytics, Anupam helps customers learn Amazon Nova and make it work for their generative AI use cases to deliver business outcomes. Outside of work, you can find him hiking or enjoying nature.
