This post is co-written by Mark Warner, Principal Solutions Architect for Thales, Cyber Security Products.

As generative AI applications make their way into production environments, they integrate with a wider range of business systems that process sensitive customer data. This integration introduces new challenges around protecting personally identifiable information (PII) while keeping the ability to recover the original data when downstream applications legitimately need it. Consider a financial services company implementing generative AI across different departments. The customer service team needs an AI assistant that can access customer profiles and provide personalized responses that include contact information, for example: "We'll send your new card to your address at 123 Main Street." Meanwhile, the fraud analysis team requires the same customer data but must analyze patterns without exposing actual PII, working only with protected representations of sensitive information.

Amazon Bedrock Guardrails helps detect sensitive information, such as PII, in standard format in input prompts or model responses. Sensitive information filters give organizations control over how sensitive data is handled, with options to block requests containing PII or to mask the sensitive information with generic placeholders like {NAME} or {EMAIL}. This capability helps organizations comply with data protection regulations while still using the power of large language models (LLMs).

Although masking effectively protects sensitive information, it creates a new challenge: the loss of data reversibility. When guardrails replace sensitive data with generic masks, the original information becomes inaccessible to downstream applications that might need it for legitimate business processes. This limitation affects workflows that require both security and functional data.

Tokenization offers a complementary approach to this challenge. Unlike masking, tokenization replaces sensitive data with format-preserving tokens that are mathematically unrelated to the original information but keep its structure and usability. Authorized systems can securely reverse these tokens to their original values when needed, creating a path for secure data flows throughout an organization's environment.
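To make the difference between masking and tokenization concrete, the following toy sketch contrasts a generic mask with a vault-backed token that keeps the shape of the original value and can be reversed by whoever holds the vault. ToyTokenVault and mask are hypothetical names for illustration only; this is not a cryptographic design and not how Thales CipherTrust generates tokens.

```python
import secrets
import string
from typing import Dict

ALPHABETS = (string.digits, string.ascii_lowercase, string.ascii_uppercase)


class ToyTokenVault:
    """Illustrative vault-backed tokenizer: random, format-preserving, reversible.

    Not a production or cryptographic design, and not how Thales CipherTrust
    generates tokens; it only demonstrates the two properties described above.
    """

    def __init__(self, max_attempts: int = 1000) -> None:
        self._max_attempts = max_attempts
        self._token_to_value: Dict[str, str] = {}
        self._value_to_token: Dict[str, str] = {}

    @staticmethod
    def _random_like(ch: str) -> str:
        # Replace a character with a random one of the same class; keep separators
        # such as "@", "." and "-" so the overall format is preserved.
        for alphabet in ALPHABETS:
            if ch in alphabet:
                return secrets.choice(alphabet)
        return ch

    def tokenize(self, value: str) -> str:
        if value in self._value_to_token:  # same value, same token
            return self._value_to_token[value]
        if not any(ch in alphabet for alphabet in ALPHABETS for ch in value):
            raise ValueError("value has no characters that can be tokenized")
        for _ in range(self._max_attempts):
            token = "".join(self._random_like(ch) for ch in value)
            # The token must differ from the value and must not collide with another token.
            if token != value and token not in self._token_to_value:
                self._token_to_value[token] = value
                self._value_to_token[value] = token
                return token
        raise RuntimeError("could not generate a unique token")

    def detokenize(self, token: str) -> str:
        # Only a holder of the vault can map a token back to its original value.
        return self._token_to_value[token]


def mask(entity_type: str) -> str:
    # What a masking filter produces instead: a generic, irreversible placeholder.
    return "{" + entity_type + "}"
```

For example, mask("EMAIL") always yields {EMAIL}, so every email address collapses to the same placeholder, whereas vault.tokenize("j.smith@example.com") yields a random string of the same length with "@" and "." in the same positions, and vault.detokenize on that string returns the original address.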

In this post, we show you how to integrate Amazon Bedrock Guardrails with third-party tokenization services to protect sensitive data while maintaining data reversibility. By combining these technologies, organizations can implement stronger privacy controls while preserving the functionality of their generative AI applications and related systems. The solution combines Amazon Bedrock Guardrails with tokenization services from the Thales CipherTrust Data Security Platform to create an architecture that protects sensitive data without sacrificing the ability to process that data securely when needed. This approach is particularly valuable for organizations in highly regulated industries that need to balance innovation with compliance requirements.

Amazon Bedrock Guardrails APIs

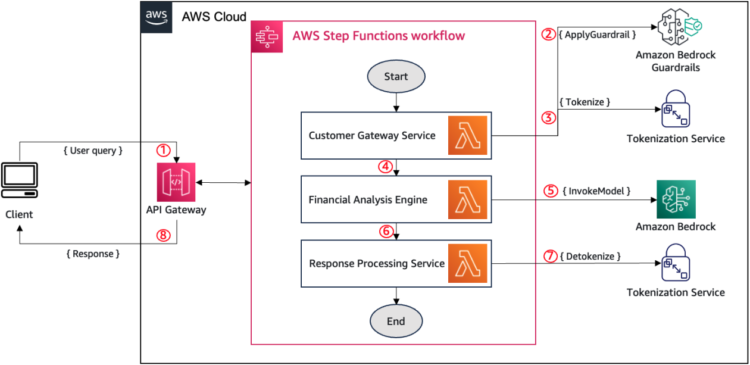

This section describes the key components and workflow for the integration between Amazon Bedrock Guardrails and a third-party tokenization service.

Amazon Bedrock Guardrails provides two distinct approaches for implementing content safety controls:

- Direct integration with model invocation through APIs like InvokeModel and Converse, where guardrails automatically evaluate inputs and outputs as part of the model inference process.

- Standalone evaluation through the ApplyGuardrail API, which decouples guardrail assessment from model invocation, allowing text to be evaluated against defined policies.
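For contrast with the approach this post uses, here is a minimal sketch of the direct integration: the guardrail is attached to a Converse call through guardrailConfig, so assessment and inference happen in one request. The function name and the runtime parameter (a boto3 "bedrock-runtime" client you create yourself) are illustrative assumptions.

```python
from typing import Any, Tuple


def converse_with_inline_guardrail(runtime: Any, model_id: str, guardrail_id: str,
                                   guardrail_version: str, prompt: str) -> Tuple[str, bool]:
    """Direct integration: the guardrail is evaluated inside the Converse call."""
    resp = runtime.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        guardrailConfig={
            "guardrailIdentifier": guardrail_id,
            "guardrailVersion": guardrail_version,
        },
    )
    # Assessment and inference happen in a single call, so the application only
    # sees the final text; there is no step where masks could be swapped for tokens.
    intervened = resp.get("stopReason") == "guardrail_intervened"
    text = "".join(part.get("text", "") for part in resp["output"]["message"]["content"])
    return text, intervened
```

Because the application never sees the masked prompt before the model does, this shape leaves no room for tokenization, which is why the rest of the walkthrough relies on ApplyGuardrail instead.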

This post uses the ApplyGuardrail API for the tokenization integration because it separates content assessment from model invocation, so tokenization can be inserted between these steps. This separation creates the space in the workflow to replace guardrail masks with format-preserving tokens before model invocation, or to tokenize PII in the model response before that response is handed to the target application downstream.

The solution extends the typical ApplyGuardrail API implementation by inserting tokenization processing between guardrail evaluation and model invocation, as follows:

- The application calls the ApplyGuardrail API to assess the user input for sensitive information.

- If no sensitive information is detected (action = "NONE"), the application proceeds to model invocation through the InvokeModel API.

- If sensitive information is detected (the response action is "GUARDRAIL_INTERVENED" and the detected PII entities are reported with action = "ANONYMIZED"):

  - The application captures the detected PII entities and the masked text, whose placeholders mark where each entity appeared.

  - It calls a tokenization service to convert these entities into format-preserving tokens.

  - It replaces the generic guardrail masks with these tokens.

  - The application then invokes the foundation model with the tokenized content.

- For model responses:

  - The application applies guardrails to check the model output for sensitive information.

  - It tokenizes detected PII before passing the response to downstream systems.
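The decision flow above can be sketched as a small orchestration function. This is a minimal sketch, not the post's implementation: assess, sanitize, and invoke are hypothetical callables that you would back with the ApplyGuardrail API, your tokenization service, and InvokeModel respectively.

```python
from typing import Any, Callable, Dict


def handle_request(
    assess: Callable[[str, str], Dict[str, Any]],    # (text, "INPUT"/"OUTPUT") -> ApplyGuardrail-style response
    sanitize: Callable[[Dict[str, Any], str], str],  # (response, text) -> text with masks swapped for tokens
    invoke: Callable[[str], str],                    # prompt -> model text
    user_input: str,
) -> str:
    # 1. Assess the user input without calling a model.
    verdict = assess(user_input, "INPUT")
    # 2. Nothing detected: send the original input to the model unchanged.
    # 3. Otherwise: tokenize the detected PII and replace the guardrail masks.
    prompt = user_input if verdict["action"] == "NONE" else sanitize(verdict, user_input)
    answer = invoke(prompt)
    # 4. Check the model output the same way before handing it downstream.
    out_verdict = assess(answer, "OUTPUT")
    return answer if out_verdict["action"] == "NONE" else sanitize(out_verdict, answer)
```

Keeping each step behind a callable mirrors the component split in the solution: the same function works whether the steps run in one process or as separate Lambda functions.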

Solution overview

To illustrate how this workflow delivers value in practice, consider a financial advisory application that helps customers understand their spending patterns and receive personalized financial recommendations. In this example, three distinct application components work together to provide secure, AI-powered financial insights:

- Customer gateway service – This trusted frontend orchestrator receives customer queries that often contain sensitive information. For example, a customer might ask: "Hi, this is j.smith@example.com. Based on my last 5 transactions on acme.com, and my current balance of $2,342.18, should I consider their new credit card offer?"

- Financial analysis engine – This AI-powered component analyzes financial patterns and generates recommendations but doesn't need access to actual customer PII. It works with anonymized or tokenized information.

- Response processing service – This trusted service handles the final customer communication, including detokenizing sensitive information before presenting results to the customer.

The following diagram illustrates the workflow for integrating Amazon Bedrock Guardrails with tokenization services in this financial advisory application. AWS Step Functions orchestrates the sequential process of PII detection, tokenization, AI model invocation, and detokenization across the three key components (customer gateway service, financial analysis engine, and response processing service) using AWS Lambda functions.

The workflow operates as follows:

- The customer gateway service (for this example, through Amazon API Gateway) receives the user input containing sensitive information.

- It calls the ApplyGuardrail API to identify PII or other sensitive information that should be anonymized or blocked.

- For detected sensitive elements (such as user names or merchant names), it calls the tokenization service to generate format-preserving tokens.

- The input with tokenized values is passed to the financial analysis engine for processing. (For example, "Hi, this is [[TOKEN_123]]. Based on my last 5 transactions on [[TOKEN_456]] and my current balance of $2,342.18, should I consider their new credit card offer?")

- The financial analysis engine invokes an LLM on Amazon Bedrock to generate financial advice using the tokenized data.

- The model response, potentially containing tokenized values, is sent to the response processing service.

- This service calls the tokenization service to detokenize the tokens, restoring the original sensitive values.

- The final, detokenized response is delivered to the customer.
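The sequence above maps naturally onto a Step Functions state machine. The following sketch builds an Amazon States Language definition as a Python dict; the Lambda function names, the Choice state that skips tokenization when nothing is detected, and the assumption that the detection Lambda returns ApplyGuardrail's action field at the top level of its output are all illustrative, not the post's actual deployment.

```python
import json


def build_definition(region: str, account: str) -> dict:
    """Sequential ASL definition mirroring the workflow steps above (hypothetical names)."""
    def fn(name: str) -> str:
        return f"arn:aws:lambda:{region}:{account}:function:{name}"

    return {
        "Comment": "PII detection, tokenization, model invocation, detokenization",
        "StartAt": "DetectPII",
        "States": {
            # Calls ApplyGuardrail; assumed to return {"action": ..., ...}.
            "DetectPII": {"Type": "Task", "Resource": fn("guardrail-detect"), "Next": "AnyPII"},
            # Skip tokenization when the guardrail found nothing.
            "AnyPII": {
                "Type": "Choice",
                "Choices": [{"Variable": "$.action", "StringEquals": "NONE", "Next": "InvokeModel"}],
                "Default": "TokenizePII",
            },
            "TokenizePII": {"Type": "Task", "Resource": fn("tokenize-entities"), "Next": "InvokeModel"},
            # Financial analysis engine: calls the LLM with tokenized input.
            "InvokeModel": {"Type": "Task", "Resource": fn("financial-analysis"), "Next": "Detokenize"},
            # Response processing service: restores original values.
            "Detokenize": {"Type": "Task", "Resource": fn("detokenize-response"), "End": True},
        },
    }


def definition_json(region: str, account: str) -> str:
    # Serialized form, suitable for the definition parameter when creating a state machine.
    return json.dumps(build_definition(region, account), indent=2)
```

A production definition would also add Retry and Catch blocks on each Task state so that a tokenization or model failure does not leak partially processed data.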

This architecture maintains data confidentiality throughout the processing flow while preserving the information's utility. The financial analysis engine works with structurally valid but cryptographically protected data, allowing it to generate meaningful recommendations without exposing sensitive customer information. Meanwhile, the trusted components at the entry and exit points of the workflow can access the actual data when necessary, creating a secure end-to-end solution.

In the following sections, we provide a detailed walkthrough of implementing the integration between Amazon Bedrock Guardrails and tokenization services.

Prerequisites

To implement the solution described in this post, you must have the following components configured in your environment:

- An AWS account with Amazon Bedrock enabled in your target AWS Region.

- Appropriate AWS Identity and Access Management (IAM) permissions configured following least-privilege principles, with the following actions enabled: bedrock:CreateGuardrail, bedrock:ApplyGuardrail, and bedrock:InvokeModel.

  - For AWS Organizations, verify that Amazon Bedrock access is permitted by service control policies.

- A Python 3.7+ environment with the boto3 library installed. For information about installing the boto3 library, refer to AWS SDK for Python (Boto3).

- AWS credentials configured for programmatic access using the AWS Command Line Interface (AWS CLI). For more details, refer to Configuring settings for the AWS CLI.

- A deployed tokenization service accessible through REST API endpoints. Although this walkthrough demonstrates integration with Thales CipherTrust, the pattern adapts to any tokenization provider that offers protect and unprotect API operations. Make sure network connectivity exists between your application environment and both the AWS APIs and your tokenization service endpoints, and that you have valid authentication credentials for your chosen tokenization service. For information about setting up Thales CipherTrust specifically, refer to How Thales Enables PCI DSS Compliance with a Tokenization Solution on AWS.
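For the IAM prerequisite, the following sketch generates a policy document that scopes each action named above to a specific resource. The Region, account ID, guardrail ID, and model ID are placeholders, and scoping bedrock:CreateGuardrail to guardrail/* is an assumption to verify against the Service Authorization Reference for Amazon Bedrock. Note that IAM actions for the runtime APIs also use the bedrock: prefix, and the Converse API is authorized through bedrock:InvokeModel as well.

```python
import json


def runtime_policy(region: str, account: str, guardrail_id: str, model_id: str) -> str:
    """Least-privilege policy sketch for the actions listed in the prerequisites."""
    guardrail_arn = f"arn:aws:bedrock:{region}:{account}:guardrail/{guardrail_id}"
    # Foundation model ARNs have an empty account field.
    model_arn = f"arn:aws:bedrock:{region}::foundation-model/{model_id}"
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ManageGuardrail",
                "Effect": "Allow",
                "Action": ["bedrock:CreateGuardrail"],
                # Assumption: confirm resource-level support before relying on this scope.
                "Resource": f"arn:aws:bedrock:{region}:{account}:guardrail/*",
            },
            {"Sid": "ApplyGuardrail", "Effect": "Allow",
             "Action": ["bedrock:ApplyGuardrail"], "Resource": guardrail_arn},
            {"Sid": "InvokeModel", "Effect": "Allow",
             "Action": ["bedrock:InvokeModel"], "Resource": model_arn},
        ],
    }
    return json.dumps(policy, indent=2)
```

In practice you might split this into a setup role (CreateGuardrail) and a runtime role (ApplyGuardrail and InvokeModel) so the Lambda functions never hold guardrail-management permissions.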

Configure Amazon Bedrock Guardrails

Configure Amazon Bedrock Guardrails for PII detection and masking through the Amazon Bedrock console or programmatically using the AWS SDK. Sensitive information filter policies can anonymize or redact information in model requests or responses.
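A minimal SDK sketch, assuming bedrock is a boto3 "bedrock" (control plane) client: it creates a guardrail whose sensitive information policy anonymizes a selection of PII types and then publishes a numbered version. The entity selection (including URL, on the assumption that it would catch merchant domains such as acme.com), the guardrail name, and the blocked-message texts are illustrative choices.

```python
from typing import Any, Tuple

# Example entity selection for the financial advisory scenario (assumption).
PII_TYPES = ["NAME", "EMAIL", "PHONE", "ADDRESS", "URL"]


def create_pii_guardrail(bedrock: Any, name: str) -> Tuple[str, str]:
    """Create a guardrail that anonymizes selected PII types, then publish a version."""
    created = bedrock.create_guardrail(
        name=name,
        description="Masks PII so that masks can be swapped for tokens",
        sensitiveInformationPolicyConfig={
            # "ANONYMIZE" replaces matches with {TYPE} placeholders instead of blocking.
            "piiEntitiesConfig": [{"type": t, "action": "ANONYMIZE"} for t in PII_TYPES]
        },
        # Both messages are required, even if only masking is configured.
        blockedInputMessaging="Sorry, this request cannot be processed.",
        blockedOutputsMessaging="Sorry, this response cannot be shown.",
    )
    # Publish an immutable version to reference from ApplyGuardrail calls.
    version = bedrock.create_guardrail_version(
        guardrailIdentifier=created["guardrailId"],
        description="Initial version",
    )
    return created["guardrailId"], version["version"]
```

Usage: guardrail_id, guardrail_version = create_pii_guardrail(boto3.client("bedrock"), "pii-tokenization-demo"). Note that the configuration uses "ANONYMIZE", while assessment results report matched entities as "ANONYMIZED".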

Integrate the tokenization workflow

This section implements the tokenization workflow by first detecting PII entities with the ApplyGuardrail API, then replacing the generic masks with format-preserving tokens from your tokenization service.

Apply guardrails to detect PII entities

Use the ApplyGuardrail API to validate input text from the user and detect PII entities.
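The following sketch calls ApplyGuardrail on the user input and returns the top-level action, the masked text, and the anonymized entities. It assumes runtime is a boto3 "bedrock-runtime" client; the function name detect_pii and the shape of the returned tuple are illustrative choices.

```python
from typing import Any, Dict, List, Tuple


def detect_pii(runtime: Any, guardrail_id: str, guardrail_version: str,
               text: str) -> Tuple[str, str, List[Dict[str, str]]]:
    """Return (action, masked_text, anonymized_entities) for a user input."""
    resp = runtime.apply_guardrail(
        guardrailIdentifier=guardrail_id,
        guardrailVersion=guardrail_version,
        source="INPUT",  # use "OUTPUT" when checking model responses
        content=[{"text": {"text": text}}],
    )
    action = resp["action"]  # "NONE" or "GUARDRAIL_INTERVENED"
    # When the guardrail intervenes, outputs holds the text with {TYPE} masks.
    outputs = resp.get("outputs") or []
    masked = outputs[0]["text"] if outputs else text
    # Keep only entities that were masked (not blocked), in the order reported.
    entities = [
        {"type": e["type"], "match": e["match"]}
        for a in resp.get("assessments", [])
        for e in a.get("sensitiveInformationPolicy", {}).get("piiEntities", [])
        if e.get("action") == "ANONYMIZED"
    ]
    return action, masked, entities
```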

Invoke tokenization service

The response from the ApplyGuardrail API includes the list of PII entities matching the sensitive information policy. Parse these entities and invoke the tokenization service to generate the tokens.

This walkthrough uses the Thales CipherTrust tokenization service.
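The following sketch shows one way to attach tokens to the detected entities. make_rest_tokenizer is hypothetical: the /v1/protect path, the bearer-token header, and the JSON field names (protection_policy_name, data, protected_data) are assumptions loosely modeled on CipherTrust RESTful Data Protection, so check them against your own deployment's API reference. tokenize_entities works with any tokenize callable.

```python
import json
import urllib.request
from typing import Callable, Dict, List


def make_rest_tokenizer(base_url: str, policy: str, api_token: str) -> Callable[[str], str]:
    """Return tokenize(value) backed by a REST protect endpoint (hypothetical contract)."""
    def tokenize(value: str) -> str:
        body = json.dumps({"protection_policy_name": policy, "data": value}).encode()
        req = urllib.request.Request(
            base_url.rstrip("/") + "/v1/protect",  # assumed path
            data=body,
            headers={"Content-Type": "application/json",
                     "Authorization": f"Bearer {api_token}"},  # assumed auth scheme
            method="POST",
        )
        with urllib.request.urlopen(req, timeout=10) as resp:
            return json.loads(resp.read())["protected_data"]  # assumed field name
    return tokenize


def tokenize_entities(entities: List[Dict[str, str]],
                      tokenize: Callable[[str], str]) -> List[Dict[str, str]]:
    """Attach a token to each detected entity, calling the service once per distinct value."""
    cache: Dict[str, str] = {}
    result = []
    for entity in entities:
        value = entity["match"]
        if value not in cache:
            cache[value] = tokenize(value)
        # Copy rather than mutate, so the guardrail response stays untouched.
        result.append({**entity, "token": cache[value]})
    return result
```

Caching per value keeps repeated mentions of the same email address consistent and avoids redundant calls to the tokenization service. You might also choose a different protection policy per entity type, for example one for email addresses and another for names.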

Replace guardrail masks with tokens

Next, replace the generic guardrail masks with the tokens generated by the Thales CipherTrust tokenization service. This allows downstream applications to work with structurally valid data while maintaining security and reversibility.

This process transforms user inputs containing information that matches the sensitive information policy configured in Amazon Bedrock Guardrails into unique and reversible tokenized versions.

Consider the customer query from the solution overview, which contains PII elements such as the customer's email address.

After the masks are replaced, the sanitized user input resembles the tokenized example shown in the workflow: each sensitive value is replaced by its token, and non-sensitive content, such as the account balance, is left intact.
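A minimal sketch of the mask-replacement step, applied to that customer query. It assumes the guardrail masked the email address as {EMAIL} and the merchant domain acme.com as {URL} (whether acme.com is detected depends on your configured entity types), and it uses the bracketed tokens from the workflow example as stand-ins for real format-preserving tokens. replace_masks is a hypothetical helper that also assumes, within each entity type, that the piiEntities list follows the order in which the masks appear.

```python
from typing import Dict, List


def replace_masks(masked_text: str, entities: List[Dict[str, str]]) -> str:
    """Swap each {TYPE} placeholder for the token of the matching entity.

    Assumes the n-th {EMAIL} mask corresponds to the n-th EMAIL entity.
    """
    result = masked_text
    for entity in entities:
        placeholder = "{" + entity["type"] + "}"
        if placeholder not in result:
            raise ValueError(f"no remaining {placeholder} mask for entity")
        # Replace only the first remaining occurrence of this entity type.
        result = result.replace(placeholder, entity["token"], 1)
    return result


masked = ("Hi, this is {EMAIL}. Based on my last 5 transactions on {URL}, and my "
          "current balance of $2,342.18, should I consider their new credit card offer?")
entities = [
    {"type": "EMAIL", "match": "j.smith@example.com", "token": "[[TOKEN_123]]"},
    {"type": "URL", "match": "acme.com", "token": "[[TOKEN_456]]"},
]
sanitized = replace_masks(masked, entities)
# "Hi, this is [[TOKEN_123]]. Based on my last 5 transactions on [[TOKEN_456]], and my
#  current balance of $2,342.18, should I consider their new credit card offer?"
```

Raising an error when a mask is missing is a deliberate choice: silently leaving an entity untokenized or a placeholder unreplaced would be harder to detect downstream.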

Downstream application processing

The sanitized input is ready to be used by generative AI applications, including model invocations on Amazon Bedrock. In response to the tokenized input, an LLM invoked by the financial analysis engine would produce a relevant analysis that preserves the secure token format.
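The following sketch shows the financial analysis engine side: it sends the sanitized prompt through InvokeModel and then re-checks the output with ApplyGuardrail (source "OUTPUT"). It assumes runtime is a boto3 "bedrock-runtime" client and an Anthropic Claude model, whose request body uses the Messages format shown; other model families expect different bodies, and the Converse API offers a model-agnostic alternative. The function name and max_tokens value are illustrative.

```python
import json
from typing import Any, Tuple

ANTHROPIC_VERSION = "bedrock-2023-05-31"  # required by Claude models on Bedrock


def analyze_tokenized(runtime: Any, model_id: str, guardrail_id: str,
                      guardrail_version: str, sanitized_prompt: str) -> Tuple[str, str]:
    """Invoke the model on tokenized input, then check the answer for new PII."""
    body = json.dumps({
        "anthropic_version": ANTHROPIC_VERSION,
        "max_tokens": 512,
        "messages": [{"role": "user",
                      "content": [{"type": "text", "text": sanitized_prompt}]}],
    })
    resp = runtime.invoke_model(modelId=model_id, body=body,
                                contentType="application/json", accept="application/json")
    payload = json.loads(resp["body"].read())
    answer = "".join(part["text"] for part in payload["content"] if part["type"] == "text")
    # Re-check the output before it leaves the financial analysis engine.
    check = runtime.apply_guardrail(
        guardrailIdentifier=guardrail_id,
        guardrailVersion=guardrail_version,
        source="OUTPUT",
        content=[{"text": {"text": answer}}],
    )
    return answer, check["action"]
```

If the returned action is "GUARDRAIL_INTERVENED", the model produced new sensitive values of its own, and those can be tokenized with the same mask-replacement step before the response moves downstream.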

When authorized systems need to recover the original values, the tokens are detokenized. With Thales CipherTrust, this is done using the Detokenize API, which requires the same parameters as the earlier tokenize action. This completes the secure data flow while preserving the ability to recover the original information when needed.
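On the response processing side, a simple strategy is to remember which tokens were issued for the current request and restore only those. In this sketch, detokenize_response and its detokenize callable are hypothetical; detokenize would wrap your tokenization service's detokenize (unprotect) operation. It also assumes the model reproduced tokens verbatim, which is worth validating because an LLM can reformat a token it does not recognize as opaque.

```python
from typing import Callable, Iterable


def detokenize_response(text: str, issued_tokens: Iterable[str],
                        detokenize: Callable[[str], str]) -> str:
    """Restore original values for tokens issued earlier in this request."""
    restored = text
    # Longest first, so a token that is a prefix of another is not replaced early.
    for token in sorted(set(issued_tokens), key=len, reverse=True):
        if token in restored:  # only call the service for tokens actually present
            restored = restored.replace(token, detokenize(token))
    return restored
```

Restricting detokenization to tokens issued for this request avoids scanning the response for anything that merely looks like a token, which matters because format-preserving tokens are indistinguishable from ordinary data by design.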

Clean up

As you follow the approach described in this post, you will create new AWS resources in your account. To avoid incurring additional charges, delete these resources when you no longer need them.

To clean up your resources, complete the following steps:

- Delete the guardrails you created. For instructions, refer to Delete your guardrail.

- If you implemented the tokenization workflow using Lambda, API Gateway, or Step Functions as described in this post, remove the resources you created.

- This post assumes a tokenization solution is already available in your account. If you deployed a third-party tokenization solution (such as Thales CipherTrust) to test this implementation, refer to that solution's documentation for instructions to properly decommission those resources and stop incurring charges.
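For the first cleanup step, the following sketch finds guardrails by name prefix and deletes them, assuming bedrock is a boto3 "bedrock" (control plane) client and that your test guardrails share a naming prefix such as "pii-tokenization-demo" (an illustrative convention). Calling DeleteGuardrail with only the identifier removes the guardrail together with all of its versions.

```python
from typing import Any, Iterable, List


def find_guardrails(bedrock: Any, name_prefix: str) -> List[str]:
    """Collect IDs of guardrails whose name starts with name_prefix, across pages."""
    ids: List[str] = []
    next_token = None
    while True:
        kwargs = {"nextToken": next_token} if next_token else {}
        page = bedrock.list_guardrails(**kwargs)
        ids.extend(g["id"] for g in page.get("guardrails", [])
                   if g["name"].startswith(name_prefix))
        next_token = page.get("nextToken")
        if not next_token:
            return ids


def delete_guardrails(bedrock: Any, guardrail_ids: Iterable[str]) -> int:
    """Delete each guardrail (all versions) and return how many were deleted."""
    deleted = 0
    for gid in guardrail_ids:
        # No guardrailVersion argument: the whole guardrail is deleted.
        bedrock.delete_guardrail(guardrailIdentifier=gid)
        deleted += 1
    return deleted
```

Review the IDs returned by find_guardrails before deleting them, since a broad prefix could match guardrails that other applications still use.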

Conclusion

This post demonstrated how to combine Amazon Bedrock Guardrails with tokenization to improve the handling of sensitive information in generative AI workflows. By integrating these technologies, organizations can protect PII during processing while maintaining data utility and reversibility for authorized downstream applications.

The implementation uses the Thales CipherTrust Data Security Platform for tokenization, but the architecture supports many tokenization solutions. To learn more about a serverless approach to building custom tokenization capabilities, refer to Building a serverless tokenization solution to mask sensitive data.

This solution provides a practical framework for developers to use the full potential of generative AI with appropriate safeguards. By combining the content safety mechanisms of Amazon Bedrock Guardrails with the data reversibility of tokenization, you can implement responsible AI workflows that align with your application requirements and organizational policies while preserving the functionality that downstream systems need.

To learn more about implementing responsible AI practices on AWS, see Transform responsible AI from theory into practice.

About the Authors

Nizar Kheir is a Senior Solutions Architect at AWS with more than 15 years of experience spanning various industry segments. He currently works with public sector customers in France and across EMEA to help them modernize their IT infrastructure and foster innovation by harnessing the power of the AWS Cloud.

Mark Warner is a Principal Solutions Architect for the Thales Cyber Security Products division. He works with companies in various industries, such as finance, healthcare, and insurance, to improve their security architectures. His focus is helping organizations reduce risk, increase compliance, and streamline data security operations to reduce the likelihood of a breach.
