Large language models (LLMs) have rapidly evolved, becoming integral to applications ranging from conversational AI to complex reasoning tasks. However, as models grow in size and capability, effectively evaluating their performance has become increasingly challenging. Traditional benchmarking metrics like perplexity and BLEU scores often fail to capture the nuances of real-world interactions, making human-aligned evaluation frameworks crucial. Understanding how LLMs are assessed can lead to more reliable deployments and fair comparisons across different models.

In this post, we explore automated and human-aligned judging methods based on LLM-as-a-judge. LLM-as-a-judge refers to using a more powerful LLM to evaluate and rank responses generated by other LLMs based on predefined criteria such as correctness, coherence, helpfulness, or reasoning depth. This approach has become increasingly popular because of its scalability, consistency, faster iteration, and cost-efficiency compared to relying solely on human judges. We discuss different LLM-as-a-judge evaluation scenarios, including pairwise comparisons, where two models or responses are judged against each other, and single-response scoring, where individual outputs are rated based on predefined criteria. To provide concrete insights, we use MT-Bench and Arena-Hard, two widely used evaluation frameworks. MT-Bench offers a structured, multi-turn evaluation approach tailored for chatbot-like interactions, whereas Arena-Hard focuses on ranking LLMs through head-to-head response battles in challenging reasoning and instruction-following tasks. These frameworks aim to bridge the gap between automated and human judgment, making sure that LLMs aren’t evaluated solely based on synthetic benchmarks but also on practical use cases.

The repositories for MT-Bench and Arena-Hard were originally developed using OpenAI’s GPT API, primarily employing GPT-4 as the judge. Our team has expanded their functionality by integrating them with the Amazon Bedrock API to enable using Anthropic’s Claude Sonnet on Amazon Bedrock as the judge. In this post, we use both MT-Bench and Arena-Hard to benchmark Amazon Nova models by comparing them to other leading LLMs available through Amazon Bedrock.

Amazon Nova models and Amazon Bedrock

Our study evaluated all four models from the Amazon Nova family, including Amazon Nova Premier, which is the most recent addition to the family. Introduced at AWS re:Invent in December 2024, Amazon Nova models are designed to provide frontier-level intelligence with leading price-performance ratios. These models rank among the fastest and most economical options in their respective intelligence categories and are specifically optimized for powering enterprise generative AI applications in a cost-effective, secure, and reliable manner.

The understanding model family comprises four distinct tiers: Amazon Nova Micro (text-only, designed for ultra-efficient edge deployment), Amazon Nova Lite (multimodal, optimized for versatility), Amazon Nova Pro (multimodal, offering an ideal balance between intelligence and speed for most enterprise applications), and Amazon Nova Premier (multimodal, representing the most advanced Nova model for complex tasks and serving as a teacher for model distillation). Amazon Nova models support a wide range of applications, including coding, reasoning, and structured text generation.

Additionally, through Amazon Bedrock Model Distillation, customers can transfer the intelligence capabilities of Nova Premier to faster, more cost-effective models such as Nova Pro or Nova Lite, tailored to specific domains or use cases. This functionality is accessible through both the Amazon Bedrock console and APIs, including the Converse API and Invoke API.

MT-Bench analysis

MT-Bench is a unified framework that uses LLM-as-a-judge, based on a set of predefined questions. The evaluation questions are a set of challenging multi-turn open-ended questions designed to evaluate chat assistants. Users also have the flexibility to define their own question and answer pairs in a way that suits their needs. The framework presents models with challenging multi-turn questions across eight key domains:

- Writing

- Roleplay

- Reasoning

- Math

- Coding

- Data Extraction

- STEM

- Humanities

The LLMs are evaluated using two types of evaluation:

- Single-answer grading – This mode asks the LLM judge to grade and give a score to a model’s answer directly without pairwise comparison. For each turn, the LLM judge gives a score on a scale of 0–10. Then the average score is computed on all turns.

- Win-rate based grading – This mode uses two metrics:

  - pairwise-baseline – Run a pairwise comparison against a baseline model.

  - pairwise-all – Run a pairwise comparison between all model pairs on all questions.
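The grading modes above can be sketched in a few lines of Python. This is a minimal illustration rather than MT-Bench code: the function names and the model names are hypothetical, and the per-turn scores are made-up inputs.

```python
from itertools import combinations
from statistics import mean


def single_answer_score(turn_scores):
    """Average the judge's per-turn scores for one model on one question."""
    return mean(turn_scores)


def pairwise_baseline_matches(models, baseline):
    """pairwise-baseline: pair every other model with the baseline."""
    return [(m, baseline) for m in models if m != baseline]


def pairwise_all_matches(models):
    """pairwise-all: pair every model with every other model exactly once."""
    return list(combinations(models, 2))


# Hypothetical model names, used only for illustration.
models = ["model-a", "model-b", "model-c"]

print(single_answer_score([8, 9]))                  # 8.5
print(pairwise_baseline_matches(models, "model-a"))
print(pairwise_all_matches(models))
```

With n models, pairwise-baseline needs n − 1 comparisons per question, whereas pairwise-all needs n(n − 1)/2, which is why the baseline variant is usually cheaper to run.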

Evaluation setup

In this study, we employed Anthropic’s Claude 3.7 Sonnet as our LLM judge, given its position as one of the most advanced language models available at the time of our study. We focused exclusively on single-answer grading, wherein the LLM judge directly evaluates and scores model-generated responses without conducting pairwise comparisons.
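As a rough sketch of what single-answer grading with a judge on Amazon Bedrock can look like, the following helper sends one judging prompt through the Converse API and extracts the score. Several details are assumptions rather than our exact setup: the model ID is a placeholder, the temperature and token limit are illustrative, and the parser assumes the judge ends its verdict with a rating in double brackets (for example, `Rating: [[8]]`), which is the convention used by the MT-Bench judge prompts.

```python
import re

# Placeholder (assumption): substitute the judge model ID or inference
# profile that is enabled in your own account and Region.
JUDGE_MODEL_ID = "your-judge-model-id"

# Matches a rating written as [[8]] or [[7.5]].
RATING_PATTERN = re.compile(r"\[\[(\d+\.?\d*)\]\]")


def parse_rating(judgment_text):
    """Return the numeric score found in the judgment, or None if absent."""
    match = RATING_PATTERN.search(judgment_text)
    return float(match.group(1)) if match else None


def judge_single_answer(client, judge_prompt, model_id=JUDGE_MODEL_ID):
    """Send one judging prompt via the Converse API; return (score, text)."""
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": judge_prompt}]}],
        # Illustrative settings (assumption), not values from this study.
        inferenceConfig={"temperature": 0.0, "maxTokens": 1024},
    )
    text = response["output"]["message"]["content"][0]["text"]
    return parse_rating(text), text
```

To use it, create the client with `boto3.client("bedrock-runtime")` and pass it in. Keeping the client as a parameter makes the helper easy to exercise with a stub object before spending judge tokens.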

The eight domains covered in our study can be broadly categorized into two groups: those with definitive ground truth and those without. Specifically, Reasoning, Math, Coding, and Data Extraction fall into the former category because they typically have reference answers against which responses can be objectively evaluated. Conversely, Writing, Roleplay, STEM, and Humanities often lack such clear-cut ground truth. Here we provide an example question from the Writing and Math categories:

To account for this distinction, MT-Bench employs different judging prompts for each category (refer to the following GitHub repo), tailoring the evaluation process to the nature of the task at hand. As shown in the following evaluation prompt, for questions without a reference answer, MT-Bench adopts the single-v1 prompt, only passing the question and model-generated answer. When evaluating questions with a reference answer, it also passes the reference_answer, as shown in the single-math-v1 prompt.
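The prompt selection described above can be sketched as follows. The prompt names `single-v1` and `single-math-v1` come from MT-Bench, but the function, the lowercase category keys, and the field names are illustrative assumptions, and the category grouping follows the split described in this post rather than the repository’s configuration.

```python
# Categories this post treats as having reference answers
# (lowercase keys are an assumption made for this sketch).
REFERENCE_CATEGORIES = {"reasoning", "math", "coding", "extraction"}


def build_judge_inputs(category, question, answer, reference_answer=None):
    """Return (prompt_name, fields) for a single-answer judgment."""
    fields = {"question": question, "answer": answer}
    if category.lower() in REFERENCE_CATEGORIES:
        if reference_answer is None:
            raise ValueError(f"category {category!r} needs a reference answer")
        # Reference-based grading: the judge also sees the reference answer.
        fields["reference_answer"] = reference_answer
        return "single-math-v1", fields
    # Open-ended grading: only the question and the model answer are passed.
    return "single-v1", fields


print(build_judge_inputs("Writing", "Write a haiku.", "An old silent pond"))
print(build_judge_inputs("Math", "What is 2 + 2?", "4", reference_answer="4"))
```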

Overall performance analysis across Amazon Nova models

In our evaluation using Anthropic’s Claude 3.7 Sonnet as the LLM judge, we observed a clear performance hierarchy among Amazon Nova models. The scores ranged from 8.0 to 8.6, with Amazon Nova Premier achieving the highest median score of 8.6, followed closely by Amazon Nova Pro at 8.5. Both Amazon Nova Lite and Nova Micro achieved respectable median scores of 8.0.

What distinguishes these models beyond their median scores is their performance consistency. Nova Premier demonstrated the most stable performance across evaluation categories with a narrow min-max margin of 1.5 (ranging from 7.94 to 9.47). In comparison, Nova Pro showed greater variability with a min-max margin of 2.7 (from 6.44 to 9.13). Similarly, Nova Lite exhibited more consistent performance than Nova Micro, as evidenced by their respective min-max margins. For enterprise deployments where response time is critical, Nova Lite and Nova Micro excel with less than 6-second average latencies for single question-answer generation. This performance characteristic makes them particularly suitable for edge deployment scenarios and applications with strict latency requirements. When factoring in their lower cost, these models present compelling options for many practical use cases where the slight reduction in performance score is an acceptable trade-off.
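The min-max margin quoted above is the gap between a model’s best and worst category score. A minimal sketch, using only the extremes reported in this post (the function name is ours):

```python
def min_max_margin(scores):
    """Gap between the highest and lowest category score."""
    return max(scores) - min(scores)


# Lowest and highest category scores reported above for each model.
print(round(min_max_margin([7.94, 9.47]), 1))  # Nova Premier: 1.5
print(round(min_max_margin([6.44, 9.13]), 1))  # Nova Pro: 2.7
```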

Interestingly, our analysis revealed that Amazon Nova Premier, despite being the largest model, demonstrates superior token efficiency. It generates more concise responses that consume up to 190 fewer tokens for single question-answer generation than comparable models. This observation aligns with research indicating that more sophisticated models are generally more effective at filtering irrelevant information and structuring responses efficiently.

The narrow 0.6-point differential between the highest and lowest performing models suggests that all Amazon Nova variants demonstrate strong capabilities. Although larger models such as Nova Premier offer marginally better performance with greater consistency, smaller models provide compelling alternatives when latency and cost are prioritized. This performance profile gives developers flexibility to select the appropriate model based on their specific application requirements.

The following graph summarizes the overall performance scores and latency for all four models.

The following table shows token consumption and cost analysis for Amazon Nova models.

| Model | Avg. total tokens per query | Price per 1k input tokens | Avg. cost per query (cents) |
| --- | --- | --- | --- |
| Amazon Nova Premier | 2154 | $0.0025 | $5.4 |
| Amazon Nova Pro | 2236 | $0.0008 | $1.8 |
| Amazon Nova Lite | 2343 | $0.00006 | $0.14 |
| Amazon Nova Micro | 2313 | $0.000035 | $0.08 |

Category-specific model comparison

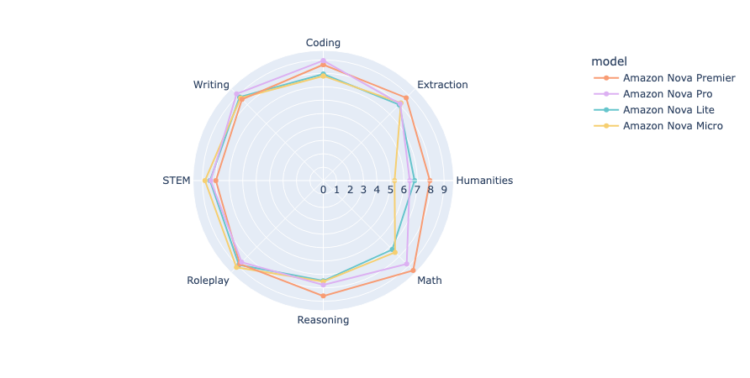

The following radar plot compares the Amazon Nova models across all eight domains.

The radar plot reveals distinct performance patterns across the Amazon Nova model family, with a clear stratification across domains. Nova Premier consistently outperforms its counterparts, showing particular strengths in Math, Reasoning, Humanities, and Extraction, where it achieves scores approaching or exceeding 9. Nova Pro follows closely behind Premier in most categories, maintaining competitive performance especially in Writing and Coding, while showing more pronounced gaps in Humanities, Reasoning, and Math. Both Nova Lite and Micro demonstrate similar performance profiles to each other, with their strongest showing in Roleplay, and their most significant limitations in Humanities and Math, where the differential between Premier and the smaller models is most pronounced (approximately 1.5–3 points).

The consistent performance hierarchy across all domains (Premier > Pro > Lite ≈ Micro) aligns with model size and computational resources, though the magnitude of these differences varies significantly by category. Math and reasoning emerge among the most discriminating domains for model capability assessment and suggest substantial benefit from the additional scale of Amazon Nova Premier. However, workloads focused on creative content (Roleplay, Writing) show the most consistent performance across the Nova family and suggest smaller models as compelling options given their latency and cost benefits. This domain-specific analysis offers practitioners valuable guidance when selecting the appropriate Nova model based on their application’s primary knowledge requirements.

In this study, we adopted Anthropic’s Claude 3.7 Sonnet as the single LLM judge. However, although Anthropic’s Claude 3.7 Sonnet is a popular choice for LLM judging due to its capabilities, studies have shown that it does exhibit certain biases (for example, it prefers longer responses). If time and resources permit, consider adopting a multi-LLM judge evaluation framework to reduce the biases intrinsic to individual LLM judges and improve evaluation reliability.

Arena-Hard-Auto analysis

Arena-Hard-Auto is a benchmark that uses 500 challenging prompts as a dataset to evaluate different LLMs using LLM-as-a-judge. The dataset is curated through an automated pipeline called BenchBuilder, which uses LLMs to automatically cluster, grade, and filter open-ended prompts from large, crowd-sourced datasets such as Chatbot-Arena to enable continuous benchmarking without a human in the loop. The paper reports that the new evaluation metrics provide three times higher separation of model performances compared to MT-Bench and achieve a 98.6% correlation with human preference rankings.

Test framework and methodology

The Arena-Hard-Auto benchmarking framework evaluates different LLMs using a pairwise comparison. Each model’s performance is quantified by comparing it against a strong baseline model, using a structured, rigorous setup to generate reliable and detailed judgments. We use the following components for the evaluation:

- Pairwise comparison setup – Instead of evaluating models in isolation, they’re compared directly with a strong baseline model. This baseline provides a fixed standard, making it straightforward to understand how the models perform relative to an already high-performing model.

- Judge model with fine-grained categories – A powerful model (Anthropic’s Claude 3.7 Sonnet) is used as a judge. This judge doesn’t merely decide which model is better; it also categorizes the comparison into five detailed preference labels. By using this nuanced scale, large performance gaps are penalized more heavily than small ones, which helps separate models more effectively based on performance differences:

  - A >> B (A is significantly better than B)
  - A > B (A is better than B)
  - A ~= B (A and B are similar)
  - B > A (B is better than A)
  - B >> A (B is significantly better than A)

- Chain-of-thought (CoT) prompting – CoT prompting encourages the judge model to explain its reasoning before giving a final judgment. This process can lead to more thoughtful and reliable evaluations by helping the model analyze each response in depth rather than making a snap decision.

- Two-game setup to avoid position bias – To minimize bias that might arise from a model consistently being presented first or second, each model pair is evaluated twice, swapping the order of the models. This way, if there’s a preference for models in certain positions, the setup controls for it. The total number of judgments is doubled (for example, 500 queries x 2 positions = 1,000 judgments).

- Bradley-Terry model for scoring – After the comparisons are made, the Bradley-Terry model is applied to calculate each model’s final score. This model uses pairwise comparison data to estimate the relative strength of each model in a way that reflects not only the number of wins but also the strength of wins. This scoring method is more robust than simply calculating win-rate because it accounts for pairwise outcomes across the models.

- Bootstrapping for statistical stability – By repeatedly sampling the comparison results (bootstrapping), the evaluation becomes statistically stable. This stability is beneficial because it makes sure the model rankings are reliable and less sensitive to random variations in the data.

- Style control – Certain style features like response length and markdown formatting are separated from content quality, using style controls, to provide a clearer assessment of each model’s intrinsic capabilities.
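To make the scoring steps above concrete, the following is a minimal, dependency-free sketch of how judge labels from the two-game setup might be turned into weighted battles, fitted with a Bradley-Terry model, and bootstrapped. It is not the Arena-Hard-Auto implementation. The weight of 3 for a strong preference is an assumption, the classic minorization-maximization (MM) update stands in for whatever fitting procedure the benchmark uses, style control is omitted, and the judgments and model names are hypothetical.

```python
import random
from collections import defaultdict

STRONG_WEIGHT = 3  # assumption: a ">>" verdict counts as three ordinary wins


def judgments_to_battles(judgments):
    """Expand (first_model, second_model, label) rows into weighted wins.

    The label compares the first-presented answer (A) with the second (B),
    so running both presentation orders contributes two rows per prompt.
    """
    battles = []
    for a, b, label in judgments:
        if label == "A>>B":
            battles.append((a, b, STRONG_WEIGHT))
        elif label == "A>B":
            battles.append((a, b, 1))
        elif label == "B>A":
            battles.append((b, a, 1))
        elif label == "B>>A":
            battles.append((b, a, STRONG_WEIGHT))
        else:  # "A~=B": count the tie as half a win for each side
            battles.append((a, b, 0.5))
            battles.append((b, a, 0.5))
    return battles


def fit_bradley_terry(battles, iterations=200):
    """Estimate strengths with the MM update; strengths sum to 1."""
    wins = defaultdict(float)   # weighted wins per model
    games = defaultdict(float)  # weighted comparisons per unordered pair
    models = set()
    for winner, loser, weight in battles:
        wins[winner] += weight
        games[frozenset((winner, loser))] += weight
        models.update((winner, loser))
    strength = {m: 1.0 / len(models) for m in models}
    for _ in range(iterations):
        updated = {}
        for m in models:
            denom = sum(
                n / (strength[m] + strength[other])
                for pair, n in games.items() if m in pair
                for other in pair - {m}
            )
            updated[m] = wins[m] / denom
        total = sum(updated.values())
        strength = {m: s / total for m, s in updated.items()}
    return strength


def bootstrap_win_rate(judgments, model, baseline, rounds=200, seed=0):
    """95% percentile interval for the predicted win rate vs. the baseline."""
    rng = random.Random(seed)
    rates = []
    for _ in range(rounds):
        sample = rng.choices(judgments, k=len(judgments))
        s = fit_bradley_terry(judgments_to_battles(sample))
        if model in s and baseline in s and s[model] + s[baseline] > 0:
            rates.append(s[model] / (s[model] + s[baseline]))
    rates.sort()
    low = rates[int(0.025 * len(rates))]
    high = rates[min(len(rates) - 1, int(0.975 * len(rates)))]
    return low, high


# Hypothetical judgments: each prompt is judged twice with the order swapped.
judgments = [
    ("candidate", "baseline", "A>>B"),  # game 1: candidate shown first
    ("baseline", "candidate", "B>A"),   # game 2: baseline shown first
    ("candidate", "baseline", "A~=B"),
    ("baseline", "candidate", "A>B"),
]
strengths = fit_bradley_terry(judgments_to_battles(judgments))
print(strengths)
print(bootstrap_win_rate(judgments, "candidate", "baseline"))
```

Resampling whole judgments, rather than the expanded battle rows, keeps the two halves of a tie together in every bootstrap sample.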

The original work focuses on pairwise comparison only. For our benchmarking, we also included our own implementation of single-score judgment, taking inspiration from MT-Bench. We again use Anthropic’s Claude 3.7 Sonnet as the judge and use the following prompt for judging without a reference model:

Performance comparison

We evaluated five models (Amazon Nova Premier, Amazon Nova Pro, Amazon Nova Lite, Amazon Nova Micro, and DeepSeek-R1) against a strong reference model. The Arena-Hard benchmark generates confidence intervals by bootstrapping, as explained earlier. The 95% confidence interval shows the uncertainty of the models and is indicative of model performance. From the following plot, we can see that all the Amazon Nova models get a high pairwise Bradley-Terry score. Note that the Bradley-Terry score for the reference model is 5. This is because Bradley-Terry scores are computed from pairwise comparisons in which the reference model is one of the models in the pair. For the reference model compared with itself, the score is therefore 50%, and because the total score is normalized between 0 and 10, the reference model has a score of 5.
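The normalization described above can be illustrated with a short sketch. It assumes the reported score is the Bradley-Terry predicted win probability against the reference model, scaled to a 0–10 range. The function name and the example strengths are ours.

```python
def bt_score(strength, reference_strength):
    """Predicted win probability against the reference, scaled to 0-10."""
    return 10 * strength / (strength + reference_strength)


# The reference compared with itself wins half the time, hence a score of 5.
print(bt_score(1.0, 1.0))  # 5.0

# A hypothetical model three times as strong as the reference scores 7.5.
print(bt_score(3.0, 1.0))  # 7.5
```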

The confidence interval analysis, as shown in the following table, was done to statistically evaluate the Amazon Nova model family alongside DeepSeek-R1, providing deeper insights beyond raw scores. Nova Premier leads the pack (8.36–8.72), with DeepSeek-R1 (7.99–8.30) and Nova Pro (7.72–8.12) following closely. The overlapping confidence intervals among these top performers indicate statistically comparable capabilities. Nova Premier demonstrates strong performance consistency with a tight confidence interval (−0.16, +0.20), while maintaining the highest overall scores. A clear statistical separation exists between these leading models and the purpose-built Nova Lite (6.51–6.98) and Nova Micro (5.68–6.14), which are designed for different use cases. This comprehensive analysis confirms the position of Nova Premier as a top performer, with the full Nova family offering options across the performance spectrum to meet varied customer requirements and resource constraints.

| Model | Pairwise score 25th quartile | Pairwise score 75th quartile | Confidence interval |
| --- | --- | --- | --- |
| Amazon Nova Premier | 8.36 | 8.72 | (−0.16, +0.20) |
| Amazon Nova Pro | 7.72 | 8.12 | (−0.18, +0.23) |
| Amazon Nova Lite | 6.51 | 6.98 | (−0.22, +0.25) |
| Amazon Nova Micro | 5.68 | 6.14 | (−0.21, +0.25) |
| DeepSeek-R1 | 7.99 | 8.30 | (−0.15, +0.16) |

Cost per output token is one of the contributors to the overall cost of an LLM and affects its usage. The cost was computed based on the average output tokens over the 500 responses. Although Amazon Nova Premier leads in performance (85.22), Nova Lite and Nova Micro offer compelling value despite their wider confidence intervals. Nova Micro delivers 69% of the performance of Nova Premier at 89 times lower cost, while Nova Lite achieves 79% of the capabilities of Nova Premier at 52 times lower cost. These dramatic cost efficiencies make the more affordable Nova models attractive options for many applications where absolute top performance isn’t essential, highlighting the effective performance-cost tradeoffs across the Amazon Nova family.

Conclusion

In this post, we explored the use of LLM-as-a-judge through the MT-Bench and Arena-Hard benchmarks to evaluate model performance rigorously. We then compared Amazon Nova models against a leading reasoning model, DeepSeek-R1 hosted on Amazon Bedrock, analyzing their capabilities across various tasks. Our findings indicate that Amazon Nova models deliver strong performance, especially in Extraction, Humanities, STEM, and Roleplay, while maintaining lower operational costs, making them a competitive choice for enterprises looking to optimize efficiency without compromising on quality. These insights highlight the importance of benchmarking methodologies in guiding model selection and deployment decisions in real-world applications.

For more information on Amazon Bedrock and the latest Amazon Nova models, refer to the Amazon Bedrock User Guide and Amazon Nova User Guide. The AWS Generative AI Innovation Center has a group of AWS science and strategy experts with comprehensive expertise spanning the generative AI journey, helping customers prioritize use cases, build a roadmap, and move solutions into production. Check out the Generative AI Innovation Center for our latest work and customer success stories.

About the authors

Mengdie (Flora) Wang is a Data Scientist at AWS Generative AI Innovation Center, where she works with customers to architect and implement scalable Generative AI solutions that address their unique business challenges. She specializes in model customization techniques and agent-based AI systems, helping organizations harness the full potential of generative AI technology. Prior to AWS, Flora earned her Master’s degree in Computer Science from the University of Minnesota, where she developed her expertise in machine learning and artificial intelligence.

Baishali Chaudhury is an Applied Scientist at the Generative AI Innovation Center at AWS, where she focuses on advancing Generative AI solutions for real-world applications. She has a strong background in computer vision, machine learning, and AI for healthcare. Baishali holds a PhD in Computer Science from the University of South Florida and completed a postdoc at Moffitt Cancer Center.

Rahul Ghosh is an Applied Scientist at Amazon’s Generative AI Innovation Center, where he works with AWS customers across different verticals to expedite their use of Generative AI. Rahul holds a Ph.D. in Computer Science from the University of Minnesota.

Jae Oh Woo is a Senior Applied Scientist at the AWS Generative AI Innovation Center, where he specializes in developing custom solutions and model customization for a diverse range of use cases. He has a strong passion for interdisciplinary research that connects theoretical foundations with practical applications in the rapidly evolving field of generative AI. Prior to joining Amazon, Jae Oh was a Simons Postdoctoral Fellow at the University of Texas at Austin. He holds a Ph.D. in Applied Mathematics from Yale University.

Jamal Saboune is an Applied Science Manager with AWS Generative AI Innovation Center. He is currently leading a team focused on helping AWS customers build innovative and scalable Generative AI products across multiple industries. Jamal holds a PhD in AI and Computer Vision from the INRIA Lab in France, and has long R&D experience designing and building AI solutions that add value to users.

Wan Chen is an Applied Science Manager at the Generative AI Innovation Center. As an ML/AI veteran in the tech industry, she has a wide range of expertise in traditional machine learning, recommender systems, deep learning, and Generative AI. She is a strong believer in Superintelligence, and is very passionate about pushing the boundary of AI research and application to enhance human life and drive business growth. She holds a Ph.D. in Applied Mathematics from the University of British Columbia, and worked as a postdoctoral fellow at Oxford University.

Anila Joshi has more than a decade of experience building AI solutions. As an AWSI Geo Leader at AWS Generative AI Innovation Center, Anila pioneers innovative applications of AI that push the boundaries of possibility and accelerate the adoption of AWS services by helping customers ideate, identify, and implement secure generative AI solutions.
