IT operations teams face the challenge of keeping critical systems running smoothly while managing a high volume of incidents filed by end-users. Manual intervention in incident management can be time-consuming and error prone because it relies on repetitive tasks, human judgment, and communication that can break down. Using generative AI for IT operations offers a transformative solution that helps automate incident detection, diagnosis, and remediation, enhancing operational efficiency.

AI for IT operations (AIOps) is the application of AI and machine learning (ML) technologies to automate and enhance IT operations. AIOps helps IT teams manage and monitor large-scale systems by automatically detecting, diagnosing, and resolving incidents in real time. It combines data from various sources, such as logs, metrics, and events, to analyze system behavior, identify anomalies, and recommend or execute automated remediation actions. By reducing manual intervention, AIOps improves operational efficiency, accelerates incident resolution, and minimizes downtime.

This post presents a comprehensive AIOps solution that combines AWS services such as Amazon Bedrock, AWS Lambda, and Amazon CloudWatch to create an AI assistant for effective incident management. The solution also uses Amazon Bedrock Knowledge Bases and Amazon Bedrock Agents. It uses Amazon Bedrock to deploy intelligent agents that can monitor IT systems, analyze logs and metrics, and invoke automated remediation processes.

Amazon Bedrock is a fully managed service that makes foundation models (FMs) from leading AI startups and Amazon available through a single API, so you can choose from a wide range of FMs to find the model that’s best suited for your use case. With the Amazon Bedrock serverless experience, you can get started quickly, privately customize FMs with your own data, and integrate and deploy them into your applications using AWS tools without having to manage infrastructure. Amazon Bedrock Knowledge Bases is a fully managed capability with built-in session context management and source attribution. It helps you implement the entire Retrieval Augmented Generation (RAG) workflow, from ingestion to retrieval and prompt augmentation, without having to build custom integrations to data sources or manage data flows. Amazon Bedrock Agents is a fully managed capability that makes it straightforward for developers to create generative AI-based applications that can complete complex tasks for a wide range of use cases and deliver up-to-date answers based on proprietary knowledge sources.

Generative AI is rapidly transforming businesses and unlocking new possibilities across industries. This post highlights the transformative impact of large language models (LLMs). Because they can encode human expertise and communicate in natural language, generative AI models can help augment human capabilities and allow organizations to harness knowledge at scale.

Challenges in IT operations with runbooks

Runbooks are detailed, step-by-step guides that outline the processes, procedures, and tasks needed to complete specific operations, typically in IT and systems administration. They’re commonly used to document repetitive tasks, troubleshooting steps, and routine maintenance. By standardizing responses to issues and keeping task execution consistent, runbooks help teams improve operational efficiency and streamline workflows. Most organizations rely on runbooks to simplify complex processes, making it straightforward for teams to handle routine operations and respond effectively to system issues. However, managing hundreds of runbooks, monitoring their status, tracking failures, and setting up the right alerting can become difficult, which creates visibility gaps for IT teams. When you have multiple runbooks for various processes, managing the dependencies and run order between them becomes complex and tedious. It’s difficult to handle failure scenarios and make sure everything runs in the right sequence.
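Sequencing runbooks is a dependency-ordering problem: a runbook can start only after every runbook it depends on has finished. The following is a minimal sketch that assumes dependencies are recorded as a mapping from each runbook to its prerequisites. The runbook names are hypothetical, and the solution in this post does not necessarily store runbooks this way. It uses Python’s standard-library `graphlib` to compute one valid run order and to flag circular dependencies before anything runs.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical runbooks mapped to the runbooks that must finish before them.
# These names are illustrative only; they are not part of the solution.
RUNBOOK_DEPENDENCIES = {
    "snapshot-ebs-volumes": set(),
    "drain-load-balancer": set(),
    "restart-ec2-instance": {"snapshot-ebs-volumes", "drain-load-balancer"},
    "verify-health-checks": {"restart-ec2-instance"},
    "notify-stakeholders": {"verify-health-checks"},
}


def run_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return one run order in which every runbook follows its prerequisites.

    Raises ValueError if the dependency graph contains a cycle, because then
    no valid sequence exists.
    """
    try:
        return list(TopologicalSorter(dependencies).static_order())
    except CycleError as exc:
        # graphlib stores the nodes that form the cycle in args[1].
        raise ValueError(f"circular runbook dependency: {exc.args[1]}") from exc


if __name__ == "__main__":
    for step, name in enumerate(run_order(RUNBOOK_DEPENDENCIES), start=1):
        print(step, name)
```

Any topological order is acceptable here. Runbooks with no path between them, such as the two prerequisite runbooks above, may appear in either order, and a scheduler could run them in parallel.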

The following are some of the challenges that most organizations face with manual IT operations:

- Manual analysis of run logs and metrics

- Runbook dependency and sequence mapping

- No automated remediation processes

- No real-time visibility into runbook progress

Solution overview

Amazon Bedrock is the foundation of this solution, enabling intelligent agents to monitor IT systems, analyze data, and automate remediation. The solution includes sample AWS Cloud Development Kit (AWS CDK) code for deployment. It provides an AI assistant, built with Amazon Bedrock Agents, that helps with operations automation and runbook execution.

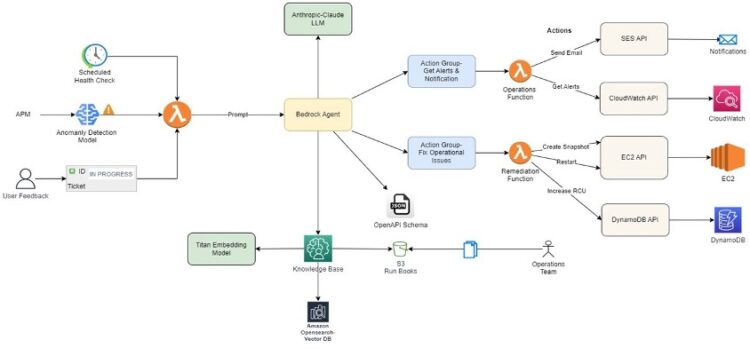

The next structure diagram explains the general move of this answer.

The agent uses Anthropic’s Claude LLM, one of the FMs available on Amazon Bedrock, to analyze incident details and retrieve relevant information from the knowledge base, a curated collection of runbooks and best practices. This gives the agent business-specific context, so its responses are precise and grounded in data from Amazon Bedrock Knowledge Bases. Based on the analysis, the agent dynamically generates a runbook tailored to the specific incident and invokes appropriate remediation actions, such as creating snapshots, restarting instances, scaling resources, or running custom workflows.

Amazon Bedrock Knowledge Bases creates an Amazon OpenSearch Serverless vector search collection to store and index incident data, runbooks, and run logs for efficient search and retrieval. Lambda functions run specific actions, such as sending notifications, making API calls, or starting automated workflows. The solution also integrates with Amazon Simple Email Service (Amazon SES) to send timely notifications to stakeholders.

The solution workflow consists of the following steps:

- Existing runbooks in various formats (such as Word documents, PDFs, or text files) are uploaded to Amazon Simple Storage Service (Amazon S3).

- Amazon Bedrock Knowledge Bases converts these documents into vector embeddings using an embedding model chosen during the knowledge base setup.

- These vector embeddings are stored in OpenSearch Serverless for efficient retrieval, which is also configured during the knowledge base setup.

- Agents and action groups are then set up with the required APIs and prompts for handling different scenarios.

- The OpenAPI specification defines which APIs can be called, along with their input parameters and expected output, so Amazon Bedrock Agents can make informed decisions.

- When a user prompt is received, Amazon Bedrock Agents uses RAG, action groups, and the OpenAPI specification to determine the appropriate API calls. If more details are needed, the agent asks the user for additional information.

- Amazon Bedrock Agents can iterate and call multiple functions as needed until the task is complete.
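To make the role of the OpenAPI specification concrete, here is a minimal sketch of an action group schema expressed as a Python dictionary. The paths, operation IDs, and fields (`/alarms`, `/snapshots`, `listActiveAlarms`, `createVolumeSnapshot`, `volumeId`) are hypothetical and not taken from the solution’s repository. The structure follows OpenAPI 3.0. Each operation carries a description because the agent relies on those descriptions, together with the parameter definitions, to decide which API to call. Two helper functions list the operations and flag any operation that lacks a description.

```python
import json

# Hypothetical action group API for illustration; paths, operation IDs, and fields are assumptions.
ACTION_GROUP_SCHEMA = {
    "openapi": "3.0.0",
    "info": {
        "title": "AIOps remediation API",
        "version": "1.0.0",
        "description": "Actions the agent can call while responding to an incident.",
    },
    "paths": {
        "/alarms": {
            "get": {
                "operationId": "listActiveAlarms",
                # The agent reads descriptions like this one to decide when to call the API.
                "description": "List CloudWatch alarms that are currently in the ALARM state.",
                "responses": {
                    "200": {
                        "description": "Active alarms",
                        "content": {"application/json": {"schema": {
                            "type": "array",
                            "items": {
                                "type": "object",
                                "properties": {
                                    "alarmName": {"type": "string"},
                                    "instanceId": {"type": "string"},
                                },
                            },
                        }}},
                    }
                },
            }
        },
        "/snapshots": {
            "post": {
                "operationId": "createVolumeSnapshot",
                "description": "Create an EBS snapshot before a disruptive remediation step.",
                "requestBody": {
                    "required": True,
                    "content": {"application/json": {"schema": {
                        "type": "object",
                        "properties": {
                            "volumeId": {"type": "string", "description": "ID of the EBS volume to snapshot"},
                        },
                        "required": ["volumeId"],
                    }}},
                },
                "responses": {
                    "200": {
                        "description": "Snapshot started",
                        "content": {"application/json": {"schema": {
                            "type": "object",
                            "properties": {"snapshotId": {"type": "string"}},
                        }}},
                    }
                },
            }
        },
    },
}


def operations(schema: dict) -> list:
    """List (operationId, HTTP method, path) for every operation in the schema."""
    return [
        (op["operationId"], method.upper(), path)
        for path, methods in schema["paths"].items()
        for method, op in methods.items()
    ]


def missing_descriptions(schema: dict) -> list:
    """Return operation IDs that lack a description the agent could reason over."""
    return [
        op["operationId"]
        for methods in schema["paths"].values()
        for op in methods.values()
        if not op.get("description")
    ]


if __name__ == "__main__":
    # Serialize to JSON, the form you would supply as the action group's API schema.
    print(json.dumps(ACTION_GROUP_SCHEMA, indent=2))
```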

Prerequisites

To implement this AIOps solution, you need an active AWS account and basic knowledge of the AWS CDK and the following AWS services:

- Amazon Bedrock

- Amazon CloudWatch

- AWS Lambda

- Amazon OpenSearch Serverless

- Amazon SES

- Amazon S3

You also need to provision the required infrastructure components, such as Amazon Elastic Compute Cloud (Amazon EC2) instances, Amazon Elastic Block Store (Amazon EBS) volumes, and other resources specific to your IT operations environment.

Construct the RAG pipeline with OpenSearch Serverless

This solution uses a RAG pipeline to find relevant content and best practices in operations runbooks and uses that content to generate responses. The RAG approach helps make sure the agent’s responses are grounded in factual documentation, which helps reduce hallucinations. The relevant matches from the knowledge base guide Anthropic’s Claude 3 Haiku model so that it focuses on the relevant information. The RAG process is powered by Amazon Bedrock Knowledge Bases, which stores information that the Amazon Bedrock agent can access and use. For this use case, the knowledge base contains the organization’s existing runbooks, with step-by-step procedures for resolving different operational issues on AWS resources.
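The retrieve-then-augment pattern can be illustrated with a toy example. The following sketch is deliberately simplified and is not how Amazon Bedrock Knowledge Bases works internally. It substitutes word-count vectors and cosine similarity for a real embedding model and the OpenSearch Serverless vector index, and the runbook snippets are hypothetical. It shows the two steps that matter: rank stored chunks by similarity to the incident, then prepend the top matches to the prompt so the model answers from documentation.

```python
import math
import re
from collections import Counter

# Hypothetical runbook chunks standing in for documents in the knowledge base.
RUNBOOK_CHUNKS = [
    "High CPU on EC2: check CloudWatch CPUUtilization, then restart the instance.",
    "Low disk space on EBS: create a snapshot, then extend the volume.",
    "Failed status check: stop and start the instance to move it to new hardware.",
]


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts, a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list, k: int = 2) -> list:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def augment_prompt(query: str, chunks: list) -> str:
    """Prepend the retrieved runbook text so the model answers from documentation."""
    context = "\n".join(f"- {c}" for c in retrieve(query, chunks))
    return f"Use only these runbook excerpts:\n{context}\n\nIncident: {query}"


if __name__ == "__main__":
    print(augment_prompt("EC2 instance has high CPU", RUNBOOK_CHUNKS))
```

In the actual solution, the managed knowledge base handles chunking, embedding, and vector search. Only the overall shape of the retrieve-then-augment flow carries over from this sketch.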

The pipeline has the next key duties:

- Ingest documents in an S3 bucket – The first step ingests existing runbooks into an S3 bucket, from which a searchable index is built with OpenSearch Serverless.

- Monitor infrastructure health using CloudWatch – An Amazon Bedrock action group invokes Lambda functions to get CloudWatch metrics and alerts for EC2 instances in an AWS account. The results of these checks are then passed to Anthropic’s Claude 3 Haiku model as inputs to form a health status overview of the account.
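The health check in the second task could look roughly like the following sketch. It assumes the Lambda function receives a boto3 CloudWatch client, calls `describe_alarms(StateValue="ALARM")`, and follows `NextToken` across pages. It then groups alarms in the `AWS/EC2` namespace by their `InstanceId` dimension and renders a short text overview that could be passed to the model. The function names and the overview wording are illustrative assumptions, not the repository’s code. The client is a parameter so the logic can be exercised with a stub instead of live AWS credentials.

```python
from typing import Any


def ec2_alarm_summary(cloudwatch: Any) -> dict:
    """Group CloudWatch alarms in the ALARM state by EC2 instance ID.

    `cloudwatch` is expected to behave like boto3.client("cloudwatch").
    """
    summary: dict = {}
    kwargs = {"StateValue": "ALARM"}
    while True:
        page = cloudwatch.describe_alarms(**kwargs)
        for alarm in page.get("MetricAlarms", []):
            if alarm.get("Namespace") != "AWS/EC2":
                continue  # only EC2 alarms are relevant to this check
            for dim in alarm.get("Dimensions", []):
                if dim["Name"] == "InstanceId":
                    summary.setdefault(dim["Value"], []).append(alarm["AlarmName"])
        token = page.get("NextToken")
        if not token:
            return summary
        kwargs["NextToken"] = token  # fetch the next page of results


def health_overview(summary: dict) -> str:
    """Render the grouped alarms as plain text to include in the model's prompt."""
    if not summary:
        return "All monitored EC2 instances are healthy."
    lines = [f"{iid}: {', '.join(sorted(names))}" for iid, names in sorted(summary.items())]
    return "Instances with active alarms:\n" + "\n".join(lines)
```

In a deployed function you might call `health_overview(ec2_alarm_summary(boto3.client("cloudwatch")))` and return the string to the agent, which can then summarize it or decide on remediation.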

Configure Amazon Bedrock Brokers

Amazon Bedrock Agents augments the user request with the appropriate information from Amazon Bedrock Knowledge Bases to generate an accurate response.

By configuring the appropriate action groups and populating the knowledge base with relevant data, you can tailor the Amazon Bedrock agent to assist with specific tasks or domains and provide accurate, helpful responses within its intended scope.

Amazon Bedrock Agents lets Anthropic’s Claude 3 Haiku use tools. Tool use helps mitigate LLM limitations such as knowledge cutoffs and hallucinations, and lets the model complete tasks through API calls and other external interactions.

The agent’s workflow is to check for resource alerts using an API and, if any are found, fetch the relevant runbook and run its steps (for example, create snapshots, restart instances, and send emails).

The overall system enables automated detection and remediation of operational issues on AWS while enforcing adherence to documented procedures through the runbook approach.
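The remediation side of this workflow maps naturally onto an action group Lambda function. The following is a minimal sketch, not the code in the GitHub repo. It assumes three hypothetical API paths (`/snapshots`, `/instances/restart`, and `/notifications`) declared in the action group’s OpenAPI schema. It follows the event and response shape that Amazon Bedrock Agents uses for Lambda functions in action groups defined with an OpenAPI schema: `apiPath`, `httpMethod`, `parameters`, and `requestBody` in the event, and `messageVersion` and `response` in the result. The EC2 and SES clients are injected so the routing can be tested without AWS credentials. In a deployed function you would pass boto3 clients.

```python
import json
from typing import Any, Callable


def _params(event: dict) -> dict:
    """Merge path/query parameters and JSON request-body properties into one dict."""
    values = {p["name"]: p["value"] for p in event.get("parameters", [])}
    body = event.get("requestBody", {}).get("content", {}).get("application/json", {})
    values.update({p["name"]: p["value"] for p in body.get("properties", [])})
    return values


def _require(params: dict, *names: str) -> list:
    """Return the named parameters, or raise ValueError listing any that are missing."""
    missing = [n for n in names if n not in params]
    if missing:
        raise ValueError("missing parameter(s): " + ", ".join(missing))
    return [params[n] for n in names]


def make_handler(ec2: Any, ses: Any, sender: str, recipients: list) -> Callable:
    """Build an action group handler around boto3-like EC2 and SES clients.

    For example:
        lambda_handler = make_handler(boto3.client("ec2"), boto3.client("ses"), SENDER, RECIPIENTS)
    """

    def create_snapshot(p: dict) -> dict:
        (volume_id,) = _require(p, "volumeId")
        snap = ec2.create_snapshot(VolumeId=volume_id, Description="AIOps pre-remediation snapshot")
        return {"snapshotId": snap["SnapshotId"]}

    def restart_instance(p: dict) -> dict:
        (instance_id,) = _require(p, "instanceId")
        ec2.reboot_instances(InstanceIds=[instance_id])
        return {"rebooted": instance_id}

    def notify(p: dict) -> dict:
        subject, message = _require(p, "subject", "message")
        ses.send_email(
            Source=sender,
            Destination={"ToAddresses": recipients},
            Message={"Subject": {"Data": subject}, "Body": {"Text": {"Data": message}}},
        )
        return {"notified": len(recipients)}

    # Hypothetical routes; they must match the paths in the action group's OpenAPI schema.
    routes = {
        ("POST", "/snapshots"): create_snapshot,
        ("POST", "/instances/restart"): restart_instance,
        ("POST", "/notifications"): notify,
    }

    def handler(event: dict, context: Any = None) -> dict:
        action = routes.get((event["httpMethod"].upper(), event["apiPath"]))
        if action is None:
            status, body = 404, {"error": "unknown operation " + event["apiPath"]}
        else:
            try:
                status, body = 200, action(_params(event))
            except ValueError as exc:
                # Returned to the agent, which may then ask the user for the missing value.
                status, body = 400, {"error": str(exc)}
        return {
            "messageVersion": "1.0",
            "response": {
                "actionGroup": event["actionGroup"],
                "apiPath": event["apiPath"],
                "httpMethod": event["httpMethod"],
                "httpStatusCode": status,
                "responseBody": {"application/json": {"body": json.dumps(body)}},
            },
        }

    return handler
```

A routing table keeps each remediation step small and auditable. Adding a runbook action means adding one function, one route, and the matching path in the schema.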

To set up this solution using Amazon Bedrock Agents, refer to the GitHub repo, which provisions the following resources. Verify the AWS Identity and Access Management (IAM) permissions and follow IAM best practices when deploying the code. We recommend applying least-privilege permissions to IAM policies.

- S3 bucket

- Amazon Bedrock agent

- Action group

- Amazon Bedrock agent IAM role

- Amazon Bedrock agent action group

- Lambda function

- Lambda service policy permission

- Lambda IAM role
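As an illustration of least privilege for the last three resources, the following sketch builds two policy documents. The first is an execution-role policy that grants only the actions a remediation function like the one described above would need. The second is a resource-based policy statement that lets a single Amazon Bedrock agent invoke the function. The action list, ARN patterns, and parameter names are assumptions for illustration, so check them against the Service Authorization Reference for your own actions. The execution role also needs CloudWatch Logs permissions (for example, through the `AWSLambdaBasicExecutionRole` managed policy), which this sketch omits. In a CDK app you would typically express the same intent through constructs and grants rather than raw JSON.

```python
import json


def lambda_execution_policy(region: str, account: str, sender_identity: str) -> dict:
    """Execution-role policy scoped to the resource types each action needs."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadAlarms",
                "Effect": "Allow",
                "Action": ["cloudwatch:DescribeAlarms"],
                "Resource": "*",  # read-only; left unscoped in this sketch
            },
            {
                "Sid": "RebootInstances",
                "Effect": "Allow",
                "Action": ["ec2:RebootInstances"],
                "Resource": f"arn:aws:ec2:{region}:{account}:instance/*",
            },
            {
                "Sid": "SnapshotVolumes",
                "Effect": "Allow",
                "Action": ["ec2:CreateSnapshot"],
                "Resource": [
                    f"arn:aws:ec2:{region}:{account}:volume/*",
                    f"arn:aws:ec2:{region}::snapshot/*",  # snapshot ARNs omit the account ID
                ],
            },
            {
                "Sid": "SendNotifications",
                "Effect": "Allow",
                "Action": ["ses:SendEmail"],
                "Resource": f"arn:aws:ses:{region}:{account}:identity/{sender_identity}",
            },
        ],
    }


def bedrock_invoke_permission(region: str, account: str, agent_id: str, function_arn: str) -> dict:
    """Resource-based policy statement letting one Bedrock agent invoke the function."""
    return {
        "Sid": "AllowBedrockAgentInvoke",
        "Effect": "Allow",
        "Principal": {"Service": "bedrock.amazonaws.com"},
        "Action": "lambda:InvokeFunction",
        "Resource": function_arn,
        "Condition": {
            "StringEquals": {"AWS:SourceAccount": account},
            "ArnLike": {"AWS:SourceArn": f"arn:aws:bedrock:{region}:{account}:agent/{agent_id}"},
        },
    }


if __name__ == "__main__":
    print(json.dumps(lambda_execution_policy("us-east-1", "123456789012", "ops@example.com"), indent=2))
```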

Benefits

With this solution, organizations can automate their operations and save significant time. The automation is also less prone to errors than manual execution. It offers the following additional benefits:

- Reduced manual intervention – Automating incident detection, diagnosis, and remediation minimizes human involvement, reducing the errors, delays, and inconsistencies that often arise from manual processes.

- Increased operational efficiency – By using generative AI, the solution speeds up incident resolution and optimizes operational workflows. Automating tasks such as runbook execution, resource monitoring, and remediation frees IT teams to focus on more strategic initiatives.

- Scalability – As organizations grow, managing IT operations manually becomes increasingly complex. Operations automated with generative AI can scale with the business, handling more incidents, runbooks, and infrastructure without a proportional increase in personnel.

Clean up

To avoid incurring unnecessary costs, we recommend deleting the resources created for this solution when you no longer need them. You can do this by deleting the AWS CloudFormation stacks deployed as part of the solution, or by deleting the resources manually on the AWS Management Console or with the AWS Command Line Interface (AWS CLI).
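If you prefer to script the cleanup, the following minimal sketch deletes a list of stacks in order and waits for each deletion to finish before starting the next. It assumes a boto3 CloudFormation client, passed in as `cloudformation`, and uses that client’s `delete_stack` call and `stack_delete_complete` waiter. The stack names are placeholders for whatever stacks the CDK app deployed. Order matters because CloudFormation won’t delete a stack whose exports are still imported by another stack. A non-empty S3 bucket can also block deletion unless the stack was set up to empty it. Running `cdk destroy` from the project directory is an alternative.

```python
from typing import Any, Iterable


def delete_stacks(cloudformation: Any, stack_names: Iterable[str]) -> list:
    """Delete each stack in order, blocking until CloudFormation reports it deleted.

    `cloudformation` is expected to behave like boto3.client("cloudformation").
    List dependent stacks before the stacks they import from.
    """
    deleted = []
    waiter = cloudformation.get_waiter("stack_delete_complete")
    for name in stack_names:
        cloudformation.delete_stack(StackName=name)
        waiter.wait(StackName=name)  # raises if the deletion fails or times out
        deleted.append(name)
    return deleted
```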

Conclusion

The AIOps pipeline presented in this post helps IT operations teams streamline incident management, reduce manual intervention, and improve operational efficiency. By combining AWS services, organizations can automate incident detection, diagnosis, and remediation, resolving incidents faster and minimizing downtime.

By integrating Amazon Bedrock, Anthropic’s Claude on Amazon Bedrock, Amazon Bedrock Agents, Amazon Bedrock Knowledge Bases, and other supporting services, this solution provides real-time visibility into incidents, automated runbook generation, and dynamic remediation actions. It also sends timely notifications and supports collaboration between AI agents and human operators, fostering a more proactive and efficient approach to IT operations.

Generative AI is rapidly transforming how businesses use cloud technologies. This solution using Amazon Bedrock demonstrates the potential of generative AI models to enhance human capabilities. By giving developers expert guidance grounded in AWS best practices, this AI assistant enables DevOps teams to review and optimize cloud architecture across AWS accounts.

Try out the solution yourself, and leave any feedback or questions in the comments.

About the Authors

Upendra V is a Sr. Solutions Architect at Amazon Web Services, specializing in Generative AI and cloud solutions. He helps enterprise customers design and deploy production-ready Generative AI workloads, implement Large Language Models (LLMs) and Agentic AI systems, and optimize cloud deployments. With expertise in cloud adoption and machine learning, he enables organizations to build and scale AI-driven applications efficiently.

Deepak Dixit is a Solutions Architect at Amazon Web Services, specializing in Generative AI and cloud solutions. He helps enterprises architect scalable AI/ML workloads, implement Large Language Models (LLMs), and optimize cloud-native applications.
