Asure, a company of over 600 employees, is a leading provider of cloud-based workforce management solutions designed to help small and midsized businesses streamline payroll and human resources (HR) operations and ensure compliance. Their offerings include a comprehensive suite of human capital management (HCM) solutions for payroll and tax, HR compliance services, time tracking, 401(k) plans, and more.

Asure anticipated that generative AI could help contact center leaders understand their team’s support performance, identify gaps and pain points in their products, and recognize the most effective strategies for training customer support representatives using call transcripts. The Asure team was manually analyzing thousands of call transcripts to uncover themes and trends, a process that lacked scalability. The overarching goal of this engagement was to improve upon this manual approach. Failing to adopt a more automated approach could have led to decreased customer satisfaction scores and, consequently, a loss in future revenue. Therefore, it was valuable to provide Asure with a post-call analytics pipeline capable of providing beneficial insights, thereby enhancing the overall customer support experience and driving business growth.

Asure recognized the potential of generative AI to further enhance the user experience and better understand the needs of the customer, and wanted to find a partner to help realize it.

Pat Goepel, chairman and CEO of Asure, shares,

“In collaboration with the AWS Generative AI Innovation Center, we’re utilizing Amazon Bedrock, Amazon Comprehend, and Amazon Q in QuickSight to understand trends in our own customer interactions, prioritize items for product development, and detect issues sooner so that we can be even more proactive in our support for our customers. Our partnership with AWS and our commitment to be early adopters of innovative technologies like Amazon Bedrock underscore our dedication to making advanced HCM technology accessible for businesses of any size.”

“We’re thrilled to partner with AWS on this groundbreaking generative AI project. The robust AWS infrastructure and advanced AI capabilities provide the perfect foundation for us to innovate and push the boundaries of what’s possible. This collaboration will enable us to deliver cutting-edge solutions that not only meet but exceed our customers’ expectations. Together, we’re poised to transform the landscape of AI-driven technology and create unprecedented value for our clients.”

—Yasmine Rodriguez, CTO of Asure.

“As we embarked on our journey at Asure to integrate generative AI into our solutions, finding the right partner was crucial. Being able to partner with the Gen AI Innovation Center at AWS brings not only technical expertise with AI but the experience of developing solutions at scale. This collaboration confirms that our AI solutions are not just innovative but also resilient. Together, we believe that we can harness the power of AI to drive efficiency, enhance customer experiences, and stay ahead in a rapidly evolving market.”

—John Canada, VP of Engineering at Asure.

In this post, we explore why Asure used the Amazon Web Services (AWS) post-call analytics (PCA) pipeline, which generated insights across call centers at scale with the advanced capabilities of generative AI-powered services such as Amazon Bedrock and Amazon Q in QuickSight. Asure chose this approach because it provided in-depth consumer analytics, categorized call transcripts around common themes, and empowered contact center leaders to use natural language to answer queries. This ultimately allowed Asure to provide its customers with improvements in product and customer experiences.

Solution Overview

At a high level, the solution consists of first converting audio into transcripts using Amazon Transcribe, and then generating and evaluating summary fields for each transcript using Amazon Bedrock. In addition, Q&A can be done at a single call level using Amazon Bedrock or for many calls using Amazon Q in QuickSight. In the rest of this section, we describe these components and the services used in greater detail.

We extended the existing PCA solution with Amazon Bedrock and Amazon Q in QuickSight, both described later in this section.

Customer service and call center operations are highly dynamic, with evolving customer expectations, market trends, and technological advancements reshaping the industry at a rapid pace. Staying ahead in this competitive landscape demands agile, scalable, and intelligent solutions that can adapt to changing demands.

In this context, Amazon Bedrock emerges as an exceptional choice for developing a generative AI-powered solution to analyze customer service call transcripts. This fully managed service provides access to cutting-edge foundation models (FMs) from leading AI providers, enabling the seamless integration of state-of-the-art language models tailored for text analysis tasks. Amazon Bedrock offers fine-tuning capabilities that allow you to customize these pre-trained models using proprietary call transcript data, facilitating high accuracy and relevance without the need for extensive machine learning (ML) expertise. Moreover, Amazon Bedrock offers integration with other AWS services like Amazon SageMaker, which streamlines the deployment process, and its scalable architecture makes sure the solution can adapt to increasing call volumes effortlessly.

With robust security measures, data privacy safeguards, and a cost-effective pay-as-you-go model, Amazon Bedrock offers a secure, flexible, and cost-efficient service to harness generative AI’s potential in enhancing customer service analytics, ultimately leading to improved customer experiences and operational efficiencies.

Additionally, by integrating a knowledge base containing organizational data, policies, and domain-specific information, the generative AI models can deliver more contextual, accurate, and relevant insights from the call transcripts. This knowledge base allows the models to understand and respond based on the company’s unique terminology, products, and processes, enabling deeper analysis and more actionable intelligence from customer interactions.

In this use case, Amazon Bedrock is used both to generate summary fields for sample call transcripts and to evaluate these summary fields against a ground truth dataset. Its value comes from its simple integration into existing pipelines and various evaluation frameworks. Amazon Bedrock also allows you to choose various models for different use cases, making it an obvious choice for the solution due to its flexibility. Using Amazon Bedrock allows for iteration of the solution using knowledge bases for simple storage and access of call transcripts as well as guardrails for building responsible AI applications.

Amazon Bedrock

Amazon Bedrock is a fully managed service that makes FMs available through an API, so you can choose from a wide range of FMs to find the model that’s best suited for your use case. With the Amazon Bedrock serverless experience, you can get started quickly, privately customize FMs with your own data, and quickly integrate and deploy them into your applications using AWS tools without having to manage the infrastructure.

Amazon Q in QuickSight

Amazon Q in QuickSight is a generative AI assistant that accelerates decision-making and enhances business productivity with generative business intelligence (BI) capabilities.

The original PCA solution includes the following services:

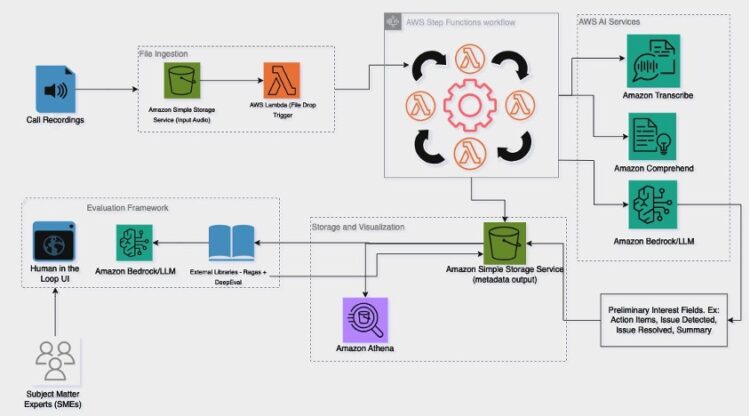

The solution consisted of the following components:

- Call metadata generation – After the file ingestion step, when transcripts are generated for each call using Amazon Transcribe, Anthropic’s Claude Haiku FM in Amazon Bedrock is used to generate call-related metadata. This includes a summary, the category, the root cause, and other high-level fields generated from a call transcript. This is orchestrated using AWS Step Functions.

- Individual call Q&A – For questions requiring a specific call, such as, “How did the customer react in call ID X,” Anthropic’s Claude Haiku is used to power a Q&A assistant located in a CloudFront application. This is powered by the web app portion of the architecture diagram (provided in the next section).

- Aggregate call Q&A – To answer questions requiring multiple calls, such as “What are the most common issues detected,” Amazon Q in QuickSight is used to enhance the Agent Assist interface. This step is shown by business analysts interacting with QuickSight in the storage and visualization step through natural language.

To learn more about the architectural components of the PCA solution, including file ingestion, insight extraction, storage and visualization, and web application components, refer to Post call analytics for your contact center with Amazon language AI services.

Architecture

The following diagram illustrates the solution architecture. The evaluation framework, call metadata generation, and Amazon Q in QuickSight were new components added to the original PCA solution.

Ragas and a human-in-the-loop UI (as described in the customer blog post with Tealium) were used to evaluate the metadata generation and individual call Q&A portions. Ragas is an open source evaluation framework that helps evaluate FM-generated text.

The high-level takeaways from this work are the following:

- Anthropic’s Claude 3 Haiku successfully took in a call transcript and determined its summary, root cause, whether the issue was resolved, whether it was a callback, and next steps by the customer and agent (generative AI-powered fields). When using Anthropic’s Claude 3 Haiku as opposed to Anthropic’s Claude Instant, there was a reduction in latency. With chain-of-thought reasoning, there was an increase in overall quality (which includes how factual, understandable, and relevant responses are on a 1–5 scale, described in more detail later in this post) as measured by subject matter experts (SMEs). With the use of Amazon Bedrock, various models can be chosen based on different use cases, illustrating its flexibility in this application.

- Amazon Q in QuickSight proved to be a powerful analytical tool in understanding and generating relevant insights from data through intuitive chart and table visualizations. It can perform simple calculations whenever necessary while also facilitating deep dives into issues and exploring data from multiple perspectives, demonstrating great value in insight generation.

- The human-in-the-loop UI plus Ragas metrics proved effective in evaluating outputs of FMs used throughout the pipeline. In particular, answer correctness, answer relevance, faithfulness, and summarization metrics (alignment and coverage score) were used to evaluate the call metadata generation and individual call Q&A components using Amazon Bedrock. The range of FMs available in Amazon Bedrock allowed many types of models to be tested for generating evaluation metrics, including Anthropic’s Claude 3.5 Sonnet and Anthropic’s Claude 3 Haiku.

Call metadata generation

The call metadata generation pipeline consisted of converting an audio file to a call transcript in JSON format using Amazon Transcribe and then generating key information for each transcript using Amazon Bedrock and Amazon Comprehend. The following diagram shows a subset of the preceding architecture diagram that demonstrates this.

The original PCA post linked previously shows how Amazon Transcribe and Amazon Comprehend are used in the metadata generation pipeline.

The call transcript produced by the Amazon Transcribe step of the Step Functions workflow followed the format in the following code example:

Metadata was generated using Amazon Bedrock. Specifically, it extracted the summary, root cause, topic, and next steps, and answered key questions such as whether the call was a callback and whether the issue was ultimately resolved.
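As a rough illustration of this step, the following Python sketch builds a request for the Amazon Bedrock Converse API and reads back the generated text for one metadata field. The prompt wording, the `FIELD_PROMPTS` dictionary, and the helper names are assumptions made for illustration; they are not the prompts or code used in the engagement. The model ID is the public identifier for Anthropic’s Claude 3 Haiku in Amazon Bedrock.

```python
# Hypothetical per-field instructions; the real prompts are not shown in this post.
FIELD_PROMPTS = {
    "summary": "Summarize the call in two or three sentences.",
    "root_cause": "State the root cause of the customer's issue.",
    "issue_resolved": "Was the issue resolved by the end of the call? Answer Yes or No.",
    "callback": "Is this call a callback about an earlier issue? Answer Yes or No.",
}

MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"


def build_request(transcript_text, field):
    """Return keyword arguments for the bedrock-runtime `converse` call."""
    prompt = (
        f"<transcript>\n{transcript_text}\n</transcript>\n\n"
        f"{FIELD_PROMPTS[field]}"
    )
    return {
        "modelId": MODEL_ID,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        # Low temperature keeps the extracted fields as repeatable as possible.
        "inferenceConfig": {"maxTokens": 512, "temperature": 0.0},
    }


def generate_field(client, transcript_text, field):
    """Call the model through `client` and return the generated text for one field."""
    response = client.converse(**build_request(transcript_text, field))
    return response["output"]["message"]["content"][0]["text"].strip()
```

In practice, `client` would be created with `boto3.client("bedrock-runtime")` in a Region where the model is enabled, and `generate_field` would be called once per field for each transcript.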

Prompts were stored in Amazon DynamoDB, allowing Asure to quickly modify prompts or add new generative AI-powered fields based on future enhancements. The following screenshot shows how prompts can be modified through DynamoDB.

Individual call Q&A

The chat assistant powered by Anthropic’s Claude Haiku was used to answer natural language queries on a single transcript. This assistant, the call metadata values generated in the previous section, and sentiments generated by Amazon Comprehend were displayed in an application hosted by CloudFront.

The user of the final chat assistant can modify the prompt in DynamoDB. The following screenshot shows the general prompt for an individual call Q&A.

The UI hosted by CloudFront allows an agent or supervisor to analyze a specific call to extract additional details. The following screenshot shows the insights Asure gleaned for a sample customer service call.

The following screenshot shows the chat assistant, which exists in the same webpage.

Evaluation Framework

This section outlines components of the evaluation framework used. It ultimately allows Asure to highlight important metrics for their use case and provides visibility into the generative AI application’s strengths and weaknesses. This was done using automated quantitative metrics provided by Ragas, DeepEval, and traditional ML metrics as well as human-in-the-loop evaluation done by SMEs.

Quantitative Metrics

The results of the generated call metadata and individual call Q&A were evaluated using quantitative metrics provided by Ragas (answer correctness, answer relevance, and faithfulness) and DeepEval (alignment and coverage), both powered by FMs from Amazon Bedrock. Because Amazon Bedrock integrates simply with external libraries, it could be configured to work with these existing libraries. In addition, traditional ML metrics were used for “Yes/No” answers. The following are the metrics used for different components of the solution:

- Call metadata generation – This included the following:

  - Summary – Alignment and coverage (find a description of these metrics in the DeepEval repository) and answer correctness

  - Issue resolved, callback – F1-score and accuracy

  - Topic, next steps, root cause – Answer correctness, answer relevance, and faithfulness

- Individual call Q&A – Answer correctness, answer relevance, and faithfulness

- Human in the loop – Both individual call Q&A and call metadata generation used human-in-the-loop metrics

For a description of answer correctness, answer relevance, and faithfulness, refer to the customer blog post with Tealium.

The use of Amazon Bedrock in the evaluation framework allowed different models to be chosen for different use cases. For example, Anthropic’s Claude 3.5 Sonnet was used to generate DeepEval metrics, whereas Anthropic’s Claude 3 Haiku (with its low latency) was ideal for Ragas.
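For the “Yes/No” fields (issue resolved and callback), accuracy and F1-score can be computed directly from the model answers and the ground truth labels. The following is a minimal sketch in plain Python; the function names and the rule that any answer starting with “y” counts as “Yes” are assumptions for illustration, not the evaluation code used in the engagement.

```python
def is_yes(answer):
    """Treat any answer beginning with 'y' (case-insensitive) as Yes."""
    return answer.strip().lower().startswith("y")


def accuracy_and_f1(predicted, expected):
    """Return (accuracy, F1) with 'Yes' as the positive class."""
    if len(predicted) != len(expected):
        raise ValueError("predicted and expected must have the same length")
    pairs = [(is_yes(p), is_yes(e)) for p, e in zip(predicted, expected)]
    true_pos = sum(1 for p, e in pairs if p and e)
    false_pos = sum(1 for p, e in pairs if p and not e)
    false_neg = sum(1 for p, e in pairs if not p and e)
    correct = sum(1 for p, e in pairs if p == e)

    accuracy = correct / len(pairs) if pairs else 0.0
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if precision + recall
        else 0.0
    )
    return accuracy, f1
```

F1-score is reported alongside accuracy because a field such as callback can be heavily imbalanced, in which case accuracy alone can look high even when the model rarely identifies the positive class.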

Human in the Loop

The human-in-the-loop UI is described in the Human-in-the-Loop section of the customer blog post with Tealium. To use it to evaluate this solution, some changes had to be made:

- The user can choose to analyze one of the generated metadata fields for a call (such as a summary) or a specific Q&A pair.

- The user can bring in two model outputs for comparison. This can include outputs from the same FM using different prompts, outputs from different FMs using the same prompt, and outputs from different FMs using different prompts.

- Additional checks for fluency, coherence, creativity, toxicity, relevance, completeness, and overall quality were added, where the user scores the model output on each metric on a scale of 0–4.
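One way to compare two model outputs from these ratings is to average the SME scores per model and metric. The sketch below assumes each rating is recorded as a `(model_label, metric, score)` tuple; that record shape, the metric identifiers, and the function name are hypothetical and are not taken from the human-in-the-loop UI itself.

```python
from collections import defaultdict
from statistics import mean

# Metric names follow the checks listed above; the identifiers are illustrative.
METRICS = (
    "fluency", "coherence", "creativity", "toxicity",
    "relevance", "completeness", "overall_quality",
)


def average_ratings(ratings):
    """Average 0-4 scores for each (model_label, metric) pair."""
    buckets = defaultdict(list)
    for model_label, metric, score in ratings:
        if metric not in METRICS:
            raise ValueError(f"unknown metric: {metric}")
        if not 0 <= score <= 4:
            raise ValueError(f"score out of range 0-4: {score}")
        buckets[(model_label, metric)].append(score)
    return {key: mean(scores) for key, scores in buckets.items()}
```

The resulting dictionary can then be read side by side for the two outputs, for example to check whether a prompt change improved relevance without lowering completeness.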

The following screenshots show the UI.

The human-in-the-loop system establishes a feedback mechanism between domain expertise and Amazon Bedrock outputs. This in turn will lead to improved generative AI applications and ultimately to high customer trust in such systems.

To demo the human-in-the-loop UI, follow the instructions in the GitHub repo.

Natural Language Q&A using Amazon Q in QuickSight

QuickSight, integrated with Amazon Q, enabled Asure to use natural language queries for comprehensive customer analytics. By interpreting queries on sentiments, call volumes, issue resolutions, and agent performance, the service delivered data-driven visualizations. This empowered Asure to quickly identify pain points, optimize operations, and deliver exceptional customer experiences through a streamlined, scalable analytics solution tailored for call center operations.

Integrate Amazon Q in QuickSight with the PCA solution

The Amazon Q in QuickSight integration was done by following three high-level steps:

- Create a dataset on QuickSight.

- Create a topic on QuickSight from the dataset.

- Query using natural language.

Create a dataset on QuickSight

We used Amazon Athena as the data source, which queries data from Amazon S3. QuickSight can be configured with multiple data sources (for more information, refer to Supported data sources). For this use case, we used the data generated from the PCA pipeline as the data source for further analytics and natural language queries in Amazon Q in QuickSight. The PCA pipeline stores data in Amazon S3, which can be queried in Athena, an interactive query service that allows you to analyze data directly in Amazon S3 using standard SQL.

- On the QuickSight console, choose Datasets in the navigation pane.

- Choose Create new.

- Choose Athena as the data source and enter the particular catalog, database, and table that Amazon Q in QuickSight will reference.

Confirm the dataset was created successfully and proceed to the next step.
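Before or after creating the dataset, it can be useful to confirm that the PCA output is queryable in Athena. The following sketch builds the arguments for the Athena `start_query_execution` API; the database name, table name, and S3 output location in the usage note are placeholders, and the helper name is hypothetical.

```python
def build_athena_request(database, table, output_location, limit=10):
    """Return keyword arguments for the Athena `start_query_execution` call."""
    # Allow only simple identifiers so the table name cannot inject SQL.
    if not table.replace("_", "").isalnum():
        raise ValueError(f"unexpected table name: {table}")
    query = f'SELECT * FROM "{table}" LIMIT {int(limit)}'
    return {
        "QueryString": query,
        "QueryExecutionContext": {"Database": database},
        # Athena writes query results to this S3 location.
        "ResultConfiguration": {"OutputLocation": output_location},
    }
```

With `boto3`, the request could be submitted as `boto3.client("athena").start_query_execution(**build_athena_request("pca_db", "parsed_results", "s3://example-bucket/athena-results/"))`, where all three argument values are placeholders to replace with the catalog objects created by your own PCA deployment.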

Create a topic on Amazon QuickSight from the dataset created

Users can use topics in QuickSight, powered by Amazon Q integration, to perform natural language queries on their data. This feature allows for intuitive data exploration and analysis by posing questions in plain language, alleviating the need for complex SQL queries or specialized technical skills. Before setting up a topic, make sure that the users have Pro level access. To set up a topic, follow these steps:

- On the QuickSight console, choose Topics in the navigation pane.

- Choose New topic.

- Enter a name for the topic and choose the data source created.

- Choose the created topic and then choose Open Q&A to start querying in natural language.

Query using natural language

We performed intuitive natural language queries to gain actionable insights into customer analytics. This capability allows users to analyze sentiments, call volumes, issue resolutions, and agent performance through conversational queries, enabling data-driven decision-making, operational optimization, and enhanced customer experiences within a scalable analytics solution tailored for call centers. Examples of simple natural language queries, “Which customer had positive sentiments and a complex query?” and “What are the most common issues and which agents dealt with them?”, are shown in the following screenshots.

These capabilities are helpful when business leaders want to dive deep on a particular issue, empowering them to make informed decisions on various issues.

Success metrics

The primary success metric gained from this solution is improved employee productivity, primarily by quickly understanding customer interactions from calls to uncover themes and trends while also identifying gaps and pain points in their products. Before the engagement, analysts were taking 14 days to manually go through each call transcript to retrieve insights. After the engagement, Asure observed how Amazon Bedrock and Amazon Q in QuickSight could reduce this time to minutes, even seconds, to obtain both insights queried directly from all stored call transcripts and visualizations that can be used for report generation.

In the pipeline, Anthropic’s Claude 3 Haiku was used to obtain initial call metadata fields (such as summary, root cause, next steps, and sentiments) that were stored in Athena. This allowed each call transcript to be queried using natural language from Amazon Q in QuickSight, letting business analysts answer high-level questions about issues, themes, and customer and agent insights in seconds.

Pat Goepel, chairman and CEO of Asure, shares,

“In collaboration with the AWS Generative AI Innovation Center, we have improved upon a post-call analytics solution to help us identify and prioritize features that will be the most impactful for our customers. We’re utilizing Amazon Bedrock, Amazon Comprehend, and Amazon Q in QuickSight to understand trends in our own customer interactions, prioritize items for product development, and detect issues sooner so that we can be even more proactive in our support for our customers. Our partnership with AWS and our commitment to be early adopters of innovative technologies like Amazon Bedrock underscore our dedication to making advanced HCM technology accessible for businesses of any size.”

Takeaways

We had the next takeaways:

- Enabling chain-of-thought reasoning and specific assistant prompts for each prompt in the call metadata generation component, and calling it using Anthropic’s Claude 3 Haiku, improved metadata generation for each transcript. The flexibility of Amazon Bedrock in offering various FMs allowed experimentation with many types of models with minimal changes, so that a model can be chosen for each use case.

- Ragas metrics, particularly faithfulness, answer correctness, and answer relevance, were used to evaluate call metadata generation and individual Q&A. However, summarization required different metrics, alignment and coverage, which didn’t require ground truth summaries. Therefore, DeepEval was used to calculate summarization metrics. Overall, the ease of integrating Amazon Bedrock allowed it to power the calculation of quantitative metrics with minimal changes to the evaluation libraries. This also allowed the use of different types of models for different evaluation libraries.

- The human-in-the-loop approach can be used by SMEs to further evaluate Amazon Bedrock outputs. There is an opportunity to improve upon an Amazon Bedrock FM based on this feedback, but this was not worked on in this engagement.

- The post-call analytics workflow, with the use of Amazon Bedrock, can be iterated upon in the future using features such as Amazon Bedrock Knowledge Bases to perform Q&A over a specific number of call transcripts as well as Amazon Bedrock Guardrails to detect harmful and hallucinated responses while also creating more responsible AI applications.

- Amazon Q in QuickSight was able to answer natural language questions on customer analytics, root cause, and agent analytics, but some questions required reframing to get meaningful responses.

- Data fields within Amazon Q in QuickSight needed to be defined properly and synonyms needed to be added to make Amazon Q more robust with natural language queries.

Security best practices

We recommend the following security guidelines for building secure applications on AWS:

Conclusion

In this post, we showcased how Asure used the PCA solution powered by Amazon Bedrock and Amazon Q in QuickSight to generate consumer and agent insights at both individual and aggregate levels. Specific insights included those centered around a common theme or issue. With these services, Asure was able to improve employee productivity by generating these insights in minutes instead of weeks.

This is one of the many ways builders can deliver great solutions using Amazon Bedrock and Amazon Q in QuickSight. To learn more, refer to Amazon Bedrock and Amazon Q in QuickSight.

About the Authors

Suren Gunturu is a Data Scientist working in the Generative AI Innovation Center, where he works with various AWS customers to solve high-value business problems. He specializes in building ML pipelines using large language models, primarily through Amazon Bedrock and other AWS Cloud services.

Avinash Yadav is a Deep Learning Architect at the Generative AI Innovation Center, where he designs and implements cutting-edge GenAI solutions for diverse enterprise needs. He specializes in building ML pipelines using large language models, with expertise in cloud architecture, Infrastructure as Code (IaC), and automation. His focus lies in creating scalable, end-to-end applications that leverage the power of deep learning and cloud technologies.

John Canada is the VP of Engineering at Asure Software, where he leverages his experience in building innovative, reliable, and performant solutions and his passion for AI/ML to lead a talented team dedicated to using machine learning to enhance the capabilities of Asure’s software and meet the evolving needs of businesses.

Yasmine Rodriguez Wakim is the Chief Technology Officer at Asure Software. She is an innovative Software Architect & Product Leader with deep expertise in payroll, tax, and workforce software development. As a results-driven tech strategist, she builds and leads technology vision to deliver efficient, reliable, and customer-centric software that optimizes business operations through automation.

Vidya Sagar Ravipati is a Science Manager at the Generative AI Innovation Center, where he leverages his vast experience in large-scale distributed systems and his passion for machine learning to help AWS customers across different industry verticals accelerate their AI and cloud adoption.
