Generative AI applications seem straightforward: invoke a foundation model (FM) with the right context to generate a response. In reality, it’s a much more complex system involving workflows that invoke FMs, tools, and APIs and that use domain-specific data to ground responses with patterns such as Retrieval Augmented Generation (RAG) and workflows involving agents. Safety controls need to be applied to input and output to prevent harmful content, and foundational elements such as monitoring, automation, and continuous integration and delivery (CI/CD) need to be established to operationalize these systems in production.

Many organizations have siloed generative AI initiatives, with development managed independently by various departments and lines of business (LOBs). This often results in fragmented efforts, redundant processes, and the emergence of inconsistent governance frameworks and policies. Inefficiencies in resource allocation and utilization drive up costs.

To address these challenges, organizations are increasingly adopting a unified approach to building applications, in which foundational building blocks are offered as services to LOBs and teams developing generative AI applications. This approach facilitates centralized governance and operations. Some organizations use the term “generative AI platform” to describe this approach. It can be adapted to the different operating models of an organization: centralized, decentralized, and federated. A generative AI foundation offers core services, reusable components, and blueprints, while applying standardized security and governance policies.

This approach gives organizations many key benefits, such as streamlined development, the ability to scale generative AI development and operations across the organization, mitigated risk because central management simplifies implementation of governance frameworks, optimized costs through reuse, and accelerated innovation because teams can quickly build and ship use cases.

In this post, we give an overview of a well-established generative AI foundation, dive into its components, and present an end-to-end perspective. We look at different operating models and explore how such a foundation can operate within those boundaries. Lastly, we present a maturity model that helps enterprises assess their evolution path.

Overview

Laying out a strong generative AI foundation involves offering a comprehensive set of components to support the end-to-end generative AI application lifecycle. The following diagram illustrates these components.

In this section, we discuss the key components in more detail.

Hub

At the core of the foundation are multiple hubs, which include:

- Model hub – Provides access to enterprise FMs. As the system matures, a broad range of off-the-shelf or customized models can be supported. Most organizations conduct thorough security and legal reviews before models are approved for use. The model hub acts as a central place to access approved models.

- Tool/Agent hub – Enables discovery of and connectivity to tool catalogs and agents. This could be enabled through protocols such as Model Context Protocol (MCP) and Agent2Agent (A2A).
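To make the discovery idea concrete, here is a minimal in-memory sketch of a tool/agent catalog. `ToolEntry`, `ToolCatalog`, and the example endpoints are hypothetical names chosen for illustration; a real hub would persist entries, enforce access control, and expose them through a protocol such as MCP or A2A rather than through a Python class.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List, Optional


@dataclass(frozen=True)
class ToolEntry:
    """One entry in a hypothetical tool/agent hub catalog."""

    name: str
    description: str
    kind: str      # "tool" or "agent"
    endpoint: str  # placeholder address, e.g. of an MCP server or A2A agent
    tags: FrozenSet[str] = field(default_factory=frozenset)


class ToolCatalog:
    """In-memory catalog where teams register and discover tools and agents."""

    def __init__(self) -> None:
        self._entries: Dict[str, ToolEntry] = {}

    def register(self, entry: ToolEntry) -> None:
        # Names are unique so other teams can reference an entry unambiguously.
        if entry.name in self._entries:
            raise ValueError(f"{entry.name!r} is already registered")
        self._entries[entry.name] = entry

    def discover(self, query: str, kind: Optional[str] = None) -> List[ToolEntry]:
        """Return entries whose name, description, or tags contain every query term."""
        terms = query.lower().split()
        matches = []
        for entry in self._entries.values():
            if kind is not None and entry.kind != kind:
                continue
            searchable = " ".join([entry.name, entry.description, *entry.tags]).lower()
            if all(term in searchable for term in terms):
                matches.append(entry)
        return sorted(matches, key=lambda e: e.name)
```

Keyword matching keeps the sketch short; a production catalog might instead rank entries by semantic similarity between the query and each description.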

Gateway

A model gateway offers secure access to the model hub through standardized APIs. The gateway is built as a multi-tenant component to provide isolation across the teams and business units that are onboarded. Key features of a gateway include:

- Access and authorization – The gateway facilitates authentication, authorization, and secure communication between users and the system. It helps verify that only authorized users can use specific models, and can also enforce fine-grained access control.

- Unified API – The gateway provides unified APIs to models and to features such as guardrails and evaluation. It can also support automated translation of prompts into the different prompt templates required by different models.

- Rate limiting and throttling – The gateway handles API requests efficiently by controlling the number of requests allowed in a given time period, preventing overload and managing traffic spikes.

- Cost attribution – The gateway monitors usage across the organization and allocates costs to teams. Because these models can be resource-intensive, tracking model usage helps allocate costs properly, optimize resources, and avoid overspending.

- Scaling and load balancing – The gateway can balance load across different servers, model instances, or AWS Regions so that applications remain responsive.

- Guardrails – The gateway applies content filters to requests and responses through guardrails and helps adhere to organizational security and compliance standards.

- Caching – The cache layer stores prompts and responses, which can help improve performance and reduce costs.
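The following minimal sketch shows how several of these gateway features can compose in a single request path: a per-tenant model allowlist for authorization, a token-bucket rate limiter for throttling, and a response cache. The class and method names are hypothetical, `invoke_model` is a placeholder for the actual provider call, and the in-memory dictionaries stand in for the shared stores (such as a distributed cache) that a production gateway would use.

```python
import hashlib
import time
from typing import Callable, Dict, Set, Tuple


class TokenBucket:
    """Token bucket: refills `rate` tokens per second, holding at most `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill for the time elapsed since the last check, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class ModelGateway:
    """Hypothetical multi-tenant gateway: authorize, serve from cache, rate-limit, invoke."""

    def __init__(self, invoke_model: Callable[[str, str], str]) -> None:
        self.invoke_model = invoke_model  # placeholder for the real provider call
        self.allowed_models: Dict[str, Set[str]] = {}
        self.buckets: Dict[str, TokenBucket] = {}
        self.cache: Dict[str, str] = {}

    def onboard(self, tenant: str, models: Set[str], bucket: TokenBucket) -> None:
        self.allowed_models[tenant] = set(models)
        self.buckets[tenant] = bucket

    def complete(self, tenant: str, model: str, prompt: str) -> Tuple[str, bool]:
        """Return (response, served_from_cache)."""
        if model not in self.allowed_models.get(tenant, set()):
            raise PermissionError(f"tenant {tenant!r} may not use model {model!r}")
        # The tenant is part of the key so cached responses never cross tenants.
        key = hashlib.sha256(f"{tenant}\x00{model}\x00{prompt}".encode()).hexdigest()
        if key in self.cache:
            return self.cache[key], True
        if not self.buckets[tenant].allow():
            raise RuntimeError(f"rate limit exceeded for tenant {tenant!r}")
        response = self.invoke_model(model, prompt)
        self.cache[key] = response
        return response, False
```

Including the tenant in the cache key keeps one tenant’s cached responses from being served to another, and checking the cache before the rate limiter means repeated prompts don’t consume a tenant’s quota. Both are design choices in this sketch rather than requirements.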

The AWS Solutions Library offers solution guidance to set up a multi-provider generative AI gateway. The solution uses an open source LiteLLM proxy wrapped in a container that can be deployed on Amazon Elastic Container Service (Amazon ECS) or Amazon Elastic Kubernetes Service (Amazon EKS). This gives organizations a building block for developing an enterprise-wide model hub and gateway. The generative AI foundation can start with the gateway and offer more features as it matures.

Gateway patterns for the tool/agent hub are still evolving. The model gateway can serve as a universal gateway to all the hubs, or individual hubs can have their own purpose-built gateways.

Orchestration

Orchestration encapsulates generative AI workflows, which are usually multi-step processes. The steps might involve invoking models, integrating data sources, using tools, or calling APIs. Workflows can be deterministic, in which case they are created as predefined templates. An example of a deterministic flow is the RAG pattern. In this pattern, a search engine is used to retrieve relevant sources and add them to the prompt context before the model generates the response to the user prompt. This aims to reduce hallucination and encourage responses grounded in verified content.
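A minimal sketch of the deterministic RAG flow follows, assuming a toy bag-of-words similarity in place of a real embedding model and vector database. The function names are hypothetical; the point is the shape of the pipeline: retrieve the top-k passages, then place them in the prompt ahead of the user question before the FM is invoked (the model call itself is omitted).

```python
import math
import re
from collections import Counter
from typing import List


def _vectorize(text: str) -> Counter:
    """Bag-of-words term counts; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return the k documents most similar to the query."""
    q = _vectorize(query)
    ranked = sorted(documents, key=lambda d: _cosine(q, _vectorize(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Augment the user question with retrieved context before calling the FM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )
```

Because the steps are fixed, this flow is easy to template and reuse across teams; only the document source, retriever, and prompt wording change per use case.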

Alternatively, complex workflows can be designed using agents, where a large language model (LLM) decides the flow by planning and reasoning. During reasoning, the agent can decide when to continue thinking, call external tools (such as APIs or search engines), or submit its final response. Multi-agent orchestration is used to tackle even more complex problem domains by defining multiple specialized subagents, which can interact with each other to decompose and complete a task requiring different knowledge or skills. A generative AI foundation can provide primitives such as models, vector databases, and guardrails as a service, along with higher-level services for defining AI workflows, agents and multi-agent systems, and tools, plus a catalog to encourage reuse.
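The agent pattern differs from a fixed RAG template in that the model chooses the next step. The sketch below shows that control loop under simplifying assumptions: `decide` stands in for an LLM call that returns either a tool invocation or a final answer, tools are plain Python functions, and `max_steps` caps the loop. `scripted_decide` and `multiply` are hypothetical stand-ins used only to exercise the loop.

```python
from typing import Callable, Dict, List, Tuple

# A decision is ("tool", tool_name, tool_input) or ("final", answer, "").
Decision = Tuple[str, str, str]


def run_agent(
    task: str,
    decide: Callable[[str, List[str]], Decision],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> Tuple[str, List[str]]:
    """Let the model choose tools until it answers or the step budget runs out.

    Returns the final answer and the list of observations (the agent's trace).
    """
    observations: List[str] = []
    for _ in range(max_steps):
        kind, value, tool_input = decide(task, observations)
        if kind == "final":
            return value, observations
        if kind != "tool" or value not in tools:
            # Feed the error back so the model can correct itself on the next step.
            observations.append(f"error: unknown action {kind!r}/{value!r}")
            continue
        result = tools[value](tool_input)
        observations.append(f"{value}({tool_input}) -> {result}")
    return "stopped: step budget exhausted", observations


# Hypothetical stand-ins used only to exercise the loop.
def multiply(expression: str) -> str:
    left, right = expression.split("*")
    return str(int(left) * int(right))


def scripted_decide(task: str, observations: List[str]) -> Decision:
    # A real implementation would prompt an LLM with the task and observations.
    if not observations:
        return ("tool", "multiply", "17*3")
    return ("final", "The answer is " + observations[-1].split("-> ")[-1], "")
```

The step budget matters in practice: without it, a model that never chooses to finish would loop (and incur inference costs) indefinitely.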

Model customization

A key foundational capability that can be offered is model customization, including the following techniques:

- Continued pre-training – Domain-adaptive pre-training, in which existing models are further trained on domain-specific data. This approach can offer a balance between customization depth and resource requirements, requiring fewer resources than training from scratch.

- Fine-tuning – Model adaptation techniques such as instruction fine-tuning and supervised fine-tuning to learn task-specific capabilities. Although less intensive than pre-training, this approach still requires significant computational resources.

- Alignment – Training models with user-generated data using techniques such as Reinforcement Learning from Human Feedback (RLHF) and Direct Preference Optimization (DPO).
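For reference, the standard DPO objective for a single preference pair is the negative log-sigmoid of a scaled margin: beta times the difference between how much the policy raises the log-probability of the chosen response relative to a frozen reference model and how much it raises that of the rejected response. The sketch below computes that per-pair loss from precomputed sequence log-probabilities. The function name and the default `beta=0.1` are illustrative assumptions, and a training framework would compute this over batches with automatic differentiation.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """Per-pair DPO loss: -log(sigmoid(beta * margin)).

    Each argument is the summed log-probability of a full response given the
    prompt, under either the policy being trained or the frozen reference model.
    """
    chosen_shift = policy_chosen_logp - ref_chosen_logp
    rejected_shift = policy_rejected_logp - ref_rejected_logp
    z = beta * (chosen_shift - rejected_shift)
    # -log(sigmoid(z)) == softplus(-z), written in a numerically stable form.
    return max(-z, 0.0) + math.log1p(math.exp(-abs(z)))
```

When the policy and reference agree exactly, the margin is zero and the loss is log 2; raising the chosen response’s log-probability relative to the reference lowers the loss, and raising the rejected one’s increases it.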

For these customization techniques, the foundation should provide scalable infrastructure for data storage and training, a mechanism to orchestrate tuning and training pipelines, a model registry to centrally register and govern models, and infrastructure to host the models.
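A minimal in-memory sketch of the model registry idea follows, assuming a simple approve-before-deploy policy. `ModelRegistry`, `ModelVersion`, and `Status` are hypothetical names, and `artifact_uri` is a placeholder for wherever trained weights are stored; a managed model registry service would add persistence, richer lineage metadata, and access control.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class ModelVersion:
    name: str
    version: int
    artifact_uri: str  # placeholder for where the trained weights live
    base_model: str    # lineage: which FM this version was customized from
    status: Status = Status.PENDING


class ModelRegistry:
    """Hypothetical registry: sequential versions, approval required for deployment."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[ModelVersion]] = {}

    def register(self, name: str, artifact_uri: str, base_model: str) -> ModelVersion:
        versions = self._versions.setdefault(name, [])
        entry = ModelVersion(name, len(versions) + 1, artifact_uri, base_model)
        versions.append(entry)
        return entry

    def set_status(self, name: str, version: int, status: Status) -> None:
        versions = self._versions.get(name, [])
        if not 1 <= version <= len(versions):
            raise KeyError(f"{name!r} has no version {version}")
        versions[version - 1].status = status

    def latest_approved(self, name: str) -> ModelVersion:
        """The version a deployment pipeline would be allowed to pick up."""
        for entry in reversed(self._versions.get(name, [])):
            if entry.status is Status.APPROVED:
                return entry
        raise LookupError(f"no approved version of {name!r}")
```

Deployment pipelines would resolve models only through `latest_approved`, so a newly trained version stays out of production until a reviewer approves it.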

Data management

Organizations typically have multiple data sources, and data from these sources is mostly aggregated in data lakes and data warehouses. Common datasets can be made available to different teams as a foundational offering. The following are additional foundational components that can be offered:

- Integration with enterprise data sources and external sources to bring in the data needed for patterns such as RAG or model customization

- Fully managed or pre-built templates and blueprints for RAG that include a choice of vector databases, chunking of data, conversion of data into embeddings, and indexing of those embeddings in vector databases

- Data processing pipelines for model customization, including tools to create labeled and synthetic datasets

- Tools to catalog data, making it quick to search, discover, access, and govern data
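Chunking is the first step of the RAG ingestion blueprint described above. The sketch below splits text into fixed-size word windows with overlap, so that content near a chunk boundary keeps some of its surrounding context in both neighboring chunks. Counting words is a simplifying assumption; many pipelines chunk by model tokens, sentences, or document structure instead, and each chunk would then be embedded and indexed in a vector database (not shown). The function name is hypothetical.

```python
from typing import List


def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> List[str]:
    """Split text into chunks of `chunk_size` words, repeating `overlap` words between neighbors."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far each window advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks
```

Larger overlaps preserve more context across boundaries at the cost of more chunks to embed and store, which is one of the tuning knobs a RAG blueprint can expose to teams.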

GenAIOps

Generative AI operations (GenAIOps) encompasses the overarching practices of managing and automating the operations of generative AI systems. The following diagram illustrates its components.

Fundamentally, GenAIOps falls into two broad categories:

- Operationalizing applications that consume FMs – Although operationalizing RAG or agentic applications shares core principles with DevOps, it requires additional, AI-specific considerations and practices. RAGOps addresses operational practices for managing the lifecycle of RAG systems, which combine generative models with information retrieval mechanisms. Considerations here include the choice of vector database, optimization of indexing pipelines, and retrieval strategies. AgentOps helps facilitate efficient operation of autonomous agentic systems. The key concerns here are tool management, agent coordination using state machines, and short-term and long-term memory management.

- Operationalizing FM training and tuning – ModelOps is a category under GenAIOps that focuses on governance and lifecycle management of models, including model selection, continuous tuning and training of models, experiment tracking, a central model registry, prompt management and evaluation, model deployment, and model governance. FMOps, which is operationalizing FMs, and LLMOps, which is specifically operationalizing LLMs, fall under this category.

In addition, operationalization involves implementing CI/CD processes to automate deployments, integrating evaluation and prompt management systems, and collecting logs, traces, and metrics to optimize operations.
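One common way to integrate evaluation into CI/CD is a quality gate that blocks promotion of a new prompt, model, or workflow version when its offline evaluation scores fall short. The sketch below assumes scores have already been computed per metric (values in [0, 1]) and applies two hypothetical rules: an absolute minimum per metric, and a maximum allowed regression against the current production baseline. The metric names and thresholds are illustrative only.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class GateResult:
    passed: bool
    reasons: List[str]


def evaluation_gate(
    candidate: Dict[str, float],
    baseline: Dict[str, float],
    minimums: Dict[str, float],
    max_regression: float = 0.02,
) -> GateResult:
    """Decide whether a candidate (prompt, model, or workflow version) may be promoted.

    Fails if any metric is missing, is below its absolute minimum, or drops more
    than `max_regression` below the current production baseline.
    """
    reasons: List[str] = []
    for metric, floor in minimums.items():
        score = candidate.get(metric)
        if score is None:
            reasons.append(f"{metric}: missing from candidate results")
            continue
        if score < floor:
            reasons.append(f"{metric}: {score:.3f} below minimum {floor:.3f}")
        if metric in baseline and score < baseline[metric] - max_regression:
            reasons.append(f"{metric}: regressed from {baseline[metric]:.3f} to {score:.3f}")
    return GateResult(passed=not reasons, reasons=reasons)
```

A pipeline step could call this after the evaluation job, print `reasons`, and exit with a non-zero status when `passed` is false so that the deployment stage never runs.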

Observability

Observability for generative AI needs to account for the probabilistic nature of these systems: models might hallucinate, responses can be subjective, and troubleshooting is harder. As with other software systems, logs, metrics, and traces should be collected and centrally aggregated. There should be tools to generate insights from this data that can be used to further optimize the applications. In addition to component-level monitoring, deeper observability should be implemented as generative AI applications mature, such as instrumenting traces, collecting real-world feedback, and looping it back to improve models and systems. Evaluation should be offered as a core foundational component; this includes automated and human evaluation and LLM-as-a-judge pipelines, along with storage of ground truth data.
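The sketch below illustrates evaluation against stored ground truth: each record’s response is scored with a simple token-overlap F1, and an optional `judge` callable is the hypothetical hook where an LLM-as-a-judge call would be made. The record layout and function names are assumptions for illustration; human evaluation and richer metrics would sit alongside this in practice.

```python
import re
from collections import Counter
from statistics import mean
from typing import Callable, Dict, List, Optional


def token_f1(prediction: str, reference: str) -> float:
    """Token-overlap F1 between a response and a ground-truth answer."""
    pred = re.findall(r"\w+", prediction.lower())
    ref = re.findall(r"\w+", reference.lower())
    common = sum((Counter(pred) & Counter(ref)).values())
    if common == 0:
        return 0.0
    precision, recall = common / len(pred), common / len(ref)
    return 2 * precision * recall / (precision + recall)


def evaluate(
    records: List[Dict[str, str]],
    judge: Optional[Callable[[str, str, str], float]] = None,
) -> Dict[str, float]:
    """Average scores over records holding "question", "response", and "ground_truth".

    `judge` is a hypothetical hook that would call an LLM-as-a-judge and return
    a score in [0, 1]; it is skipped when not provided.
    """
    if not records:
        raise ValueError("no records to evaluate")
    f1_scores, judge_scores = [], []
    for r in records:
        f1_scores.append(token_f1(r["response"], r["ground_truth"]))
        if judge is not None:
            judge_scores.append(judge(r["question"], r["response"], r["ground_truth"]))
    summary = {"token_f1": mean(f1_scores)}
    if judge_scores:
        summary["judge"] = mean(judge_scores)
    return summary
```

Lexical metrics such as token F1 are cheap but miss paraphrases, which is one reason LLM-as-a-judge scoring is often run alongside them.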

Responsible AI

To balance the benefits of generative AI with the challenges that arise from it, it’s important to incorporate tools, techniques, and mechanisms that align with a broad set of responsible AI dimensions. At AWS, these responsible AI dimensions include privacy and security, safety, transparency, explainability, veracity and robustness, fairness, controllability, and governance. Each organization will have its own set of responsible AI dimensions, which can be incorporated centrally as best practices through the generative AI foundation.

Security and privacy

Communication should be over TLS, and private network access should be supported. User access should be secure, and the system should support fine-grained access control. Rate limiting and throttling should be in place to help prevent abuse. For data security, data should be encrypted at rest and in transit, and tenant data isolation patterns should be implemented. Embeddings stored in vector stores should be encrypted. For model security, custom model weights should be encrypted and isolated for different tenants. Guardrails should be applied to input and output to filter topics and harmful content. Telemetry should be collected for actions that users take on the central system. Data quality is owned by the consuming applications or data producers, and the consuming applications should integrate observability into their applications.
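The following sketch shows the principle of applying guardrails to both input and output: the prompt is checked before inference, and the model’s response is checked before it reaches the user. Keyword matching is a deliberately simple assumption standing in for the classifier-based content filters that managed guardrail services provide; `TopicGuardrail`, `guarded_call`, and `BLOCKED_MESSAGE` are hypothetical names.

```python
import re
from typing import Callable, Iterable

BLOCKED_MESSAGE = "Sorry, I can't help with that request."


class TopicGuardrail:
    """Denied-topic filter based on whole-word, case-insensitive term matching."""

    def __init__(self, denied_terms: Iterable[str]) -> None:
        terms = [t for t in denied_terms if t.strip()]
        if not terms:
            raise ValueError("at least one denied term is required")
        pattern = "|".join(re.escape(t) for t in terms)
        self._regex = re.compile(rf"\b(?:{pattern})\b", re.IGNORECASE)

    def violates(self, text: str) -> bool:
        return self._regex.search(text) is not None


def guarded_call(prompt: str, model: Callable[[str], str], guardrail: TopicGuardrail) -> str:
    # Input check: refuse before spending anything on inference.
    if guardrail.violates(prompt):
        return BLOCKED_MESSAGE
    response = model(prompt)
    # Output check: a benign prompt can still yield a response on a denied topic.
    if guardrail.violates(response):
        return BLOCKED_MESSAGE
    return response
```

Running the same policy on both sides is what lets a central foundation enforce it uniformly, regardless of which model a team calls.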

Governance

The two key areas of governance are model governance and data governance:

- Model governance – Monitor models for performance, robustness, and fairness. Model versions should be managed centrally in a model registry. Appropriate permissions and policies should be in place for model deployments, and access controls to models should be established.

- Data governance – Apply fine-grained access control to data managed by the system, including training data, vector stores, evaluation data, prompt templates, and workflow and agent definitions. Establish data privacy policies for the data managed by the system, such as managing sensitive data (for example, redacting personally identifiable information (PII)), protecting prompts and data, and not using them to improve models.
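As an illustration of PII redaction before prompts or data are logged or stored, the sketch below replaces matches with typed placeholders and reports what it found. The three regular expressions are illustrative assumptions that cover only a few common formats; production systems typically rely on a dedicated PII detection service or model.

```python
import re
from typing import Dict, Tuple

# Illustrative patterns only, applied in order: SSNs are replaced before the
# looser phone pattern can see them.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"(?:\+?\d{1,2}[\s.-]?)?\(?\d{3}\)?[\s.-]?\d{3}[\s.-]?\d{4}\b"),
}


def redact_pii(text: str) -> Tuple[str, Dict[str, int]]:
    """Replace matches with [LABEL] placeholders and count what was redacted."""
    counts: Dict[str, int] = {}
    for label, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            counts[label] = n
    return text, counts
```

Returning the counts alongside the redacted text lets the system emit governance telemetry (how often PII appears, and of which kind) without ever storing the raw values.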

Tools landscape

A variety of AWS services, AWS Partner solutions, and third-party tools and frameworks are available to architect a comprehensive generative AI foundation. The following figure might not cover the entire range of tools, but we have created this landscape based on our experience with these tools.

Operational boundaries

One of the challenges to solve is who owns the foundational components and how they operate within the organization’s operating model. Let’s look at three common operating models:

- Centralized – Operations are centralized in one team. Some organizations refer to this team as the platform team or platform engineering team. In this model, foundational components are managed by a central team and offered to LOBs and enterprise teams.

- Decentralized – LOBs and teams build their respective systems and operate independently. The central team takes on the role of a Center of Excellence (COE) that defines best practices, standards, and governance frameworks. Logs and metrics can be aggregated in a central place.

- Federated – A more flexible model that is a hybrid of the two. A central team manages the foundation, which offers teams building blocks for model access, evaluation, guardrails, and central log and metrics aggregation. LOBs and teams use the foundational components but also build and manage their own components as necessary.

Multi-tenant architecture

Regardless of the operating model, it’s important to define how tenants are isolated and managed within the system. The multi-tenant pattern depends on a number of factors:

- Tenant and data isolation – Data ownership is critical for building generative AI systems. A system should establish clear policies on data ownership and access rights, making sure data is accessible only to authorized users. Tenant data should be securely isolated from other tenants to maintain privacy and confidentiality. This can be achieved through physical isolation of data, for example, setting up a separate vector database for each tenant of a RAG application, or through logical separation, for example, using different indexes within a shared database. Role-based access control should be set up to make sure users within a tenant can access only the resources and data specific to their organization.

- Scalability and performance – Noisy neighbors can be a real problem, where one tenant is extremely chatty compared to others. Resources should be allocated according to tenant needs. Containerizing workloads can be a good strategy to isolate and scale tenants individually. This also ties into the deployment strategy described later in this section, through which a chatty tenant can be completely isolated from others.

- Deployment strategy – If use cases require strict isolation, each tenant can have dedicated instances of compute, storage, and model access. This means the gateway, data pipelines, data storage, training infrastructure, and other components are deployed on isolated infrastructure per tenant. For tenants that don’t need strict isolation, shared infrastructure can be used, with resources partitioned by a tenant identifier. A hybrid model can also be used, where the core foundation is deployed on shared infrastructure and specific components are isolated by tenant. The following diagram illustrates an example architecture.

- Observability – A mature generative AI system should provide detailed visibility into operations at both the central and tenant-specific levels. The foundation offers a central place for collecting logs, metrics, and traces, so you can set up reporting based on tenant needs.

- Cost management – A metered billing system should be set up based on usage. This requires tracking costs based on the resource usage of different components plus model inference costs. Model inference costs vary by model and by provider, but there should be a common mechanism for allocating costs per tenant. System administrators should be able to track and monitor usage across teams.
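A minimal sketch of per-tenant cost attribution for model inference follows. The model names and per-1,000-token prices are hypothetical placeholders, not real pricing; in practice, usage records would come from the gateway’s logs and prices from a maintained price list. `Decimal` is used so that many small per-request amounts sum without floating-point drift.

```python
from collections import defaultdict
from dataclasses import dataclass
from decimal import Decimal
from typing import Dict, Iterable, Tuple

# Hypothetical prices in USD per 1,000 tokens as (input, output). Real prices
# vary by model, provider, and Region.
PRICES: Dict[str, Tuple[Decimal, Decimal]] = {
    "model-small": (Decimal("0.0002"), Decimal("0.0006")),
    "model-large": (Decimal("0.003"), Decimal("0.015")),
}


@dataclass(frozen=True)
class UsageRecord:
    tenant: str
    model: str
    input_tokens: int
    output_tokens: int


def cost_of(record: UsageRecord) -> Decimal:
    """Inference cost of one request, priced separately for input and output tokens."""
    in_price, out_price = PRICES[record.model]
    return (record.input_tokens * in_price + record.output_tokens * out_price) / 1000


def cost_by_tenant(records: Iterable[UsageRecord]) -> Dict[str, Decimal]:
    """Aggregate inference cost per tenant for chargeback or showback reports."""
    totals: Dict[str, Decimal] = defaultdict(Decimal)
    for r in records:
        totals[r.tenant] += cost_of(r)
    return dict(totals)
```

Pricing input and output tokens separately matters because many providers charge different rates for each, so a tenant with long generated responses can cost noticeably more than one with long prompts.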

Let’s break this down using a RAG application as an example. In the hybrid model, the tenant deployment contains instances of a vector database that stores the embeddings, which supports strict data isolation requirements. The deployment also includes the application layer, which contains the frontend code and the orchestration logic that takes the user query, augments the prompt with context from the vector database, and invokes FMs on the central system. The foundational components that offer services such as evaluation and guardrails, which applications consume to become production-ready, are in a separate shared deployment. Logs, metrics, and traces from the applications can be fed into a central aggregation point.
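The tenant-specific vector data in this example can be isolated physically, with a dedicated instance per tenant, or logically. The sketch below illustrates the logical-separation option mentioned under tenant and data isolation: one index per tenant inside a shared store, with every read and write keyed by tenant ID. It assumes embeddings are computed elsewhere and passed in as lists of floats, and the class name is hypothetical. In a real deployment, the tenant ID should be derived from the caller’s authenticated identity rather than accepted as a request parameter.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def _cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


class TenantScopedVectorStore:
    """Logical isolation: one index per tenant inside a shared store.

    Every read and write is keyed by tenant ID, so a query can only ever
    rank documents from that tenant's own index.
    """

    def __init__(self) -> None:
        self._indexes: Dict[str, List[Tuple[str, Vector]]] = {}

    def add(self, tenant_id: str, text: str, embedding: Vector) -> None:
        self._indexes.setdefault(tenant_id, []).append((text, embedding))

    def query(self, tenant_id: str, embedding: Vector, k: int = 3) -> List[str]:
        index = self._indexes.get(tenant_id, [])  # unknown tenants see nothing
        ranked = sorted(index, key=lambda item: _cosine(embedding, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]
```

Logical separation keeps infrastructure shared and cheaper to operate; moving a tenant to a dedicated vector database instance changes where the index lives but not the tenant-keyed access pattern.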

Generative AI foundation maturity model

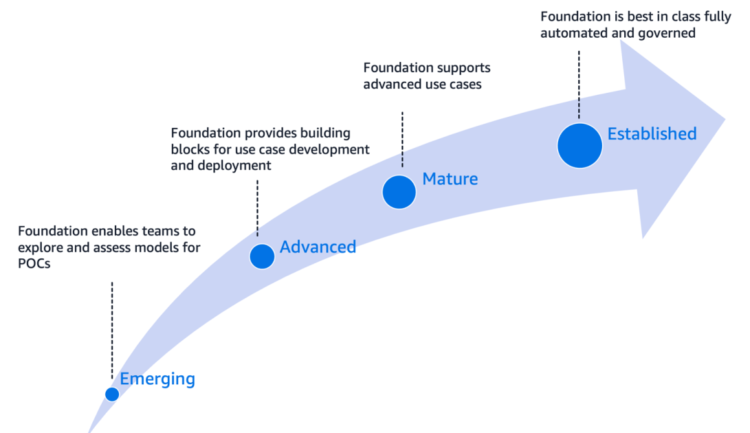

We have defined a maturity model to track the evolution of the generative AI foundation across different stages of adoption. The maturity model can be used to assess where you are in the development journey and to plan for expansion. We define the curve along four stages of adoption: emerging, advanced, mature, and established.

The details for each stage are as follows:

- Emerging – The foundation offers a playground for model exploration and assessment. Teams are able to develop proofs of concept using enterprise-approved models.

- Advanced – The foundation facilitates the first production use cases. Multiple environments exist for development, testing, and production deployment. Monitoring and alerts are established.

- Mature – Multiple teams are using the foundation and are able to develop complex use cases. CI/CD and infrastructure as code (IaC) practices accelerate the rollout of reusable components. Deeper observability, such as tracing, is established.

- Established – A best-in-class system is established: fully automated, operating at scale, and governed with responsible AI practices. The foundation enables diverse use cases, and most of the enterprise teams are onboarded onto it.

The evolution might not be strictly linear along the curve in terms of specific capabilities, but certain key performance indicators can be used to evaluate adoption and progress.

Conclusion

Establishing a comprehensive generative AI foundation can be a critical step in harnessing the power of AI at scale. Enterprise AI development brings unique challenges in areas such as agility, reliability, governance, scale, and collaboration. Therefore, a well-constructed foundation with the right components, adapted to the operating model of the business, helps in building and scaling generative AI applications across the enterprise.

The rapidly evolving generative AI landscape means there might be cutting-edge tools we haven’t covered in the tools landscape. If you’re using or aware of state-of-the-art solutions that align with the foundational components, we encourage you to share them in the comments section.

Our team is dedicated to helping customers solve challenges in generative AI development at scale, whether it’s architecting a generative AI foundation, setting up operational best practices, or implementing responsible AI practices. Leave us a comment and we will be glad to collaborate.

About the authors

Chaitra Mathur is a GenAI Specialist Solutions Architect at AWS. She works with customers across industries to build scalable generative AI platforms and operationalize them. Throughout her career, she has shared her expertise at numerous conferences and has authored several blogs in the Machine Learning and Generative AI domains.

Dr. Alessandro Cerè is a GenAI Evaluation Specialist and Solutions Architect at AWS. He assists customers across industries and regions in operationalizing and governing their generative AI systems at scale, ensuring they meet the highest standards of performance, safety, and ethical considerations. Bringing a unique perspective to the field of AI, Alessandro has a background in quantum physics and research experience in quantum communications and quantum memories. In his spare time, he pursues his passion for landscape and underwater photography.

Aamna Najmi is a GenAI and Data Specialist at AWS. She assists customers across industries and regions in operationalizing and governing their generative AI systems at scale, ensuring they meet the highest standards of performance, safety, and ethical considerations, and brings a unique perspective of modern data strategies to complement the field of AI. In her spare time, she pursues her passion for experimenting with food and discovering new places.

Dr. Andrew Kane is the WW Tech Leader for Security and Compliance for AWS Generative AI Services, leading the delivery of under-the-hood technical assets for customers around security, as well as working with CISOs on the adoption of generative AI services within their organizations. Before joining AWS at the beginning of 2015, Andrew spent two decades working in the fields of signal processing, financial payments systems, weapons tracking, and editorial and publishing systems. He is a keen karate enthusiast (just one belt away from Black Belt) and is also an avid home-brewer, using automated brewing hardware and other IoT sensors. He was the legal licensee of his ancient (AD 1468) English countryside village pub until early 2020.

Bharathi Srinivasan is a Generative AI Data Scientist at the AWS Worldwide Specialist Organization. She works on developing solutions for Responsible AI, focusing on algorithmic fairness, veracity of large language models, and explainability. Bharathi guides internal teams and AWS customers on their responsible AI journey. She has presented her work at various learning conferences.

Denis V. Batalov is a 17-year Amazon veteran and holds a PhD in Machine Learning. Denis worked on such exciting projects as Search Inside the Book, Amazon Mobile apps, and Kindle Direct Publishing. Since 2013 he has helped AWS customers adopt AI/ML technology as a Solutions Architect. Currently, Denis is a Worldwide Tech Leader for AI/ML responsible for the functioning of AWS ML Specialist Solutions Architects globally. Denis is a frequent public speaker; you can follow him on Twitter @dbatalov.

Nick McCarthy is a Generative AI Specialist at AWS. He has worked with AWS clients across various industries, including healthcare, finance, sports, telecoms, and energy, to accelerate their business outcomes through the use of AI/ML. Outside of work he likes to spend time traveling, trying new cuisines, and reading about science and technology. Nick has a Bachelor’s degree in Astrophysics and a Master’s degree in Machine Learning.

Alex Thewsey is a Generative AI Specialist Solutions Architect at AWS, based in Singapore. Alex helps customers across Southeast Asia design and implement solutions with ML and Generative AI. He also enjoys karting, working with open source projects, and trying to keep up with new ML research.

Willie Lee is a Senior Tech PM for the AWS worldwide specialists team focusing on GenAI. He is passionate about machine learning and the many ways it can impact our lives, especially in the area of language comprehension.
