In this new era of emerging AI technologies, we have the opportunity to build AI-powered assistants tailored to specific business requirements. Amazon Q Business, a new generative AI-powered assistant, can answer questions, provide summaries, generate content, and securely complete tasks based on data and information in an enterprise’s systems.

Large-scale data ingestion is crucial for applications such as document analysis, summarization, research, and knowledge management. These tasks often involve processing vast amounts of documents, which can be time-consuming and labor-intensive. However, ingesting large volumes of enterprise data poses significant challenges, particularly in orchestrating workflows to gather data from diverse sources.

In this post, we propose an end-to-end solution using Amazon Q Business to simplify integration of enterprise knowledge bases at scale.

Enhancing AWS Support Engineering efficiency

The AWS Support Engineering team faced the daunting task of manually sifting through numerous tools, internal sources, and AWS public documentation to find solutions for customer inquiries. For complex customer issues, the process was especially time-consuming and laborious, and at times it extended the wait for customers seeking resolutions. To address this, the team implemented a chat assistant using Amazon Q Business. This solution ingests and processes data from hundreds of thousands of support tickets, escalation notices, public AWS documentation, re:Post articles, and AWS blog posts.

Amazon Q Business removes the complexity of developing and managing ML infrastructure and models, so the team deployed their chat solution rapidly. Pre-built Amazon Q Business connectors such as the Amazon Simple Storage Service (Amazon S3) connector, together with document retrievers and upload capabilities, streamlined data ingestion and processing. This let the team provide swift, accurate responses to both basic and advanced customer queries.

The solution we propose in this post addresses similar enterprise data challenges and shows how Amazon Q Business can streamline operations and enhance customer service across various industries. First, we discuss end-to-end large-scale data integration with Amazon Q Business, covering data preprocessing, security guardrail implementation, and Amazon Q Business best practices. Then we introduce the solution deployment using three AWS CloudFormation templates.

Solution overview

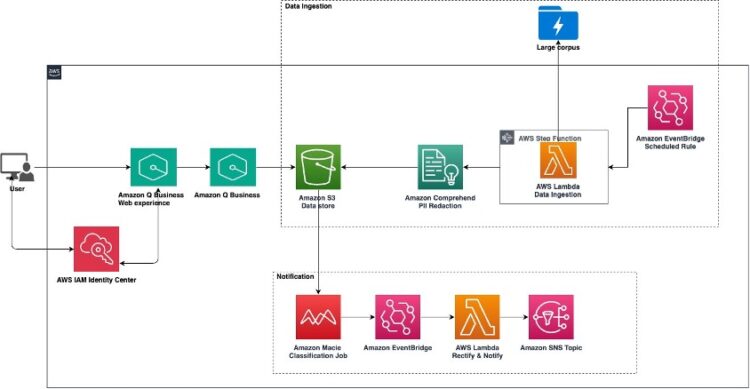

The architecture diagram represents the high-level design of a solution proven effective in production environments for AWS Support Engineering. This solution uses the capabilities of Amazon Q Business. We walk through the implementation of key components, including configuring enterprise data sources to build our knowledge base, document indexing and boosting, and implementing comprehensive security controls.

Amazon Q Business supports three user types as part of identity and access management:

- Service user – An end-user who accesses Amazon Q Business applications with permissions granted by their administrator to perform their job duties

- Service administrator – A user who manages Amazon Q Business resources and determines feature access for service users within the organization

- IAM administrator – A user responsible for creating and managing access policies for Amazon Q Business through AWS IAM Identity Center

The following workflow details how a service user accesses the application:

- The service user initiates an interaction with the Amazon Q Business application through the web experience, which is an endpoint URL.

- The service user’s permissions are authenticated using IAM Identity Center, an AWS solution that connects workforce users to AWS managed applications like Amazon Q Business. It enables end-user authentication and streamlines access management.

- The authenticated service user submits queries in natural language to the Amazon Q Business application.

- The Amazon Q Business application generates and returns answers drawn from the enterprise data uploaded to an S3 bucket, which is connected as a data source to Amazon Q Business. The data in this S3 bucket is regularly refreshed. Amazon Q Business uses a retriever to pull data from the index, so query responses draw on the most current information.

Large-scale data ingestion

Before the data is ingested into Amazon Q Business, it might need to be transformed into formats that Amazon Q Business supports. It might also contain sensitive data or personally identifiable information (PII) that requires redaction. These challenges create a need to orchestrate tasks like transformation, redaction, and secure ingestion.

Data ingestion workflow

To facilitate orchestration, this solution incorporates AWS Step Functions. Step Functions is a visual workflow service that orchestrates tasks and workloads resiliently and efficiently through built-in AWS integrations and error handling. The solution uses the Step Functions Map state, which runs the same set of steps for each item in a dataset in parallel. This makes the workflow efficient and speeds up overall processing.
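The following Python is a minimal sketch of how a Map state could drive the ingestion and processing functions described in the steps that follow. It assumes a Datastore API that is queried by date period. The code splits a date range into windows and builds an Amazon States Language (ASL) definition whose Map state runs an ingest task and a process task once per window. The state names, the seven-day window size, the retry settings, and the Lambda ARNs are hypothetical placeholders, not values from the solution's templates.

```python
import json
from datetime import date, timedelta


def prepare_map_input(start: date, end: date, days_per_window: int = 7) -> list:
    """Split the half-open range [start, end) into consecutive date windows.

    Each window becomes one Map state item (hypothetical input shape for a
    Datastore API that is queried by date period).
    """
    if days_per_window <= 0:
        raise ValueError("days_per_window must be positive")
    windows = []
    current = start
    while current < end:
        window_end = min(current + timedelta(days=days_per_window), end)
        windows.append({"start_date": current.isoformat(), "end_date": window_end.isoformat()})
        current = window_end
    return windows


def build_state_machine(prepare_arn: str, ingest_arn: str, process_arn: str,
                        max_concurrency: int = 10) -> dict:
    """Return an ASL definition: a prepare task followed by an inline Map state."""
    retry = [{
        "ErrorEquals": ["States.TaskFailed"],
        "IntervalSeconds": 5,
        "MaxAttempts": 3,
        "BackoffRate": 2.0,
    }]
    return {
        "Comment": "Hypothetical ingestion workflow: prepare windows, then ingest and process each one",
        "StartAt": "PrepareMapInput",
        "States": {
            "PrepareMapInput": {
                "Type": "Task",
                "Resource": prepare_arn,
                "ResultPath": "$.windows",  # the list returned by the function is stored here
                "Next": "IngestWindows",
            },
            "IngestWindows": {
                "Type": "Map",
                "ItemsPath": "$.windows",           # one iteration per date window
                "MaxConcurrency": max_concurrency,  # caps parallel calls to the Datastore API
                "ItemProcessor": {
                    "ProcessorConfig": {"Mode": "INLINE"},
                    "StartAt": "IngestData",
                    "States": {
                        # Retries apply per iteration, so one failing window is retried on its own
                        "IngestData": {"Type": "Task", "Resource": ingest_arn,
                                       "Retry": retry, "Next": "ProcessData"},
                        "ProcessData": {"Type": "Task", "Resource": process_arn,
                                        "Retry": retry, "End": True},
                    },
                },
                "End": True,
            },
        },
    }


windows = prepare_map_input(date(2024, 1, 1), date(2024, 1, 20))
definition = build_state_machine(
    "arn:aws:lambda:us-east-1:111122223333:function:prepare-map-input",  # placeholder
    "arn:aws:lambda:us-east-1:111122223333:function:ingest-data",        # placeholder
    "arn:aws:lambda:us-east-1:111122223333:function:process-data",       # placeholder
)
print(len(windows), windows[-1])
print(json.dumps(definition, indent=2))
```

A retry policy re-runs only the failing iteration. If an iteration still fails after its retries, an inline Map state fails as a whole. Adding a Catch to the inner states is one way to keep the results of the iterations that succeeded.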

The following diagram illustrates an example architecture for ingesting data through an endpoint that interfaces with a large corpus.

Step Functions orchestrates AWS services like AWS Lambda and organization APIs like Datastore to ingest, process, and store data securely. The workflow consists of the following steps:

- The Prepare Map Input Lambda function prepares the required input for the Map state. For example, the Datastore API might require certain input, like date periods, to query data. This step can define the date periods that the Map state uses as input.

- The Ingest Data Lambda function fetches data from the Datastore API based on the inputs from the Map state. The Datastore API can be inside or outside of the virtual private cloud (VPC). To handle large volumes, the data is split into smaller chunks so that no single Lambda invocation is overloaded. This lets Step Functions manage the workload, retry failed chunks, and isolate failures to individual chunks instead of disrupting the entire ingestion process.

- The fetched data is put into an S3 data store bucket for processing.

- The Process Data Lambda function redacts sensitive data with Amazon Comprehend. Amazon Comprehend provides real-time APIs, such as DetectPiiEntities and DetectEntities, that use natural language processing (NLP) machine learning (ML) models to identify the portions of text to redact. When Amazon Comprehend detects PII, the function redacts those terms by replacing them with a character of your choice (such as *). You can also use regular expressions to remove identifiers that have predetermined formats.

- Finally, the Lambda function creates two separate files:

  - A sanitized data document in a format that Amazon Q Business supports, which is parsed to generate chat responses.

  - A JSON metadata file for each document. It contains additional information to customize chat results for end-users and to apply boosting techniques that improve the user experience (discussed in the next section).
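The redaction step can be sketched as two small Python helpers. The first masks the character spans that Amazon Comprehend DetectPiiEntities reports. Each entity carries BeginOffset, EndOffset, Type, and Score, and this sketch treats EndOffset as an exclusive end index. The second helper uses a regular expression to mask identifiers that have a fixed format. The access key ID pattern and the 0.5 score threshold are illustrative assumptions. The entity list is hard-coded so that the example runs without AWS credentials.

```python
import re

# Hypothetical example of a "predetermined format": AWS access key IDs
# (AKIA for long-term or ASIA for temporary keys, followed by 16 characters).
ACCESS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[A-Z0-9]{16}\b")


def redact_entities(text: str, entities: list, mask_char: str = "*",
                    min_score: float = 0.5) -> str:
    """Mask every character covered by a PII entity.

    `entities` follows the shape of the Entities list returned by Amazon Comprehend
    DetectPiiEntities. Entities scoring below `min_score` (a hypothetical threshold)
    are left untouched.
    """
    chars = list(text)
    for entity in entities:
        if entity.get("Score", 1.0) < min_score:
            continue
        for i in range(entity["BeginOffset"], entity["EndOffset"]):
            chars[i] = mask_char
    return "".join(chars)


def redact_patterns(text: str, patterns=(ACCESS_KEY_ID,), mask_char: str = "*") -> str:
    """Mask fixed-format identifiers, keeping the original length of each match."""
    for pattern in patterns:
        text = pattern.sub(lambda m: mask_char * len(m.group(0)), text)
    return text


sample = "Email jane@example.com now"
# Hard-coded in Comprehend's response shape so the example runs offline.
found = [{"Type": "EMAIL", "Score": 0.99, "BeginOffset": 6, "EndOffset": 22}]
print(redact_entities(sample, found))
print(redact_patterns("key AKIAABCDEFGHIJKLMNOP end"))
```

In the Lambda function, the entity list would come from `boto3.client("comprehend").detect_pii_entities(Text=text, LanguageCode="en")["Entities"]`. The real-time API limits input size, so long documents must be split before they are sent to it.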

The metadata file for each data document must specify a unique DocumentId. All other attributes are optional. In this solution, the metadata file also defines values for attributes such as services, _created_at, and _last_updated_at.
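A minimal Python sketch of such a metadata file follows. It assumes the metadata layout used by the Amazon Q Business S3 connector (DocumentId, Title, ContentType, and an Attributes map). It also assumes the convention of storing each file under a metadata prefix as <document key>.metadata.json. Verify both against the connector documentation for your setup. The document ID, title, dates, and the metadata/ prefix are hypothetical. Custom attributes such as services typically also have to be defined on the index before they can be searched or boosted.

```python
import json
from datetime import datetime, timezone


def to_iso(dt: datetime) -> str:
    """Format a timezone-aware datetime as an ISO 8601 UTC string."""
    return dt.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")


def build_metadata(document_id: str, title: str, services: list,
                   created_at: datetime, updated_at: datetime,
                   content_type: str = "PDF") -> dict:
    """Build one metadata document for the S3 connector (assumed layout)."""
    return {
        "DocumentId": document_id,  # must be unique across all data documents
        "Title": title,             # assumed to surface as the _document_title attribute
        "ContentType": content_type,
        "Attributes": {
            "_created_at": to_iso(created_at),
            "_last_updated_at": to_iso(updated_at),
            "services": services,   # custom string-list attribute, later used for boosting
        },
    }


def metadata_key(document_key: str, metadata_prefix: str = "metadata/") -> str:
    """Assumed naming convention: <metadata prefix><document key>.metadata.json."""
    return f"{metadata_prefix}{document_key}.metadata.json"


meta = build_metadata(
    document_id="docs/amazon-q-business-guide.pdf",  # hypothetical
    title="Amazon Q Business User Guide",             # hypothetical
    services=["Amazon Q Business"],
    created_at=datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc),
    updated_at=datetime(2024, 6, 1, 8, 30, tzinfo=timezone.utc),
)
print(metadata_key("docs/amazon-q-business-guide.pdf"))
print(json.dumps(meta, indent=2))
```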

The two files are placed in a new S3 folder for Amazon Q Business to index, and the raw, unprocessed data is deleted from the S3 bucket. You can further restrict access to documents uploaded to an S3 bucket for specific users or groups using Amazon S3 access control lists (ACLs).

We integrated the S3 bucket with our application using the Amazon Q Business data source connector feature. Connectors consolidate data from multiple sources into a unified index for the Amazon Q Business application. The service offers various integration options, and Amazon S3 is one of the supported data sources.

Boosting performance

When you work with your specific dataset in Amazon Q Business, you can use relevance tuning to improve the performance and accuracy of search results. This feature lets you customize how Amazon Q Business prioritizes information within your ingested documents. For example, if your dataset includes product descriptions, customer reviews, and technical specifications, you can use relevance tuning to boost the importance of certain fields. You might prioritize product names in titles, give more weight to recent customer reviews, or emphasize specific technical attributes that are crucial for your business. By adjusting these parameters, you can influence the ranking of search results to better match your dataset’s characteristics and your users’ information needs, ultimately providing more relevant answers to their queries.

For the metadata file used in this example, we focus on boosting two key metadata attributes: _document_title and services. Assigning higher weights to these attributes gives documents with specific titles or services greater prominence in the search results, improving their visibility and relevance for users.

These higher weights for _document_title and services are set in the solution’s sample CloudFormation template.
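As a hedged sketch, the same boosting settings can be expressed through the Amazon Q Business API instead of CloudFormation. The following Python builds a native-index retriever configuration that boosts _document_title (a string attribute) and services (a string-list attribute) at the HIGH level. The field names reflect our understanding of the retriever's boostingOverride structure and should be checked against the API reference. The index ID is a placeholder.

```python
# Boosting levels accepted by the Amazon Q Business API, as we understand it.
BOOSTING_LEVELS = {"NONE", "LOW", "MEDIUM", "HIGH", "VERY_HIGH"}


def _check_level(level: str) -> str:
    """Reject levels outside the assumed set before building the request."""
    if level not in BOOSTING_LEVELS:
        raise ValueError(f"unsupported boosting level: {level}")
    return level


def build_retriever_configuration(index_id: str, string_attributes: dict,
                                  string_list_attributes: dict) -> dict:
    """Build a native-index retriever configuration with per-attribute boosting.

    Keys in the two dicts are attribute names and values are boosting levels.
    String and string-list attributes use different configuration types.
    """
    overrides = {}
    for name, level in string_attributes.items():
        overrides[name] = {"stringConfiguration": {"boostingLevel": _check_level(level)}}
    for name, level in string_list_attributes.items():
        overrides[name] = {"stringListConfiguration": {"boostingLevel": _check_level(level)}}
    return {"nativeIndexConfiguration": {"indexId": index_id, "boostingOverride": overrides}}


config = build_retriever_configuration(
    index_id="example-index-id",  # placeholder
    string_attributes={"_document_title": "HIGH"},
    string_list_attributes={"services": "HIGH"},
)
print(config)
```

You could then pass `config` as the `configuration` argument of `update_retriever` on a boto3 `qbusiness` client, together with your `applicationId` and `retrieverId`.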

Amazon Q Business guardrails

Implementing robust security measures is crucial to protect sensitive information. Amazon Q Business guardrails, also called chat controls, proved invaluable here for maintaining data privacy and security.

Amazon Q Business guardrails provide configurable rules that control the application’s behavior. They act as a safety net that minimizes access to, processing of, or disclosure of sensitive or inappropriate information. By defining boundaries for the application’s operations, organizations can maintain compliance with internal policies and external regulations. You can enable global-level or topic-level controls. Topic-level controls govern how Amazon Q Business responds to specific topics in chat.

The solution’s sample CloudFormation template enables a topic-level control.

This topic-level control blocks Amazon Q Business chat conversations that contain AWS service Amazon Resource Names (ARNs). When the Amazon Q Business application detects similar chat messages, it blocks the response and returns the message “This message is blocked because it contains secure content.”
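For illustration, the following Python builds a topic-level chat control of this kind as a dictionary. It assumes the topic configuration shape used by the Amazon Q Business UpdateChatControlsConfiguration API: a name, a description, example chat messages, and a content blocker rule. The topic name and the example ARNs are hypothetical.

```python
BLOCKED_MESSAGE = "This message is blocked because it contains secure content."


def build_topic_control(name: str, description: str, example_messages: list,
                        blocked_message: str = BLOCKED_MESSAGE) -> dict:
    """Build a topic-level chat control that blocks conversations similar to the examples."""
    if not example_messages:
        raise ValueError("at least one example chat message is required")
    return {
        "name": name,
        "description": description,
        # Incoming messages are compared with these examples by similarity.
        "exampleChatMessages": example_messages,
        "rules": [{
            "ruleType": "CONTENT_BLOCKER_RULE",
            "ruleConfiguration": {
                "contentBlockerRule": {"systemMessageOverride": blocked_message},
            },
        }],
    }


topic = build_topic_control(
    name="aws-resource-arns",  # hypothetical topic name
    description="Conversations that include AWS service ARNs",
    example_messages=[  # hypothetical examples
        "What is stored in arn:aws:s3:::example-bucket?",
        "Describe arn:aws:lambda:us-east-1:111122223333:function:example",
    ],
)
print(topic["rules"][0]["ruleType"])
```

A payload like this could be passed in the `topicConfigurationsToCreateOrUpdate` list of `update_chat_controls_configuration` on a boto3 `qbusiness` client. Because matching is similarity-based, several varied example messages might cover a topic better than a single one.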

For information about deploying the Amazon Q Business application with sample boosting and guardrails, refer to the GitHub repo.

The following screenshot shows an example of the Amazon Q Business assistant chat landing page.

The following screenshot illustrates the assistant’s behavior when a user includes text that matches one of the similarity-based examples specified in the guardrail topic control.

Notification system

To enhance data security, you can deploy Amazon Macie classification jobs that scan for sensitive or PII data stored in S3 buckets. Macie uses machine learning to automatically discover, classify, and protect sensitive data stored in AWS. It focuses on identifying PII, intellectual property, and other sensitive data types to help organizations meet compliance requirements and protect their data from unauthorized access or breaches. The following diagram illustrates a sample notification architecture that alerts users about sensitive information that might have been stored inadvertently.

The workflow consists of the following steps:

- Macie scans the data store S3 bucket for sensitive information before the data is ingested.

- If Macie detects sensitive information, it publishes its findings to Amazon EventBridge.

- An EventBridge rule invokes the Rectify & Notify Lambda function.

- The Lambda function processes the alert, remediates it by removing the affected files from the S3 bucket, and sends a notification through Amazon Simple Notification Service (Amazon SNS) to the subscribed email addresses.
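The Rectify & Notify function could look roughly like the following Python sketch. It assumes that the EventBridge event for a Macie finding carries the affected bucket and key under detail.resourcesAffected, as described in the Macie finding event schema. It also assumes that the SNS topic ARN is supplied in a hypothetical NOTIFICATION_TOPIC_ARN environment variable. The sample event at the end is trimmed to the fields the helpers read.

```python
import json
import os

# Event pattern an EventBridge rule could use to route Macie findings to this function.
MACIE_FINDING_PATTERN = {"source": ["aws.macie"], "detail-type": ["Macie Finding"]}


def affected_object(event: dict) -> tuple:
    """Return (bucket, key) of the S3 object named in a Macie finding event."""
    resources = event["detail"]["resourcesAffected"]
    return resources["s3Bucket"]["name"], resources["s3Object"]["key"]


def build_notification(event: dict) -> tuple:
    """Return (subject, message) for SNS, keeping the subject under 100 characters."""
    bucket, key = affected_object(event)
    finding_type = event["detail"].get("type", "unknown")
    subject = f"Macie finding {finding_type}: s3://{bucket}/{key}"[:99]
    # Include the full finding event so subscribers can investigate.
    message = (f"Removed s3://{bucket}/{key} after Macie reported {finding_type}.\n\n"
               + json.dumps(event, indent=2))
    return subject, message


def handler(event, context):
    """Hypothetical Rectify & Notify handler: delete the object, then notify subscribers."""
    import boto3  # imported here so the helpers above can run without the AWS SDK

    bucket, key = affected_object(event)
    boto3.client("s3").delete_object(Bucket=bucket, Key=key)
    subject, message = build_notification(event)
    boto3.client("sns").publish(
        TopicArn=os.environ["NOTIFICATION_TOPIC_ARN"],  # hypothetical variable name
        Subject=subject,
        Message=message,
    )
    return {"deleted": f"s3://{bucket}/{key}"}


sample_event = {
    "source": "aws.macie",
    "detail-type": "Macie Finding",
    "detail": {
        "type": "SensitiveData:S3Object/Credentials",
        "resourcesAffected": {
            "s3Bucket": {"name": "example-data-bucket"},
            "s3Object": {"key": "raw/sample-file-with-credentials.txt"},
        },
    },
}
print(build_notification(sample_event)[0])
```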

This system enables rapid response to potential security alerts, so you can act immediately to protect sensitive data.

You can demonstrate the Macie detection and notification system by uploading a new file to the S3 bucket, such as sample-file-with-credentials.txt, that contains PII data types monitored by Macie, such as fake temporary AWS credentials. After the file is uploaded to Amazon S3 and the scheduled Macie detection job discovers it, the Lambda function immediately removes the file and sends a notification email to the SNS topic subscribers.

The notification contains the full Macie finding event. For more information about the Macie finding event format, refer to Amazon EventBridge event schema for Macie findings.

The findings are also visible on the Macie console, as shown in the following screenshot.

Additional recommendations

To further enhance the security and reliability of the Amazon Q Business application, we recommend the following measures. These additional security and logging implementations help protect the data, send alerts in response to potential warnings, and allow timely action on security incidents.

- Amazon CloudWatch logging for Amazon Q Business – You can use Amazon CloudWatch logging for Amazon Q Business to save the logs for the data source connectors and document-level errors, with particular attention to failed ingestion jobs. This practice is vital from a security perspective because it supports monitoring and quick identification of issues in the data ingestion process. Tracking failed jobs helps you mitigate potential data loss or corruption and maintain the reliability and completeness of the knowledge base.

- Unauthorized access monitoring on Amazon S3 – You can implement EventBridge rules that monitor mutating API actions on the S3 buckets. Configure these rules to invoke SNS notifications when unauthorized users perform such actions. Enable Amazon S3 server access logging to store detailed access records in a designated bucket, where you can analyze them with Amazon Athena for deeper insights. This approach provides real-time alerts for immediate response to potential security breaches and also maintains a detailed audit trail for thorough security analysis, helping make sure that only authorized entities can modify critical data.
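An event pattern for the unauthorized-access rule might look like the following Python sketch. It matches mutating S3 API calls recorded by AWS CloudTrail on one bucket when the caller is not on an allow list. The list of actions, the bucket name, and the allowed principal are hypothetical. Object-level calls such as PutObject are CloudTrail data events, so they reach EventBridge only if a trail logs data events for the bucket.

```python
import json

# Mutating S3 API calls to watch (a hypothetical selection).
MUTATING_ACTIONS = ["PutObject", "CopyObject", "DeleteObject", "DeleteObjects",
                    "PutObjectAcl", "PutBucketPolicy", "DeleteBucketPolicy"]


def build_s3_mutation_pattern(bucket_name: str, allowed_principal_arns: list) -> dict:
    """EventBridge pattern for mutating calls on one bucket by principals outside an allow list."""
    return {
        "source": ["aws.s3"],
        "detail-type": ["AWS API Call via CloudTrail"],
        "detail": {
            "eventSource": ["s3.amazonaws.com"],
            "eventName": MUTATING_ACTIONS,
            "requestParameters": {"bucketName": [bucket_name]},
            # "anything-but" matches any caller ARN that is not in the list.
            "userIdentity": {"arn": [{"anything-but": allowed_principal_arns}]},
        },
    }


pattern = build_s3_mutation_pattern(
    "example-data-bucket",  # placeholder bucket name
    ["arn:aws:sts::111122223333:assumed-role/ingestion-role/process-data"],  # placeholder
)
print(json.dumps(pattern, indent=2))
```

You could register the pattern with `put_rule` on a boto3 `events` client, passing `json.dumps(pattern)` as `EventPattern`, and then add the SNS topic as the rule's target. Role sessions appear as assumed-role ARNs whose session names can vary, so an exact-match allow list like this one may need adjusting for your principals.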

Prerequisites

In the following sections, we walk through implementing the end-to-end solution. This solution requires the following prerequisites:

- A new or existing AWS account that will be the data collection account

- Corresponding AWS Identity and Access Management (IAM) permissions to create S3 buckets and deploy CloudFormation stacks

Configure the data ingestion

In this post, we demonstrate the solution using publicly available documentation as our sample dataset. In your implementation, you can adapt this solution to your organization’s specific content sources, such as support tickets, JIRA issues, internal wikis, or other relevant documentation.

Deploy the following CloudFormation template to create the data ingestion resources:

- S3 data bucket

- Ingestion Lambda function

- Processing Lambda function

- Step Functions workflow

The data ingestion workflow in this example fetches and processes public data from the official Amazon Q Business and Amazon SageMaker documentation in PDF format. The Ingest Data Lambda function downloads the raw PDF documents, temporarily stores them in Amazon S3, and passes their Amazon S3 URLs to the Process Data Lambda function. The Process Data function performs the PII redaction (if enabled) and stores the processed documents and their metadata in the S3 path indexed by the Amazon Q Business application.

You can adapt the Step Functions Lambda code for ingestion and processing to your own internal data. Make sure that the documents and metadata are in a valid format for Amazon Q Business to index and that PII is properly redacted.

Configure IAM Identity Center

You can only have one IAM Identity Center instance per account. If your account already has an Identity Center instance, skip this step and proceed to configuring the Amazon Q Business application.

Deploy the following CloudFormation template to configure IAM Identity Center.

You will need to add details for a user, such as user name, email, first name, and last name.

After you deploy the CloudFormation template, you will receive an email asking you to accept the invitation and change the password for the user.

Before logging in, you will need to deploy the Amazon Q Business application.

Configure the Amazon Q Business application

Deploy the following CloudFormation template to configure the Amazon Q Business application.

You will need to add details such as the name of the IAM Identity Center stack you deployed previously and the name of the S3 bucket provisioned by the data ingestion stack.

After you deploy the CloudFormation template, complete the following steps to manage user access:

- On the Amazon Q Business console, choose Applications in the navigation pane.

- Choose the application you provisioned (workshop-app-01).

- Under User access, choose Manage user access.

- On the Users tab, choose the user you specified when deploying the CloudFormation stack.

- Choose Edit subscription.

- Under New subscription, choose Business Lite or Business Pro.

- Choose Confirm, and then choose Confirm again.

Now you can log in as the user you specified. You can find the URL for the web experience under Web experience settings.

If you are unable to log in, make sure that the user has been verified.

Sync the data source

Before you can use the Amazon Q Business application, the data source must be synchronized. The application’s data source is configured to sync hourly, and synchronization might take some time.

When the synchronization is complete, you can access the application and ask questions.
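If you don't want to wait for the hourly schedule, you can start a sync on demand. The following Python sketch wraps the StartDataSourceSyncJob operation of the Amazon Q Business API. It takes the client as a parameter, so the example can run against an offline stand-in object. The IDs are placeholders. A real call might be rejected if a sync is already in progress.

```python
def sync_data_source(client, application_id: str, index_id: str, data_source_id: str) -> str:
    """Start an on-demand sync of one data source and return the job's execution ID.

    `client` is expected to be a boto3 "qbusiness" client, or any object that has
    the same start_data_source_sync_job method.
    """
    response = client.start_data_source_sync_job(
        applicationId=application_id,
        indexId=index_id,
        dataSourceId=data_source_id,
    )
    return response["executionId"]


class FakeQBusinessClient:
    """Offline stand-in that records the request and returns a canned response."""

    def __init__(self):
        self.last_request = None

    def start_data_source_sync_job(self, **kwargs):
        self.last_request = kwargs
        return {"executionId": "example-execution-id"}


fake = FakeQBusinessClient()
execution_id = sync_data_source(fake, "example-app-id", "example-index-id", "example-ds-id")
print(execution_id, fake.last_request)
```

Against AWS, you would pass `boto3.client("qbusiness")` instead of the stand-in, along with the IDs of the application, index, and data source created by the CloudFormation stack.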

Clean up

After you’re done testing the solution, you can delete the resources to avoid incurring additional charges. For more information, see the Amazon Q Business pricing page. Follow the instructions in the GitHub repository to delete the resources and corresponding CloudFormation templates. Make sure to delete the provisioned CloudFormation stacks as follows:

- Delete the Amazon Q Business application stack.

- Delete the IAM Identity Center stack.

- Delete the data ingestion stack.

- For each deleted stack, check for any resources that were skipped during deletion, such as S3 buckets.

Delete any skipped resources on the console.

Conclusion

In this post, we demonstrated how to build a knowledge base solution by integrating enterprise data with Amazon Q Business using Amazon S3. This approach helps organizations improve operational efficiency, reduce response times, and gain valuable insights from their historical data. The solution follows AWS security best practices to promote data protection while enabling teams to build a comprehensive knowledge base from diverse data sources.

Whether you’re managing support tickets, internal documentation, or other business content, this solution can handle multiple data sources and scale to your needs, making it suitable for organizations of different sizes. By implementing this solution, you can enhance your operations with AI-powered assistance, automated responses, and intelligent routing of complex queries.

Try this solution with your own use case, and let us know about your experience in the comments section.

About the Authors

Omar Elkharbotly is a Senior Cloud Support Engineer at AWS, specializing in Data, Machine Learning, and Generative AI solutions. With extensive experience in helping customers architect and optimize their cloud-based AI/ML/GenAI workloads, Omar works closely with AWS customers to solve complex technical challenges and implement best practices across the AWS AI/ML/GenAI service portfolio. He is passionate about helping organizations use the full potential of cloud computing to drive innovation in generative AI and machine learning.

Vania Toma is a Principal Cloud Support Engineer at AWS, focused on Networking and Generative AI solutions. He has deep expertise in resolving complex, cross-domain technical challenges through systematic problem-solving methodologies. With a customer-obsessed mindset, he uses emerging technologies to drive innovation and deliver exceptional customer experiences.

Bhavani Kanneganti is a Principal Cloud Support Engineer at AWS. She specializes in solving complex customer issues on the AWS Cloud, focusing on infrastructure as code, container orchestration, and generative AI technologies. She collaborates with teams across AWS to design solutions that enhance the customer experience. Outside of work, Bhavani enjoys cooking and traveling.

Mattia Sandrini is a Senior Cloud Support Engineer at AWS, specialized in Machine Learning technologies and Generative AI solutions, helping customers operate and optimize their ML workloads. With a deep passion for driving performance improvements, he dedicates himself to empowering both customers and teams through innovative ML-enabled solutions. Away from his technical pursuits, Mattia embraces his passion for travel and adventure.

Kevin Draai is a Senior Cloud Support Engineer at AWS who specializes in Serverless technologies and development within the AWS Cloud. Kevin has a passion for creating solutions through code while making sure they are built on solid infrastructure. Outside of work, Kevin enjoys art and sport.

Tipu Qureshi is a Senior Principal Engineer at AWS. Tipu helps customers design and optimize their cloud technology strategy as a senior principal engineer in AWS Support & Managed Services. For over 15 years, he has designed, operated, and supported diverse distributed systems at scale with a passion for operational excellence. He currently works on generative AI and operational excellence.
