Handling streaming data was once considered an avant-garde approach. Since the introduction of relational database management systems in the 1970s and traditional data warehousing systems in the late 1980s, all data workloads began and ended with so-called batch processing. Batch processing relies on the concept of collecting numerous tasks in a group (or batch) and processing these tasks in a single operation.

On the flip side, there is the concept of streaming data. Although streaming data is still sometimes considered a cutting-edge technology, it already has a solid history. Everything started in 2002, when Stanford University researchers published the paper “Models and Issues in Data Stream Systems”. However, it wasn’t until almost a decade later (2011) that streaming data systems started to reach a wider audience, when the Apache Kafka platform for storing and processing streaming data was open-sourced. The rest is history, as people say. Nowadays, processing streaming data is not considered a luxury but a necessity.

Microsoft recognized the growing need to process data “as soon as it arrives”. Microsoft Fabric doesn’t disappoint in that regard: Real-Time Intelligence is at the core of the entire platform and offers a whole range of capabilities to handle streaming data efficiently.

Before we dive deep into explaining each component of Real-Time Intelligence, let’s take one step back and take a more tool-agnostic approach to stream processing in general.

What is stream processing?

If you enter the phrase from the section title in Google Search, you’ll get more than 100,000 results! Therefore, I’m sharing an illustration that represents our understanding of stream processing.

Let’s now examine typical use cases for stream processing:

- Fraud detection

- Real-time stock trades

- Customer activity

- Log monitoring: troubleshooting systems, devices, etc.

- Security information and event management: analyzing logs and real-time event data for monitoring and threat detection

- Warehouse inventory

- Ride share matching

- Machine learning and predictive analytics

As you may have noticed, streaming data has become an integral part of numerous real-life scenarios and is considered vastly superior to traditional batch processing for the aforementioned use cases.

Let’s now explore how streaming data processing is performed in Microsoft Fabric and which tools of the trade we have at our disposal.

The following illustration shows the high-level overview of all Real-Time Intelligence components in Microsoft Fabric:

Real-Time hub

Let’s kick it off by introducing the Real-Time hub. Every Microsoft Fabric tenant automatically provisions a Real-Time hub. This is a focal point for all data in motion across the entire organization. Similar to OneLake, there can be one, and only one, Real-Time hub per tenant, which means you can’t provision or create multiple Real-Time hubs.

The main purpose of the Real-Time hub is to enable quick and easy discovery, ingestion, management, and consumption of streaming data from a wide range of sources. In the following illustration, you can find the overview of all the data streams in the Real-Time hub in Microsoft Fabric:

Let’s now explore all the available options in the Real-Time hub.

- The All data streams tab displays all the streams and tables you can access. Streams represent the output from Fabric eventstreams, while tables come from KQL databases. We’ll explore both eventstreams and KQL databases in more detail in the following sections

- The My data streams tab shows all the streams you brought into Microsoft Fabric into My workspace

- The Data sources tab is at the core of bringing data into Fabric, both from inside and outside. Once you find yourself in the Data sources tab, you can choose between numerous out-of-the-box connectors, such as Kafka, CDC streams for various database systems, external cloud solutions like AWS and GCP, and many more

- The Microsoft sources tab filters the previous set of sources to include Microsoft data sources only

- The Fabric events tab displays the list of system events generated in Microsoft Fabric that you can access. Here, you may choose between Job events, OneLake events, and Workspace item events. Let’s dive into each of these three options:

  - Job events are events produced by status changes on Fabric monitor activities, such as job created, succeeded, or failed

  - OneLake events represent events produced by actions on files and folders in OneLake, such as file created, deleted, or renamed

  - Workspace item events are produced by actions on workspace items, such as item created, deleted, or renamed

- The Azure events tab shows the list of system events generated in Azure Blob Storage

The Real-Time hub provides various connectors for ingesting data into Microsoft Fabric. It also enables creating streams for all the supported sources. After a stream is created, you can process, analyze, and act on it.

- Processing a stream allows you to apply numerous transformations, such as aggregate, filter, union, and many more. The goal is to transform the data before you send the output to supported destinations

- Analyzing a stream enables you to add a KQL database as a destination of the stream, and then open the KQL database and execute queries against it

- Acting on streams means setting alerts based on conditions and specifying actions to be taken when those conditions are met

Eventstreams

If you’re a low-code or no-code data professional and you need to handle streaming data, you’ll love Eventstreams. In a nutshell, an eventstream allows you to connect to numerous data sources, which we examined in the previous section, optionally apply various data transformation steps, and finally output results into one or more destinations. The following figure illustrates a common workflow for ingesting streaming data into three different destinations (Eventhouse, Lakehouse, and Activator):

Within the Eventstream settings, you can adjust the retention period for the incoming data. By default, the data is retained for one day, and events are automatically removed when the retention period expires.

Apart from that, you may also want to fine-tune the event throughput for incoming and outgoing events. There are three options to choose from:

- Low: < 10 MB/s

- Medium: 10-100 MB/s

- High: > 100 MB/s

Eventhouse and KQL database

In the previous section, you’ve learned how to connect to various streaming data sources, optionally transform the data, and finally load it into the final destination. As you might have noticed, one of the available destinations is the Eventhouse. In this section, we’ll explore the Microsoft Fabric items used to store the data within the Real-Time Intelligence workload.

Eventhouse

We’ll first introduce the Eventhouse item. The Eventhouse is nothing else but a container for KQL databases. The Eventhouse itself doesn’t store any data; it simply provides the infrastructure within the Fabric workspace for dealing with streaming data. The following figure displays the System overview page of the Eventhouse:

The great thing about the System overview page is that it provides all the key information at a glance. Hence, you can immediately understand the running state of the eventhouse, OneLake storage usage (further broken down per individual KQL database), compute usage, most active databases and users, and recent events.

If we switch to the Databases page, we can see a high-level overview of the KQL databases that are part of the existing Eventhouse, as shown below:

You can create multiple eventhouses in a single Fabric workspace. Also, a single eventhouse may contain one or more KQL databases:

Let’s wrap up the story about the Eventhouse by explaining the concept of Minimum consumption. By design, the Eventhouse is optimized to auto-suspend services when not in use. Therefore, when these services are reactivated, it might take some time for the Eventhouse to be fully available again. However, there are certain business scenarios when this latency is not acceptable. In these scenarios, make sure to configure the Minimum consumption feature. With Minimum consumption configured, the service is always available, but you are responsible for determining the minimum level, which is then available for the KQL databases inside the Eventhouse.

KQL database

Now that you’ve learned about the Eventhouse container, let’s focus on examining the core item for storing real-time analytics data: the KQL database.

Let’s take one step back and explain the name of the item first. While most data professionals have at least heard about SQL (which stands for Structured Query Language), I’m quite confident that KQL is much more cryptic than its “structured” relative.

You might have rightly assumed that QL in the abbreviation stands for Query Language. But what does the letter K represent? It’s an abbreviation for Kusto. I hear you, I hear you: what is Kusto now?! Although the urban legend says that the language was named after the famous polymath and oceanographer Jacques Cousteau (his last name is pronounced “Kusto”), I couldn’t find any official confirmation from Microsoft to substantiate this story. What is definitely known is that it was the internal project name for the Log Analytics Query Language.

While we’re talking about history, let’s share some more history lessons. If you ever worked with Azure Data Explorer (ADX) in the past, you are in luck. The KQL database in Microsoft Fabric is the official successor of ADX. Similar to many other Azure data services that were rebuilt and integrated into the SaaS-ified nature of Fabric, ADX provided the platform for storing and querying real-time analytics data for KQL databases. The engine and core capabilities of the KQL database are the same as in Azure Data Explorer. The key difference is the management behavior: Azure Data Explorer is a PaaS (Platform-as-a-Service) offering, whereas the KQL database is a SaaS (Software-as-a-Service) solution.

Although you may store any data in the KQL database (unstructured, semi-structured, and structured), its main purpose is handling telemetry, logs, events, traces, and time series data. Under the hood, the engine leverages optimized storage formats, automatic indexing and partitioning, and advanced data statistics for efficient query planning.

Let’s now examine how to leverage the KQL database in Microsoft Fabric to store and query real-time analytics data. Creating a database is as simple as it could be. The following figure illustrates the 2-step process of creating a KQL database in Fabric:

- Click on the “+” sign next to KQL databases

- Provide the database name and choose its type. The type can be the default new database or a shortcut database. A shortcut database is a reference to a different database, which can be either another KQL database in Real-Time Intelligence in Microsoft Fabric or an Azure Data Explorer database

Don’t mix up the concept of OneLake shortcuts with the shortcut database type in Real-Time Intelligence! While the latter simply references the entire KQL/Azure Data Explorer database, OneLake shortcuts allow the use of data stored in Delta tables across other OneLake workloads, such as lakehouses and/or warehouses, or even external data sources (ADLS Gen2, Amazon S3, Dataverse, Google Cloud Storage, to name a few). This data can then be accessed from KQL databases by using the external_table() function.
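As a minimal sketch of that last point, the query below reads a few rows from a OneLake shortcut through external_table(). The shortcut name SalesOrders is purely hypothetical; substitute the name of a shortcut that actually exists in your KQL database.

```kusto
// "SalesOrders" is a hypothetical OneLake shortcut name, used for illustration only.
// external_table() references the shortcut by name; the rest is a regular KQL pipeline.
external_table('SalesOrders')
| take 10
```

Once referenced this way, the shortcut can be filtered, projected, and aggregated with the same operators as a native table.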

Let’s now take a quick tour of the key features of the KQL database from the user-interface perspective. The figure below illustrates the main points of interest:

- Tables – shows all of the tables within the database

- Shortcuts – shows tables created as OneLake shortcuts

- Materialized views – a materialized view represents an aggregation query over a source table or another materialized view. It consists of a single summarize statement

- Functions – these are user-defined functions stored and managed at the database level, similar to tables. These functions are created by using the .create function command

- Data streams – all streams that are relevant for the selected KQL database

- Data Activity Tracker – shows the activity in the database for the selected time period

- Tables/Data preview – enables switching between two different views. Tables displays the high-level overview of the database tables, while Data preview shows the top 100 records of the selected table
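To make the Materialized views and Functions entries more tangible, here is a hedged sketch of the two management commands involved. The names DailyDamageByState and EventsForState are hypothetical, and both commands assume a table called Weather with State, StartTime, and DamageProperty columns (the sample dataset queried later in this article). Each command is meant to be run on its own.

```kusto
// Hypothetical materialized view: total property damage per state and day.
// The body is a single summarize statement over the source table.

.create materialized-view DailyDamageByState on table Weather
{
    Weather
    | summarize TotalDamage = sum(DamageProperty) by State, bin(StartTime, 1d)
}

// Hypothetical user-defined function: returns all events for one state.
// Once created, it can be called like a table: EventsForState("TEXAS") | count

.create function EventsForState(stateName: string)
{
    Weather
    | where State == stateName
}
```

After creation, the view and the function should show up under the Materialized views and Functions nodes described above.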

Query and visualize data in Real-Time Intelligence

Now that you’ve learned how to store real-time analytics data in Microsoft Fabric, it’s time to get our hands dirty and provide some business insight out of this data. In this section, I’ll focus on explaining various options for extracting useful information from the data stored in the KQL database.

To that end, I’ll introduce common KQL functions for data retrieval and explore Real-Time Dashboards for visualizing the data.

KQL queryset

The KQL queryset is the Fabric item used to run queries and to view and customize results from various data sources. As soon as you create a new KQL database, a KQL queryset item is provisioned out of the box. This is a default KQL queryset that is automatically connected to the KQL database under which it exists. The default KQL queryset doesn’t allow multiple connections.

On the flip side, when you create a custom KQL queryset item, you can connect it to multiple data sources, as shown in the following illustration:

Let’s now introduce the building blocks of KQL and examine some of the most commonly used operators and functions. KQL is a fairly simple yet powerful language. To some extent, it’s very similar to SQL, especially in terms of using schema entities that are organized in hierarchies, such as databases, tables, and columns.

The most common type of KQL query statement is a tabular expression statement. This means that both query input and output consist of tables or tabular datasets. Operators in a tabular statement are sequenced by the “|” (pipe) symbol. Data flows (is piped) from one operator to the next, as displayed in the following code snippet:

MyTable

| where StartTime between (datetime(2024-11-01) .. datetime(2024-12-01))

| where State == "Texas"

| count

The piping is sequential: the data flows from one operator to another. This means that the query operator order is important and may affect both the output results and performance.

In the above code example, the data in MyTable is first filtered on the StartTime column, then filtered on the State column, and finally, the query returns a table containing a single column and single row, displaying the count of the filtered rows.
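To see the effect of operator order without depending on an existing table, here is a small self-contained sketch. The Events table and its three rows are made up for illustration, defined inline with the datatable operator.

```kusto
// Hypothetical inline data: three events, two of them in Texas.
let Events = datatable(State: string, StartTime: datetime)
[
    "Texas",   datetime(2024-11-05),
    "Florida", datetime(2024-11-10),
    "Texas",   datetime(2024-12-15)
];
// Filter first, then count: only rows that pass both filters are counted.
Events
| where StartTime between (datetime(2024-11-01) .. datetime(2024-12-01))
| where State == "Texas"
| count
```

Only the first row satisfies both filters, so the query returns a single row with Count = 1. Moving count ahead of the where operators would not work at all: after count, the only remaining column is Count, so there is no State or StartTime column left to filter on.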

The fair question at this point would be: what if I already know SQL? Do I need to learn another language just for the sake of querying real-time analytics data? The answer is as usual: it depends.

Luckily, I have good and great news to share here!

The good news is: you CAN write SQL statements to query the data stored in the KQL database. But the fact that you can do something doesn’t mean you should… By using SQL-only queries, you are missing the point and limiting yourself from using many KQL-specific functions that are built to handle real-time analytics queries in the most efficient way.

The great news is: by leveraging the explain operator, you can “ask” Kusto to translate your SQL statement into an equivalent KQL statement, as displayed in the following figure:
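In text form, the pattern looks roughly like the sketch below: a T-SQL comment line (--) followed by the explain keyword is placed in front of the SQL statement. The SQL itself is only an illustration against the Weather sample table introduced in the next paragraph; the translated KQL is returned as the query result, and its exact formatting may differ from what you would write by hand.

```kusto
--
explain
SELECT State, COUNT(*) AS TotalRecords
FROM Weather
GROUP BY State
```

The returned KQL text can then be copied into the queryset and refined with KQL-specific operators.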

In the following examples, we’ll query the sample Weather dataset, which contains data about weather storms and damages in the USA. Let’s start simple and then introduce some more complex queries. In the first example, we’ll count the number of records in the Weather table:

//Count records

Weather

| count

Wondering how to retrieve only a subset of records? You can use either the take or the limit operator:

//Sample data

Weather

| take 10

Please keep in mind that the take operator won’t return the TOP n records unless your data is sorted in a specific order. Generally, the take operator returns any n records from the table.
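The difference between take and top can be sketched with a few made-up rows. The inline Weather table below is a hypothetical stand-in for the sample dataset, so the query runs on its own.

```kusto
// Hypothetical inline data standing in for the Weather table.
let Weather = datatable(State: string, DamageProperty: long)
[
    "TEXAS",    5000,
    "NEW YORK", 120000,
    "FLORIDA",  30000,
    "OHIO",     0
];
// "Weather | take 2" would return any two rows; which ones is not guaranteed.
// top sorts by the given column (descending by default) and then returns the first n rows.
Weather
| top 2 by DamageProperty
```

This returns the NEW YORK and FLORIDA rows, in that order. The same result can be produced with sort by DamageProperty desc followed by take 2.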

In the next step, we want to extend this query and return not only a subset of rows, but also a subset of columns:

//Sample data from a subset of columns

Weather

| take 10

| project State, EventType, DamageProperty

The project operator is the equivalent of the SELECT statement in SQL. It specifies which columns should be included in the result set.

In the following example, we’re creating a calculated column, Duration, that represents the duration between the EndTime and StartTime values. In addition, we want to display only the top 10 records sorted by the DamageProperty value in descending order:

//Create calculated columns

Weather

| where State == 'NEW YORK' and EventType == 'Winter Weather'

| top 10 by DamageProperty desc

| project StartTime, EndTime, Duration = EndTime - StartTime, DamageProperty

It’s the perfect moment to introduce the summarize operator. This operator produces a table that aggregates the content of the input table. Hence, the following statement will display the total number of records per state, including only the top 5 states:

//Use summarize operator

Weather

| summarize TotalRecords = count() by State

| top 5 by TotalRecords

Let’s expand on the previous code and visualize the data directly in the result set. I’ll add another line of KQL code to render results as a bar chart:
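A sketch of what that extra line might look like, assuming the same Weather table: the render operator is appended as the last step of the pipeline, and barchart is one of its built-in visualization types.

```kusto
//Use summarize operator and render the result as a bar chart
Weather
| summarize TotalRecords = count() by State
| top 5 by TotalRecords
| render barchart
```

Because render only affects how the result is displayed, removing the last line returns the same five rows as a plain table.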

As you may notice, the chart can be additionally customized from the Visual formatting pane on the right-hand side, which provides even more flexibility when visualizing the data stored in the KQL database.

These were just basic examples of using the KQL language to retrieve the data stored in the Eventhouse and KQL databases. I can assure you that KQL won’t let you down in more advanced use cases when you need to manipulate and retrieve real-time analytics data.

I understand that SQL is the “lingua franca” of many data professionals. And although you can write SQL to retrieve the data from the KQL database, I strongly encourage you to refrain from doing this. As a quick reference, I’m providing you with a “SQL to KQL cheat sheet” to give you a head start when transitioning from SQL to KQL.

Also, my friend and fellow MVP Brian Bønk published and maintains a fantastic reference guide for the KQL language here. Make sure to give it a try if you are working with KQL.

Real-Time Dashboards

While KQL querysets represent a powerful way of exploring and querying data stored in Eventhouses and KQL databases, their visualization capabilities are quite limited. Yes, you can visualize results in the query view, as you’ve seen in one of the previous examples, but this is more of a “first aid” visualization that won’t make your managers and business decision-makers happy.

Fortunately, there is an out-of-the-box solution in Real-Time Intelligence that supports advanced data visualization concepts and features. Real-Time Dashboard is a Fabric item that enables the creation of interactive and visually appealing business-reporting solutions.

Let’s first identify the core elements of the Real-Time Dashboard. A dashboard consists of one or more tiles, optionally structured and organized in pages, where each tile is populated by the underlying KQL query.

As a first step in the process of creating Real-Time Dashboards, this setting must be enabled in the Admin portal of your Fabric tenant:

Next, you should create a new Real-Time Dashboard item in the Fabric workspace. From there, let’s connect to our Weather dataset and configure our first dashboard tile. We’ll execute one of the queries from the previous section to retrieve the top 10 states with the conditional count function. The figure below shows the tile settings panel with numerous options to configure:

- KQL query to populate the tile

- Visual representation of the data

- Visual formatting pane with options to set the tile name and description

- Visual type drop-down menu to select the desired visual type (in our case, it’s the table visual)

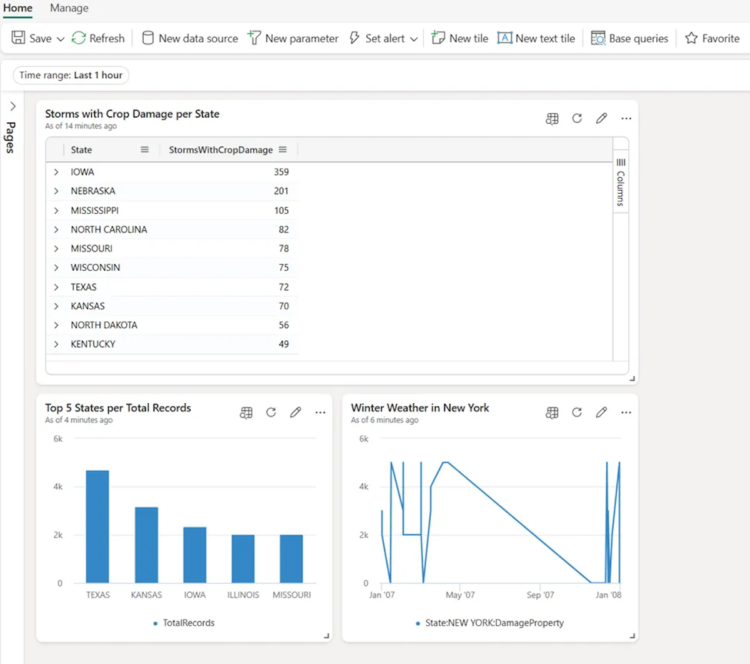
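The exact tile query is only visible in the figure, so the following is a sketch of what a top 10 query with a conditional count could look like, not the query used above. It assumes the Weather table and treats an event as “damaging” when DamageProperty is greater than zero, which is an assumption made purely for illustration.

```kusto
// Assumption: an event counts as "damaging" when DamageProperty > 0.
// countif() counts only the rows for which the condition is true.
Weather
| summarize TotalEvents = count(), DamagingEvents = countif(DamageProperty > 0) by State
| top 10 by DamagingEvents
```

A result shaped like this (one row per state with a few numeric columns) fits the table visual selected in the tile settings.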

Let’s now add two more tiles to our dashboard. I’ll copy and paste two queries that we previously used: the first will retrieve the top 5 states by total number of records, while the other will display the change in damage property value over time for the state of New York and the Winter Weather event type.

You can also add a tile directly from the KQL queryset to the existing dashboard, as illustrated below:

Let’s now focus on the various capabilities you have when working with Real-Time Dashboards. In the top ribbon, you’ll find options to add a New data source, set a new parameter, and add base queries. However, what really makes Real-Time Dashboards powerful is the possibility to set alerts on a Real-Time Dashboard. Depending on whether the conditions defined in the alert are met, you can trigger a specific action, such as sending an email or a Microsoft Teams message. An alert is created using the Activator item.

Visualize data with Power BI

Power BI is a mature and widely adopted tool for building robust, scalable, and interactive business reporting solutions. In this section, we specifically focus on examining how Power BI works in synergy with the Real-Time Intelligence workload in Microsoft Fabric.

Creating a Power BI report based on the data stored in the KQL database couldn’t be easier. You can choose to create a Power BI report directly from the KQL queryset, as displayed below:

Each query in the KQL queryset represents a table in the Power BI semantic model. From here, you can build visualizations and leverage all the existing Power BI features to design an effective, visually appealing report.

Obviously, you can still leverage the “regular” Power BI workflow, which assumes connecting from Power BI Desktop to a KQL database as a data source. In this case, you need to open the OneLake data hub and select KQL Databases as a data source:

The same as for SQL-based data sources, you can choose between the Import and DirectQuery storage modes for your real-time analytics data. Import mode creates a local copy of the data in Power BI’s database, while DirectQuery enables querying the KQL database in near real time.

Activator

Activator is one of the most innovative features in the entire Microsoft Fabric realm. I’ll cover Activator in detail in a separate article. Here, I just want to introduce this service and briefly emphasize its main characteristics.

Activator is a no-code solution for automatically taking actions when conditions in the underlying data are met. Activator can be used in conjunction with Eventstreams, Real-Time Dashboards, and Power BI reports. Once the data hits a certain threshold, Activator automatically triggers the specified action, for example, sending an email or a Microsoft Teams message, or even firing Power Automate flows. I’ll cover all these scenarios in more depth in a separate article, where I also provide some practical scenarios for implementing the Activator item.

Conclusion

Real-Time Intelligence, something that started as a part of the “Synapse experience” in Microsoft Fabric, is now a separate, dedicated workload. That tells us a lot about Microsoft’s vision and roadmap for Real-Time Intelligence!

Don’t forget: initially, Real-Time Analytics was included under the Synapse umbrella, together with the Data Engineering, Data Warehousing, and Data Science experiences. However, Microsoft thought that handling streaming data deserves a dedicated workload in Microsoft Fabric, which absolutely makes sense considering the growing need to deal with data in motion and provide insight from this data as soon as it is captured. In that sense, Microsoft Fabric provides a whole suite of powerful services, as the next generation of tools for processing, analyzing, and acting on data as it’s generated.

I’m quite confident that the Real-Time Intelligence workload will become more and more significant in the future, considering the evolution of data sources and the increasing pace of data generation.

Thanks for reading!