In the first post of this series, we introduced a comprehensive evaluation framework for Amazon Q Business, a fully managed Retrieval Augmented Generation (RAG) solution that uses your company’s proprietary data without the complexity of managing large language models (LLMs). The first post focused on selecting appropriate use cases, preparing data, and implementing metrics to support a human-in-the-loop evaluation process.

In this post, we dive into the solution architecture necessary to implement this evaluation framework for your Amazon Q Business application. We explore two distinct evaluation solutions:

- Comprehensive evaluation workflow – This ready-to-deploy solution uses AWS CloudFormation stacks to set up an Amazon Q Business application, complete with user access, a custom UI for review and evaluation, and the supporting evaluation infrastructure

- Lightweight AWS Lambda based evaluation – Designed for users with an existing Amazon Q Business application, this streamlined solution employs an AWS Lambda function to efficiently assess the application’s accuracy

By the end of this post, you’ll have a clear understanding of how to implement an evaluation framework that aligns with your specific needs, along with a detailed walkthrough, so that your Amazon Q Business application delivers accurate and reliable results.

Challenges in evaluating Amazon Q Business

Evaluating the performance of Amazon Q Business, which uses a RAG model, presents several challenges because it integrates retrieval and generation components. It’s crucial to identify which aspects of the solution need evaluation. For Amazon Q Business, both the retrieval accuracy and the quality of the answer output are important factors to assess. In this section, we discuss key metrics that need to be included for a RAG generative AI solution.

Context recall

Context recall measures the extent to which all relevant content is retrieved. High recall provides comprehensive information gathering but might introduce extraneous data.

For example, a user might ask the question “What can you tell me about the geography of the United States?” They might get the following responses:

- Expected: The United States is the third-largest country in the world by land area, covering approximately 9.8 million square kilometers. It has a diverse range of geographical features.

- High context recall: The United States spans approximately 9.8 million square kilometers, making it the third-largest country globally by land area. The country’s geography is highly diverse, featuring the Rocky Mountains stretching from New Mexico to Alaska, the Appalachian Mountains along the eastern states, the expansive Great Plains in the central region, and arid deserts like the Mojave in the southwest.

- Low context recall: The United States features significant geographical landmarks. Additionally, the country is home to unique ecosystems like the Everglades in Florida, a vast network of wetlands.
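As a rough illustration of the idea, the following Python sketch scores context recall as the share of ground truth statements that appear in the retrieved context. It is a simplification under stated assumptions: the function name, the hand-picked statements, and the plain substring match are hypothetical stand-ins for the semantic judgment that an LLM-aided evaluator would apply.

```python
def context_recall(ground_truth_statements, retrieved_context):
    """Return the fraction of ground truth statements found in the context."""
    if not ground_truth_statements:
        return 0.0
    context = retrieved_context.lower()
    # Assumption: substring matching is a crude proxy for a semantic
    # "is this statement supported by the context?" check.
    supported = sum(1 for s in ground_truth_statements if s.lower() in context)
    return supported / len(ground_truth_statements)


# Hand-picked statements taken from the expected answer in the example above.
statements = ["third-largest country", "9.8 million square kilometers"]
high_recall_context = (
    "The United States spans approximately 9.8 million square kilometers, "
    "making it the third-largest country globally by land area."
)
low_recall_context = "The United States features significant geographical landmarks."

print(context_recall(statements, high_recall_context))
print(context_recall(statements, low_recall_context))
```

The first context covers both statements and the second covers neither, which mirrors the high and low recall cases listed above.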

The following diagram illustrates the context recall workflow.

Context precision

Context precision assesses the relevance and conciseness of retrieved information. High precision indicates that the retrieved information closely matches the query intent, reducing irrelevant data.

For example, the question “Why is Silicon Valley great for tech startups?” might give the following answers:

- Ground truth answer: Silicon Valley is famous for fostering innovation and entrepreneurship in the technology sector.

- High precision context: Many groundbreaking startups originate from Silicon Valley, benefiting from a culture that encourages innovation and risk-taking.

- Low precision context: Silicon Valley experiences a Mediterranean climate, with mild, wet winters and warm, dry summers, contributing to its appeal as a place to live and work.
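One common way to turn this idea into a number is a rank-weighted average of the precision at each position that holds a relevant passage. The sketch below assumes the relevance labels are already available as booleans (in practice an LLM judge or a human reviewer would supply them); the function name and inputs are illustrative and not part of any service API.

```python
def context_precision(relevance_flags):
    """Average precision@k over the ranks that hold a relevant passage.

    relevance_flags: list of booleans, ordered by retrieval rank,
    where True means the passage at that rank is relevant.
    """
    relevant_total = sum(1 for flag in relevance_flags if flag)
    if relevant_total == 0:
        return 0.0
    hits = 0
    score = 0.0
    for rank, is_relevant in enumerate(relevance_flags, start=1):
        if is_relevant:
            hits += 1
            score += hits / rank  # precision at this rank
    return score / relevant_total


# Relevant passage ranked first, off-topic passage ranked second.
print(context_precision([True, False]))
# Same passages, but the off-topic one is ranked first.
print(context_precision([False, True]))
```

With this formulation, retrieving an off-topic passage (such as the climate description above) ahead of the relevant one lowers the score, even though the same passages were retrieved.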

The following diagram illustrates the context precision workflow.

Answer relevancy

Answer relevancy evaluates whether responses fully address the query without unnecessary details. Relevant answers enhance user satisfaction and trust in the system.

For example, a user might ask the question “What are the key features of Amazon Q Business Service, and how can it benefit enterprise customers?” They might get the following answers:

- High relevance answer: Amazon Q Business Service is a RAG generative AI solution designed for enterprise use. Key features include a fully managed generative AI solution, integration with enterprise data sources, robust security protocols, and customizable virtual assistants. It benefits enterprise customers by enabling efficient information retrieval, automating customer support tasks, enhancing employee productivity through quick access to data, and providing insights through analytics on user interactions.

- Low relevance answer: Amazon Q Business Service is part of Amazon’s suite of cloud services. Amazon also offers online shopping and streaming services.

The following diagram illustrates the answer relevancy workflow.

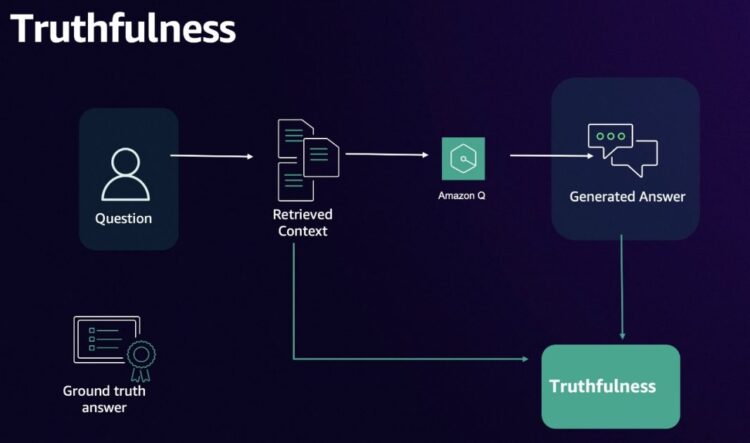

Truthfulness

Truthfulness verifies factual accuracy by comparing responses to verified sources. Truthfulness is crucial to maintain the system’s credibility and reliability.

For example, a user might ask “What is the capital of Canada?” They might get the following responses:

- Context: Canada’s capital city is Ottawa, located in the province of Ontario. Ottawa is known for its historic Parliament Hill, the center of government, and the scenic Rideau Canal, a UNESCO World Heritage site.

- High truthfulness answer: The capital of Canada is Ottawa.

- Low truthfulness answer: The capital of Canada is Toronto.

The following diagram illustrates the truthfulness workflow.

Evaluation methods

Deciding who should conduct the evaluation can significantly affect results. Options include:

- Human-in-the-Loop (HITL) – Human evaluators manually assess the accuracy and relevance of responses, offering nuanced insights that automated systems might miss. However, it is a slow process and difficult to scale.

- LLM-aided evaluation – Automated methods, such as the Ragas framework, use language models to streamline the evaluation process. However, these might not fully capture the complexities of domain-specific knowledge.

Each of these preparatory and evaluative steps contributes to a structured approach to evaluating the accuracy and effectiveness of Amazon Q Business in supporting enterprise needs.

Solution overview

In this post, we explore two different solutions to give you the details of an evaluation framework, so you can use it and adapt it to your own use case.

Solution 1: End-to-end evaluation solution

For a quick start evaluation framework, this solution uses a hybrid approach with Ragas (automated scoring) and HITL evaluation for robust accuracy and reliability. The architecture includes the following components:

- User access and UI – Authenticated users interact with a frontend UI to upload datasets, review Ragas output, and provide human feedback

- Evaluation solution infrastructure – Core components include:

  - Ragas scoring – Automated metrics provide an initial layer of evaluation

  - HITL review – Human evaluators refine Ragas scores through the UI, providing nuanced accuracy and reliability

By integrating a metric-based approach with human validation, this architecture makes sure Amazon Q Business delivers accurate, relevant, and trustworthy responses for enterprise users. This solution further enhances the evaluation process by incorporating HITL reviews, enabling human feedback to refine automated scores for higher precision.

A quick video demo of this solution is shown below:

Solution architecture

The solution architecture is designed with the following core functionalities to support an evaluation framework for Amazon Q Business:

- User access and UI – Users authenticate through Amazon Cognito, and upon successful login, interact with a Streamlit-based custom UI. This frontend allows users to upload CSV datasets to Amazon Simple Storage Service (Amazon S3), review Ragas evaluation outputs, and provide human feedback for refinement. The application exchanges the Amazon Cognito token for an AWS IAM Identity Center token, granting scoped access to Amazon Q Business.

- UI infrastructure – The UI is hosted behind an Application Load Balancer, supported by Amazon Elastic Compute Cloud (Amazon EC2) instances running in an Auto Scaling group for high availability and scalability.

- Upload dataset and trigger evaluation – Users upload a CSV file containing queries and ground truth answers to Amazon S3, which triggers an evaluation process. A Lambda function reads the CSV, stores its content in an Amazon DynamoDB table, and initiates further processing through a DynamoDB stream.

- Consuming DynamoDB stream – A separate Lambda function processes new entries from the DynamoDB stream and publishes messages to an Amazon Simple Queue Service (Amazon SQS) queue, which serves as a trigger for the evaluation Lambda function.

- Ragas scoring – The evaluation Lambda function consumes SQS messages and sends queries (prompts) to Amazon Q Business to generate answers. It then evaluates the prompt, ground truth, and generated answer using the Ragas evaluation framework. Ragas computes automated evaluation metrics such as context recall, context precision, answer relevancy, and truthfulness. The results are stored in DynamoDB and visualized in the UI.

- HITL review – Authenticated users can review and refine Ragas scores directly through the UI, providing nuanced and accurate evaluations by incorporating human insights into the process.

This architecture uses AWS services to deliver a scalable, secure, and efficient evaluation solution for Amazon Q Business, combining automated and human-driven evaluations.
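The first two stages of this pipeline (reading the CSV and fanning rows out as queue messages) can be sketched in plain Python. This is a minimal sketch, not the code shipped in the repository: the item fields id and status, the PENDING value, and both function names are hypothetical, and the calls that would actually write to DynamoDB or SQS are left out so the example runs without AWS credentials.

```python
import csv
import io
import json
import uuid


def csv_to_items(csv_text):
    """Turn the uploaded CSV text into one table item per row."""
    reader = csv.DictReader(io.StringIO(csv_text))
    items = []
    for row in reader:
        items.append(
            {
                "id": str(uuid.uuid4()),  # hypothetical partition key
                "prompt": row["prompt"].strip(),
                "ground_truth": row["ground_truth"].strip(),
                "status": "PENDING",  # hypothetical processing marker
            }
        )
    return items


def to_queue_message(item):
    """Serialize the fields the evaluation step needs as a JSON message body."""
    return json.dumps(
        {
            "id": item["id"],
            "prompt": item["prompt"],
            "ground_truth": item["ground_truth"],
        }
    )


sample_csv = "prompt,ground_truth\nQuestion one?,Answer one\nQuestion two?,Answer two\n"
for item in csv_to_items(sample_csv):
    print(to_queue_message(item))
```

Keeping the parsing and serialization in pure functions like these makes each stage testable on its own before wiring it to the S3 trigger, the DynamoDB stream, and the SQS queue.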

Prerequisites

For this walkthrough, you should have the following prerequisites:

Additionally, make sure that all the resources you deploy are in the same AWS Region.

Deploy the CloudFormation stack

Complete the following steps to deploy the CloudFormation stack:

- Clone the repository or download the files to your local computer.

- Unzip the downloaded file (if you used this option).

- Using your local computer command line, use the ‘cd’ command to change directory into ./sample-code-for-evaluating-amazon-q-business-applications-using-ragas-main/end-to-end-solution

- Make sure the ./deploy.sh script can run by executing the command chmod 755 ./deploy.sh.

- Execute the CloudFormation deployment script provided as follows:

You can follow the deployment progress on the AWS CloudFormation console. It takes approximately 15 minutes to complete the deployment, after which you will see a page similar to the following screenshot.

Add users to Amazon Q Business

You need to provision users for the pre-created Amazon Q Business application. Refer to Setting up for Amazon Q Business for instructions to add users.

Upload the evaluation dataset through the UI

In this section, you review and upload the following CSV file containing an evaluation dataset through the deployed custom UI.

This CSV file contains two columns: prompt and ground_truth. There are four prompts and their associated ground truth in this dataset:

- What are the index types of Amazon Q Business and the features of each?

- I want to use Q Apps, which subscription tier is required to use Q Apps?

- What is the file size limit for Amazon Q Business via file upload?

- What data encryption does Amazon Q Business support?
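Before uploading your own dataset, it can help to check that the file has the two expected columns and no empty cells. The following sketch is one way to do that with the Python standard library; the function name and the wording of the messages are hypothetical, and the ground truth value in the sample is a placeholder rather than the text from the real dataset.

```python
import csv
import io

REQUIRED_COLUMNS = ("prompt", "ground_truth")


def validate_dataset(csv_text):
    """Return a list of problems; an empty list means the file looks usable."""
    reader = csv.DictReader(io.StringIO(csv_text))
    header = reader.fieldnames or []
    problems = [f"missing column: {col}" for col in REQUIRED_COLUMNS if col not in header]
    if problems:
        return problems
    # Data rows are counted from 1, not including the header row.
    for row_number, row in enumerate(reader, start=1):
        for col in REQUIRED_COLUMNS:
            if not (row[col] or "").strip():
                problems.append(f"row {row_number}: empty {col}")
    return problems


# The prompt contains a comma, so it must be quoted in the CSV file.
sample = (
    "prompt,ground_truth\n"
    '"I want to use Q Apps, which subscription tier is required to use Q Apps?",'
    "<your ground truth>\n"
)
print(validate_dataset(sample))
```

A prompt that contains a comma, like the second one in the list above, has to be wrapped in double quotes, otherwise it is split across columns.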

To upload the evaluation dataset, complete the following steps:

- On the AWS CloudFormation console, choose Stacks in the navigation pane.

- Choose the evals stack that you already launched.

- On the Outputs tab, take note of the user name and password to log in to the UI application, and choose the UI URL.

The custom UI will redirect you to the Amazon Cognito login page for authentication.

The UI application authenticates the user with Amazon Cognito, and initiates the token exchange workflow to implement a secure ChatSync API call with Amazon Q Business.

- Use the credentials you noted earlier to log in.

For more information about the token exchange flow between IAM Identity Center and the identity provider (IdP), refer to Building a Custom UI for Amazon Q Business.

- After you log in to the custom UI used for Amazon Q evaluation, choose Upload Dataset, then upload the dataset CSV file.

After the file is uploaded, the evaluation framework will send the prompt to Amazon Q Business to generate the answer, and then send the prompt, ground truth, and answer to Ragas to evaluate. During this process, you can also review the uploaded dataset (including the four questions and associated ground truth) on the Amazon Q Business console, as shown in the following screenshot.

After about 7 minutes, the workflow will finish, and you should see the evaluation result for the first question.

Perform HITL evaluation

After the Lambda function has completed its execution, Ragas scoring will be shown in the custom UI. Now you can review metric scores generated using Ragas (an LLM-aided evaluation method), and you can provide human feedback as an evaluator for further calibration. This human-in-the-loop calibration can further improve the evaluation accuracy, because the HITL process is particularly valuable in fields where human judgment, expertise, or ethical considerations are crucial.

Let’s review the first question: “What are the index types of Amazon Q Business and the features of each?” You can read the question, the answers generated by Amazon Q Business, the ground truth, and the context.

Next, review the evaluation metrics scored by using Ragas. As discussed earlier, there are four metrics:

- Answer relevancy – Measures relevancy of answers. Higher scores indicate better alignment with the user input, and lower scores are given if the response is incomplete or includes redundant information.

- Truthfulness – Verifies factual accuracy by comparing responses to verified sources. Higher scores indicate better consistency with verified sources.

- Context precision – Assesses the relevance and conciseness of retrieved information. Higher scores indicate that the retrieved information closely matches the query intent, reducing irrelevant data.

- Context recall – Measures how many of the relevant documents (or pieces of information) were successfully retrieved. It focuses on not missing important results. Higher recall means fewer relevant documents were omitted.

For this question, all metrics showed Amazon Q Business achieved a high-quality response. It’s worthwhile to compare your own evaluation with these scores generated by Ragas.

Next, let’s review a question that returned with a low answer relevancy score. For example: “I want to use Q Apps, which subscription tier is required to use Q Apps?”

Analyzing both question and answer, we can consider the answer relevant and aligned with the user question, but the answer relevancy score from Ragas doesn’t reflect this human assessment, showing a lower score than expected. It’s important to calibrate the Ragas evaluation judgment as the human in the loop. You should read the question and answer carefully, and make the necessary changes to the metric score to reflect the HITL assessment. The updated results are then saved to DynamoDB.
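The calibration step amounts to letting a reviewer’s score take precedence over the automated one for the metrics they choose to change. The sketch below shows that merge in isolation; the function name, the metric keys, and the example values are hypothetical, and it assumes scores are on a 0 to 1 scale.

```python
METRICS = ("context_recall", "context_precision", "answer_relevancy", "truthfulness")


def apply_human_review(ragas_scores, human_scores):
    """Return final scores where reviewer-supplied values override automated ones."""
    final = dict(ragas_scores)  # copy, so the automated scores stay untouched
    for metric, value in human_scores.items():
        if metric not in METRICS:
            raise KeyError(f"unknown metric: {metric}")
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{metric} must be between 0 and 1, got {value}")
        final[metric] = value
    return final


# Hypothetical values: the reviewer judges the answer more relevant than the
# automated score suggests and leaves the other metric as is.
automated = {"answer_relevancy": 0.35, "truthfulness": 0.9}
reviewed = apply_human_review(automated, {"answer_relevancy": 0.9})
print(reviewed)
```

Keeping the automated scores unchanged alongside the reviewed ones makes it possible to compare the two later and see where the automated judgment tends to diverge from human assessment.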

Finally, save the metric scores in the CSV file, which you can then download to review the final metric scores.

Solution 2: Lambda based evaluation

If you’re already using Amazon Q Business, AmazonQEvaluationLambda allows for quick integration of evaluation methods into your application without setting up a custom UI application. It offers the following key features:

- Evaluates responses from Amazon Q Business using Ragas against a predefined test set of questions and ground truth data

- Outputs evaluation metrics that can be visualized directly in Amazon CloudWatch

Both solutions provide results based on the input dataset and the responses from the Amazon Q Business application, using Ragas to evaluate four key evaluation metrics (context recall, context precision, answer relevancy, and truthfulness).

This solution provides sample code to evaluate the Amazon Q Business application response. To use this solution, you need to have or create a working Amazon Q Business application integrated with IAM Identity Center or Amazon Cognito as an IdP. This Lambda function works in the same way as the Lambda function in the end-to-end evaluation solution, using Ragas against a test set of questions and ground truth. This lightweight solution doesn’t have a custom UI, but it can provide result metrics (context recall, context precision, answer relevancy, truthfulness) for visualization in CloudWatch. For deployment instructions, refer to the following GitHub repo.
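To give a sense of how scores could reach CloudWatch, the following sketch builds the MetricData list that the boto3 CloudWatch client accepts in put_metric_data. It is an assumption about how such a function might publish metrics, not the code in the repository: the function name, the ApplicationId dimension, and the QBusinessEvaluation namespace are hypothetical. The publishing call is shown as a comment so the example runs without AWS credentials.

```python
def build_metric_data(scores, application_id):
    """Build a CloudWatch MetricData list from a metric-name-to-score mapping."""
    return [
        {
            "MetricName": name,
            # Hypothetical dimension used to separate applications.
            "Dimensions": [{"Name": "ApplicationId", "Value": application_id}],
            "Value": float(value),
            "Unit": "None",  # scores are unitless ratios
        }
        for name, value in sorted(scores.items())
    ]


# Hypothetical scores and application identifier.
metric_data = build_metric_data(
    {"truthfulness": 0.8, "answer_relevancy": 1, "context_recall": 0.75},
    application_id="app-123",
)
print(metric_data)

# Publishing requires AWS credentials and permissions, for example:
# import boto3
# boto3.client("cloudwatch").put_metric_data(
#     Namespace="QBusinessEvaluation",  # hypothetical namespace
#     MetricData=metric_data,
# )
```

Once published, each metric name appears as its own time series in CloudWatch, so the four scores can be graphed or alarmed on separately.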

Using evaluation results to improve Amazon Q Business application accuracy

This section outlines strategies to improve key evaluation metrics (context recall, context precision, answer relevance, and truthfulness) for a RAG solution in the context of Amazon Q Business.

Context recall

Let’s examine the following problems and troubleshooting tips:

- Aggressive query filtering – Overly strict search filters or metadata constraints might exclude relevant records. You should review the metadata filters or boosting settings applied in Amazon Q Business to make sure they don’t unnecessarily restrict results.

- Data source ingestion errors – Documents from certain data sources aren’t successfully ingested into Amazon Q Business. To address this, check the document sync history report in Amazon Q Business to confirm successful ingestion and resolve ingestion errors.

Context precision

Consider the following potential issues:

- Over-retrieval of documents – Large top-K values might retrieve semi-related or off-topic passages, which the LLM might incorporate unnecessarily. To address this, refine metadata filters or apply boosting to improve passage relevance and reduce noise in the retrieved context.

- Poor query specificity – Broad or poorly formed user queries can yield loosely related results. You should make sure user queries are clear and specific. Train users or implement query refinement mechanisms to optimize query quality.

Answer relevance

Consider the following troubleshooting methods:

- Partial coverage – Retrieved context addresses parts of the question but fails to cover all aspects, especially in multi-part queries. To address this, decompose complex queries into sub-questions. Instruct the LLM or a dedicated module to retrieve and answer each sub-question before composing the final response. For example:

  - Break down the query into sub-questions.

  - Retrieve relevant passages for each sub-question.

  - Compose a final answer addressing each part.

- Context/answer mismatch – The LLM might misinterpret retrieved passages, omit relevant information, or merge content incorrectly because of hallucination. You can use prompt engineering to guide the LLM more effectively. For example, for the original query “What are the top 3 reasons for X?” you can use the rewritten prompt “List the top 3 reasons for X clearly labeled as #1, #2, and #3, based strictly on the retrieved context.”
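The decomposition approach described under partial coverage can be sketched as a small orchestration function. This is a sketch under stated assumptions: the split, retrieve, and answer callables are hypothetical placeholders that you would back with your own query splitter, retriever, and LLM call, and the stubs below exist only to make the example runnable.

```python
def answer_multi_part(question, split, retrieve, answer):
    """Answer each sub-question from its own passages, then join the parts."""
    parts = []
    for sub_question in split(question):
        passages = retrieve(sub_question)  # retrieval scoped to one sub-question
        parts.append(f"{sub_question}\n{answer(sub_question, passages)}")
    return "\n\n".join(parts)


# Stub implementations, for illustration only.
def split_on_semicolon(question):
    return [part.strip() for part in question.split(";") if part.strip()]


def fake_retrieve(sub_question):
    return [f"passage about {sub_question}"]


def fake_answer(sub_question, passages):
    return f"{len(passages)} passage(s) used"


print(answer_multi_part("What is A?; What is B?", split_on_semicolon, fake_retrieve, fake_answer))
```

Passing the three steps in as functions keeps the control flow independent of any particular retriever or model, so each step can be swapped or tested separately.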

Truthfulness

Consider the following:

- Stale or inaccurate data sources – Outdated or conflicting information in the knowledge corpus might lead to incorrect answers. To address this, compare the retrieved context with verified sources to confirm accuracy. Collaborate with SMEs to validate the data.

- LLM hallucination – The model might fabricate or embellish details, even with accurate retrieved context. Although Amazon Q Business is a RAG generative AI solution and can significantly reduce hallucination, it’s not possible to eliminate hallucination entirely. You can use the evaluation solution to measure the frequency of low context precision answers, which helps you identify patterns, quantify the impact of hallucinations, and gain an aggregated view.
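An aggregated view of this kind can be as simple as the share of evaluated answers whose score falls below a cutoff. The following sketch computes that rate for any metric; the function name, the result layout, and the 0.5 threshold are assumptions chosen for illustration, and the scores are made up.

```python
def low_score_rate(results, metric, threshold=0.5):
    """Return the fraction of results whose score for `metric` is below threshold.

    Results that have no score for the metric are ignored.
    """
    scores = [result[metric] for result in results if metric in result]
    if not scores:
        return 0.0
    return sum(1 for score in scores if score < threshold) / len(scores)


# Made-up evaluation results for illustration.
results = [
    {"context_precision": 0.9, "truthfulness": 1.0},
    {"context_precision": 0.2, "truthfulness": 0.4},
    {"context_precision": 0.4, "truthfulness": 0.8},
    {"truthfulness": 1.0},  # no context precision score recorded
]
print(low_score_rate(results, "context_precision"))
print(low_score_rate(results, "truthfulness"))
```

Tracking this rate across evaluation runs shows whether changes to data sources, filters, or prompts are reducing the number of problematic answers.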

By systematically analyzing and addressing the root causes of low evaluation metrics, you can optimize your Amazon Q Business application. From document retrieval and ranking to prompt engineering and validation, these strategies will help enhance the effectiveness of your RAG solution.

Clean up

Don’t forget to return to the CloudFormation console and delete the CloudFormation stack to remove the underlying infrastructure that you set up, to avoid additional costs in your AWS account.

Conclusion

In this post, we outlined two evaluation solutions for Amazon Q Business: a comprehensive evaluation workflow and a lightweight Lambda based evaluation. These approaches combine automated evaluation methods such as Ragas with human-in-the-loop validation, providing reliable and accurate assessments.

By using our guidance on how to improve evaluation metrics, you can continuously optimize your Amazon Q Business application to meet enterprise needs. Whether you’re using the end-to-end solution or the lightweight approach, these frameworks provide a scalable and efficient path to improve accuracy and relevance.

To learn more about Amazon Q Business and how to evaluate Amazon Q Business results, explore these hands-on workshops:

About the authors

Rui Cardoso is a partner solutions architect at Amazon Web Services (AWS). He focuses on AI/ML and IoT. He works with AWS Partners and supports them in developing solutions on AWS. When not working, he enjoys cycling, hiking, and learning new things.

Julia Hu is a Sr. AI/ML Solutions Architect at Amazon Web Services. She specializes in generative AI, applied data science, and IoT architecture. Currently she is part of the Amazon Bedrock team, and a Gold member/mentor in the Machine Learning Technical Field Community. She works with customers, ranging from start-ups to enterprises, to develop AWSome generative AI solutions. She is particularly passionate about leveraging large language models for advanced data analytics and exploring practical applications that address real-world challenges.

Amit Gupta is a Senior Q Business Solutions Architect at AWS. He is passionate about enabling customers with well-architected generative AI solutions at scale.

Neil Desai is a technology executive with over 20 years of experience in artificial intelligence (AI), data science, software engineering, and enterprise architecture. At AWS, he leads a team of Worldwide AI services specialist solutions architects who help customers build innovative generative AI-powered solutions, share best practices with customers, and drive product roadmap. He is passionate about using technology to solve real-world problems and is a strategic thinker with a proven track record of success.

Ricardo Aldao is a Senior Partner Solutions Architect at AWS. He is a passionate AI/ML enthusiast who focuses on supporting partners in building generative AI solutions on AWS.
