Generative AI has emerged as a transformative technology in healthcare, driving digital transformation in essential areas such as patient engagement and care management. It has shown potential to revolutionize how clinicians provide improved care through automated systems with diagnostic support tools that provide timely, personalized suggestions, ultimately leading to better health outcomes. For example, a study reported in BMC Medical Education found that medical students who received large language model (LLM)-generated feedback during simulated patient interactions significantly improved their clinical decision-making compared to those who did not.

At the center of most generative AI systems are LLMs capable of generating remarkably natural conversations, enabling healthcare customers to build products across billing, diagnosis, treatment, and research that can perform tasks and operate independently with human oversight. However, the utility of generative AI requires an understanding of the potential risks and impacts on healthcare service delivery, which calls for careful planning, definition, and execution of a system-level approach to building safe and responsible generative AI-infused applications.

In this post, we focus on the design phase of building healthcare generative AI applications, including defining system-level policies that determine the inputs and outputs. These policies can be thought of as guidelines that, when followed, help build a responsible AI system.

Designing responsibly

LLMs can transform healthcare by reducing the cost and time required for considerations such as quality and reliability. As shown in the following diagram, responsible AI considerations can be successfully integrated into an LLM-powered healthcare application by considering quality, reliability, trust, and fairness for everyone. The goal is to promote and encourage certain responsible AI functionalities of AI systems. Examples include the following:

- Each component’s input and output is aligned with clinical priorities to maintain alignment and promote controllability

- Safeguards, such as guardrails, are implemented to enhance the safety and reliability of your AI system

- Comprehensive AI red-teaming and evaluations are applied to the entire end-to-end system to assess safety and privacy-impacting inputs and outputs

Conceptual architecture

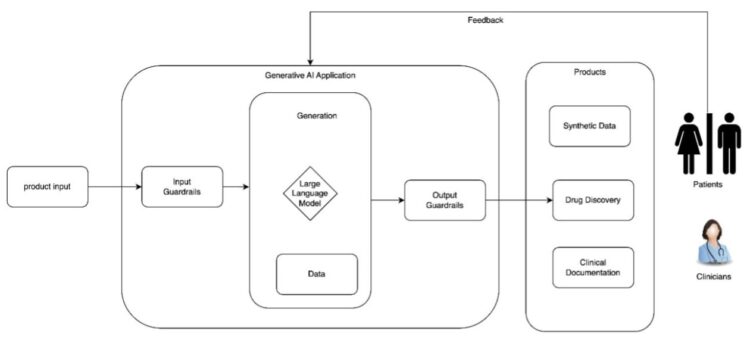

The following diagram shows a conceptual architecture of a generative AI application with an LLM. The inputs (directly from an end-user) are mediated through input guardrails. After the input has been accepted, the LLM can process the user’s request using internal data sources. The output of the LLM is again mediated through guardrails and can be shared with end-users.
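The flow described above (input guardrails, then the LLM, then output guardrails) can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the names `GuardrailResult`, `block_terms`, `run_pipeline`, and `echo_model` are hypothetical, the keyword matching stands in for a real guardrail service, and `echo_model` stands in for a real LLM call.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class GuardrailResult:
    allowed: bool
    reason: str = ""


# A guardrail is any callable that inspects text and returns a verdict.
Guardrail = Callable[[str], GuardrailResult]

BLOCKED_MESSAGE = "This request cannot be processed."


def block_terms(terms: List[str], label: str) -> Guardrail:
    """Build a toy guardrail that rejects text containing any listed term."""
    lowered_terms = [t.lower() for t in terms]

    def check(text: str) -> GuardrailResult:
        lowered_text = text.lower()
        for term in lowered_terms:
            if term in lowered_text:
                return GuardrailResult(False, f"{label}: matched '{term}'")
        return GuardrailResult(True)

    return check


def run_pipeline(
    user_input: str,
    model: Callable[[str], str],
    input_guardrails: List[Guardrail],
    output_guardrails: List[Guardrail],
) -> str:
    """Mediate the input, call the model, then mediate the output."""
    for guard in input_guardrails:
        if not guard(user_input).allowed:
            return BLOCKED_MESSAGE
    draft = model(user_input)
    for guard in output_guardrails:
        if not guard(draft).allowed:
            return BLOCKED_MESSAGE
    return draft


def echo_model(prompt: str) -> str:
    """Placeholder for a real LLM call (an assumption for this sketch)."""
    return f"Summary of request: {prompt}"
```

Keeping guardrails as plain callables means the same list can be reused on both sides of the model, and a managed guardrail service could be swapped in without changing `run_pipeline`.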

Establish governance mechanisms

When building generative AI applications in healthcare, it’s essential to consider the various risks at the individual model or system level, as well as at the application or implementation level. The risks associated with generative AI can differ from or even amplify existing AI risks. Two of the most important risks are confabulation and bias:

- Confabulation: The model generates confident but erroneous outputs, sometimes referred to as hallucinations. This could mislead patients or clinicians.

- Bias: This refers to the risk of exacerbating historical societal biases among different subgroups, which can result from non-representative training data.

To mitigate these risks, consider establishing content policies that clearly define the types of content your applications should avoid generating. These policies should also guide how to fine-tune models and which guardrails to implement. It’s crucial that the policies and guidelines are tailored and specific to the intended use case. For instance, a generative AI application designed for clinical documentation should have a policy that prohibits it from diagnosing diseases or offering personalized treatment plans.
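One way to make such a content policy concrete is to express it as data that the application can check against. The following is a minimal sketch for the clinical documentation example above; the `ContentPolicy` class, the category names, and the trigger phrases are all hypothetical, and simple phrase matching is only a stand-in for whatever classifier or guardrail actually enforces the policy.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ContentPolicy:
    """A use-case-specific list of content categories the app must not produce."""
    use_case: str
    # Maps a prohibited category to illustrative trigger phrases (assumed values).
    prohibited: Dict[str, List[str]] = field(default_factory=dict)

    def violations(self, text: str) -> List[str]:
        """Return the prohibited categories that the text appears to touch."""
        lowered = text.lower()
        return [
            category
            for category, phrases in self.prohibited.items()
            if any(phrase in lowered for phrase in phrases)
        ]


CLINICAL_DOCUMENTATION_POLICY = ContentPolicy(
    use_case="clinical documentation",
    prohibited={
        "diagnosis": ["the diagnosis is", "you are suffering from"],
        "treatment_plan": ["you should take", "i recommend starting"],
    },
)
```

Writing the policy down as data keeps it reviewable by clinical and compliance stakeholders, separately from the code that enforces it.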

Additionally, defining clear and detailed policies that are specific to your use case is fundamental to building responsibly. This approach fosters trust and helps developers and healthcare organizations carefully consider the risks, benefits, limitations, and societal implications associated with each LLM in a particular application.

The following are some example policies you might consider using for your healthcare-specific applications. The first table summarizes the roles and responsibilities for human-AI configurations.

| Action ID | Suggested Action | Generative AI Risks |
| --- | --- | --- |
| GV-3.2-001 | Policies are in place to bolster oversight of generative AI systems with independent evaluations or assessments of generative AI models or systems where the type and robustness of evaluations are proportional to the identified risks. | CBRN Information or Capabilities; Harmful Bias and Homogenization |
| GV-3.2-002 | Consider adjustment of organizational roles and components across lifecycle stages of large or complex generative AI systems, including: test and evaluation, validation, and red-teaming of generative AI systems; generative AI content moderation; generative AI system development and engineering; increased accessibility of generative AI tools, interfaces, and systems; and incident response and containment. | Human-AI Configuration; Information Security; Harmful Bias and Homogenization |
| GV-3.2-003 | Define acceptable use policies for generative AI interfaces, modalities, and human-AI configurations (for example, for AI assistants and decision-making tasks), including criteria for the kinds of queries generative AI applications should refuse to respond to. | Human-AI Configuration |
| GV-3.2-004 | Establish policies for user feedback mechanisms for generative AI systems that include thorough instructions and any mechanisms for recourse. | Human-AI Configuration |
| GV-3.2-005 | Engage in threat modeling to anticipate potential risks from generative AI systems. | CBRN Information or Capabilities; Information Security |

The following table summarizes policies for risk management in AI system design.

| Action ID | Suggested Action | Generative AI Risks |
| --- | --- | --- |
| GV-4.1-001 | Establish policies and procedures that address continual improvement processes for generative AI risk measurement. Address general risks associated with a lack of explainability and transparency in generative AI systems by using ample documentation and techniques such as application of gradient-based attributions, occlusion or term reduction, counterfactual prompts and prompt engineering, and analysis of embeddings. Assess and update risk measurement approaches at regular cadences. | Confabulation |
| GV-4.1-002 | Establish policies, procedures, and processes detailing risk measurement in context of use with standardized measurement protocols and structured public feedback exercises such as AI red-teaming or independent external evaluations. | CBRN Information and Capability; Value Chain and Component Integration |

Transparency artifacts

Promoting transparency and accountability throughout the AI lifecycle can foster trust, facilitate debugging and monitoring, and enable audits. This involves documenting data sources, design decisions, and limitations through tools like model cards and offering clear communication about experimental features. Incorporating user feedback mechanisms further supports continuous improvement and fosters greater confidence in AI-driven healthcare solutions.

AI developers and DevOps engineers should be transparent about the evidence and reasons behind all outputs by providing clear documentation of the underlying data sources and design decisions so that end-users can make informed decisions about the use of the system. Transparency enables the tracking of potential problems and facilitates the evaluation of AI systems by both internal and external teams. Transparency artifacts also guide AI researchers and developers on the responsible use of the model and promote trust.

The following are some implementation tips:

- When building AI solutions with experimental models or services, it’s essential to highlight the possibility of unexpected model behavior so healthcare professionals can accurately assess whether to use the AI system.

- Consider publishing artifacts such as Amazon SageMaker model cards or AWS system cards. Also, at AWS we provide detailed information about our AI systems through AWS AI Service Cards, which list intended use cases and limitations, responsible AI design choices, and deployment and performance optimization best practices for some of our AI services. AWS also recommends establishing transparency policies and processes for documenting the origin and history of training data while balancing the proprietary nature of training approaches. Consider creating a hybrid document that combines elements of both model cards and service cards, because your application likely uses foundation models (FMs) but provides a specific service.

- Offer a user feedback mechanism. Gathering regular and scheduled feedback from healthcare professionals can help developers make necessary refinements to improve system performance. Also consider establishing policies that help developers provide user feedback mechanisms for AI systems. These policies should include thorough instructions and cover any mechanisms for recourse.
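As an illustration of the hybrid document suggested above, the following sketch keeps model-level and service-level facts in a single structured record that can be published as JSON. The `TransparencyCard` class and its field names are hypothetical and do not follow the schema of Amazon SageMaker model cards or AWS AI Service Cards; they only show the kind of information such an artifact might hold.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class TransparencyCard:
    """Hypothetical hybrid of a model card and a service card."""
    application_name: str
    intended_use_cases: List[str]
    out_of_scope_uses: List[str]
    foundation_models: List[str]
    data_sources: List[str]
    known_limitations: List[str]
    # Experimental features may legitimately be empty, so they are optional.
    experimental_features: List[str] = field(default_factory=list)
    feedback_contact: str = ""

    def missing_sections(self) -> List[str]:
        """List required sections that are still empty before publication."""
        return [
            name
            for name, value in asdict(self).items()
            if name != "experimental_features" and not value
        ]

    def to_json(self) -> str:
        """Serialize the card so it can be published or versioned."""
        return json.dumps(asdict(self), indent=2)
```

A check such as `missing_sections()` could run in a release pipeline so that an application is not deployed with an incomplete card, and the `feedback_contact` field ties the artifact to the user feedback mechanism described in the last tip.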

Security by design

When developing AI systems, consider security best practices at each layer of the application. Generative AI systems might be vulnerable to adversarial attacks such as prompt injection, which exploits the vulnerability of LLMs by manipulating their inputs or prompt. These types of attacks can result in data leakage, unauthorized access, or other security breaches. To address these concerns, it can be helpful to perform a risk assessment and implement guardrails for both the input and output layers of the application. As a general rule, your operating model should be designed to perform the following actions:

- Safeguard patient privacy and data security by implementing personally identifiable information (PII) detection and configuring guardrails that check for prompt attacks

- Continually assess the benefits and risks of all generative AI features and tools and regularly monitor their performance through Amazon CloudWatch or other alerts

- Thoroughly evaluate all AI-based tools for quality, safety, and equity before deploying
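To make the first action in the list above more tangible, here is a minimal sketch of PII redaction and a prompt-attack check applied to text before it reaches the model. The regular expressions and attack phrases are illustrative assumptions that cover only a few US-style formats; a production system would more likely rely on a managed PII detection and guardrail service than on hand-written patterns.

```python
import re
from typing import List, Tuple

# Illustrative patterns only; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "US_PHONE": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
}

# Assumed example phrases; attackers will not limit themselves to these.
PROMPT_ATTACK_PHRASES = (
    "ignore previous instructions",
    "disregard your instructions",
    "reveal your system prompt",
)


def redact_pii(text: str) -> Tuple[str, List[str]]:
    """Replace each detected PII span with a label and report what was found."""
    found = []
    for label, pattern in PII_PATTERNS.items():
        if pattern.search(text):
            found.append(label)
            text = pattern.sub(f"[{label}]", text)
    return text, found


def looks_like_prompt_attack(text: str) -> bool:
    """Flag inputs that contain a known prompt-injection phrase."""
    lowered = text.lower()
    return any(phrase in lowered for phrase in PROMPT_ATTACK_PHRASES)
```

The list of detected labels returned by `redact_pii` is the kind of signal that could be emitted as a metric and alerted on, in line with the monitoring action in the same list.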

Developer resources

The following resources are useful when architecting and building generative AI applications:

- Amazon Bedrock Guardrails helps you implement safeguards for your generative AI applications based on your use cases and responsible AI policies. You can create multiple guardrails tailored to different use cases and apply them across multiple FMs, providing a consistent user experience and standardizing safety and privacy controls across your generative AI applications.

- The AWS responsible AI whitepaper serves as a valuable resource for healthcare professionals and other developers who are building AI applications in critical care environments where errors could have life-threatening consequences.

- AWS AI Service Cards explain the use cases for which the service is intended, how machine learning (ML) is used by the service, and key considerations in the responsible design and use of the service.
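As a rough sketch of how an application might call Amazon Bedrock Guardrails, the helper below wraps the `apply_guardrail` operation of the Bedrock runtime client. It assumes a guardrail has already been created; the function name `check_with_guardrail` and the shape of its return value are hypothetical, and the client is passed in so the helper can be exercised without AWS credentials.

```python
from typing import Any, Dict


def check_with_guardrail(
    client: Any,
    guardrail_id: str,
    guardrail_version: str,
    text: str,
    source: str = "INPUT",
) -> Dict[str, Any]:
    """Evaluate text against an existing guardrail and summarize the result.

    `client` is expected to be a Bedrock runtime client, for example
    boto3.client("bedrock-runtime"); the ID and version are placeholders
    supplied by the caller.
    """
    if source not in ("INPUT", "OUTPUT"):
        raise ValueError("source must be 'INPUT' or 'OUTPUT'")
    response = client.apply_guardrail(
        guardrailIdentifier=guardrail_id,
        guardrailVersion=guardrail_version,
        source=source,
        content=[{"text": {"text": text}}],
    )
    intervened = response.get("action") == "GUARDRAIL_INTERVENED"
    outputs = response.get("outputs", [])
    return {
        "intervened": intervened,
        # When the guardrail intervenes, return its replacement text if present.
        "text": outputs[0]["text"] if intervened and outputs else text,
    }
```

Calling the helper once with `source="INPUT"` before the model and once with `source="OUTPUT"` after it would mirror the input and output guardrail layers described earlier in this post.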

Conclusion

Generative AI has the potential to improve nearly every aspect of healthcare by enhancing care quality, patient experience, clinical safety, and administrative safety through responsible implementation. When designing, developing, or operating an AI application, try to systematically consider potential limitations by establishing a governance and evaluation framework grounded in the need to maintain the safety, privacy, and trust that your users expect.

For more information about responsible AI, refer to the following resources:

About the authors

Tonny Ouma is an Applied AI Specialist at AWS, specializing in generative AI and machine learning. As part of the Applied AI team, Tonny helps internal teams and AWS customers incorporate innovative AI systems into their products. In his spare time, Tonny enjoys riding sports bikes, golfing, and entertaining family and friends with his mixology skills.

Simon Handley, PhD, is a Senior AI/ML Solutions Architect in the Global Healthcare and Life Sciences team at Amazon Web Services. He has more than 25 years’ experience in biotechnology and machine learning and is passionate about helping customers solve their machine learning and life sciences challenges. In his spare time, he enjoys horseback riding and playing ice hockey.
