In this post, we explore how generative AI can revolutionize threat modeling practices by automating vulnerability identification, generating comprehensive attack scenarios, and providing contextual mitigation strategies. Earlier automation attempts struggled with the creative and contextual aspects of threat analysis. Generative AI overcomes these limitations through its ability to understand complex system relationships, reason about novel attack vectors, and adapt to unique architectural patterns. Traditional automation tools relied on rigid rule sets and predefined templates. AI models can now interpret nuanced system designs, infer security implications across components, and generate threat scenarios that human analysts might overlook, which makes effective automated threat modeling a practical reality.

Threat modeling and why it matters

Threat modeling is a structured approach to identifying, quantifying, and addressing security risks associated with an application or system. It involves analyzing the architecture from an attacker's perspective to discover potential vulnerabilities, determine their impact, and implement appropriate mitigations. Effective threat modeling examines data flows, trust boundaries, and potential attack vectors to create a comprehensive security strategy tailored to the specific system.

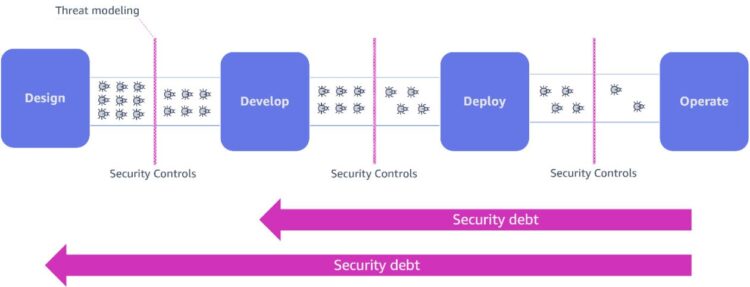

In a shift-left approach to security, threat modeling serves as a critical early intervention. By performing threat modeling during the design phase, before a single line of code is written, organizations can identify and address potential vulnerabilities at their point of origin. The following diagram illustrates this workflow.

This proactive strategy significantly reduces the accumulation of security debt and turns security from a bottleneck into an enabler of innovation. When security considerations are integrated from the start, teams can implement appropriate controls throughout the development lifecycle, resulting in more resilient systems built from the ground up.

Despite these clear benefits, threat modeling remains underused in the software development industry. This limited adoption stems from several significant challenges inherent to traditional threat modeling approaches:

- Time requirements – The process takes 1–8 days to complete, with multiple iterations needed for full coverage. This conflicts with the tight development timelines of modern software environments.

- Inconsistent assessment – Threat modeling suffers from subjectivity. Security experts often differ in the threats they identify and the risk levels they assign, creating inconsistencies across projects and teams.

- Scaling limitations – Manual threat modeling can't keep pace with modern system complexity. The growth of microservices, cloud deployments, and system dependencies outpaces security teams' capacity to identify vulnerabilities.

How generative AI can help

Generative AI has transformed threat modeling by automating traditionally complex analytical tasks that required human judgment, reasoning, and expertise. It combines natural language processing with visual analysis, so it can evaluate system architectures, diagrams, and documentation together. Drawing on extensive security knowledge bases such as MITRE ATT&CK and OWASP, these models can quickly identify potential vulnerabilities across complex systems. Because they process both text and visuals while referencing established security frameworks, they enable faster, more thorough threat assessments than traditional manual methods.

Our solution, Threat Designer, uses enterprise-grade foundation models (FMs) available in Amazon Bedrock to transform threat modeling. Using the advanced multimodal capabilities of Anthropic's Claude 3.7 Sonnet, we create comprehensive threat assessments at scale. You can also use other models from the model catalog or your own fine-tuned model, giving you the flexibility to use pre-trained expertise or capabilities tailored to your security domain and organizational requirements. This adaptability helps your threat modeling solution deliver precise insights aligned with your unique security posture.
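The following is a minimal sketch, not Threat Designer's actual code, of how an architecture diagram and its description might be sent to a model through the Amazon Bedrock Converse API with boto3. The model ID, prompt text, and helper names are assumptions; changing the `model_id` argument is how you would try a different model from the catalog.

```python
"""Sketch: sending an architecture diagram to a Bedrock model via the Converse API.

Assumptions (not taken from Threat Designer): the model ID, the prompt wording,
and the helper names are illustrative only.
"""
from typing import Any

# Assumed cross-Region inference profile ID for Claude 3.7 Sonnet; check the
# Amazon Bedrock console for the IDs enabled in your account and Region.
DEFAULT_MODEL_ID = "us.anthropic.claude-3-7-sonnet-20250219-v1:0"

SUPPORTED_FORMATS = {"png", "jpeg", "gif", "webp"}


def build_diagram_message(image_bytes: bytes, image_format: str,
                          description: str) -> list[dict[str, Any]]:
    """Build a Converse API `messages` list pairing the diagram with a text prompt."""
    if image_format not in SUPPORTED_FORMATS:
        raise ValueError(f"unsupported image format: {image_format}")
    return [
        {
            "role": "user",
            "content": [
                # With an AWS SDK, raw image bytes are passed; the SDK handles encoding.
                {"image": {"format": image_format, "source": {"bytes": image_bytes}}},
                {"text": "Identify the system assets shown in this architecture diagram."
                         f"\n\nDescription: {description}"},
            ],
        }
    ]


def analyze_diagram(image_bytes: bytes, image_format: str, description: str,
                    model_id: str = DEFAULT_MODEL_ID) -> str:
    """Call Bedrock and return the model's text reply (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the message builder works without boto3

    client = boto3.client("bedrock-runtime")
    response = client.converse(
        modelId=model_id,
        messages=build_diagram_message(image_bytes, image_format, description),
        inferenceConfig={"maxTokens": 4096},
    )
    # The reply is a list of content blocks under output.message.content.
    return "".join(block.get("text", "") for block in response["output"]["message"]["content"])
```

Calling `analyze_diagram` requires boto3, AWS credentials, and model access enabled in your Region; `build_diagram_message` can be exercised on its own.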

Solution overview

Threat Designer is a user-friendly web application that makes advanced threat modeling accessible to development and security teams. It uses large language models (LLMs) to streamline the threat modeling process and identify vulnerabilities with minimal human effort.

Key features include:

- Architecture diagram analysis – Users can submit system architecture diagrams, which the application processes using multimodal AI capabilities to understand system components and their relationships

- Interactive threat catalog – The system generates a comprehensive catalog of potential threats that users can explore, filter, and refine through an intuitive interface

- Iterative refinement – With the replay functionality, teams can rerun the threat modeling process after design improvements or modifications and see how the changes affect the system's security posture

- Standardized exports – Results can be exported in PDF or DOCX format, facilitating integration with existing security documentation and compliance processes

- Serverless architecture – The solution runs on cloud-based serverless infrastructure, removing the need for dedicated servers and scaling automatically based on demand
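To make the replay idea concrete, the following hypothetical sketch compares the threat catalogs from two runs and reports which threats a design change resolved, introduced, or left unchanged. The `Threat` fields and the exact-match comparison are assumptions; Threat Designer's own catalog schema may differ.

```python
"""Hypothetical sketch: comparing the threat catalogs of two replay runs."""
from dataclasses import dataclass
from operator import attrgetter


@dataclass(frozen=True)  # frozen makes instances hashable, so they can go in sets
class Threat:
    name: str    # short threat title (assumed field)
    target: str  # affected asset or component (assumed field)


def diff_catalogs(before: list[Threat], after: list[Threat]) -> dict[str, list[Threat]]:
    """Report threats that a design change removed, introduced, or left in place."""
    before_set, after_set = set(before), set(after)
    key = attrgetter("target", "name")  # deterministic ordering for display
    return {
        "resolved": sorted(before_set - after_set, key=key),
        "introduced": sorted(after_set - before_set, key=key),
        "unchanged": sorted(before_set & after_set, key=key),
    }
```

Because LLM wording can vary between runs, exact matching on names is a simplification; a real comparison would likely need a more tolerant matching strategy.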

The following diagram illustrates the Threat Designer architecture.

The solution is built on a serverless stack, using AWS managed services for automatic scaling, high availability, and cost-efficiency. It consists of the following core components:

- Frontend – AWS Amplify hosts a ReactJS application built with the Cloudscape design system, providing the UI

- Authentication – Amazon Cognito manages the user pool, handling authentication flows and securing access to application resources

- API layer – Amazon API Gateway serves as the communication hub, providing proxy integration between frontend and backend services with request routing and authorization

- Data storage – We use the following services for storage:

  - Two Amazon DynamoDB tables:

    - The agent execution state table maintains processing state

    - The threat catalog table stores identified threats and vulnerabilities

  - An Amazon Simple Storage Service (Amazon S3) architecture bucket stores system diagrams and artifacts

- Generative AI – Amazon Bedrock provides the FM for threat modeling, analyzing architecture diagrams and identifying potential vulnerabilities

- Backend service – An AWS Lambda function contains the REST interface business logic, built using Powertools for AWS Lambda (Python)

- Agent service – Hosted on a Lambda function, the agent service runs asynchronously to manage threat analysis workflows, processing diagrams and maintaining execution state in DynamoDB
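The following hypothetical sketch shows how the backend Lambda function could hand work to the asynchronous agent: it validates the API Gateway proxy event, records an initial execution state, and invokes the agent function with `InvocationType="Event"` so the REST call returns immediately. The function name, payload fields, and status value are assumptions, and the AWS calls are injected so the logic can be tested without AWS.

```python
"""Hypothetical sketch of a backend route that starts a threat model asynchronously.

The agent function name, payload fields, and "START" status are assumptions.
"""
import json
import uuid
from typing import Any, Callable

AGENT_FUNCTION_NAME = "threat-designer-agent"  # hypothetical function name


def start_threat_model(event: dict[str, Any], invoke: Callable[..., Any],
                       save_state: Callable[[dict[str, Any]], None]) -> dict[str, Any]:
    """Handle an API Gateway proxy event: record state, then invoke the agent."""
    body = json.loads(event.get("body") or "{}")
    if not body.get("title") or not body.get("s3_key"):
        return {"statusCode": 400,
                "body": json.dumps({"error": "title and s3_key are required"})}

    job_id = str(uuid.uuid4())
    # Persist an initial execution state (the post keeps this in DynamoDB).
    save_state({"job_id": job_id, "status": "START", "title": body["title"]})

    # InvocationType="Event" queues the invocation and returns without waiting.
    invoke(FunctionName=AGENT_FUNCTION_NAME, InvocationType="Event",
           Payload=json.dumps({**body, "job_id": job_id}))
    # 202 Accepted: the work continues in the agent service.
    return {"statusCode": 202, "body": json.dumps({"job_id": job_id})}
```

In a deployed function you might pass `invoke=boto3.client("lambda").invoke` and `save_state=lambda item: table.put_item(Item=item)`, where `table` is a boto3 DynamoDB `Table` resource for the execution state table.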

Agent service workflow

The agent service is built on LangGraph by LangChain, which lets us orchestrate complex workflows through a graph-based structure. This approach incorporates two key design patterns:

- Separation of concerns – The threat modeling process is decomposed into discrete, specialized steps that can be executed independently and iteratively. Each node in the graph represents a specific function, such as image processing, asset identification, data flow analysis, or threat enumeration.

- Structured output – Each component in the workflow produces standardized, well-defined outputs that serve as inputs to subsequent steps, which keeps results consistent and simplifies downstream integrations.
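As an illustration of the structured-output pattern, the following sketch parses a JSON reply from the asset identification step into typed objects and rejects malformed entries before they reach the next node. The field names are assumptions, not the solution's real schema.

```python
"""Sketch: validating one node's structured output before passing it downstream.

Field names are assumptions for illustration, not Threat Designer's schema.
"""
import json
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    description: str


@dataclass
class AssetsOutput:
    assets: list[Asset]


def parse_assets(raw: str) -> AssetsOutput:
    """Parse the model's JSON reply and fail fast if required fields are missing."""
    data = json.loads(raw)
    assets = []
    for item in data["assets"]:
        if not item.get("name"):
            raise ValueError("asset without a name")
        assets.append(Asset(name=item["name"], description=item.get("description", "")))
    return AssetsOutput(assets=assets)
```

LangChain chat models also provide `with_structured_output`, which binds a schema to the model call; plain dataclasses are used here only to keep the sketch dependency-free.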

The agent workflow follows a directed graph in which processing begins at the Start node and proceeds through multiple specialized stages, as illustrated in the following diagram.

The workflow includes the following nodes:

- Image processing – The Image processing node processes the architecture diagram image and converts it into the appropriate format for the LLM to consume

- Assets – The processed image, together with the textual descriptions, feeds into the Assets node, which identifies and catalogs system components

- Flows – The workflow then progresses to the Flows node, which maps data movements and trust boundaries between components

- Threats – Finally, the Threats node uses this information to identify potential vulnerabilities and attack vectors
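The following plain-Python sketch mirrors the linear part of this workflow. It is not the LangGraph API: each node function receives the shared state and returns a partial update that is merged into it, which is similar in spirit to how LangGraph nodes update graph state. The node bodies return placeholder data instead of calling a model.

```python
"""Plain-Python stand-in for the graph's linear stages (not the LangGraph API).

Node bodies are placeholders; a real node would call the model.
"""
from typing import Any, Callable

State = dict[str, Any]
Node = Callable[[State], State]


def image_processing(state: State) -> State:
    # Placeholder: a real node would resize or encode the diagram for the model.
    return {"image_ready": True}


def assets(state: State) -> State:
    return {"assets": ["API Gateway", "Lambda", "DynamoDB"]}  # placeholder output


def flows(state: State) -> State:
    # Placeholder: treat consecutive assets as connected by a data flow.
    return {"flows": list(zip(state["assets"], state["assets"][1:]))}


def threats(state: State) -> State:
    return {"threats": [f"Unauthenticated access on {src} -> {dst}"
                        for src, dst in state["flows"]]}


def run_pipeline(state: State, nodes: list[Node]) -> State:
    """Run nodes in order, merging each partial update into the shared state."""
    for node in nodes:
        state = {**state, **node(state)}
    return state
```

In LangGraph itself, the same wiring would be expressed with a `StateGraph`, `add_node` and `add_edge` calls, and then `compile()` and `invoke()`.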

A key innovation in our agent architecture is the adaptive iteration mechanism implemented through conditional edges in the graph. This feature addresses one of the fundamental challenges in LLM-based threat modeling: controlling the comprehensiveness and depth of the analysis.

The conditional edge after the Threats node enables two operational modes:

- User-controlled iteration – In this mode, the user specifies the number of iterations the agent should perform. With each pass through the loop, the agent enriches the threat catalog by analyzing edge cases that might have been missed in previous iterations. This approach gives security professionals direct control over the thoroughness of the analysis.

- Autonomous gap analysis – In fully agentic mode, a specialized gap analysis component evaluates the current threat catalog. It identifies potential blind spots or underdeveloped areas in the threat model and triggers additional iterations until it determines that the catalog is sufficiently comprehensive. In effect, the agent performs its own quality assurance, refining its output until it meets predefined completeness criteria.
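A minimal sketch of the routing function behind this conditional edge might look like the following. The state keys, the `"threats"` and `"end"` labels, the injected `gap_analysis` callable, and the `MAX_AUTO_ITERATIONS` cap on autonomous mode are all assumptions made for illustration.

```python
"""Sketch of the routing decision on the conditional edge after the Threats node.

State keys, return labels, and the iteration cap are assumptions.
"""
from typing import Any, Callable

MAX_AUTO_ITERATIONS = 15  # assumed safety cap so autonomous mode always terminates


def route_after_threats(state: dict[str, Any],
                        gap_analysis: Callable[[list[str]], list[str]]) -> str:
    """Return "threats" to run another pass, or "end" to finish."""
    done = state["iteration"]
    if state["mode"] == "manual":
        # User-controlled: loop until the requested number of passes is reached.
        return "threats" if done < state["max_iterations"] else "end"
    # Autonomous: keep iterating while gap analysis still reports blind spots.
    if done >= MAX_AUTO_ITERATIONS:
        return "end"
    return "threats" if gap_analysis(state["threats"]) else "end"
```

With LangGraph, a function like this would typically be registered through `add_conditional_edges` on the Threats node, using `functools.partial` to bind the gap analysis callable so the router takes only the state.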

Prerequisites

Before you deploy Threat Designer, make sure you have the required prerequisites in place. For more information, refer to the GitHub repo.

Get started with Threat Designer

To start using Threat Designer, follow the step-by-step deployment instructions in the project's README on GitHub. After you deploy the solution, you're ready to create your first threat model. Log in and complete the following steps:

- Choose Submit threat model to initiate a new threat model.

- Complete the submission form with your system details:

  - Required fields: Provide a title and an architecture diagram image.

  - Recommended fields: Provide a solution description and assumptions (these significantly improve the quality of the threat model).

- Configure analysis parameters:

  - Choose your iteration mode:

    - Auto (default): The agent determines when the threat catalog is comprehensive.

    - Manual: Specify up to 15 iterations for more control.

  - Configure the reasoning boost to specify how much time the model spends on analysis (available when using Anthropic's Claude 3.7 Sonnet).

- Choose Start threat modeling to launch the analysis.
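The form rules in these steps can be summarized as a small validation function. This is a hypothetical sketch: the field names are assumptions, the limits follow the steps listed (title and diagram required, auto mode by default, manual mode capped at 15 iterations), and the reasoning boost setting is left out.

```python
"""Hypothetical validation of a threat model submission (field names assumed)."""
from dataclasses import dataclass, field

MAX_MANUAL_ITERATIONS = 15


@dataclass
class Submission:
    title: str
    diagram_key: str  # e.g., S3 key of the uploaded diagram (assumed field)
    description: str = ""  # recommended
    assumptions: list[str] = field(default_factory=list)  # recommended
    mode: str = "auto"  # "auto" (default) or "manual"
    iterations: int = 0  # only used in manual mode


def validate(sub: Submission) -> list[str]:
    """Return a list of problems; an empty list means the analysis can start."""
    errors = []
    if not sub.title.strip():
        errors.append("title is required")
    if not sub.diagram_key:
        errors.append("architecture diagram is required")
    if sub.mode not in ("auto", "manual"):
        errors.append("mode must be 'auto' or 'manual'")
    elif sub.mode == "manual" and not 1 <= sub.iterations <= MAX_MANUAL_ITERATIONS:
        errors.append(f"manual mode needs 1-{MAX_MANUAL_ITERATIONS} iterations")
    return errors
```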

You can monitor progress through the interface, which displays each execution step in real time. A complete analysis typically takes between 5 and 15 minutes, depending on system complexity and the selected parameters.
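If you script against the solution instead of watching the UI, a polling loop like the following hypothetical sketch could wait for completion. `fetch_status` stands in for a call to the solution's API, the `"COMPLETE"` and `"FAILED"` status names are assumptions, and the 20-minute default timeout is simply set above the typical 5–15 minute run time.

```python
"""Hypothetical client-side polling loop for a running threat model.

`fetch_status` stands in for an API call; status names are assumptions.
"""
import time
from typing import Callable

TERMINAL_STATUSES = ("COMPLETE", "FAILED")


def wait_for_completion(fetch_status: Callable[[], str], interval_s: float = 15.0,
                        timeout_s: float = 20 * 60,
                        sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll until a terminal status is returned or the timeout elapses."""
    waited = 0.0
    while True:
        status = fetch_status()
        if status in TERMINAL_STATUSES:
            return status
        if waited >= timeout_s:
            raise TimeoutError(f"still {status!r} after {waited:.0f}s")
        sleep(interval_s)  # injectable so tests don't actually wait
        waited += interval_s
```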

When the analysis is complete, you will have access to a comprehensive threat model that you can explore, refine, and export.

Clean up

To avoid incurring future charges, delete the solution by running the ./destroy.sh script. Refer to the README for more details.

Conclusion

In this post, we demonstrated how generative AI transforms threat modeling from an exclusive, expert-driven process into an accessible security practice for all development teams. By using FMs through our Threat Designer solution, we've democratized sophisticated security analysis, enabling organizations to identify vulnerabilities earlier and more consistently. This AI-powered approach removes the traditional barriers of time, expertise, and scalability. It makes shift-left security a practical reality rather than an aspiration, ultimately building more resilient systems without sacrificing development velocity.

Deploy Threat Designer by following the README instructions, upload your architecture diagram, and quickly receive AI-generated security insights. This streamlined approach helps you integrate proactive security measures into your development process without compromising speed or innovation, making comprehensive threat modeling accessible to teams of all sizes.

About the Authors

Edvin Hallvaxhiu is a senior security architect at Amazon Web Services, specializing in cybersecurity and automation. He helps customers design secure, compliant cloud solutions.

Sindi Cali is a consultant with AWS Professional Services. She supports customers in building data-driven applications on AWS.

Aditi Gupta is a Senior Global Engagement Manager at AWS ProServe. She specializes in delivering impactful big data and AI/ML solutions that help AWS customers maximize their business value through data.

Rahul Shaurya is a Principal Data Architect at Amazon Web Services. He works closely with customers building data platforms and analytical applications on AWS.
