I enjoyed reading this paper, not because I’ve met some of the authors before🫣, but because it felt necessary. Most of the papers I’ve written about so far have made waves in the broader ML community, which is great. This one, though, is unapologetically African (i.e. it solves a very African problem), and I think every African ML researcher, especially those interested in speech, needs to read it.

AccentFold tackles a specific issue many of us can relate to: current ASR systems just don’t work well for African-accented English. And it’s not for lack of trying.

Most existing approaches use techniques like multitask learning, domain adaptation, or fine-tuning with limited data, but they all hit the same wall: African accents are underrepresented in datasets, and collecting enough data for every accent is expensive and unrealistic.

Take Nigeria, for example. We have hundreds of local languages, and many people grow up speaking more than one. So when we speak English, the accent is shaped by how our local languages interact with it, through pronunciation, rhythm, and even switching mid-sentence. Across Africa, this only gets more complex.

Instead of chasing more data, this paper offers a smarter workaround: it introduces AccentFold, a method that learns accent embeddings from over 100 African accents. These embeddings capture deep linguistic relationships (phonological, syntactic, morphological), and help ASR systems generalize to accents they’ve never seen.

That idea alone makes this paper such an important contribution.

Related Work

One thing I found interesting in this section is how the authors positioned their work within recent advances in probing language models. Earlier research has shown that pre-trained speech models like DeepSpeech and XLSR already capture linguistic or accent-specific information in their embeddings, even without being explicitly trained for it. Researchers have used this to analyze language variation, detect dialects, and improve ASR systems with limited labeled data.

AccentFold builds on that idea but takes it further. The most closely related work also used model embeddings to support accented ASR, but AccentFold differs in two important ways.

- First, rather than just analyzing embeddings, the authors use them to guide the selection of training subsets. This helps the model generalize to accents it has not seen before.

- Second, they operate at a much larger scale, working with 41 African English accents. This is nearly twice the size of previous efforts.

The Dataset

The authors used AfriSpeech-200, a Pan-African speech corpus with over 200 hours of audio, 120 accents, and more than 2,000 unique speakers. One of the authors of this paper also helped build the dataset, which I think is really cool. According to them, it’s the most diverse dataset of African-accented English available for ASR to date.

What stood out to me was how the dataset is split. Out of the 120 accents, 41 appear only in the test set. This makes it ideal for evaluating zero-shot generalization. Since the model is never trained on these accents, the test results give a clear picture of how well it adapts to unseen accents.

What AccentFold Is

Like I mentioned earlier, AccentFold is built on the idea of using learned accent embeddings to guide adaptation. Before going further, it helps to explain what embeddings are. Embeddings are vector representations of complex data. They capture structure, patterns, and relationships in a way that lets us compare different inputs, in this case different accents. Each accent is represented as a point in a high-dimensional space, and accents that are linguistically or geographically related tend to be close together.

What makes this useful is that AccentFold doesn’t need explicit labels to know which accents are similar. The model learns that through the embeddings, which allows it to generalize even to accents it has not seen during training.

How AccentFold Works

The way it works is fairly simple. AccentFold is built on top of a large pre-trained speech model called XLSR. Instead of training it on just one task, the authors use multitask learning, which means the model is trained to do a few different things at once using the same input. It has three heads:

- An ASR head for speech recognition, converting speech to text. This is trained using CTC loss, which helps align audio with the correct word sequence.

- An accent classification head for predicting the speaker’s accent, trained with cross-entropy loss.

- A domain classification head for identifying whether the audio is clinical or general, also trained with cross-entropy but in a binary setting.

Each task helps the model learn better accent representations. For example, trying to classify accents teaches the model to recognize how people speak differently, which is essential for adapting to new accents.
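To make the three-headed setup a bit more concrete, here is a minimal sketch of how the three losses could be folded into one training objective. Everything specific in it is my own assumption for illustration: the function names, the equal loss weights, and the choice to pass the CTC loss in as an already-computed number. The paper's actual weighting is not covered in this summary.

```python
import math

def cross_entropy(logits, target_index):
    """Multi-class cross-entropy from raw logits (accent head)."""
    max_logit = max(logits)  # subtract the max for numerical stability
    log_sum_exp = max_logit + math.log(sum(math.exp(x - max_logit) for x in logits))
    return log_sum_exp - logits[target_index]

def binary_cross_entropy(logit, target):
    """Binary cross-entropy from a single raw logit (domain head)."""
    prob = 1.0 / (1.0 + math.exp(-logit))
    return -(target * math.log(prob) + (1 - target) * math.log(1.0 - prob))

def multitask_loss(ctc_loss, accent_logits, accent_target,
                   domain_logit, domain_target,
                   accent_weight=1.0, domain_weight=1.0):
    """Weighted sum of the three head losses. The weights are hypothetical."""
    accent_loss = cross_entropy(accent_logits, accent_target)
    domain_loss = binary_cross_entropy(domain_logit, domain_target)
    return ctc_loss + accent_weight * accent_loss + domain_weight * domain_loss

# Toy call: an uninformed model (all logits zero) over 3 accents and 2 domains.
total = multitask_loss(ctc_loss=2.0,
                       accent_logits=[0.0, 0.0, 0.0], accent_target=1,
                       domain_logit=0.0, domain_target=1)
```

Because all three terms are computed from the same encoder output, gradients from the accent and domain heads also shape the shared representation, which is the intuition behind the multitask setup.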

After training, the model creates a vector for each accent by averaging the encoder output. This is called mean pooling, and the result is the accent embedding.
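Mean pooling is easy to sketch in a few lines. The version below assumes a two-stage average (frames within an utterance, then utterances within an accent) and uses toy two-dimensional vectors; both the function names and the two-stage order are my assumptions, not details confirmed by the paper.

```python
from collections import defaultdict

def mean_vector(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    count = len(vectors)
    return [sum(column) / count for column in zip(*vectors)]

def accent_embeddings(utterances):
    """Build one embedding per accent.

    utterances: list of (accent_label, frames), where frames is a list of
    encoder output vectors, one per time step.
    """
    per_accent = defaultdict(list)
    for accent, frames in utterances:
        per_accent[accent].append(mean_vector(frames))  # pool over time
    # Pool over all utterances that share an accent label.
    return {accent: mean_vector(vectors) for accent, vectors in per_accent.items()}

# Toy data standing in for real encoder outputs.
toy_utterances = [
    ("yoruba", [[1.0, 2.0], [3.0, 4.0]]),
    ("yoruba", [[4.0, 5.0]]),
    ("zulu", [[0.0, 2.0], [2.0, 0.0]]),
]
embeddings = accent_embeddings(toy_utterances)
```

In a real pipeline the frames would be high-dimensional encoder outputs rather than two numbers, but the averaging itself is the same.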

When the model is asked to transcribe speech from a new accent it has not seen before, it finds accents with similar embeddings and uses their data to fine-tune the ASR system. So even without any labeled data from the target accent, the model can still adapt. That’s what makes AccentFold work in zero-shot settings.
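The "find accents with similar embeddings" step can be sketched as a nearest-neighbour lookup. I am assuming cosine similarity as the measure and that a target embedding can be computed from untranscribed audio; the accent names and vectors below are toy values, not numbers from the paper.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def most_similar_accents(target_embedding, pool, s):
    """Return the names of the s accents in pool closest to the target."""
    ranked = sorted(
        pool,
        key=lambda name: cosine_similarity(target_embedding, pool[name]),
        reverse=True,  # highest similarity first
    )
    return ranked[:s]

# Hypothetical embeddings for accents that do have training data.
pool = {
    "igbo": [0.9, 0.1],
    "hausa": [0.7, 0.3],
    "zulu": [0.1, 0.9],
    "swahili": [0.3, 0.7],
}
target = [1.0, 0.0]  # hypothetical embedding of the unseen accent
chosen = most_similar_accents(target, pool, s=2)
```

The data from the chosen accents would then form the fine-tuning set for the target accent.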

What Information Does AccentFold Capture

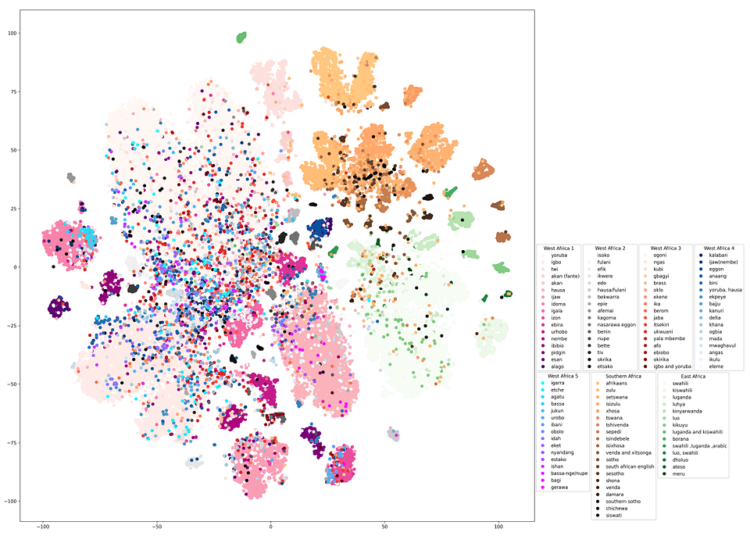

This section of the paper looks at what the accent embeddings are actually learning. Using a series of t-SNE plots, the authors explore whether AccentFold captures linguistic, geographical, and sociolinguistic structure. And honestly, the visuals speak for themselves.

1. Clusters Form, But Not Randomly

In Figure 2, each point is an accent embedding, colored by region. You immediately notice that the points are not scattered randomly. Accents from the same region tend to cluster. For example, the pinkish cluster on the left represents West African accents like Yoruba, Igbo, Hausa, and Twi. On the upper right, the orange cluster represents Southern African accents like Zulu, Xhosa, and Tswana.

What matters is not just that clusters form, but how tightly they do. Some are dense and compact, suggesting internal similarity. Others are more spread out. South African Bantu accents are grouped very closely, which suggests strong internal consistency. West African clusters are broader, likely reflecting the variation in how West African English is spoken, even within a single country like Nigeria.

2. Geography Is Not Just Visual. It Is Spatial

Figure 3 shows embeddings labeled by country. Nigerian accents, shown in orange, form a dense core. Ghanaian accents in blue are nearby, while Kenyan and Ugandan accents appear far from them in vector space.

There is nuance too. Rwanda, which has both Francophone and Anglophone influences, falls between clusters. It doesn’t fully align with East or West African embeddings. This reflects its mixed linguistic identity, and shows the model is learning something real.

3. Dual Accents Fall Between

Figure 4 shows embeddings for speakers who reported dual accents. Speakers who identified as Igbo and Yoruba fall between the Igbo cluster in blue and the Yoruba cluster in orange. Even more distinct combinations like Yoruba and Hausa land in between.

This shows that AccentFold is not just classifying accents. It’s learning how they relate. The model treats accent as something continuous and relational, which is what a good embedding should do.

4. Linguistic Families Are Reinforced and Sometimes Challenged

In Figure 9, the embeddings are colored by language family. Most Niger-Congo languages form one large cluster, as expected. But in Figure 10, where accents are grouped by family and region, something unexpected appears. Ghanaian Kwa accents are placed near South African Bantu accents.

This challenges common assumptions in classification systems like Ethnologue. AccentFold may be picking up on phonological or morphological similarities that aren’t captured by traditional labels.

5. Accent Embeddings Can Help Fix Labels

The authors also show that the embeddings can clean up mislabeled or ambiguous data. For example:

- Eleven Nigerian speakers labeled their accent as English, but their embeddings clustered with Berom, a local accent.

- Twenty speakers labeled their accent as Pidgin, but were placed closer to Ijaw, Ibibio, and Efik.

This means AccentFold is not only learning which accents exist, but also correcting noisy or vague input. That’s especially useful for real-world datasets where users often self-report inconsistently.
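One way to picture this kind of label clean-up is a nearest-centroid check: compare a speaker's embedding against each accent's embedding and suggest the closest specific accent. This is only a sketch of the idea; the Euclidean distance, the list of catch-all labels, and the toy vectors are my assumptions, and the summary above does not say how the authors measured closeness.

```python
import math

def euclidean(a, b):
    """Straight-line distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def suggest_label(speaker_embedding, accent_centroids,
                  catch_all_labels=("english", "pidgin")):
    """Suggest the nearest specific accent, skipping catch-all labels."""
    candidates = {
        name: centroid
        for name, centroid in accent_centroids.items()
        if name not in catch_all_labels
    }
    return min(candidates,
               key=lambda name: euclidean(speaker_embedding, candidates[name]))

# Hypothetical accent centroids in a 2-D embedding space.
centroids = {
    "berom": [0.0, 1.0],
    "ijaw": [1.0, 0.0],
    "english": [0.5, 0.5],
}
# A speaker who self-reported "english" but sits close to the Berom cluster.
suggestion = suggest_label([0.1, 0.8], centroids)
```

A suggestion like this is best treated as a flag for human review rather than an automatic relabel.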

Evaluating AccentFold: Which Accents Should You Pick

This section is one of my favorites because it frames a very practical problem. If you want to build an ASR system for a new accent but don’t have data for that accent, which accents should you use to train your model?

Let’s say you’re targeting the Afante accent. You have no labeled data from Afante speakers, but you do have a pool of speech data from other accents. Let’s call that pool A. Due to resource constraints like time, budget, and compute, you can only select s accents from A to build your fine-tuning dataset. In their experiments, they fix s at 20, meaning 20 accents are used to train for each target accent. So the question becomes: which 20 accents should you choose to help your model perform well on Afante?

Setup: How They Evaluate

To test this, the authors simulate the setup using 41 target accents from the AfriSpeech-200 dataset. These accents don’t appear in the training or development sets. For each target accent, they:

- Select a subset of s accents from A using one of three strategies

- Fine-tune the pre-trained XLS-R model using only data from those s accents

- Evaluate the model on a test set for that target accent

- Report the Word Error Rate, or WER, averaged over 10 epochs

The test set is the same across all experiments and consists of 108 accents from the AfriSpeech-200 test split. This ensures a fair comparison of how well each strategy generalizes to new accents.
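Since every result in this section is reported as WER, a quick reminder of how it is computed may help: it is the word-level edit distance between the reference transcript and the model output, divided by the number of reference words. The sketch below is a plain implementation of that standard definition; the function name and the example sentences are mine, and it assumes a non-empty reference.

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / reference word count."""
    ref = reference.split()
    hyp = hypothesis.split()
    # previous[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)

# One dropped word out of five reference words.
wer = word_error_rate("the patient has a fever", "the patient has fever")
```

Lower is better, and an absolute improvement of a few points means a few fewer wrong words per hundred reference words.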

The authors test three strategies for selecting training accents:

- Random Sampling: Pick s accents randomly from A. It’s simple but unguided.

- GeoProx: Select accents based on geographical proximity. They use geopy to find countries closest to the target and choose accents from there.

- AccentFold: Use the learned accent embeddings to select the s accents most similar to the target in representation space.
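The two baselines are simple enough to sketch. The paper uses geopy for distances; to keep this example free of dependencies I swap in a hand-written haversine formula, which is my substitution and not the authors' code. The accent-to-coordinate mapping uses rough country-centre values that I made up for illustration, and giving each accent a single location is a simplification of picking accents from the nearest countries.

```python
import math
import random

def haversine_km(a, b):
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (a[0], a[1], b[0], b[1]))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))

def random_subset(pool, s, seed=0):
    """Random Sampling baseline: s accents drawn uniformly from the pool."""
    return random.Random(seed).sample(sorted(pool), s)

def geoprox_subset(target_location, pool, s):
    """GeoProx-style baseline: the s accents located nearest to the target."""
    ranked = sorted(pool, key=lambda name: haversine_km(target_location, pool[name]))
    return ranked[:s]

# Hypothetical accent -> approximate (lat, lon) of its country's centre.
pool = {
    "yoruba": (9.1, 8.7),     # Nigeria
    "twi": (7.9, -1.0),       # Ghana
    "swahili": (-0.02, 37.9), # Kenya
    "zulu": (-30.6, 22.9),    # South Africa
}
target_location = (7.9, -1.0)  # hypothetical target accent spoken in Ghana

random_pick = random_subset(pool, s=2)
geo_pick = geoprox_subset(target_location, pool, s=2)
```

The AccentFold strategy has the same shape as `geoprox_subset`, except that the ranking key is similarity between accent embeddings instead of distance on a map.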

Table 1 shows that AccentFold outperforms both GeoProx and random sampling across all 41 target accents.

This results in about a 3.5 percent absolute improvement in WER compared to random selection, which is meaningful for low-resource ASR. AccentFold also has lower variance, meaning it performs more consistently. Random sampling has the highest variance, making it less reliable.

Does More Data Help

The paper asks a classic machine learning question: does performance keep improving as you add more training accents?

Figure 5 shows that WER improves as s increases, but only up to a point. After about 20 to 25 accents, the performance levels off.

So more data helps, but only to a point. What matters most is using the right data.

Key Takeaways

- AccentFold addresses a real African problem: ASR systems often fail on African-accented English because of limited and imbalanced datasets.

- The paper introduces accent embeddings that capture linguistic and geographic similarities without needing labeled data from the target accent.

- It formalizes a subset selection problem: given a new accent with no data, which other accents should you train on to get the best results?

- Three strategies are tested: random sampling, geographical proximity, and AccentFold using embedding similarity.

- AccentFold outperforms both baselines, with lower Word Error Rates and more consistent results.

- Embedding similarity beats geography. The closest accents in embedding space are not always geographically close, but they are more helpful.

- More data helps only up to a point. Performance improves at first, but levels off. You do not need all the data, just the right accents.

- Embeddings can help clean up noisy or mislabeled data, improving dataset quality.

- Limitation: results are based on one pre-trained model. Generalization to other models or languages is not tested.

- While this work focuses on African accents, the core method, learning from what models already know, could inspire more general approaches to adaptation in low-resource settings.

Source Note:

This article summarizes findings from the paper AccentFold: A Journey through African Accents for Zero-Shot ASR Adaptation to Target Accents by Owodunni et al. (2024). Figures and insights are sourced from the original paper, available at https://arxiv.org/abs/2402.01152.