Workflows that require dynamic, complex API orchestration are often difficult to manage. In industries like insurance, where unpredictable scenarios are the norm, traditional automation falls short, leading to inefficiencies and missed opportunities. With the power of intelligent agents, you can simplify these challenges. In this post, we explore how chaining domain-specific agents using Amazon Bedrock Agents can transform a system of complex API interactions into streamlined, adaptive workflows, empowering your business to operate with agility and precision.

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading artificial intelligence (AI) companies like AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon through a single API, along with a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI.

Benefits of chaining Amazon Bedrock Agents

Designing agents is like designing other software components: they tend to work best when they have a focused purpose. When you have focused, single-purpose agents, combining them into chains can allow them to solve significantly complex problems together. Using natural language processing (NLP) and OpenAPI specs, Amazon Bedrock Agents dynamically manages API sequences, minimizing dependency management complexities. Additionally, agents enable conversational context management in real-time scenarios, using session IDs and, if necessary, backend databases like Amazon DynamoDB for extended context storage. By using prompt instructions and API descriptions, agents collect essential information from API schemas to solve specific problems efficiently. This approach not only enhances agility and flexibility, but also demonstrates the value of chaining agents to simplify complex workflows and solve larger problems effectively.
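To make the session ID mechanism concrete, the following is a minimal sketch of sending a user turn to an agent and reading back the streamed reply. It assumes `client` behaves like `boto3.client("bedrock-agent-runtime")`; the helper names `ask_agent` and `new_session_id` are ours for illustration and are not part of the deployed solution.

```python
import uuid


def new_session_id():
    """Generate a fresh session ID for a new conversation."""
    return str(uuid.uuid4())


def ask_agent(client, agent_id, alias_id, session_id, text):
    """Send one user turn to an agent and return the concatenated reply text.

    `client` is assumed to behave like boto3.client("bedrock-agent-runtime").
    Reusing the same session_id across calls lets the agent keep context.
    """
    response = client.invoke_agent(
        agentId=agent_id,
        agentAliasId=alias_id,
        sessionId=session_id,
        inputText=text,
    )
    parts = []
    # The reply arrives as an event stream; reply text is carried in "chunk" events.
    for event in response["completion"]:
        chunk = event.get("chunk")
        if chunk:
            parts.append(chunk["bytes"].decode("utf-8"))
    return "".join(parts)
```

Calling `ask_agent` twice with the same `session_id` keeps both turns in one conversation, so a follow-up such as "add photos to that claim" can rely on the earlier turn. A new `session_id` starts a clean conversation.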

In this post, we explore an insurance claims use case, where we demonstrate the concept of chaining with Amazon Bedrock Agents. This involves an orchestrator agent calling and interacting with other agents to collaboratively perform a series of tasks, enabling efficient workflow management.

Solution overview

For our use case, we develop a workflow for an insurance digital assistant focused on streamlining tasks such as filing claims, assessing damages, and handling policy inquiries. The workflow simulates API sequencing dependencies, such as conducting fraud checks during claim creation and analyzing uploaded images for damage assessment if the user provides images. Using OpenAPI specifications and natural language prompts, the API sequencing adapts to dynamic user scenarios, such as users opting in or out of image uploads for damage assessment, failing fraud checks, or choosing to ask a variety of questions related to their insurance policies and coverages. This flexibility is achieved by chaining domain-specific agents: the insurance orchestrator agent, the policy information agent, and the damage analysis notification agent.
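As a rough illustration of how an OpenAPI specification can carry sequencing hints, the following sketch defines a tiny schema as a Python dictionary. Every path, `operationId`, and field name here is hypothetical and is not taken from the deployed solution. The point is that the natural language `description` fields are what an agent reads when deciding which API to call and in what order.

```python
import json


def json_request_body(properties):
    """Build an OpenAPI requestBody whose JSON object requires every listed property."""
    return {
        "required": True,
        "content": {"application/json": {"schema": {
            "type": "object",
            "properties": {
                name: {"type": "string", "description": desc}
                for name, desc in properties.items()
            },
            "required": list(properties),
        }}},
    }


OK_RESPONSE = {"200": {
    "description": "Successful response",
    "content": {"application/json": {"schema": {"type": "object"}}},
}}

# Hypothetical schema: every path, operationId, and field name is illustrative.
CLAIMS_API_SCHEMA = {
    "openapi": "3.0.0",
    "info": {"title": "Claims API (illustrative)", "version": "1.0.0"},
    "paths": {
        "/fraud-check": {"post": {
            "operationId": "checkFraud",
            "description": "Run a fraud check for a policy. Call this before creating a claim.",
            "requestBody": json_request_body({"policyId": "ID of the insurance policy"}),
            "responses": OK_RESPONSE,
        }},
        "/claims": {"post": {
            "operationId": "createClaim",
            "description": "Create a claim. Only call this after the fraud check has passed.",
            "requestBody": json_request_body({
                "policyId": "ID of the insurance policy",
                "incidentDescription": "What happened, in the user's words",
            }),
            "responses": OK_RESPONSE,
        }},
    },
}


def list_operations(schema):
    """Return (METHOD, path, operationId) for every operation in the schema."""
    return [
        (method.upper(), path, op["operationId"])
        for path, methods in schema["paths"].items()
        for method, op in methods.items()
    ]


# Serialize to JSON text, the form in which a schema would typically be stored.
SCHEMA_JSON = json.dumps(CLAIMS_API_SCHEMA, indent=2)
```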

Traditionally, insurance processes are rigid, with fixed steps for tasks like fraud detection. However, agent chaining allows for greater flexibility and adaptability, enabling the system to respond to real-time user inputs and variations in scenarios. For instance, instead of strictly adhering to predefined thresholds for fraud checks, the agents can dynamically adjust the workflow based on user interactions and context. Similarly, when users choose to upload images while filing a claim, the workflow can perform real-time damage analysis and immediately send a summary to claims adjusters for further review. This enables a quicker response and more accurate decision-making. This approach not only streamlines the claims process but also allows for a more nuanced and efficient handling of tasks, providing the necessary balance between automation and human intervention. By chaining Amazon Bedrock Agents, we create an adaptable system that caters to diverse user needs while maintaining the integrity of business processes.

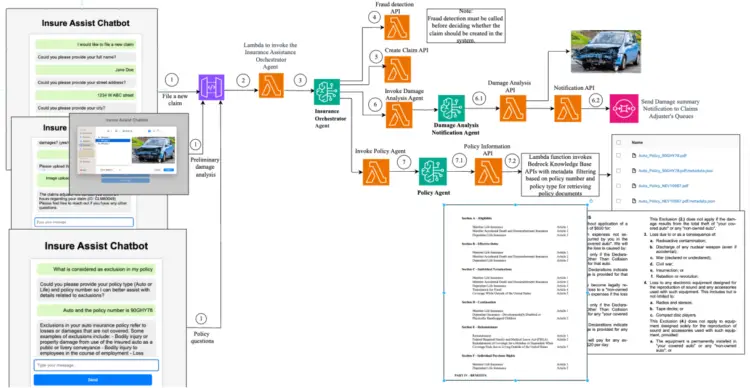

The following diagram illustrates the end-to-end insurance claims workflow using chaining with Amazon Bedrock Agents.

The diagram shows how specialized agents use various tools to streamline the entire claims process, from filing claims and assessing damages to answering customer questions about insurance policies.

Prerequisites

Before proceeding, make sure you have the following resources set up:

Deploy the solution with AWS CloudFormation

Complete the following steps to set up the solution resources:

- Sign in to the AWS Management Console as an IAM administrator or appropriate IAM user.

- Choose Launch Stack to deploy the CloudFormation template.

- Provide the necessary parameters and create the stack.

For this setup, we use us-east-1 as our AWS Region, the Anthropic Claude 3 Haiku model for orchestrating the flow between the different agents, the Anthropic Claude 3 Sonnet model for damage analysis of the uploaded images, and the Cohere Embed English V3 model as an embedding model to translate text from the insurance policy documents into numerical vectors, which allows for efficient search, comparison, and categorization of the documents.

If you want to choose other models on Amazon Bedrock, you can do so by making appropriate changes in the CloudFormation template. Check for appropriate model support in the Region and the features that are supported by the models.

The solution takes about 15 minutes to deploy. After the stack is deployed, you can view the various outputs of the CloudFormation stack on the Outputs tab, as shown in the following screenshot.
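If you prefer to read the outputs programmatically, a minimal sketch such as the following can collect them into a dictionary. It assumes `cfn_client` behaves like `boto3.client("cloudformation")`, and the stack name you pass is whatever you chose at deployment time.

```python
def stack_outputs(cfn_client, stack_name):
    """Return the stack's outputs as an {OutputKey: OutputValue} dictionary.

    `cfn_client` is assumed to behave like boto3.client("cloudformation").
    """
    response = cfn_client.describe_stacks(StackName=stack_name)
    outputs = response["Stacks"][0].get("Outputs", [])
    return {item["OutputKey"]: item["OutputValue"] for item in outputs}
```

Keys such as `HttpApiEndpoint`, `ImageBucketName`, and `PolicyDocumentsBucketName`, which are used later in this post, can then be looked up by name.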

The following screenshot shows the three Amazon Bedrock agents that were deployed in your account.

Test the claims creation, damage detection, and notification workflows

The first part of the deployed solution mimics filing a new insurance claim, fraud detection, optional damage analysis of uploaded images, and subsequent notification to claims adjusters. This is a small-scale example of task automation that solves a particular business problem by chaining agents, each performing a set of specific tasks. The agents work in harmony to solve the larger function of insurance claims handling.

Let’s explore the architecture of the claim creation workflow, where the insurance orchestrator agent and the damage analysis notification agent work together to simulate filing new claims, assessing damages, and sending a summary of damages to the claim adjusters for human oversight. The following diagram illustrates this workflow.

In this workflow, the insurance orchestrator agent mimics fraud detection and claims creation, and orchestrates handing off responsibility to other task-specific agents. The image damage analysis notification agent is responsible for doing a preliminary analysis of the images uploaded for a damage claim. This agent invokes a Lambda function that internally calls the Anthropic Claude Sonnet large language model (LLM) on Amazon Bedrock to perform preliminary analysis on the images. The LLM generates a summary of the damage, which is sent to an SQS queue and subsequently reviewed by the claim adjusters.
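The image analysis step could be sketched roughly as follows. This is not the deployed Lambda code: the prompt text, the message fields `claimId` and `damageSummary`, and the function names are assumptions for illustration. The clients are assumed to behave like `boto3.client("bedrock-runtime")` and `boto3.client("sqs")`.

```python
import base64
import json

MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"


def build_damage_request(image_bytes, media_type="image/jpeg"):
    """Build an Anthropic Messages request body that contains one image and one prompt."""
    return {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": 512,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "image", "source": {
                    "type": "base64",
                    "media_type": media_type,
                    "data": base64.b64encode(image_bytes).decode("ascii"),
                }},
                # Illustrative prompt; the deployed solution may word this differently.
                {"type": "text",
                 "text": "Summarize the visible damage in this image for a claims adjuster."},
            ],
        }],
    }


def assess_and_notify(bedrock_runtime, sqs, queue_url, claim_id, image_bytes):
    """Ask the model for a damage summary, then queue it for human review."""
    response = bedrock_runtime.invoke_model(
        modelId=MODEL_ID,
        contentType="application/json",
        accept="application/json",
        body=json.dumps(build_damage_request(image_bytes)),
    )
    payload = json.loads(response["body"].read())
    summary = payload["content"][0]["text"]
    # The message shape below is hypothetical; adjusters read it from the queue.
    sqs.send_message(
        QueueUrl=queue_url,
        MessageBody=json.dumps({"claimId": claim_id, "damageSummary": summary}),
    )
    return summary
```

Sending the summary to a queue rather than acting on it directly keeps a human in the loop: the model only produces a preliminary assessment.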

The NLP instruction prompts combined with the OpenAPI specifications for each action group guide the agents in their decision-making process, determining which action group to invoke, the sequence of invocation, and the required parameters for calling specific APIs.
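Once the agent has chosen an API, it invokes the action group's Lambda function with the selected path and parameters. The following is a minimal sketch of such a handler, following the event and response shape that Amazon Bedrock Agents uses for OpenAPI-based action groups. The paths `/fraud-check` and `/claims`, the field names, and the canned results are hypothetical stand-ins for real business logic.

```python
import json


def _body_properties(event):
    """Flatten the request body properties the agent extracted into a dictionary."""
    props = (
        event.get("requestBody", {})
        .get("content", {})
        .get("application/json", {})
        .get("properties", [])
    )
    return {p["name"]: p["value"] for p in props}


def lambda_handler(event, context):
    """Route an agent invocation to a simulated API based on the chosen path."""
    api_path = event["apiPath"]
    fields = _body_properties(event)

    if api_path == "/fraud-check":  # hypothetical path
        status, body = 200, {"policyId": fields.get("policyId"), "fraudSuspected": False}
    elif api_path == "/claims":  # hypothetical path
        status, body = 200, {"claimId": "CLAIM-0001", "status": "OPEN"}
    else:
        status, body = 404, {"error": f"Unknown path {api_path}"}

    # Echo back the action group, path, and method so the agent can match the reply.
    return {
        "messageVersion": "1.0",
        "response": {
            "actionGroup": event["actionGroup"],
            "apiPath": api_path,
            "httpMethod": event["httpMethod"],
            "httpStatusCode": status,
            "responseBody": {"application/json": {"body": json.dumps(body)}},
        },
    }
```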

Use the UI to invoke the claims processing workflow

Complete the following steps to invoke the claims processing workflow:

- From the outputs of the CloudFormation stack, choose the URL for HttpApiEndpoint.

- You can ask the chatbot sample questions to start exploring the functionality of filing a new claim.

In the following example, we ask to file a new claim and upload images as evidence for the claim.

- On the Amazon SQS console, you can view the SQS queue that has been created by the CloudFormation stack and check the message that shows the damage analysis from the image performed by our LLM.
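As an alternative to the console, the queue can be polled from a script. The following sketch assumes `sqs` behaves like `boto3.client("sqs")` and that you pass the URL of the queue created by the stack. The function name is ours.

```python
def peek_damage_messages(sqs, queue_url, max_messages=10, wait_seconds=5):
    """Return the bodies of messages currently visible on the queue.

    Messages are not deleted, so they become visible again after the
    queue's visibility timeout and remain available to claim adjusters.
    """
    response = sqs.receive_message(
        QueueUrl=queue_url,
        MaxNumberOfMessages=max_messages,
        WaitTimeSeconds=wait_seconds,
    )
    # The "Messages" key is absent when the queue has nothing to return.
    return [message["Body"] for message in response.get("Messages", [])]
```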

Test the policy information workflow

The following diagram shows the architecture of just the policy information agent. The policy agent accesses the Policy Information API to extract answers to insurance-related questions from unstructured policy documents such as PDF files.

The policy information agent is responsible for doing a lookup against the insurance policy documents stored in the knowledge base. The agent invokes a Lambda function that internally queries the knowledge base to find answers to policy-related questions.
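The lookup inside that Lambda function might resemble the following sketch, which uses the knowledge base `retrieve` operation. It assumes `agent_runtime` behaves like `boto3.client("bedrock-agent-runtime")`. The function name and the default of three results are our choices, and the deployed function may work differently, for example by also generating an answer from the retrieved passages.

```python
def lookup_policy_passages(agent_runtime, knowledge_base_id, question, max_results=3):
    """Return the text of the passages most relevant to a policy question."""
    response = agent_runtime.retrieve(
        knowledgeBaseId=knowledge_base_id,
        retrievalQuery={"text": question},
        retrievalConfiguration={
            "vectorSearchConfiguration": {"numberOfResults": max_results}
        },
    )
    return [result["content"]["text"] for result in response.get("retrievalResults", [])]
```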

Set up the policy documents and metadata in the data source for the knowledge base

We use Amazon Bedrock Knowledge Bases to manage our documents and metadata. As part of deploying the solution, the CloudFormation stack created a knowledge base. Complete the following steps to set up its data source:

- On the Amazon Bedrock console, navigate to the deployed knowledge base and navigate to the S3 bucket that’s mentioned as its data source.

- Upload a few insurance policy documents and metadata documents to the S3 bucket, following the naming conventions shown in the following screenshot.

The naming conventions are

The following screenshot shows an example of what a sample metadata.json file looks like.

- After the documents are uploaded to Amazon S3, navigate to the deployed knowledge base, select the data source, and choose Sync.

To understand more about how metadata support in Knowledge Bases on Amazon Bedrock helps you get accurate results, refer to Amazon Bedrock Knowledge Bases now supports metadata filtering to improve retrieval accuracy.

- Now you can go back to the UI and start asking questions related to the policy documents.

The following screenshot shows the set of questions we asked for finding answers related to policy coverage.
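To illustrate how metadata can narrow retrieval, the following sketch builds a metadata sidecar file and a matching filter. It follows the general Amazon Bedrock Knowledge Bases convention of a `<document>.metadata.json` file containing a `metadataAttributes` object. The document name and the `policy_type` attribute are hypothetical and are not the ones used in this post's screenshots.

```python
import json


def metadata_sidecar(document_key, attributes):
    """Return (sidecar_key, sidecar_json) for a document stored in S3.

    The sidecar sits next to the document and shares its full file name.
    """
    sidecar_key = f"{document_key}.metadata.json"
    sidecar_json = json.dumps({"metadataAttributes": attributes}, indent=2)
    return sidecar_key, sidecar_json


def equals_filter(key, value):
    """Build a filter that matches documents whose attribute equals the value."""
    return {"equals": {"key": key, "value": value}}


def retrieval_config(filter_expr, max_results=3):
    """Build a retrievalConfiguration that applies a metadata filter."""
    return {
        "vectorSearchConfiguration": {
            "numberOfResults": max_results,
            "filter": filter_expr,
        }
    }
```

For example, `metadata_sidecar("auto-policy.pdf", {"policy_type": "auto"})` yields the key `auto-policy.pdf.metadata.json`, and `retrieval_config(equals_filter("policy_type", "auto"))` can be passed as the `retrievalConfiguration` of a knowledge base query so that only auto policy documents are searched.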

Clean up

To avoid unexpected charges, complete the following steps to clean up your resources:

- Delete the contents from the S3 buckets corresponding to the ImageBucketName and PolicyDocumentsBucketName keys from the outputs of the CloudFormation stack.

- Delete the deployed stack using the AWS CloudFormation console.
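The same cleanup can be scripted. The following is a minimal sketch that assumes the clients behave like `boto3.client("s3")` and `boto3.client("cloudformation")`, and that the buckets are not versioned. Versioned buckets would also need their object versions removed.

```python
def empty_bucket(s3_client, bucket):
    """Delete every object in the bucket and return how many were removed."""
    paginator = s3_client.get_paginator("list_objects_v2")
    deleted = 0
    # Each page holds at most 1,000 keys, which is also the delete_objects limit.
    for page in paginator.paginate(Bucket=bucket):
        keys = [{"Key": obj["Key"]} for obj in page.get("Contents", [])]
        if keys:
            s3_client.delete_objects(Bucket=bucket, Delete={"Objects": keys})
            deleted += len(keys)
    return deleted


def clean_up(s3_client, cfn_client, stack_name, bucket_names):
    """Empty the solution's buckets, then request deletion of the stack."""
    removed = {name: empty_bucket(s3_client, name) for name in bucket_names}
    cfn_client.delete_stack(StackName=stack_name)
    return removed
```

The buckets are emptied first because CloudFormation can't delete a bucket that still contains objects.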

Best practices

The following are some additional best practices that you can follow for your agents:

- Automated testing – Implement automated tests to regularly exercise the orchestration workflows. You can use mock APIs to simulate various scenarios and validate the agent’s decision-making process.

- Version control – Maintain version control for your agent configurations and prompts in a repository. This provides traceability and quick rollback if needed.

- Monitoring and logging – Use Amazon CloudWatch to monitor agent interactions and API calls. Set up alarms for unexpected behaviors or failures.

- Continuous integration – Set up a continuous integration and delivery (CI/CD) pipeline that integrates automated testing, prompt validation, and deployment to maintain smooth updates without disrupting ongoing workflows.
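As one example of the monitoring practice, the following sketch creates a CloudWatch alarm on the error count of an action group's Lambda function. It assumes `cloudwatch` behaves like `boto3.client("cloudwatch")`. The alarm naming scheme, the five-minute period, and the threshold are illustrative choices, not recommendations from the deployed solution.

```python
def create_lambda_error_alarm(cloudwatch, function_name, threshold=1):
    """Alarm when the function reports `threshold` or more errors in five minutes."""
    alarm_name = f"{function_name}-errors"
    cloudwatch.put_metric_alarm(
        AlarmName=alarm_name,
        Namespace="AWS/Lambda",
        MetricName="Errors",
        Dimensions=[{"Name": "FunctionName", "Value": function_name}],
        Statistic="Sum",
        Period=300,
        EvaluationPeriods=1,
        Threshold=threshold,
        ComparisonOperator="GreaterThanOrEqualToThreshold",
        # Periods with no invocations should not trigger the alarm.
        TreatMissingData="notBreaching",
    )
    return alarm_name
```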

Conclusion

In this post, we demonstrated the power of chaining Amazon Bedrock agents, offering a fresh perspective on integrating back-office automation workflows and enterprise APIs. This solution offers several benefits: as new enterprise APIs emerge, dependencies in existing ones can be minimized, reducing coupling. Moreover, Amazon Bedrock Agents can maintain conversational context, enabling follow-up queries to use conversation history. For extended contextual memory, a more persistent backend implementation can be considered.

To learn more, refer to Amazon Bedrock Agents.

About the Author

Piyali Kamra is a seasoned enterprise architect and a hands-on technologist who has over 20 years of experience building and executing large-scale enterprise IT projects across geographies. She believes that building large-scale enterprise systems is not an exact science but more like an art, where you can’t always choose the best technology that comes to mind; rather, tools and technologies must be carefully selected based on the team’s culture, strengths, weaknesses, and risks, in tandem with a futuristic vision of how you want to shape your product a few years down the road.
