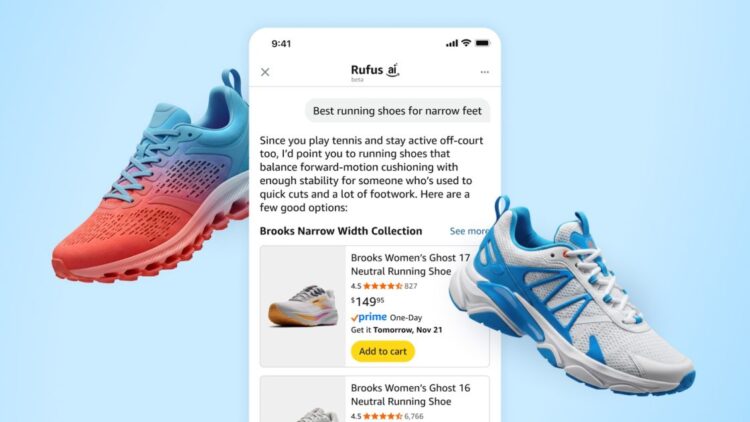

Our team at Amazon builds Rufus, an AI-powered shopping assistant that delivers intelligent, conversational experiences to delight our customers.

More than 250 million customers have used Rufus this year. Monthly users are up 140% YoY and interactions are up 210% YoY. Additionally, customers who use Rufus during a shopping journey are 60% more likely to complete a purchase. To make this possible, our team carefully evaluates every decision, aiming to focus on what matters most: building the best agentic shopping assistant experience. By focusing on customer-driven features, Rufus is now smarter, faster, and more useful.

In this post, we share how our adoption of Amazon Bedrock accelerated the evolution of Rufus.

Building a customer-driven architecture

Defining clear use cases is fundamental to shaping both requirements and implementation, and building an AI-powered shopping assistant is no exception. For a shopping assistant like Rufus, our use cases align with the kinds of questions customers ask, and we aim to exceed their expectations with every answer. For example, a customer may want to know something factual about the shoes they’re considering and ask, “Are these shoes waterproof?” Another customer may want recommendations and ask, “Give me a few good options for shoes suitable for marathon running.” These examples represent just a fraction of the diverse question types we designed Rufus to support by working backwards from customer use cases.

After we define our customer use cases, we design Rufus with the entire stack in mind so that it works seamlessly for customers. From initial launch through subsequent iterations, we collect metrics to see how well Rufus is doing, with the goal of continually improving. This means not only measuring how accurately questions are answered using tools like LLM-as-a-judge, but also analyzing factors such as latency, repeat customer engagement, and the number of conversation turns per interaction to gain deeper insights into customer engagement.
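
To make the measurement idea concrete, here is a minimal sketch of rolling those signals into one summary. The `Interaction` record, its field names, and the 0.0 to 1.0 judge scale are assumptions made for illustration; the post does not describe how Rufus stores or aggregates its metrics.

```python
from dataclasses import dataclass
from statistics import mean, median


@dataclass
class Interaction:
    """One customer conversation (hypothetical schema, for illustration only)."""
    judge_score: float        # assumed 0.0-1.0 grade from an LLM-as-a-judge evaluator
    latency_ms: float         # end-to-end response latency
    turns: int                # conversation turns in this interaction
    returning_customer: bool  # whether the customer had used the assistant before


def summarize(interactions):
    """Aggregate quality, latency, and engagement signals for a batch."""
    if not interactions:
        raise ValueError("need at least one interaction")
    return {
        "mean_judge_score": mean(i.judge_score for i in interactions),
        "median_latency_ms": median(i.latency_ms for i in interactions),
        "mean_turns": mean(i.turns for i in interactions),
        "repeat_rate": sum(i.returning_customer for i in interactions) / len(interactions),
    }
```

Tracking answer quality next to latency and engagement in a single summary makes trade-offs visible, for example a model change that raises the judge score but slows responses.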

Expanding beyond our in-house LLM

We first launched Rufus by building our own in-house large language model (LLM). The decision to build a custom LLM was driven by the need for a model specialized in shopping domain questions. At first, we considered off-the-shelf models, but most of these didn’t do well in our shopping evaluations (evals). Other models were larger and therefore slower and more costly. We didn’t need a model that did well across many domains; we needed a model that did well in the shopping domain while maintaining high accuracy, low latency, and cost performance. By building our custom LLM and deploying it on AWS silicon, we were able to go into production worldwide, supporting large-scale events such as Prime Day, when we used 80,000 AWS Inferentia and Trainium chips.

After the initial success of Rufus, we aimed to expand into use cases requiring advanced reasoning, larger context windows, and multi-step reasoning. However, training an LLM presents a significant challenge: iterations can take weeks or months to complete. With newer, more capable models being released at an accelerating pace, we aimed to improve Rufus as quickly as possible and began to evaluate and adopt state-of-the-art models rapidly. To launch these new features and build a truly remarkable shopping assistant, Amazon Bedrock was the natural solution.

Accelerating Rufus with Amazon Bedrock

Amazon Bedrock is a comprehensive, secure, and flexible platform for building generative AI applications and agents. Amazon Bedrock connects you to leading foundation models (FMs), services to deploy and operate agents, and tools for fine-tuning, safeguarding, and optimizing models, along with knowledge bases to connect applications to your latest data, so that you have everything you need to quickly move from experimentation to real-world deployment. Amazon Bedrock gives you access to hundreds of FMs from leading AI companies, along with evaluation tools to pick the best model based on your unique performance and cost needs.

Amazon Bedrock provides us great value by:

- Managing hosting of leading foundation models (FMs) from different providers and making them available through model-agnostic interfaces such as the Converse API. Because Amazon Bedrock provides access to frontier models, we can evaluate and integrate them quickly with minimal changes to our existing systems. This increased our velocity. We can use the best model for the task while balancing characteristics like cost, latency, and accuracy.

- Removing significant operational overhead from the Rufus team, such as managing model hosting infrastructure, handling scaling challenges, or maintaining model serving pipelines around the world where Amazon operates. Bedrock handles the heavy lifting, allowing customers to concentrate on building innovative solutions for their unique needs.

- Providing global availability for consistent deployment supporting multiple geographic regions. By using Amazon Bedrock, we launched in new marketplaces quickly with minimal effort.
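
As an illustration of the model-agnostic interface mentioned in the first point, the sketch below builds a Converse-style request in which only the `modelId` changes between models. The helper names `build_converse_request` and `ask`, the default inference settings, and the injected `client` are assumptions for this example, not Rufus code.

```python
def build_converse_request(model_id, user_text, system_text=None,
                           max_tokens=512, temperature=0.2):
    """Build keyword arguments for a Converse call; only model_id is model-specific."""
    request = {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": user_text}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }
    if system_text:
        request["system"] = [{"text": system_text}]
    return request


def ask(client, model_id, user_text, **options):
    """Send one user turn through client.converse and join the text blocks of the reply."""
    response = client.converse(**build_converse_request(model_id, user_text, **options))
    blocks = response["output"]["message"]["content"]
    return "".join(block["text"] for block in blocks if "text" in block)
```

With the AWS SDK for Python, `client` would typically be `boto3.client("bedrock-runtime")`. Because the request shape stays the same, trying a different model during an evaluation amounts to passing a different model ID.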

Models hosted by Amazon Bedrock also help Rufus support a wide range of experiences across modalities, including text and images. Even within a particular modality like text-to-text, use cases can vary in complexity, traffic, and latency requirements. Some scenarios, such as “planning a camping trip,” “gift recommendations for my mom,” or style advice, require deeper reasoning, multi-turn dialogue, and access to tools like web search to provide contextually rich, personalized answers. Straightforward product inquiries, such as “what is the wattage on this drill?”, can be handled efficiently by smaller, faster models.

Our strategy combines multiple models to power Rufus, including Amazon Nova, Anthropic’s Claude Sonnet, and our custom model, so we can deliver the most reliable, fast, and intuitive customer experience possible.
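
The routing idea can be sketched as a small function that sends simple lookups to a smaller model and open-ended requests to a larger one. Everything here is hypothetical: the model names are placeholders, and the keyword heuristic only stands in for whatever classification Rufus actually uses, which the post does not describe.

```python
from dataclasses import dataclass

# Placeholder identifiers, not real model IDs.
SMALL_FAST_MODEL = "small-fast-model"
LARGE_REASONING_MODEL = "large-reasoning-model"

# Crude keyword hints for open-ended requests (an assumption for this sketch).
OPEN_ENDED_HINTS = ("plan", "recommend", "gift", "advice", "ideas")


@dataclass(frozen=True)
class Query:
    text: str
    needs_tools: bool = False  # e.g. the request requires web search
    prior_turns: int = 0       # turns already exchanged in this conversation


def choose_model(query):
    """Pick a model tier from coarse signals about the query's complexity."""
    text = query.text.lower()
    open_ended = any(hint in text for hint in OPEN_ENDED_HINTS)
    if query.needs_tools or query.prior_turns >= 2 or open_ended:
        return LARGE_REASONING_MODEL
    return SMALL_FAST_MODEL
```

A production router would more likely rely on a trained classifier or a lightweight model call than on keywords, but the shape of the decision is the same: spend the slower, costlier model only where the query needs it.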

Integrating Amazon Bedrock with Rufus

With Amazon Bedrock, we can evaluate and select the optimal model for each query type, balancing answer quality, latency, and engagement. Using Amazon Bedrock increased our development velocity by over 6x. Using multiple models gives us the ability to break down a conversation into granular pieces. By doing so, we’re able to answer questions more effectively, and we’ve seen meaningful benefits. After we know which models we plan to use, we also take a hybrid approach to providing the model with the proper context to perform its task effectively. In some cases, we may already have the context that Rufus needs to answer a question. For example, if we know a customer is asking a question about their previous orders, we can provide their order history in the initial inference request to the model. This reduces the number of inference calls we need to make and also provides more determinism to help avoid downstream errors. In other cases, we can defer the decision to the model, and when it believes it needs more information, it can use a tool to retrieve additional context.

We found that it’s important to ground the model with the right information. One of the ways we do this is by using Amazon Nova Web Grounding, because it can interact with web browsers to retrieve and cite authoritative internet sources, resulting in significantly reduced answer defects and improved accuracy and customer trust. In addition to optimizing model accuracy, we’ve also worked with Amazon Bedrock features to decrease latency wherever possible. By using prompt caching and parallel tool calling, we reduced latency even further. These optimizations, from model response to service latency, mean customers who use Rufus are 60% more likely to complete a purchase.
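
The hybrid approach to context can be sketched as one request builder with two paths. This is an illustration under stated assumptions: the `get_order_history` tool, its schema, and the `build_request` helper are hypothetical, while the request layout follows the Converse API's `system` and `toolConfig` fields.

```python
# Hypothetical tool definition in the Converse toolSpec layout.
ORDER_HISTORY_TOOL = {
    "toolSpec": {
        "name": "get_order_history",
        "description": "Look up the customer's recent orders.",
        "inputSchema": {
            "json": {
                "type": "object",
                "properties": {"days": {"type": "integer"}},
                "required": ["days"],
            }
        },
    }
}


def build_request(model_id, question, order_history=None):
    """Preload context when it is already known; otherwise expose a tool."""
    request = {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": question}]}],
    }
    if order_history is not None:
        # Deterministic path: the context rides along with the first call.
        lines = "\n".join(f"- {order}" for order in order_history)
        request["system"] = [{"text": "Customer order history:\n" + lines}]
    else:
        # Deferred path: the model decides whether to request the lookup.
        request["toolConfig"] = {"tools": [ORDER_HISTORY_TOOL]}
    return request
```

The preloaded path avoids the extra round trip a tool call needs, at the cost of sending context the model may not use. The deferred path keeps the prompt small when it is unclear up front which context matters.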
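
One way to read the parallel tool calling point: when a model response contains several tool requests, they can be executed concurrently instead of one after another. The sketch below assumes the Converse `toolUse` and `toolResult` block layout; `run_tool_calls` and the `tools` registry are hypothetical names, and prompt caching is not shown.

```python
from concurrent.futures import ThreadPoolExecutor


def run_tool_calls(assistant_content, tools, max_workers=8):
    """Run every toolUse block in one assistant message concurrently.

    `tools` maps a tool name to a callable returning a JSON-serializable dict.
    Returns toolResult blocks in the same order as the requests.
    """
    calls = [block["toolUse"] for block in assistant_content if "toolUse" in block]
    if not calls:
        return []

    def run(call):
        try:
            result = tools[call["name"]](**call["input"])
            content, status = [{"json": result}], "success"
        except Exception as exc:  # report the failure to the model instead of crashing
            content, status = [{"text": str(exc)}], "error"
        return {"toolResult": {"toolUseId": call["toolUseId"],
                               "content": content, "status": status}}

    with ThreadPoolExecutor(max_workers=min(max_workers, len(calls))) as pool:
        return list(pool.map(run, calls))  # map preserves request order
```

The returned blocks would go back to the model as the content of the next user message. Threads fit here on the assumption that tool calls are I/O-bound service requests, so total wait time approaches that of the slowest call rather than the sum of all calls.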

Agentic functionality through tool integration

More importantly, the Amazon Bedrock architecture supports agentic capabilities that make Rufus more useful for shoppers through tool use. Using models on Bedrock, Rufus can dynamically call services as tools to provide personalized, real-time, accurate information or take actions on behalf of the user. When a customer asks Rufus about product availability, pricing, or specifications, Rufus goes far beyond its built-in knowledge. It retrieves relevant information such as your order history and uses integrated tools at inference time to query live databases, check the latest product catalog, and access real-time data. To be more personal, Rufus now has account memory, understanding customers based on their individual shopping activity. Rufus can use information you may have shared previously, such as hobbies you enjoy or a previous mention of a pet, to provide a much more personalized and effective experience.

When building these agentic capabilities, it might be necessary to build a service for your agent to interact with to be more effective. For example, Rufus has a Price history feature on the product detail page that lets customers instantly view historical pricing to see if they’re getting a great deal. Shoppers can ask Rufus directly for price history while browsing (for example, “Has this item been on sale in the past thirty days?”) or set an agentic price alert to be notified when a product reaches a target price (“Buy these headphones when they’re 30% off”). With the auto-buy feature, Rufus can complete purchases on your behalf within 30 minutes of when the desired price is met and finalize the order using your default payment and shipping details. Auto-buy requests remain active for six months, and customers currently using this feature are saving an average of 20% per purchase. The agent itself can create a persistent record in the price alert and auto-buy service, but the system then uses traditional software to manage the record and act on it accordingly. This tight integration of models, tools, and services transforms Rufus into a truly dynamic personalized shopping agent.

Beyond price tracking, Rufus supports natural, conversational reordering. Customers can simply say, “Reorder everything we used to make pumpkin pie last week,” or “Order the hiking boots and poles I browsed yesterday.” Rufus connects the dots between past activity and current intent and can suggest alternatives if items are unavailable. Rufus uses agentic AI capabilities to automatically add products to the cart for quick review and checkout. In these scenarios, Rufus can determine when to gather information to provide a better answer or to perform an action directed by the customer. These are just two examples of the many agentic features we’ve launched.
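
The split between the agent and traditional software can be sketched as follows: the agent's only job is to create a record, and plain deterministic code decides what to do on each price check. The `PriceAlert` schema, the `evaluate` function, and the 183-day approximation of "six months" are assumptions for illustration, not the actual service.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Approximates the six-month lifetime; the service's exact rule is not stated.
ALERT_LIFETIME = timedelta(days=183)


@dataclass
class PriceAlert:
    """Persistent record the agent creates (hypothetical schema)."""
    product_id: str
    reference_price: float  # price when the alert was set
    discount_pct: float     # e.g. 30 for "when they're 30% off"
    created_at: datetime
    auto_buy: bool = False

    @property
    def target_price(self):
        return round(self.reference_price * (1 - self.discount_pct / 100), 2)

    def is_active(self, now):
        return now - self.created_at <= ALERT_LIFETIME


def evaluate(alert, current_price, now):
    """Deterministic check run by ordinary software, with no model call involved."""
    if not alert.is_active(now):
        return "expired"
    if current_price > alert.target_price:
        return "wait"
    return "buy" if alert.auto_buy else "notify"
```

Keeping this check out of the model makes the behavior auditable and cheap to run on every price change, while the agent stays responsible only for translating a request such as “Buy these headphones when they’re 30% off” into the record.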

The result: AI-powered shopping at Amazon scale

By using Amazon Bedrock, Rufus demonstrates how organizations can build sophisticated AI applications that scale to serve millions of users. The combination of flexible model selection, managed infrastructure, and agentic capabilities enables Amazon to deliver a shopping assistant that’s both intelligent and practical while maintaining tight controls on accuracy, latency, and cost. If you are considering your own AI initiatives, Rufus showcases Bedrock’s potential to simplify the journey from AI experimentation to production deployment, allowing you to focus on customer value rather than infrastructure complexity. We encourage you to try Bedrock and see the same benefits we have, so you can focus on your agentic solutions and their core capabilities.

About the authors

James Park is an ML Specialist Solutions Architect at Amazon Web Services. He works with Amazon.com to design, build, and deploy technology solutions on AWS, and has a particular interest in AI and machine learning. In his spare time he enjoys seeking out new cultures, new experiences, and staying up to date with the latest technology trends.

Shrikar Katti is a Principal TPM at Amazon. His current focus is on driving end-to-end delivery, strategy, and cross-org alignment for a large-scale AI product that transforms the Amazon shopping experience, while ensuring safety, scalability, and operational excellence. In his spare time, he enjoys playing chess and exploring the latest developments in AI.

Gaurang Sinkar is a Principal Engineer at Amazon. His recent focus is on scaling, performance engineering, and optimizing generative AI solutions. Beyond work, he enjoys spending time with family, traveling, occasional hiking, and playing cricket.

Sean Foo is an engineer at Amazon. His recent focus is building low-latency customer experiences and maintaining highly available systems at Amazon scale. In his spare time, he enjoys playing video and board games with friends and wandering around.

Saurabh Trikande is a Senior Product Manager for Amazon Bedrock and Amazon SageMaker Inference. He’s passionate about working with customers and partners, motivated by the goal of democratizing AI. He focuses on core challenges related to deploying complex AI applications, inference with multi-tenant models, cost optimizations, and making the deployment of generative AI models more accessible. In his spare time, Saurabh enjoys hiking, learning about innovative technologies, following TechCrunch, and spending time with his family.

Somu Perianayagam is an Engineer at AWS specializing in distributed systems for Amazon DynamoDB and Amazon Bedrock. He builds large-scale, resilient architectures that help customers achieve consistent performance across regions, simplify their data paths, and operate reliably at massive scale.