In this article, you’ll learn how the ReAct (Reasoning + Acting) pattern works and how to implement it with LangGraph, first with a simple, hardcoded loop and then with an LLM-driven agent.

Topics we will cover include:

- The ReAct cycle (Reason → Act → Observe) and why it’s useful for agents.

- How to model agent workflows as graphs with LangGraph.

- Building a hardcoded ReAct loop, then upgrading it to an LLM-powered version.

Let’s explore these techniques.

Building ReAct Agents with LangGraph: A Beginner’s Guide

Image by Author

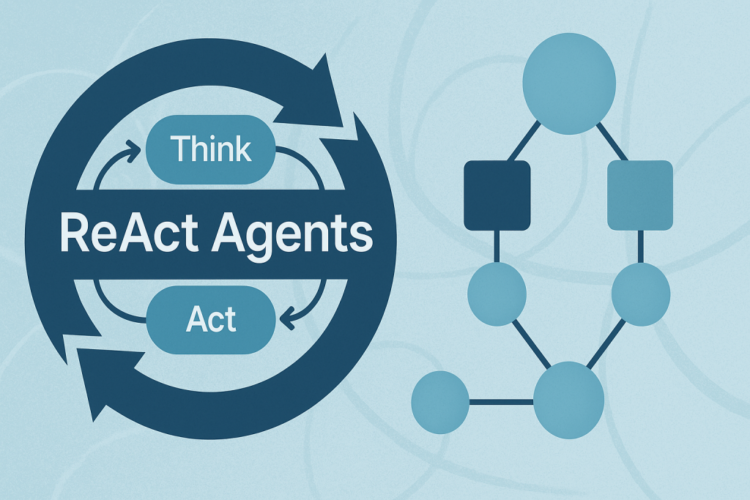

What Is the ReAct Pattern?

ReAct (Reasoning + Acting) is a common pattern for building AI agents that think through problems and take actions to solve them. The pattern follows a simple cycle:

- Reasoning: The agent thinks about what it needs to do next.

- Acting: The agent takes an action (like searching for information).

- Observing: The agent examines the results of its action.

This cycle repeats until the agent has gathered enough information to answer the user’s question.
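To make the cycle concrete before any framework is involved, here is a minimal sketch in plain Python. The names `react_loop`, `lookup`, and the canned `FACTS` data are assumptions made for illustration; they are not part of LangGraph or of the agents built later in this article.

```python
# A framework-free sketch of the Reason -> Act -> Observe cycle.
# All names and the canned data are hypothetical, for illustration only.

FACTS = {"capital of japan": "Tokyo"}


def lookup(query: str) -> str:
    """Act: a stand-in tool that answers from a tiny dictionary."""
    return FACTS.get(query.lower(), "no result")


def react_loop(question: str, max_steps: int = 3) -> list[str]:
    trace = [f"User: {question}"]
    observations: list[str] = []
    for _ in range(max_steps):
        # Reason: decide whether more information is needed.
        if observations:
            trace.append("Thought: I have enough info to answer")
            break
        trace.append("Thought: I should look this up")
        # Act: call the tool.
        result = lookup(question)
        trace.append(f"Action: lookup('{question}')")
        # Observe: record what came back.
        observations.append(result)
        trace.append(f"Observation: {result}")
    return trace


for line in react_loop("capital of japan"):
    print(line)
```

The `max_steps` bound plays the same role as the iteration counters used later: it guarantees the loop stops even if the reasoning step never decides it is done.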

Why LangGraph?

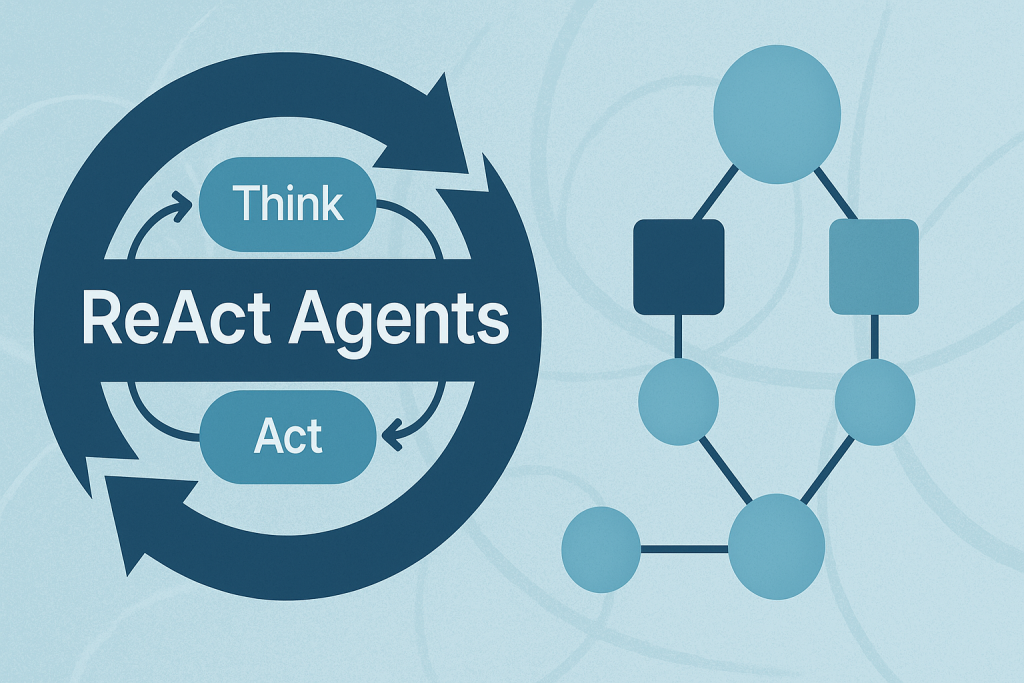

LangGraph is a framework built on top of LangChain that lets you define agent workflows as graphs. A graph (in this context) is a data structure consisting of nodes (steps in your process) connected by edges (the paths between steps). Each node represents one step in your agent’s process, and the edges define how information flows between steps. This structure allows for complex flows like loops and conditional branching. For example, your agent can cycle between reasoning and action nodes until it gathers enough information. This makes complex agent behavior easy to understand and maintain.
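The idea of nodes, fixed edges, and conditional edges can be sketched without LangGraph at all. The toy runner below is an assumption-laden illustration of the concept, not how LangGraph is implemented; `run_graph`, `reason`, and `act` are hypothetical names.

```python
# A toy graph runner: nodes are functions, edges say where to go next.
# This is NOT LangGraph's implementation, only a sketch of the idea.
from typing import Callable, Union

State = dict
Node = Callable[[State], State]
# An edge is either a fixed target name or a function that picks one.
Edge = Union[str, Callable[[State], str]]

END = "__end__"


def run_graph(nodes: dict[str, Node], edges: dict[str, Edge],
              entry: str, state: State, max_steps: int = 20) -> State:
    current = entry
    for _ in range(max_steps):
        if current == END:
            break
        state = nodes[current](state)  # run the step
        edge = edges[current]
        # Follow the edge: call it if conditional, use it directly if fixed.
        current = edge(state) if callable(edge) else edge
    return state


def reason(state: State) -> State:
    return {**state, "done": state["count"] >= 2}


def act(state: State) -> State:
    return {**state, "count": state["count"] + 1}


final = run_graph(
    nodes={"reasoning": reason, "action": act},
    edges={
        # Conditional edge: loop to "action" until reasoning says done.
        "reasoning": lambda s: END if s["done"] else "action",
        # Fixed edge: action always returns to reasoning.
        "action": "reasoning",
    },
    entry="reasoning",
    state={"count": 0, "done": False},
)
print(final)
```

The loop between `reasoning` and `action` here has the same shape as the graph assembled later in Part 1.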

Tutorial Structure

We’ll build two versions of a ReAct agent:

- Part 1: A simple hardcoded agent to understand the mechanics.

- Part 2: An LLM-powered agent that makes dynamic decisions.

Part 1: Understanding ReAct with a Simple Example

First, we’ll create a basic ReAct agent with hardcoded logic. This helps you understand how the ReAct loop works without the complexity of LLM integration.

Setting Up the State

Every LangGraph agent needs a state object that flows through the graph nodes. This state serves as shared memory that accumulates information. Nodes read the current state and add their contributions before passing it along.

    from langgraph.graph import StateGraph, END
    from typing import TypedDict, Annotated
    import operator

    # Define the state that flows through our graph
    class AgentState(TypedDict):
        messages: Annotated[list, operator.add]
        next_action: str
        iterations: int

Key Components:

- StateGraph: The main class from LangGraph that defines our agent’s workflow.

- AgentState: A TypedDict that defines what information our agent tracks.

- messages: Uses operator.add to accumulate all thoughts, actions, and observations.

- next_action: Tells the graph which node to execute next.

- iterations: Counts how many reasoning cycles we’ve completed.
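To see what “accumulate” means for the messages field, the sketch below emulates the merge in plain Python: fields annotated with a reducer are combined with it, and plain fields are overwritten. The `apply_update` helper is an assumption made for illustration and is not LangGraph’s source code.

```python
# Sketch of how a reducer such as operator.add can merge a node's update
# into the state. The merge logic here is an illustrative assumption,
# not LangGraph's source code.
import operator
from typing import Annotated, TypedDict, get_args, get_origin, get_type_hints


class AgentState(TypedDict):
    messages: Annotated[list, operator.add]
    next_action: str
    iterations: int


def apply_update(state: dict, update: dict) -> dict:
    hints = get_type_hints(AgentState, include_extras=True)
    merged = dict(state)
    for key, value in update.items():
        hint = hints[key]
        if get_origin(hint) is Annotated and key in state:
            reducer = get_args(hint)[1]  # e.g. operator.add
            merged[key] = reducer(state[key], value)
        else:
            merged[key] = value  # plain fields are overwritten
    return merged


state = {"messages": ["User: hi"], "next_action": "", "iterations": 0}
state = apply_update(state, {"messages": ["Thought: search"], "iterations": 1})
print(state)
```

This is why each node can return only its new messages: list concatenation appends them to what is already there, while next_action and iterations simply take the latest value.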

Creating a Mock Tool

In a real ReAct agent, tools are functions that perform actions in the world, like searching the web, querying databases, or calling APIs. For this example, we’ll use a simple mock search tool.

    # Simple mock search tool
    def search_tool(query: str) -> str:
        # Simulate a search - in real usage, this would call an API
        responses = {
            "weather tokyo": "Tokyo weather: 18°C, partly cloudy",
            "population japan": "Japan population: approximately 125 million",
        }
        return responses.get(query.lower(), f"No results found for: {query}")

This function simulates a search engine with hardcoded responses. In production, this would call a real search API like Google, Bing, or a custom knowledge base.

The Reasoning Node: The “Brain” of ReAct

This is where the agent thinks about what to do next. In this simple version, we’re using hardcoded logic, but you’ll see how this becomes dynamic with an LLM in Part 2.

    # Reasoning node - decides what to do
    def reasoning_node(state: AgentState):
        messages = state["messages"]
        iterations = state.get("iterations", 0)

        # Simple logic: first search weather, then population, then finish
        if iterations == 0:
            return {"messages": ["Thought: I need to check Tokyo weather"],
                    "next_action": "action", "iterations": iterations + 1}
        elif iterations == 1:
            return {"messages": ["Thought: Now I need Japan's population"],
                    "next_action": "action", "iterations": iterations + 1}
        else:
            return {"messages": ["Thought: I have enough info to answer"],
                    "next_action": "end", "iterations": iterations + 1}

How it works:

The reasoning node examines the current state and decides:

- Should we gather more information? (return "action")

- Do we have enough to answer? (return "end")

Notice how each return value updates the state:

- Adds a “Thought” message explaining the decision.

- Sets next_action to route to the next node.

- Increments the iteration counter.

This mimics how a human would approach a research task: “First I need weather info, then population data, then I can answer.”
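The same step-by-step behavior could also be driven by data rather than an if/elif chain. The sketch below is one possible variant; `PLAN` and `plan_reasoning` are hypothetical names, and the returned dictionaries mirror the shape used by the reasoning node.

```python
# A data-driven variant of the hardcoded reasoning step.
# PLAN and plan_reasoning are hypothetical names for this sketch.
PLAN = [
    "Thought: I need to check Tokyo weather",
    "Thought: Now I need Japan's population",
]


def plan_reasoning(state: dict) -> dict:
    iterations = state.get("iterations", 0)
    if iterations < len(PLAN):
        # Still steps left in the plan: emit the thought and route to the tool.
        return {"messages": [PLAN[iterations]],
                "next_action": "action",
                "iterations": iterations + 1}
    # Plan exhausted: stop the loop.
    return {"messages": ["Thought: I have enough info to answer"],
            "next_action": "end",
            "iterations": iterations + 1}


print(plan_reasoning({"iterations": 0})["next_action"])
print(plan_reasoning({"iterations": 2})["next_action"])
```

Adding a third research step then means appending one string to the list, though the logic is still fixed in advance, which is the limitation Part 2 addresses.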

The Action Node: Taking Action

Once the reasoning node decides to act, this node executes the chosen action and observes the results.

    # Action node - executes the tool
    def action_node(state: AgentState):
        iterations = state["iterations"]

        # Choose query based on iteration
        query = "weather tokyo" if iterations == 1 else "population japan"
        result = search_tool(query)

        return {"messages": [f"Action: Searched for '{query}'", f"Observation: {result}"],
                "next_action": "reasoning"}

    # Router - decides next step
    def route(state: AgentState):
        return state["next_action"]

The ReAct Cycle in Action:

- Action: Calls search_tool with a query.

- Observation: Records what the tool returned.

- Routing: Sets next_action back to "reasoning" to continue the loop.

The router function is a simple helper that reads the next_action value and tells LangGraph where to go next.
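If you want the router to tolerate unexpected values, one option is to fall back to a safe default. The sketch below assumes the only valid targets are "action" and "end"; `VALID_ROUTES` and `safe_route` are illustrative names, not LangGraph API.

```python
# A defensive router sketch: unknown values fall back to "end" so a typo
# in next_action cannot send the graph to a node that does not exist.
# VALID_ROUTES and safe_route are illustrative names, not LangGraph API.
VALID_ROUTES = {"action", "end"}


def safe_route(state: dict) -> str:
    target = state.get("next_action", "")
    return target if target in VALID_ROUTES else "end"


print(safe_route({"next_action": "action"}))
print(safe_route({"next_action": "oops"}))
```

Ending the run on an unknown value is a design choice; raising an error instead would surface bugs sooner during development.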

Building and Executing the Graph

Now we assemble all the pieces into a LangGraph workflow. This is where the magic happens!

    # Build the graph
    workflow = StateGraph(AgentState)
    workflow.add_node("reasoning", reasoning_node)
    workflow.add_node("action", action_node)

    # Define edges
    workflow.set_entry_point("reasoning")
    workflow.add_conditional_edges("reasoning", route, {
        "action": "action",
        "end": END
    })
    workflow.add_edge("action", "reasoning")

    # Compile and run
    app = workflow.compile()

    # Execute
    result = app.invoke({"messages": ["User: Tell me about Tokyo and Japan"],
                         "iterations": 0, "next_action": ""})

    # Print the conversation flow
    print("\n=== ReAct Loop Output ===")
    for msg in result["messages"]:
        print(msg)

Understanding the Graph Structure:

- Add Nodes: We register our reasoning and action functions as nodes.

- Set Entry Point: The graph always starts at the reasoning node.

- Add Conditional Edges: Based on the reasoning node’s decision:

  - If next_action == "action" → go to the action node.

  - If next_action == "end" → stop execution.

- Add Fixed Edge: After action completes, always return to reasoning.

The app.invoke() call kicks off this entire process.

Output:

    === ReAct Loop Output ===
    User: Tell me about Tokyo and Japan

    Thought: I need to check Tokyo weather
    Action: Searched for 'weather tokyo'
    Observation: Tokyo weather: 18°C, partly cloudy

    Thought: Now I need Japan's population
    Action: Searched for 'population japan'
    Observation: Japan population: approximately 125 million

    Thought: I have enough info to answer

Now let’s see how LLM-powered reasoning makes this pattern truly dynamic.

Part 2: LLM-Powered ReAct Agent

Now that you understand the mechanics, let’s build a real ReAct agent that uses an LLM to make intelligent decisions.

Why Use an LLM?

The hardcoded version works, but it’s inflexible: it can only handle the exact scenario we programmed. An LLM-powered agent can:

- Understand different types of questions.

- Decide dynamically what information to gather.

- Adapt its reasoning based on what it learns.

Key Difference

Instead of hardcoded if/else logic, we’ll prompt the LLM to decide what to do next. The LLM becomes the “reasoning engine” of our agent.

Setting Up the LLM Environment

We’ll use OpenAI’s GPT-4o as our reasoning engine, but you could use any LLM (Anthropic, open-source models, etc.).

    from langgraph.graph import StateGraph, END
    from typing import TypedDict, Annotated
    import operator
    import os
    from openai import OpenAI

    client = OpenAI(api_key=os.environ.get("OPENAI_API_KEY"))

    class AgentStateLLM(TypedDict):
        messages: Annotated[list, operator.add]
        next_action: str
        iteration_count: int

New State Definition:

AgentStateLLM is similar to AgentState, but we’ve renamed it to distinguish between the two examples. The structure is the same apart from the counter, which is now called iteration_count: we still track messages, the next action, and iterations.

The LLM Tool: Gathering Information

Instead of a mock search, we’ll let the LLM answer queries using its own knowledge. This demonstrates how you can turn an LLM into a tool!

    def llm_tool(query: str) -> str:
        """Let the LLM answer the query directly using its knowledge"""
        response = client.chat.completions.create(
            model="gpt-4o",
            max_tokens=150,
            messages=[{"role": "user", "content": f"Answer this query briefly: {query}"}]
        )
        return response.choices[0].message.content.strip()

This function makes a simple API call to GPT-4o with the query. The LLM answers from its own knowledge, and our agent uses that answer in its reasoning. Because max_tokens is set to 150, longer answers are cut off mid-sentence, as you’ll see in the output later.

Note: In production, you might combine this with web search, databases, or other tools for more accurate, up-to-date information.
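One simple way to combine several sources is to try them in order and take the first answer. The sketch below is only an illustration of that idea; `first_answer`, `local_facts`, and `fallback_answer` are hypothetical stand-ins, and a real agent would plug in a search client, a database query, or llm_tool instead.

```python
# Sketch: try tools in order and use the first one that returns an answer.
# The tool functions below are stand-ins; a real agent would plug in a web
# search client, a database query, or llm_tool here.
from typing import Callable, Optional

Tool = Callable[[str], Optional[str]]


def local_facts(query: str) -> Optional[str]:
    facts = {"population japan": "Japan population: approximately 125 million"}
    return facts.get(query.lower())  # None when the fact is not stored


def fallback_answer(query: str) -> Optional[str]:
    return f"No stored answer for: {query}"


def first_answer(query: str, tools: list[Tool]) -> str:
    for tool in tools:
        answer = tool(query)
        if answer is not None:
            return answer
    return "No tool could answer"


print(first_answer("Population Japan", [local_facts, fallback_answer]))
print(first_answer("weather tokyo", [local_facts, fallback_answer]))
```

Ordering the list from most to least trustworthy source keeps the cheaper, more reliable lookups ahead of the general-purpose fallback.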

LLM-Powered Reasoning: The Core Innovation

This is where ReAct truly shines. Instead of hardcoded logic, we prompt the LLM to decide what information to gather next.

    def reasoning_node_llm(state: AgentStateLLM):
        iteration_count = state.get("iteration_count", 0)
        if iteration_count >= 3:
            return {"messages": ["Thought: I have gathered enough information"],
                    "next_action": "end", "iteration_count": iteration_count}

        history = "\n".join(state["messages"])
        prompt = f"""You are an AI agent answering: "Tell me about Tokyo and Japan"

    Conversation so far:
    {history}

    Queries completed: {iteration_count}/3

    You MUST make exactly 3 queries to gather information.
    Respond ONLY with: QUERY:

    Do NOT be conversational. Do NOT thank the user.
    ONLY output: QUERY:"""

        decision = client.chat.completions.create(
            model="gpt-4o",
            max_tokens=100,
            messages=[{"role": "user", "content": prompt}]
        ).choices[0].message.content.strip()

        if decision.startswith("QUERY:"):
            return {"messages": [f"Thought: {decision}"],
                    "next_action": "action", "iteration_count": iteration_count}
        return {"messages": [f"Thought: {decision}"],
                "next_action": "end", "iteration_count": iteration_count}

How This Works:

- Context Building: We include the conversation history so the LLM knows what’s already been gathered.

- Structured Prompting: We give clear instructions to output in a specific format (QUERY:).

- Iteration Control: We enforce a maximum of 3 queries to prevent infinite loops.

- Decision Parsing: We check if the LLM wants to take action or finish.
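The decision-parsing step can be made a little stricter than a plain prefix check. The sketch below is one possible approach, assuming you also want to tolerate lowercase markers and reject an empty query; `parse_decision` is a hypothetical helper, not part of the code above.

```python
# Sketch of stricter parsing for the LLM's reply. parse_decision is a
# hypothetical helper, not part of the article's code.
def parse_decision(decision: str) -> tuple[str, str]:
    """Return (next_action, query). An empty query means 'finish'."""
    text = decision.strip()
    prefix = "QUERY:"
    if text.upper().startswith(prefix):
        query = text[len(prefix):].strip()
        if query:  # "QUERY:" with nothing after it is unusable
            return "action", query
    return "end", ""


print(parse_decision("QUERY: What is Tokyo known for?"))
print(parse_decision("Thanks for asking!"))
```

Returning the query together with the routing decision means the action node would not need to re-parse the message text.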

The Prompt Strategy:

The prompt tells the LLM:

- What question it’s trying to answer

- What information has been gathered so far

- How many queries it’s allowed to make

- Exactly how to format its response

- Not to be conversational

LLMs are trained to be helpful and chatty. For agent workflows, we need concise, structured outputs. The “do not be conversational” directive keeps responses focused on the task.

Executing the Action

The action node works similarly to the hardcoded version, but now it processes the LLM’s dynamically generated query.

    def action_node_llm(state: AgentStateLLM):
        last_thought = state["messages"][-1]
        query = last_thought.replace("Thought: QUERY:", "").strip()
        result = llm_tool(query)
        return {"messages": [f"Action: query('{query}')", f"Observation: {result}"],
                "next_action": "reasoning",
                "iteration_count": state.get("iteration_count", 0) + 1}

The Process:

- Extract the query from the LLM’s reasoning (removing the “Thought: QUERY:” prefix).

- Execute the query using our llm_tool.

- Record both the action and observation.

- Route back to reasoning for the next decision.

Notice how this is more flexible than the hardcoded version: the agent can ask for any information it thinks is relevant!
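One possible refinement, assuming Python 3.9 or newer, is to strip the marker only from the start of the string, so a query that happens to contain the same text is left intact. `extract_query` is a hypothetical helper name.

```python
# Sketch: strip only the leading marker, leaving the rest of the query intact.
# extract_query is a hypothetical helper; str.removeprefix needs Python 3.9+.
def extract_query(last_thought: str) -> str:
    return last_thought.removeprefix("Thought: QUERY:").strip()


print(extract_query("Thought: QUERY: What is the history of Tokyo?"))
```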

Building the LLM-Powered Graph

The graph structure is identical to Part 1, but now the reasoning node uses LLM intelligence instead of hardcoded rules.

    workflow_llm = StateGraph(AgentStateLLM)
    workflow_llm.add_node("reasoning", reasoning_node_llm)
    workflow_llm.add_node("action", action_node_llm)
    workflow_llm.set_entry_point("reasoning")
    workflow_llm.add_conditional_edges(
        "reasoning",
        lambda s: s["next_action"],
        {"action": "action", "end": END},
    )
    workflow_llm.add_edge("action", "reasoning")

    app_llm = workflow_llm.compile()
    result_llm = app_llm.invoke({
        "messages": ["User: Tell me about Tokyo and Japan"],
        "next_action": "",
        "iteration_count": 0
    })

    print("\n=== LLM-Powered ReAct (No Mock Data) ===")
    for msg in result_llm["messages"]:
        print(msg)

What’s Different:

- Same graph topology (reasoning ↔ action with conditional routing).

- Same state management approach.

- Only the node logic changed: reasoning moved from if/else to LLM prompting, and the action node now calls llm_tool instead of the mock search.

This demonstrates the power of LangGraph: you can swap components while keeping the workflow structure intact!
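The swap can be made explicit by building the reasoning node from an injected decision function. This is a sketch under the assumption that both variants share the same state shape; `make_reasoning_node` and `scripted_decide` are hypothetical names, and an LLM-backed callable with the same signature could be passed in instead.

```python
# Sketch: build the reasoning node from an injected "decide" callable, so
# the same node shape works with hardcoded rules or an LLM call.
# make_reasoning_node and scripted_decide are hypothetical names.
from typing import Callable


def make_reasoning_node(decide: Callable[[list], str], max_queries: int = 3):
    def node(state: dict) -> dict:
        count = state.get("iteration_count", 0)
        if count >= max_queries:
            return {"messages": ["Thought: I have gathered enough information"],
                    "next_action": "end", "iteration_count": count}
        decision = decide(state["messages"])
        next_action = "action" if decision.startswith("QUERY:") else "end"
        return {"messages": [f"Thought: {decision}"],
                "next_action": next_action, "iteration_count": count}
    return node


def scripted_decide(messages: list) -> str:
    # Hardcoded stand-in; an LLM-backed callable would have the same signature.
    return "QUERY: weather tokyo"


node = make_reasoning_node(scripted_decide)
print(node({"messages": ["User: hi"], "iteration_count": 0}))
```

Because the factory returns an ordinary function, it can be registered as a node in exactly the same way as the handwritten reasoning nodes, and the scripted version makes the graph testable without API calls.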

The Output:

You’ll see the agent autonomously decide what information to gather. Each iteration shows:

- Thought: What the LLM decided to ask about.

- Action: The query being executed.

- Observation: The information gathered.

Watch how the LLM strategically gathers information to build a complete answer!

    === LLM-Powered ReAct (No Mock Data) ===
    User: Tell me about Tokyo and Japan

    Thought: QUERY: What is the history and significance of Tokyo in Japan?

    Action: query('What is the history and significance of Tokyo in Japan?')

    Observation: Tokyo, originally known as Edo, has a rich history and significant role in Japan. It began as a small fishing village until Tokugawa Ieyasu established it as the center of his shogunate in 1603, marking the start of the Edo period. During this time, Edo flourished as a political and cultural hub, becoming one of the world's largest cities by the 18th century.

    In 1868, after the Meiji Restoration, the emperor moved from Kyoto to Edo, renaming it Tokyo, meaning "Eastern Capital". This transformation marked the beginning of Tokyo's modernization and rapid development. Over the 20th century, Tokyo faced challenges, including the Great Kanto Earthquake in 1923 and heavy bombings

    Thought: QUERY: What are the major cultural and economic contributions of Tokyo to Japan?

    Action: query('What are the major cultural and economic contributions of Tokyo to Japan?')

    Observation: Tokyo, as the capital of Japan, is a major cultural and economic powerhouse. Culturally, Tokyo is a hub for traditional and contemporary arts, including theater, music, and visual arts. The city is home to numerous museums, galleries, and cultural sites such as the Tokyo National Museum, Senso-ji Temple, and the Meiji Shrine. It also hosts international events like the Tokyo International Film Festival and various fashion weeks, contributing to its reputation as a global fashion and cultural center.

    Economically, Tokyo is one of the world's leading financial centers. It hosts the Tokyo Stock Exchange, one of the largest stock exchanges globally, and is the headquarters for numerous multinational corporations. The city's advanced infrastructure and innovation in technology and industry make it a focal

    Thought: QUERY: What are the key historical and cultural aspects of Japan as a whole?

    Action: query('What are the key historical and cultural aspects of Japan as a whole?')

    Observation: Japan boasts a rich tapestry of historical and cultural aspects, shaped by centuries of development. Historically, Japan's culture was influenced by its isolation as an island nation, leading to a unique blend of indigenous practices and foreign influences. Key historical periods include the Jomon and Yayoi eras, characterized by early settlement and culture, and the subsequent periods of imperial rule and samurai governance, such as the Heian, Kamakura, and Edo periods. These periods fostered developments like the tea ceremony, calligraphy, and kabuki theater.

    Culturally, Japan is known for its Shinto and Buddhist traditions, which coexist seamlessly. Its aesthetic principles emphasize simplicity and nature, reflected in traditional architecture, gardens, and arts such as ukiyo-e prints and later

    Thought: I have gathered enough information

Wrapping Up

You’ve now built two ReAct agents with LangGraph: one with hardcoded logic to learn the mechanics, and one powered by an LLM that makes dynamic decisions.

The key insight? LangGraph lets you separate your workflow structure from the intelligence that drives it. The graph topology stayed the same between Part 1 and Part 2, but swapping hardcoded logic for LLM reasoning transformed a rigid script into an adaptive agent.

From here, you can extend these concepts by adding real tools (web search, calculators, databases), implementing tool selection logic, or even building multi-agent systems where multiple ReAct agents collaborate.
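As a starting point for tool selection, the reasoning step could name a tool and a small registry could dispatch to it. The sketch below is a rough illustration only: the "TOOL: name | input" format, the `run_tool` helper, and both tools are assumptions invented for this example.

```python
# Sketch of tool selection: the reasoning step names a tool, and a registry
# maps that name to a function. The "TOOL: name | input" format and both
# tools are assumptions made for this example.
def calculator(expression: str) -> str:
    # Handles only "number operator number", e.g. "2 * 3".
    a, op, b = expression.split()
    x, y = float(a), float(b)
    results = {"+": x + y, "-": x - y, "*": x * y}
    return str(results[op])


def search(query: str) -> str:
    return f"(search results for '{query}')"


TOOLS = {"calculator": calculator, "search": search}


def run_tool(decision: str) -> str:
    header, _, tool_input = decision.partition("|")
    name = header.replace("TOOL:", "").strip()
    tool = TOOLS.get(name)
    if tool is None:
        return f"Unknown tool: {name}"
    return tool(tool_input.strip())


print(run_tool("TOOL: calculator | 2 * 3"))
print(run_tool("TOOL: search | tokyo weather"))
```

An action node built this way would return the tool’s result as the observation, and the prompt would need to list the available tool names so the LLM can choose among them.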