The Cohere Embed 4 multimodal embeddings model is now available as a fully managed, serverless option in Amazon Bedrock. Customers can choose between cross-Region inference (CRIS) or Global cross-Region inference to manage unplanned traffic bursts by using compute resources across different AWS Regions. Real-time information requests and time zone concentrations are examples of events that can cause inference demand to exceed anticipated traffic.

The new Embed 4 model on Amazon Bedrock is purpose-built for analyzing business documents. The model delivers leading multilingual capabilities and shows notable improvements over Embed 3 across the key benchmarks, making it ideal for use cases such as enterprise search.

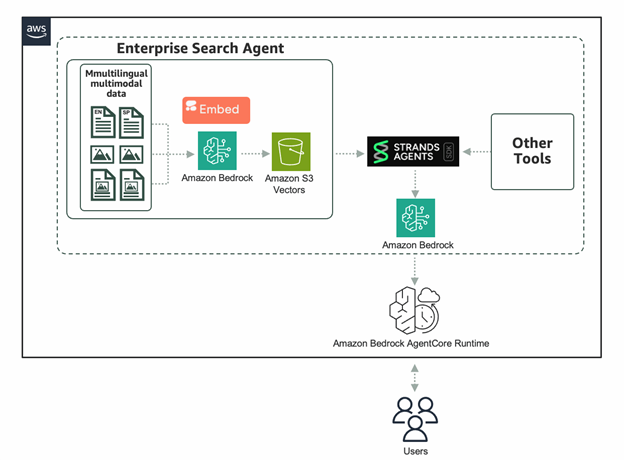

In this post, we dive into the benefits and unique capabilities of Embed 4 for enterprise search use cases. We’ll show you how to quickly get started using Embed 4 on Amazon Bedrock, taking advantage of integrations with Strands Agents, S3 Vectors, and Amazon Bedrock AgentCore to build powerful agentic retrieval-augmented generation (RAG) workflows.

Embed 4 advances multimodal embedding capabilities by natively supporting complex business documents that combine text, images, and interleaved text and images into a unified vector representation. Embed 4 handles up to 128,000 tokens, minimizing the need for tedious document splitting and preprocessing pipelines. Embed 4 also offers configurable compressed embeddings that reduce vector storage costs by up to 83% (Introducing Embed 4: Multimodal search for business). Together with multilingual understanding across over 100 languages, enterprises in regulated industries such as finance, healthcare, and manufacturing can efficiently process unstructured documents, accelerating insight extraction for optimized RAG systems. Read about Embed 4 in this launch blog from July 2025 to explore how to deploy on Amazon SageMaker JumpStart.

Embed 4 can be integrated into your applications using the InvokeModel API, and here’s an example of how to use the AWS SDK for Python (Boto3) with Embed 4:

For text-only input:
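A minimal sketch of a text-only request, not a definitive implementation. It assumes the model ID `cohere.embed-v4:0`, the `us-east-1` Region, the request fields `texts`, `input_type`, and `embedding_types`, and a response of the form `{"embeddings": {"float": [...]}}`. Confirm these against the Amazon Bedrock User Guide before relying on them. The helper names `build_text_request` and `embed_texts` are hypothetical.

```python
import json

MODEL_ID = "cohere.embed-v4:0"  # assumed model ID; verify in the Bedrock console


def build_text_request(texts, input_type="search_document"):
    """Build the JSON body for a text-only embedding request (assumed field names)."""
    return json.dumps({
        "texts": list(texts),
        "input_type": input_type,
        "embedding_types": ["float"],
    })


def embed_texts(texts, client=None, input_type="search_document"):
    """Call InvokeModel and return one float vector per input text."""
    if client is None:
        import boto3  # imported lazily so the request builder works without AWS access
        client = boto3.client("bedrock-runtime", region_name="us-east-1")
    response = client.invoke_model(
        modelId=MODEL_ID,
        body=build_text_request(texts, input_type),
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())
    # Assumed response shape: {"embeddings": {"float": [[...], ...]}}
    return payload["embeddings"]["float"]
```

Calling `embed_texts(["Quarterly revenue rose on higher net interest income."])` would return a list with one vector. Use `input_type="search_query"` when embedding a user query rather than a document.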

For mixed-modality input:
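A hedged sketch of how an interleaved text-and-image request body might be assembled. The `inputs` / `content` layout and the `image_url` object with a `url` data URI are assumptions modeled on Cohere's documented request shape, so check the Amazon Bedrock User Guide for the exact schema. The function names are hypothetical.

```python
import base64
import json


def image_data_uri(image_bytes, mime_type="image/png"):
    """Encode raw image bytes as a base64 data URI."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def build_mixed_request(text, image_bytes, input_type="search_document"):
    """Build a request body holding one interleaved text + image input (assumed schema)."""
    document = {
        "content": [
            {"type": "text", "text": text},
            # Assumed field layout for images; verify before use.
            {"type": "image_url", "image_url": {"url": image_data_uri(image_bytes)}},
        ]
    }
    return json.dumps({
        "inputs": [document],
        "input_type": input_type,
        "embedding_types": ["float"],
    })
```

The resulting string can be passed as the `body` argument of the Boto3 `invoke_model` call on a `bedrock-runtime` client, in the same way as a text-only request.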

For more details, see the Amazon Bedrock User Guide for Cohere Embed 4.

Enterprise search use case

In this section, we focus on using Embed 4 for an enterprise search use case in the finance industry. Embed 4 unlocks a range of capabilities for enterprises seeking to:

- Streamline information discovery

- Enhance generative AI workflows

- Optimize storage efficiency

Amazon Bedrock provides a fully serverless environment for using foundation models, which removes infrastructure management and simplifies integration with other Amazon Bedrock capabilities. See more details for other possible use cases with Embed 4.

Solution overview

With the serverless experience available in Amazon Bedrock, you can get started quickly without spending too much effort on infrastructure management. In the following sections, we show how to get started with Cohere Embed 4. Embed 4 is already designed with storage efficiency in mind.

We choose Amazon S3 Vectors for storage because it is cost-optimized, AI-ready storage with native support for storing and querying vectors at scale. S3 Vectors can store billions of vector embeddings with sub-second query latency, reducing total costs by up to 90% compared to traditional vector databases. We use the extensible Strands Agents SDK to simplify agent development and take advantage of model choice flexibility. We also use Bedrock AgentCore because it provides a fully managed, serverless runtime specifically built to handle dynamic, long-running agentic workloads with industry-leading session isolation, security, and real-time monitoring.

Prerequisites

To get started with Embed 4, verify you have the following prerequisites in place:

- IAM permissions: Configure your IAM role with the necessary Amazon Bedrock permissions, or generate API keys through the console or SDK for testing. For more information, see Amazon Bedrock API keys.

- Strands SDK installation: Install the required SDK in your development environment. For more information, see the Strands quickstart guide.

- S3 Vectors configuration: Create an S3 vector bucket and vector index for storing and querying vector data. For more information, see the getting started with S3 Vectors tutorial.
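The S3 Vectors prerequisite can be sketched with Boto3 as follows. This is an illustration under stated assumptions: the bucket and index names are placeholders you supply, the default `dimension` of 1536 assumes you keep Embed 4's full-size output, and cosine distance is one reasonable choice rather than a requirement. The function name `create_vector_store` is hypothetical.

```python
def create_vector_store(bucket_name, index_name, dimension=1536, client=None):
    """Create an S3 vector bucket and a cosine-distance index inside it."""
    if client is None:
        import boto3  # imported lazily; needs a boto3 release that includes S3 Vectors
        client = boto3.client("s3vectors")
    client.create_vector_bucket(vectorBucketName=bucket_name)
    client.create_index(
        vectorBucketName=bucket_name,
        indexName=index_name,
        dataType="float32",
        dimension=dimension,  # must match the length of the embeddings you store
        distanceMetric="cosine",
    )
    return {"bucket": bucket_name, "index": index_name, "dimension": dimension}
```

If you request a smaller output size from Embed 4 to save storage, pass the same value as `dimension` so the index and the embeddings agree.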

Initialize Strands agents

The Strands Agents SDK offers an open source, modular framework that streamlines the development, integration, and orchestration of AI agents. With the flexible architecture, developers can build reusable agent components and create custom tools with ease. The system supports multiple models, giving users the freedom to select optimal solutions for their specific use cases. Models can be hosted on Amazon Bedrock, Amazon SageMaker, or elsewhere.

For example, Cohere Command A is a generative model with 111B parameters and a 256K context length. The model excels at tool use, which can extend baseline functionality while avoiding unnecessary tool calls. The model is also suitable for multilingual tasks and RAG tasks such as manipulating numerical information in financial settings. When paired with Embed 4, which is purpose-built for highly regulated sectors like financial services, this combination delivers substantial competitive benefits through its adaptability.

We begin by defining a tool that a Strands agent can use. The tool searches for documents stored in S3 using semantic similarity. It first converts the user’s query into vectors with Cohere Embed 4. It then returns the most relevant documents by querying the embeddings stored in the S3 vector bucket. The code below shows only the inference portion. Embeddings created from the financial documents were stored in an S3 vector bucket before querying.
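The two steps described above (embed the query, then query the index) can be sketched as a plain Python function. This is a sketch under stated assumptions, not the original tool: the model ID `cohere.embed-v4:0`, the request and response field names for Embed 4, and the function name `search_documents` are assumptions, and the bucket and index names are placeholders. The S3 Vectors call uses the `query_vectors` operation from Boto3.

```python
import json


def search_documents(query, bedrock_client, s3vectors_client,
                     bucket_name, index_name, top_k=3):
    """Embed the query with Embed 4, then return the nearest stored documents."""
    # Step 1: turn the query text into a vector (assumed request/response fields).
    response = bedrock_client.invoke_model(
        modelId="cohere.embed-v4:0",
        body=json.dumps({
            "texts": [query],
            "input_type": "search_query",
            "embedding_types": ["float"],
        }),
    )
    query_vector = json.loads(response["body"].read())["embeddings"]["float"][0]

    # Step 2: nearest-neighbor lookup against the S3 vector index.
    result = s3vectors_client.query_vectors(
        vectorBucketName=bucket_name,
        indexName=index_name,
        queryVector={"float32": query_vector},
        topK=top_k,
        returnDistance=True,
        returnMetadata=True,
    )
    return [
        {
            "key": match["key"],
            "distance": match.get("distance"),
            "metadata": match.get("metadata", {}),
        }
        for match in result["vectors"]
    ]
```

To expose this to an agent, one option is to wrap it in a single-argument function decorated with `tool` from the `strands` package, binding the two Boto3 clients (`bedrock-runtime` and `s3vectors`) and the bucket and index names inside the wrapper.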

We then define a financial research agent that can use the tool to search financial documents. As your use case becomes more complex, more agents can be added for specialized tasks.
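A minimal sketch of such an agent definition, assuming the `strands-agents` package is installed and that `Agent` accepts `tools`, `system_prompt`, and an optional `model`. The system prompt text and the function name `build_research_agent` are hypothetical, written for illustration only.

```python
# Hypothetical prompt text; adapt it to your own use case.
SYSTEM_PROMPT = (
    "You are a financial research assistant. Use the document search tool "
    "to ground every answer in the retrieved financial documents."
)


def build_research_agent(tools, model=None):
    """Create a Strands agent that can call the supplied tools."""
    from strands import Agent  # imported lazily; requires the strands-agents package

    kwargs = {"system_prompt": SYSTEM_PROMPT, "tools": list(tools)}
    if model is not None:
        # For example, a model object configured for Cohere Command A.
        kwargs["model"] = model
    return Agent(**kwargs)
```

When `model` is omitted, the agent is created with whatever default model Strands is configured to use. Pass a model object explicitly to pin a specific choice.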

Simply using the tool returns the following results. Multilingual financial documents are ranked by semantic similarity to the query about comparing earnings growth rates. An agent can use this information to generate useful insights.

The example above relies on the QueryVectors API operation for S3 Vectors, which can work well for small documents. This approach can be improved to handle large and complex enterprise documents using sophisticated chunking and reranking techniques. Sentence boundaries can be used to create document chunks to preserve semantic coherence. The document chunks are then used to generate embeddings. The following API call passes the same query to the Strands agent:
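A short sketch of that call, assuming a Strands agent object that is invoked like a function with a prompt string and whose result converts to text with `str()`. The helper name `ask_agent` and the sample question are hypothetical.

```python
def ask_agent(agent, question):
    """Send one question to an agent and return its answer as plain text."""
    result = agent(question)  # Strands agents are callable with a prompt string
    return str(result)
```

For example, `ask_agent(agent, "Compare the earnings growth rates reported in these documents.")` would trigger the search tool when the agent decides it needs supporting documents.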

The Strands agent uses the search tool we defined to generate an answer for the query about comparing earnings growth rates. The final answer considers the results returned from the search tool:

A custom tool like the S3 Vectors search function used in this example is just one of many possibilities. With Strands, it’s straightforward to develop and orchestrate autonomous agents, while Bedrock AgentCore serves as the managed deployment system to host and scale those Strands agents in production.
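The sentence-boundary chunking mentioned earlier as an improvement for large documents can be sketched in a few lines. This is one simple approach and not necessarily the one used for this post: it splits on whitespace that follows `.`, `!`, or `?`, then packs whole sentences into chunks up to an assumed `max_chars` budget. The function name and the default budget are hypothetical.

```python
import re

# Split wherever whitespace follows sentence-ending punctuation.
_SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def chunk_by_sentences(text, max_chars=1000):
    """Group whole sentences into chunks no longer than max_chars where possible."""
    sentences = [s.strip() for s in _SENTENCE_END.split(text) if s.strip()]
    chunks, current = [], ""
    for sentence in sentences:
        candidate = f"{current} {sentence}".strip()
        if current and len(candidate) > max_chars:
            # Adding this sentence would exceed the budget: close the chunk.
            chunks.append(current)
            current = sentence
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks
```

A single sentence longer than `max_chars` is kept intact as its own chunk, and abbreviations such as "Inc." are treated as sentence ends, so production pipelines may want a proper sentence tokenizer. Each chunk would then be embedded and stored as its own vector.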

Deploy to Amazon Bedrock AgentCore

Once an agent is built and tested, it is ready to be deployed. AgentCore Runtime is a secure and serverless runtime purpose-built for deploying and scaling dynamic AI agents. Use the starter toolkit to automatically create the IAM execution role, container image, and Amazon Elastic Container Registry repository to host an agent in AgentCore Runtime. You can define multiple tools available to your agent. In this example, we use the Strands agent powered by Embed 4:
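A hedged sketch of an AgentCore Runtime entrypoint, assuming the `bedrock-agentcore` package exposes `BedrockAgentCoreApp` with an `entrypoint` decorator and a `run()` method, and that requests arrive as a dictionary with a `prompt` key. The payload shape and the helper names `handle_request` and `create_app` are assumptions.

```python
def handle_request(agent, payload):
    """Run the agent on the prompt carried in an invocation payload."""
    prompt = payload.get("prompt", "").strip()
    if not prompt:
        return {"error": "Missing 'prompt' in request payload."}
    return {"result": str(agent(prompt))}


def create_app(agent):
    """Wrap an agent in an AgentCore Runtime application."""
    # Imported lazily; requires the bedrock-agentcore package.
    from bedrock_agentcore.runtime import BedrockAgentCoreApp

    app = BedrockAgentCoreApp()

    @app.entrypoint
    def invoke(payload):
        return handle_request(agent, payload)

    return app
```

In the entrypoint file, you would typically assign `app = create_app(agent)` at module level and call `app.run()` under an `if __name__ == "__main__":` guard, then let the starter toolkit package and launch that file.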

Clean up

To avoid incurring unnecessary costs when you’re done, empty and delete the S3 vector buckets you created, shut down applications that can make requests to the Amazon Bedrock APIs, and delete the launched AgentCore Runtimes and their associated ECR repositories.

For more information, see this documentation to delete a vector index and this documentation to delete a vector bucket, and see this step for removing resources created by the Bedrock AgentCore starter toolkit.
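The S3 Vectors part of the cleanup can be sketched with Boto3 as follows, assuming a single index per bucket. The bucket and index names are placeholders and the function name `delete_vector_store` is hypothetical. The index is removed first because the bucket is expected to be empty before it can be deleted.

```python
def delete_vector_store(bucket_name, index_name, client=None):
    """Delete a vector index, then the vector bucket that contained it."""
    if client is None:
        import boto3  # imported lazily; needs a boto3 release that includes S3 Vectors
        client = boto3.client("s3vectors")
    # Remove the index (and the vectors stored in it) before the bucket.
    client.delete_index(vectorBucketName=bucket_name, indexName=index_name)
    client.delete_vector_bucket(vectorBucketName=bucket_name)
```

If a bucket holds several indexes, delete each one before deleting the bucket.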

Conclusion

Embed 4 on Amazon Bedrock is beneficial for enterprises aiming to unlock the value of their unstructured, multimodal data. With support for up to 128,000 tokens, compressed embeddings for cost efficiency, and multilingual capabilities across 100+ languages, Embed 4 provides the scalability and precision required for enterprise search at scale.

Embed 4 has advanced capabilities that are optimized with domain-specific understanding of data from regulated industries such as finance, healthcare, and manufacturing. When combined with S3 Vectors for cost-optimized storage, Strands Agents for agent orchestration, and Bedrock AgentCore for deployment, organizations can build secure, high-performing agentic workflows without the overhead of managing infrastructure. Check the full Region list for future updates.

To learn more, check out the Cohere in Amazon Bedrock product page and the Amazon Bedrock pricing page. If you’re interested in diving deeper, check out the code sample and the Cohere on AWS GitHub repository.

About the authors

James Yi is a Senior AI/ML Partner Solutions Architect at AWS. He spearheads AWS’s strategic partnerships in Emerging Technologies, guiding engineering teams to design and develop cutting-edge joint solutions in generative AI. He enables field and technical teams to seamlessly deploy, operate, secure, and integrate partner solutions on AWS. James collaborates closely with business leaders to define and execute joint Go-To-Market strategies, driving cloud-based business growth. Outside of work, he enjoys playing soccer, traveling, and spending time with his family.

Nirmal Kumar is Sr. Product Manager for the Amazon SageMaker service. Committed to broadening access to AI/ML, he steers the development of no-code and low-code ML solutions. Outside work, he enjoys travelling and reading non-fiction.

Hugo Tse is a Solutions Architect at AWS, with a focus on Generative AI and Storage solutions. He’s dedicated to empowering customers to overcome challenges and unlock new business opportunities using technology. He holds a Bachelor of Arts in Economics from the University of Chicago and a Master of Science in Information Technology from Arizona State University.

Mehran Najafi, PhD, serves as AWS Principal Solutions Architect and leads the Generative AI Solution Architects team for AWS Canada. His expertise lies in ensuring the scalability, optimization, and production deployment of multi-tenant generative AI solutions for enterprise customers.

Sagar Murthy is an agentic AI GTM leader at AWS who enjoys collaborating with frontier foundation model partners, agentic frameworks, startups, and enterprise customers to evangelize AI and data innovations and open source solutions, and to enable impactful partnerships and launches, while building scalable GTM motions. Sagar brings a blend of technical solution and business acumen, holding a BE in Electronics Engineering from the University of Mumbai, an MS in Computer Science from Rochester Institute of Technology, and an MBA from UCLA Anderson School of Management.

Payal Singh is a Solutions Architect at Cohere with over 15 years of cross-domain expertise in DevOps, Cloud, Security, SDN, Data Center Architecture, and Virtualization. She drives partnerships at Cohere and helps customers with complex GenAI solution integrations.
