Extracting structured data from documents like invoices, receipts, and forms is a persistent business problem. Variations in format, layout, language, and vendor make standardization difficult, and manual data entry is slow, error-prone, and unscalable. Traditional optical character recognition (OCR) and rule-based systems often fall short in handling this complexity. For instance, a regional bank might need to process thousands of disparate documents (loan applications, tax returns, pay stubs, and IDs) where manual methods create bottlenecks and increase the risk of error. Intelligent document processing (IDP) aims to solve these challenges by using AI to classify documents, extract or derive relevant information, and validate the extracted data for use in business processes. One of its core goals is to convert unstructured or semi-structured documents into usable, structured formats such as JSON, which then contain specific fields, tables, or other structured target information. The target structure needs to be consistent so that it can be used in workflows, in other downstream business systems, or for reporting and insights generation. The following figure shows the workflow, which involves ingesting unstructured documents (for example, invoices from multiple vendors with varying layouts) and extracting relevant information. Despite differences in keywords, column names, or formats across documents, the system normalizes and outputs the extracted data into a consistent, structured JSON format.

Vision language models (VLMs) mark a significant advance in IDP. VLMs integrate large language models (LLMs) with specialized image encoders, creating multimodal AI capable of both textual reasoning and visual interpretation. Unlike traditional document processing tools, VLMs process documents more holistically, simultaneously analyzing text content, document layout, spatial relationships, and visual elements in a manner that more closely resembles human comprehension. This approach enables VLMs to extract meaning from documents with higher accuracy and better contextual understanding. For readers interested in exploring the foundations of this technology, Sebastian Raschka's post, Understanding Multimodal LLMs, offers an excellent primer on multimodal LLMs and their capabilities.

This post has four main sections that reflect the primary contributions of our work:

- An overview of the various IDP approaches available, including fine-tuning, which is our recommended solution and a scalable approach.

- Sample code for fine-tuning VLMs for document-to-JSON conversion using Amazon SageMaker AI and the SWIFT framework, a lightweight toolkit for fine-tuning various large models.

- An evaluation framework to assess performance on processing structured data.

- A discussion of the potential deployment options, including an explicit example for deploying the fine-tuned adapter.

SageMaker AI is a fully managed service to build, train, and deploy models at scale. In this post, we use SageMaker AI to fine-tune the VLMs and deploy them for both batch and real-time inference.

Prerequisites

Before you begin, make sure you have the following set up so that you can successfully follow the steps outlined in this post and the accompanying GitHub repository:

- AWS account: You need an active AWS account with permissions to create and manage resources in SageMaker AI, Amazon Simple Storage Service (Amazon S3), and Amazon Elastic Container Registry (Amazon ECR).

- IAM permissions: Your IAM user or role must have sufficient permissions. For production setups, follow the principle of least privilege as described in security best practices in IAM. For a sandbox setup, we suggest the following permissions:

  - Full access to Amazon SageMaker AI (for example, AmazonSageMakerFullAccess).

  - Read/write access to S3 buckets for storing datasets and model artifacts.

  - Permissions to push and pull Docker images from Amazon ECR (for example, AmazonEC2ContainerRegistryPowerUser).

  - If using specific SageMaker instance types, make sure that your service quotas are sufficient.

- GitHub repository: Clone or download the project code from our GitHub repository. This repository contains the notebooks, scripts, and Docker artifacts referenced in this post.

- Local environment setup:

  - Python: Python 3.10 or higher is recommended.

  - AWS CLI: Make sure that the AWS Command Line Interface (AWS CLI) is installed and configured with credentials that have the necessary permissions.

  - Docker: Docker must be installed and running on your local machine if you plan to build the custom Docker container for deployment.

  - Jupyter Notebook and Lab: To run the provided notebooks.

  - Install the required Python packages by running pip install -r requirements.txt from the cloned repository's root directory.

- Familiarity (recommended):

  - Basic understanding of Python programming.

  - Familiarity with AWS services, particularly SageMaker AI.

  - Conceptual knowledge of LLMs, VLMs, and container technology will be helpful.

Overview of document processing and generative AI approaches

There are varying degrees of autonomy in intelligent document processing. At one end of the spectrum are fully manual processes: humans read documents and enter the information into a form using a computer system. Most systems today are semi-autonomous document processing solutions: for example, a human takes a picture of a receipt and uploads it to a computer system that automatically extracts part of the information. The goal is to get to fully autonomous intelligent document processing systems. This means reducing the error rate and assessing the use case-specific risk of errors. AI is significantly transforming document processing by enabling greater levels of automation. A variety of approaches exist, ranging in complexity and accuracy, from specialized models for OCR to generative AI.

Specialized OCR models that don't rely on generative AI are designed as pre-trained, task-specific ML models that excel at extracting structured information such as tables, forms, and key-value pairs from common document types like invoices, receipts, and IDs. Amazon Textract is one example of this type of service. It offers high accuracy out of the box and requires minimal setup, making it well-suited for workloads where basic text extraction is required and documents don't vary significantly in structure or contain images.

However, as the complexity and variability of documents increase, and as multimodality is added, generative AI can help improve document processing pipelines.

While powerful, applying general-purpose VLMs or LLMs to document processing isn't straightforward. Effective prompt engineering is important to guide the model. Processing large volumes of documents (scaling) requires efficient batching and infrastructure. Because LLMs are stateless, providing historical context or specific schema requirements for every document can be cumbersome.

Approaches to intelligent document processing that use LLMs or VLMs fall into four categories:

- Zero-shot prompting: the foundation model (FM) receives the result of previous OCR or a PDF and the instructions to perform the document processing task.

- Few-shot prompting: the FM receives the result of previous OCR or a PDF, the instructions to perform the document processing task, and some examples.

- Retrieval-augmented few-shot prompting: similar to the preceding strategy, but the examples sent to the model are selected dynamically using Retrieval Augmented Generation (RAG).

- Fine-tuning VLMs

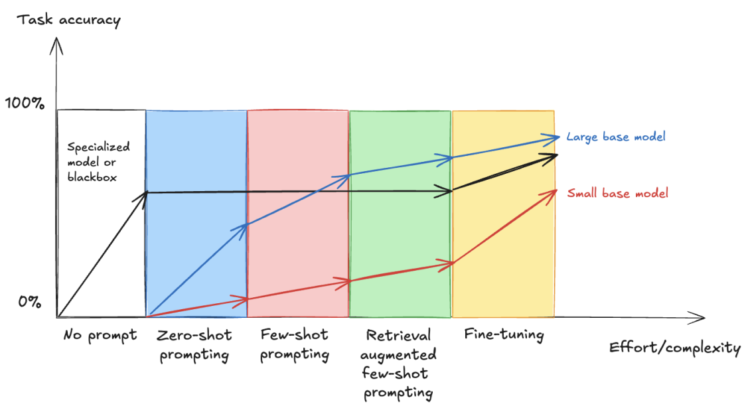

In the following, you can see the relationship between increasing effort and complexity and task accuracy, demonstrating how different techniques, from basic prompt engineering to advanced fine-tuning, impact the performance of large and small base models compared to a specialized solution (inspired by the blog post Evaluating LLM fine-tuning strategies).

As you move along the horizontal axis, the strategies grow in complexity, and as you move up the vertical axis, overall accuracy improves. In general, large base models provide better performance than small base models in the strategies that require prompt engineering. However, as we explain in the results of this post, fine-tuning small base models can deliver results comparable to fine-tuning large base models for a specific task.

Zero-shot prompting

Zero-shot prompting is a technique for using language models where the model is given a task without prior examples or fine-tuning. Instead, it relies solely on the prompt's wording and its pre-trained knowledge to generate a response. In document processing, this approach involves giving the model either an image of a PDF document, the OCR-extracted text from the PDF, or a structured markdown representation of the document, along with instructions to perform the document processing task and the desired output format.
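As a minimal sketch of what such a prompt could look like, the following Python function combines instructions, a desired output format, and OCR-extracted text, with no examples. The function name, the field names, and the sample text are hypothetical and are not taken from the accompanying repository.

```python
import json


def build_zero_shot_prompt(document_text: str, fields: list[str]) -> str:
    """Build a zero-shot prompt: instructions + output format + document, no examples."""
    # The empty schema tells the model which keys to return.
    schema = {field: None for field in fields}
    return (
        "Extract the following fields from the document below.\n"
        "Return only a JSON object with exactly these keys; "
        "use null for any field that is not present.\n\n"
        f"Expected JSON format:\n{json.dumps(schema, indent=2)}\n\n"
        f"Document:\n{document_text}"
    )


# Hypothetical OCR output for a single invoice.
ocr_text = "ACME Corp\nInvoice No. 1042\nTotal due: 118.00 EUR"
prompt = build_zero_shot_prompt(ocr_text, ["invoice_number", "seller_name", "total"])
print(prompt)
```

The same prompt text can be paired with a page image instead of OCR text when the model accepts image input.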

Amazon Bedrock Data Automation uses zero-shot prompting with generative AI to perform IDP. You can use Bedrock Data Automation to automate the transformation of multimodal data, including documents containing text and complex structures such as tables, charts, and images, into structured formats. You can customize the output by creating blueprints that specify output requirements using natural language or a schema editor. Bedrock Data Automation can also extract bounding boxes for the identified entities and route documents to the correct blueprint. These features can be configured and used through a single API, making it significantly more powerful than a basic zero-shot prompting approach.

While out-of-the-box VLMs can handle general OCR tasks effectively, they often struggle with the unique structure and nuances of custom documents, such as invoices from diverse vendors. Although crafting a prompt for a single document might be straightforward, the variability across hundreds of vendor formats makes prompt iteration a labor-intensive and time-consuming process.

Few-shot prompting

Moving to a more complex approach, few-shot prompting is a technique used with LLMs where a small number of examples are provided within the prompt to guide the model in completing a specific task. Unlike zero-shot prompting, which relies solely on natural language instructions, few-shot prompting improves accuracy and consistency by demonstrating the desired input-output behavior through examples.

One option is to use the Amazon Bedrock Converse API to perform few-shot prompting. The Converse API provides a consistent way to access LLMs using Amazon Bedrock. It supports turn-based messages between the user and the generative AI model, and allows including documents as part of the content. Another option is Amazon SageMaker JumpStart, which you can use to deploy models from providers like Hugging Face.
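To illustrate the turn-based structure, here is a minimal sketch that arranges annotated examples as alternating user and assistant turns before the new document. The helper names, sample documents, and inference settings are assumptions for illustration; the `converse` call is shown inside a function that is not executed here, and the model ID is left to the caller.

```python
import json

INSTRUCTION = "Extract the invoice fields as JSON."


def build_few_shot_messages(examples, new_document_text):
    """Turn (document_text, expected_json) pairs into user/assistant turns,
    then append the new document as the final user turn."""
    messages = []
    for document_text, expected in examples:
        messages.append({"role": "user", "content": [{"text": f"{INSTRUCTION}\n\n{document_text}"}]})
        messages.append({"role": "assistant", "content": [{"text": json.dumps(expected, sort_keys=True)}]})
    messages.append({"role": "user", "content": [{"text": f"{INSTRUCTION}\n\n{new_document_text}"}]})
    return messages


def extract_with_converse(model_id, messages, region_name="us-east-1"):
    """Send the messages to Amazon Bedrock (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the message builder works offline

    client = boto3.client("bedrock-runtime", region_name=region_name)
    response = client.converse(
        modelId=model_id,
        messages=messages,
        inferenceConfig={"temperature": 0.0, "maxTokens": 1024},
    )
    return response["output"]["message"]["content"][0]["text"]


# One hypothetical annotated example, followed by a new document.
examples = [("ACME Corp\nTotal due: 118.00", {"seller_name": "ACME Corp", "total": "118.00"})]
messages = build_few_shot_messages(examples, "Globex Ltd\nTotal due: 42.50")
print(json.dumps(messages, indent=2))
```

Every example adds input tokens to each request, which is the cost trade-off discussed in the following sections.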

However, your business most likely needs to process different types of documents (for example, invoices, contracts, and handwritten notes), and even within one document type there are many variations. For example, there is no single standardized invoice layout; instead, each vendor has its own layout that you cannot control. Finding one or a few examples that cover all the different documents you want to process is challenging.

Retrieval-augmented few-shot prompting

One way to address the challenge of finding the right examples is to dynamically retrieve previously processed documents as examples and add them to the prompt at runtime (RAG).

You can store a few annotated samples in a vector store and retrieve them based on the document that needs to be processed. Amazon Bedrock Knowledge Bases helps you implement the entire RAG workflow from ingestion to retrieval and prompt augmentation without having to build custom integrations to data sources and manage data flows.

This turns the intelligent document processing problem into a search problem, which comes with its own challenges around improving search accuracy. Beyond the question of how to scale to multiple types of documents, the few-shot approach is costly because every document processed requires a longer prompt with examples. This results in an increased number of input tokens.
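The retrieval step can be sketched in a few lines. The example below is a toy illustration only: it uses a bag-of-words vector and cosine similarity in place of a real embedding model and vector store, and the stored samples are invented. A production setup would typically use a managed service such as Amazon Bedrock Knowledge Bases instead.

```python
import math
from collections import Counter


def embed(text):
    """Toy bag-of-words vector (a stand-in for a real embedding model)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve_examples(query_text, store, k=2):
    """Return the k stored samples most similar to the incoming document."""
    query_vec = embed(query_text)
    ranked = sorted(store, key=lambda item: cosine(query_vec, embed(item["text"])), reverse=True)
    return ranked[:k]


# Hypothetical annotated samples from previously processed documents.
store = [
    {"vendor": "ACME", "text": "ACME Corp invoice number total due", "json": {"total": "118.00"}},
    {"vendor": "Globex", "text": "Globex rental contract tenant landlord", "json": {"tenant": "J. Doe"}},
    {"vendor": "Initech", "text": "Initech receipt cash paid change", "json": {"paid": "20.00"}},
]

best = retrieve_examples("ACME Corp invoice 2231 total due 90.00", store, k=1)
print(best[0]["vendor"])
```

The retrieved samples would then be inserted into the prompt as few-shot examples, so retrieval quality directly affects extraction quality.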

As shown in the preceding figure, the prompt context varies based on the strategy selected (zero-shot, few-shot, or few-shot with RAG), which in turn changes the results obtained.

Fine-tuning VLMs

At the end of the spectrum, you have the option to fine-tune a custom model to perform document processing. This is our recommended approach and what we focus on in this post. Fine-tuning is a method where a pre-trained LLM is further trained on a specific dataset to specialize it for a particular task or domain. In the context of document processing, fine-tuning involves using labeled examples, such as annotated invoices, contracts, or insurance forms, to teach the model exactly how to extract or interpret relevant information. Usually, the labor-intensive part of fine-tuning is acquiring a suitable, high-quality dataset. In the case of document processing, your company probably already has a historical dataset in its existing document processing system. You can export this data from your document processing system (for example, from your enterprise resource planning (ERP) system) and use it as the dataset for fine-tuning. We present this fine-tuning approach as a scalable, high-accuracy, and cost-effective way to perform intelligent document processing.

The preceding approaches represent a spectrum of strategies to improve LLM performance along two axes: LLM optimization (shaping model behavior through prompt engineering or fine-tuning) and context optimization (enhancing what the model knows at inference through techniques such as few-shot learning or RAG). These methods can be combined (for example, using RAG with few-shot prompts or incorporating retrieved data into fine-tuning) to maximize accuracy.

Fine-tuning VLMs for document-to-JSON conversion

Our approach, the recommended solution for cost-effective document-to-JSON conversion, uses a VLM and fine-tunes it on a dataset of historical documents paired with their corresponding ground-truth JSON, which we treat as annotations. This allows the model to learn the specific patterns, fields, and output structure relevant to your historical data, effectively teaching it to read your documents and extract information according to your desired schema.

The following figure shows a high-level architecture of the document-to-JSON conversion process for fine-tuning VLMs using historical data. This allows the VLM to learn from high data variations and helps make sure that the structured output matches the target system structure and format.

Fine-tuning offers several advantages over relying solely on OCR or general VLMs:

- Schema adherence: The model learns to output JSON matching a specific target structure, which is vital for integration with downstream systems like ERPs.

- Implicit field location: Fine-tuned VLMs often learn to locate and extract fields without explicit bounding box annotations in the training data, simplifying data preparation significantly.

- Improved text extraction quality: The model becomes more accurate at extracting text even from visually complex or noisy document layouts.

- Contextual understanding: The model can better understand the relationships between different pieces of information on the document.

- Reduced prompt engineering: After fine-tuning, the model requires less complex or shorter prompts because the desired extraction behavior is built into its weights.

For our fine-tuning process, we selected the Swift framework. Swift provides a comprehensive, lightweight toolkit for fine-tuning various large language models, including VLMs like Qwen-VL and Llama-Vision.

Data preparation

To fine-tune the VLMs, you'll use the Fatura2 dataset, a multi-layout invoice image dataset comprising 10,000 invoices with 50 distinct layouts.

The Swift framework expects training data in a specific JSONL (JSON Lines) format. Each line in the file is a JSON object representing a single training example. For multimodal tasks, this JSON object typically includes:

- messages: A list of conversational turns (for example, system, user, assistant). The user turn contains placeholders for images (for example, <image>) and the text prompt that guides the model. The assistant turn contains the target output, which in this case is the ground-truth JSON string.

- images: A list of relative paths, within the dataset directory structure, to the document page images (JPG files) associated with this training example.

As with standard ML practice, the dataset is split into training, development (validation), and test sets to effectively train the model, tune hyperparameters, and evaluate its final performance on unseen data. Each document (which could be single-page or multi-page) paired with its corresponding ground-truth JSON annotation constitutes a single row or example in our dataset. In our use case, one training sample is the invoice image (or multiple images of document pages) and the corresponding detailed JSON extraction. This one-to-one mapping is essential for supervised fine-tuning.

The conversion process, detailed in the dataset creation notebook from the associated GitHub repo, involves several key steps:

- Image handling: If the source document is a PDF, each page is rendered into a high-quality PNG image.

- Annotation processing (fill missing values): We apply light pre-processing to the raw JSON annotation. When fine-tuning multiple models on an open source dataset, we observed that performance increases when all keys are present in every JSON sample. To maintain this consistency, the target JSONs in the dataset are made to include the same set of top-level keys (derived from the entire dataset). If a key is missing for a particular document, it's added with a null value.

- Key ordering: The keys within the processed JSON annotation are sorted alphabetically. This consistent ordering helps the model learn a stable output structure.

- Prompt construction: A user prompt is constructed. This prompt includes <image> tags (one for each page of the document) and explicitly lists the JSON keys the model is expected to extract. Including the JSON keys in the prompt improves the fine-tuned model's performance.

- Swift formatting: These components (prompt, image paths, target JSON) are assembled into the Swift JSONL format. Swift datasets support multimodal inputs, including images, videos, and audio.

A single training instance in Swift's JSONL format organizes multimodal inputs as conversational messages, paths to images, and, optionally, objects containing bounding box (bbox) coordinates for visual references within the text. For more information about how to create a custom dataset for Swift, see the Swift documentation.
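The preparation steps above can be sketched as follows. This is a simplified illustration under stated assumptions, not the code from the dataset creation notebook: the function names, prompt wording, field names, and image path are hypothetical, and the record contains only the messages and images entries described earlier (no bounding box objects).

```python
import json


def collect_all_keys(annotations):
    """Derive the full, alphabetically sorted key set from the entire dataset."""
    keys = set()
    for annotation in annotations:
        keys.update(annotation)
    return sorted(keys)


def normalize_annotation(annotation, all_keys):
    """Fill missing keys with None (JSON null) and keep keys in sorted order."""
    return {key: annotation.get(key) for key in all_keys}


def build_prompt(num_pages, all_keys):
    """One <image> tag per page, followed by the explicit list of keys to extract."""
    return "<image>" * num_pages + "Extract these fields from the document as JSON: " + ", ".join(all_keys)


def to_swift_record(image_paths, annotation, all_keys):
    """Assemble prompt, image paths, and target JSON into one training example."""
    target = json.dumps(normalize_annotation(annotation, all_keys), ensure_ascii=False)
    return {
        "messages": [
            {"role": "user", "content": build_prompt(len(image_paths), all_keys)},
            {"role": "assistant", "content": target},
        ],
        "images": list(image_paths),
    }


# Two hypothetical annotations with different key sets.
annotations = [
    {"total": "118.00", "seller_name": "ACME Corp"},
    {"total": "42.50", "due_date": "2024-05-01"},
]
all_keys = collect_all_keys(annotations)
record = to_swift_record(["images/invoice_0001.jpg"], annotations[0], all_keys)
print(json.dumps(record))  # one line of the JSONL file
```

Writing one such line per document produces the training, validation, and test files.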

Fine-tuning frameworks and resources

In our evaluation of fine-tuning frameworks for use with SageMaker AI, we considered several prominent options highlighted in the community and relevant to our needs. These included Hugging Face Transformers, Hugging Face Autotrain, Llama Factory, Unsloth, Torchtune, and ModelScope SWIFT (referred to simply as SWIFT in this post, in line with the 2024 SWIFT paper by Zhao and others).

After experimenting with these, we decided to use SWIFT because of its lightweight nature, comprehensive support for various Parameter-Efficient Fine-Tuning (PEFT) methods like LoRA and DoRA, and its design tailored for efficient training of a wide array of models, including the VLMs used in this post (for example, Qwen2.5-VL). Its scripting approach integrates seamlessly with SageMaker AI training jobs, allowing for scalable and reproducible fine-tuning runs in the cloud.

There are several strategies for adapting pre-trained models: full fine-tuning, where all model parameters are updated; PEFT, which offers a more efficient alternative by updating only a small number of new parameters (adapters); and quantization, a technique that reduces model size and speeds up inference using lower-precision formats (see Sebastian Raschka's post on fine-tuning to learn more about each technique).

Our project uses LoRA and DoRA, as configured in the fine-tuning notebook.

You can configure and run a LoRA fine-tuning job as a SageMaker AI training job using SWIFT and the SageMaker remote function feature. When you execute the decorated function, the fine-tuning runs remotely as a SageMaker AI training job.
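The following is a rough sketch of how such a job could be structured, not the configuration from the fine-tuning notebook. It assumes the ms-swift 3.x command line (swift sft with --model, --train_type, and related flags; older releases use different flag names), a Hugging Face model ID, and hypothetical file paths and hyperparameters. The SageMaker remote decorator is shown only as a comment so that the snippet runs without the SageMaker SDK.

```python
import subprocess


def build_swift_sft_command(model_id, train_file, val_file, output_dir,
                            lora_rank=8, epochs=3, learning_rate=1e-4):
    """Build the argument list for a LoRA fine-tuning run (assumed ms-swift 3.x flags)."""
    return [
        "swift", "sft",
        "--model", model_id,
        "--train_type", "lora",
        "--dataset", train_file,
        "--val_dataset", val_file,
        "--lora_rank", str(lora_rank),
        "--num_train_epochs", str(epochs),
        "--learning_rate", str(learning_rate),
        "--output_dir", output_dir,
    ]


# To run this as a SageMaker AI training job, the function could be decorated, for example:
#   from sagemaker.remote_function import remote
#   @remote(instance_type="ml.g6.8xlarge")
def fine_tune(model_id, train_file, val_file, output_dir):
    """Run the fine-tuning command; raises CalledProcessError if training fails."""
    command = build_swift_sft_command(model_id, train_file, val_file, output_dir)
    subprocess.run(command, check=True)
    return output_dir


# Only build and print the command here; calling fine_tune() would start training.
command = build_swift_sft_command(
    "Qwen/Qwen2.5-VL-3B-Instruct",  # assumed model ID
    "data/train.jsonl",             # hypothetical paths
    "data/val.jsonl",
    "output/qwen25vl-lora",
)
print(" ".join(command))
```

Keeping the command construction separate from execution makes the hyperparameters easy to inspect and test before any GPU time is spent.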

Fine-tuning VLMs typically requires GPU instances because of their computational demands. For models like Qwen2.5-VL 3B, an instance such as an Amazon SageMaker AI ml.g5.2xlarge or ml.g6.8xlarge can be suitable. Training time is a function of dataset size, model size, batch size, number of epochs, and other hyperparameters. For instance, as noted in our project readme.md, fine-tuning Qwen2.5 VL 3B on 300 Fatura2 samples took approximately 2,829 seconds (roughly 47 minutes) on an ml.g6.8xlarge instance using Spot pricing. This demonstrates how smaller models, when fine-tuned effectively, can deliver exceptional performance cost-efficiently. Larger models like Llama-3.2-11B-Vision would generally require more substantial GPU resources (for example, ml.g5.12xlarge or larger) and longer training times.

Evaluation and visualization of structured outputs (JSON)

A key aspect of any automation or machine learning project is evaluation. Without evaluating your solution, you don't know how well it performs at solving your business problem. We wrote an evaluation notebook that you can use as a framework. Evaluating the performance of document-to-JSON models involves comparing the model-generated JSON outputs for unseen input documents (test dataset) against the ground-truth JSON annotations.

Key metrics employed in our project include:

- Exact match (EM) – accuracy: This metric measures whether the extracted value for a specific field is an exact character-by-character match to the ground-truth value. It's a strict metric, often reported as a percentage.

- Character error rate (CER) – edit distance: This metric calculates the minimum number of single-character edits (insertions, deletions, or substitutions) required to change the model's predicted string into the ground-truth string, typically normalized by the length of the ground-truth string. A lower CER indicates better performance.

- Recall-Oriented Understudy for Gisting Evaluation (ROUGE): This is a suite of metrics that compare n-grams (sequences of words) and the longest common subsequence between the predicted output and the reference. While traditionally used for text summarization, ROUGE scores can also provide insights into the overall textual similarity of the generated JSON string compared to the ground truth.
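The first two metrics can be computed per field with a few lines of Python. The sketch below is an illustration rather than the code from the evaluation notebook; the function names are hypothetical, and treating a null value as an empty string is an assumption made here for simplicity.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or substitutions."""
    prev = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        curr = [i]
        for j, char_b in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,                        # deletion
                curr[j - 1] + 1,                    # insertion
                prev[j - 1] + (char_a != char_b),   # substitution (free if equal)
            ))
        prev = curr
    return prev[-1]


def cer(predicted: str, truth: str) -> float:
    """Edit distance normalized by the length of the ground-truth string."""
    if not truth:
        return 0.0 if not predicted else 1.0
    return levenshtein(predicted, truth) / len(truth)


def evaluate_fields(predicted: dict, truth: dict) -> dict:
    """Per-key exact match and CER; None is treated as an empty string (assumption)."""
    report = {}
    for key, expected in truth.items():
        expected_s = "" if expected is None else str(expected)
        value = predicted.get(key)
        predicted_s = "" if value is None else str(value)
        report[key] = {"exact_match": predicted_s == expected_s, "cer": cer(predicted_s, expected_s)}
    return report


truth = {"seller_name": "ACME Corp.", "total": "118.00"}
predicted = {"seller_name": "ACME Corp", "total": "118.00"}
report = evaluate_fields(predicted, truth)
print(report)
```

Aggregating the exact_match values over all test documents gives the per-key accuracy, and the per-sample edit distances are the kind of values a heatmap can visualize.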

Visualizations are helpful for understanding model performance nuances. The following edit distance heatmap provides a granular view, showing how closely the predictions match the ground truth (green means the model's output exactly matches the ground truth, and shades of yellow, orange, and red depict increasing deviations). Each model has its own chart, allowing quick comparison across models. The X-axis is the number of sample documents. In this case, we ran inference on 250 unseen samples from the Fatura2 dataset. The Y-axis shows the JSON keys that we asked the model to extract; these will differ for you depending on the structure your downstream system requires.

In the image, you can see the performance of three different models on the Fatura2 dataset. From left to right: Qwen2.5 VL 3B fine-tuned on 300 samples from the Fatura2 dataset, Qwen2.5 VL 3B without fine-tuning (labeled vanilla), and Llama 3.2 11B vision fine-tuned on 1,000 samples.

The gray color shows the samples for which the Fatura2 dataset doesn't contain any ground truth, which is why these are the same across the three models.

For a detailed, step-by-step walk-through of how the evaluation metrics are calculated, the specific Python code used, and how the visualizations are generated, see the comprehensive evaluation notebook in our project.

The image shows that Qwen2.5 vanilla is only decent at extracting the Title and Seller Name from the document. For the other keys, it makes more than six character edit errors. However, out of the box, Qwen2.5 is good at adhering to the JSON schema, with only a few predictions where the key is missing (dark blue color) and no predictions of JSON that couldn't be parsed (for example, because of missing quotation marks, missing parentheses, or a missing comma). Examining the two fine-tuned models, you can see an improvement in performance, with most samples exactly matching the ground truth on all keys. There are only slight differences between fine-tuned Qwen2.5 and fine-tuned Llama 3.2: for example, fine-tuned Qwen2.5 slightly outperforms fine-tuned Llama 3.2 on Total, Title, Conditions, and Buyer, whereas fine-tuned Llama 3.2 slightly outperforms fine-tuned Qwen2.5 on Seller Address, Discount, and Tax.

The goal is to input a document into your fine-tuned model and receive a clean, structured JSON object that accurately maps the extracted information to predefined fields. JSON-constrained decoding enforces adherence to a specified JSON schema during inference and is useful for making sure the output is valid JSON. For the Fatura2 dataset, this approach was not necessary: our fine-tuned Qwen 2.5 model consistently produced valid JSON outputs without additional constraints. However, incorporating constrained decoding remains a valuable safeguard, particularly for production environments where output reliability is critical.
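Even without constrained decoding, a lightweight check after inference can catch unparseable output or missing keys before the data reaches a downstream system. The following is a minimal sketch of such a check; the function name, report structure, and field names are hypothetical.

```python
import json


def check_model_output(raw_output: str, expected_keys: list[str]) -> dict:
    """Report whether the output parses as a JSON object and which keys are missing or unexpected."""
    try:
        parsed = json.loads(raw_output)
    except json.JSONDecodeError as err:
        return {"valid_json": False, "error": str(err),
                "missing_keys": sorted(expected_keys), "unexpected_keys": []}
    if not isinstance(parsed, dict):
        return {"valid_json": False, "error": "top-level value is not a JSON object",
                "missing_keys": sorted(expected_keys), "unexpected_keys": []}
    return {
        "valid_json": True,
        "error": None,
        "missing_keys": sorted(set(expected_keys) - set(parsed)),
        "unexpected_keys": sorted(set(parsed) - set(expected_keys)),
    }


good = check_model_output('{"total": "118.00"}', ["total", "tax"])
bad = check_model_output('{"total": "118.00"', ["total", "tax"])  # missing closing brace
print(good)
print(bad["valid_json"])
```

Documents that fail this check could be retried or routed to manual review, depending on the risk tolerance of the use case.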

Notebook 07 visualizes the input document and the extracted JSON data side by side.

Deploying the fine-tuned model

After you fine-tune a model and evaluate it on your dataset, you will want to deploy it to run inference on your documents. Depending on your use case, a different deployment option might be more suitable.

Option a: vLLM container extended for SageMaker

To deploy our fine-tuned model for real-time inference, we use SageMaker endpoints. SageMaker endpoints provide fully managed hosting for real-time inference for FMs, deep learning, and other ML models, and allow managed autoscaling and cost-optimal deployment strategies. The process, detailed in our deploy model notebook, involves building a custom Docker container. This container packages the vLLM serving engine, which is highly optimized for LLM and VLM inference, together with the Swift framework components needed to load our specific model and adapter. vLLM provides an OpenAI-compatible API server by default, suitable for handling document and image inputs with VLMs. Our custom docker-artifacts and Dockerfile adapt this vLLM base for SageMaker deployment. Key steps include:

- Setting up the necessary environment and dependencies.

- Configuring an entry point that initializes the vLLM server.

- Making sure the server can load the base VLM and dynamically apply our fine-tuned LoRA adapter. The Amazon S3 path to the adapter (model.tar.gz) is passed using the ADAPTER_URI environment variable when creating the SageMaker model.

- Deploying the container, after it has been built and pushed to Amazon ECR, to a SageMaker endpoint, which listens for invocation requests and routes them to the vLLM engine inside the container.

The following image shows a SageMaker vLLM deployment architecture, where a custom Docker container from Amazon ECR is deployed to a SageMaker endpoint. The container uses vLLM's OpenAI-compatible API and Swift to serve a base VLM with a fine-tuned LoRA adapter dynamically loaded from Amazon S3.
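A request to such an endpoint could look like the following sketch. It assumes that the custom container forwards SageMaker invocations to vLLM's OpenAI-compatible chat completions handler and returns the standard response shape; the model name, endpoint name, prompt wording, and field names are placeholders, and the invocation function is defined but not called here.

```python
import base64
import json


def build_chat_payload(image_bytes, field_names, model_name,
                       image_mime="image/png", max_tokens=1024):
    """Build an OpenAI-style chat request with one page image and the extraction prompt."""
    image_b64 = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model_name,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "image_url", "image_url": {"url": f"data:{image_mime};base64,{image_b64}"}},
                {"type": "text", "text": "Extract these fields from the document as JSON: " + ", ".join(field_names)},
            ],
        }],
        "temperature": 0.0,
        "max_tokens": max_tokens,
    }


def invoke_endpoint(endpoint_name, payload):
    """Call the SageMaker endpoint (requires boto3, AWS credentials, and a deployed endpoint)."""
    import boto3  # imported lazily so the payload builder works offline

    runtime = boto3.client("sagemaker-runtime")
    response = runtime.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=json.dumps(payload),
    )
    body = json.loads(response["Body"].read())
    # Assumes the container returns an OpenAI-style chat completion response.
    return body["choices"][0]["message"]["content"]


fake_image = b"\x89PNG placeholder bytes"  # stand-in for a rendered invoice page
payload = build_chat_payload(fake_image, ["seller_name", "total"], model_name="my-finetuned-vlm")
print(json.dumps(payload)[:120])
```

The returned string is the model's JSON output, which can then be parsed and validated before it is passed to downstream systems.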

Option b (optional): Inference components on SageMaker

For more complex inference workflows that might involve sophisticated pre-processing of input documents, post-processing of the extracted JSON, or even chaining multiple models (for example, a classification model followed by an extraction model), Amazon SageMaker inference components offer enhanced flexibility. You can use them to build a pipeline of multiple containers or models within a single endpoint, each handling a specific part of the inference logic.

Option c: Custom model inference in Amazon Bedrock

You can now import your custom models in Amazon Bedrock and then use Amazon Bedrock features to make inference calls to the model. The Qwen 2.5 architecture is supported (see Supported Architectures). For more information, see Amazon Bedrock Custom Model Import now generally available.

Clear up

To keep away from ongoing expenses, it’s vital to take away the AWS assets created for this challenge while you’re completed.

- SageMaker endpoints and models:

  - In the AWS Management Console for SageMaker AI, go to Inference and then Endpoints. Select and delete the endpoints created for this project.

  - Then, go to Inference and then Models and delete the associated models.

- Amazon S3 data:

  - Navigate to the Amazon S3 console.

  - Delete the S3 buckets or specific folders or prefixes used for datasets, model artifacts (for example, model.tar.gz from training jobs), and inference results. Note: Make sure you don’t delete data needed by other projects.

- Amazon ECR images and repositories:

  - In the Amazon ECR console, delete the Docker images and the repository created for the custom vLLM container if you deployed one.

- CloudWatch logs (optional):

  - Logs from SageMaker activities are stored in Amazon CloudWatch. You can delete relevant log groups (for example, /aws/sagemaker/TrainingJobs and /aws/sagemaker/Endpoints) if desired, though many have automatic retention policies.

Important: Always verify resources before deletion. If you experimented with Amazon Bedrock custom model imports, make sure that these are also cleaned up. Use AWS Cost Explorer to monitor for unexpected charges.
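If you prefer to script the cleanup instead of using the console, the steps above could look roughly like the following. This is a sketch under assumptions: the helpers take boto3 clients as arguments, all resource names are supplied by you, and every call deletes resources permanently, so verify the names first.

```python
from typing import Iterable


def cleanup_sagemaker(sm_client, endpoint_names: Iterable[str], model_names: Iterable[str]) -> None:
    """Delete endpoints, their endpoint configs, and the associated models."""
    for name in endpoint_names:
        # Look up the endpoint config before deleting the endpoint itself.
        config_name = sm_client.describe_endpoint(EndpointName=name)["EndpointConfigName"]
        sm_client.delete_endpoint(EndpointName=name)
        sm_client.delete_endpoint_config(EndpointConfigName=config_name)
    for name in model_names:
        sm_client.delete_model(ModelName=name)


def cleanup_s3_prefix(s3_resource, bucket: str, prefix: str) -> None:
    """Delete every object under one prefix, leaving the rest of the bucket untouched."""
    s3_resource.Bucket(bucket).objects.filter(Prefix=prefix).delete()


def cleanup_ecr(ecr_client, repository_name: str) -> None:
    """Delete an ECR repository together with the images it contains."""
    ecr_client.delete_repository(repositoryName=repository_name, force=True)


def cleanup_logs(logs_client, log_group_names: Iterable[str]) -> None:
    """Delete the given CloudWatch log groups."""
    for name in log_group_names:
        logs_client.delete_log_group(logGroupName=name)
```

The clients would come from `boto3.client("sagemaker")`, `boto3.resource("s3")`, `boto3.client("ecr")`, and `boto3.client("logs")`. Restricting the S3 deletion to a prefix is a deliberate choice to reduce the risk of removing data that other projects need.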

Conclusion and future outlook

In this post, we demonstrated that fine-tuning VLMs provides a powerful and flexible approach to automate and significantly enhance document understanding capabilities. We also demonstrated that focused fine-tuning allows smaller, multi-modal models to compete effectively with much larger counterparts (98% accuracy with Qwen2.5 VL 3B). The project also highlights that fine-tuning VLMs for document-to-JSON processing can be done cost-effectively by using Spot Instances and PEFT methods (approximately $1 USD to fine-tune a 3 billion parameter model on around 200 documents).

The fine-tuning task was conducted using Amazon SageMaker training jobs and the Swift framework, which proved to be a versatile and effective toolkit for orchestrating this fine-tuning process.

The potential for enhancing and expanding this work is vast. Some exciting future directions include deploying structured document models on CPU-based, serverless compute like AWS Lambda or Amazon SageMaker Serverless Inference using tools like llama.cpp or vLLM. Using quantized models can enable low-latency, cost-efficient inference for sporadic workloads. Another future direction is improving the evaluation of structured outputs by going beyond field-level metrics. This includes validating complex nested structures and tables using methods like Tree-Edit-Distance-based Similarity (TEDS) for tables.
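As a first step beyond flat field-level metrics, nested outputs can be compared leaf by leaf. The sketch below is not TEDS and not the evaluation used in this post; it is a simpler, assumed approach that flattens each JSON document into path/value pairs and scores exact matches. The function names are illustrative.

```python
from typing import Any, Dict


def flatten(obj: Any, prefix: str = "") -> Dict[str, Any]:
    """Map every leaf of a nested JSON value to a path such as 'items[0].price'."""
    leaves: Dict[str, Any] = {}
    if isinstance(obj, dict):
        for key, value in obj.items():
            leaves.update(flatten(value, f"{prefix}.{key}" if prefix else key))
    elif isinstance(obj, list):
        for index, value in enumerate(obj):
            leaves.update(flatten(value, f"{prefix}[{index}]"))
    else:
        leaves[prefix] = obj
    return leaves


def leaf_scores(predicted: Any, expected: Any) -> Dict[str, float]:
    """Precision, recall, and F1 over exactly matching leaf paths and values."""
    pred, gold = flatten(predicted), flatten(expected)
    correct = sum(1 for path, value in pred.items() if path in gold and gold[path] == value)
    precision = correct / len(pred) if pred else 0.0
    recall = correct / len(gold) if gold else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


expected = {"vendor": "ACME", "items": [{"name": "A", "price": 1.0}, {"name": "B", "price": 2.0}]}
predicted = {"vendor": "ACME", "items": [{"name": "A", "price": 1.0}, {"name": "B", "price": 2.5}]}
print(leaf_scores(predicted, expected))
```

In this example, four of the five leaves match, so precision and recall are both 0.8. Because list items are matched by position, a correct table with reordered rows is penalized heavily, which is one reason tree-edit-distance metrics are attractive for tables.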

The complete code repository, including the notebooks, utility scripts, and Docker artifacts, is available on GitHub to help you get started unlocking insights from your documents. For a similar approach using Amazon Nova, refer to the AWS blog post on optimizing document AI and structured outputs by fine-tuning Amazon Nova models and on-demand inference.

About the authors

Arlind Nocaj is a GTM Specialist Solutions Architect for AI/ML and Generative AI for Europe Central, based in the AWS Zurich office, who guides enterprise customers through their digital transformation journeys. With a PhD in network analytics and visualization (Graph Drawing) and over a decade of experience as a research scientist and software engineer, he brings a unique blend of academic rigor and practical expertise to his role. His primary focus lies in using the full potential of data, algorithms, and cloud technologies to drive innovation and efficiency. His areas of expertise include machine learning, generative AI, and in particular agentic systems with multi-modal LLMs for document processing and structured insights.

Malte Reimann is a Solutions Architect based in Zurich, working with customers across Switzerland and Austria on their cloud initiatives. His focus lies in practical machine learning applications, from prompt optimization to fine-tuning vision language models for document processing. His most recent example is working in a small team to provide deployment options for Apertus on AWS. An active member of the ML community, Malte balances his technical work with a disciplined approach to fitness, preferring early morning gym sessions when the gym is empty. During summer weekends, he explores the Swiss Alps on foot and enjoys time in nature. His approach to both technology and life is straightforward: consistent improvement through deliberate practice, whether that’s optimizing a customer’s cloud deployment or preparing for the next hike in the clouds.

Nick McCarthy is a Senior Generative AI Specialist Solutions Architect on the Amazon Bedrock team, focused on model customization. He has worked with AWS clients across a wide range of industries, including healthcare, finance, sports, telecommunications, and energy, helping them accelerate business outcomes through the use of AI and machine learning. Outside of work, Nick loves traveling, exploring new cuisines, and reading about science and technology. He holds a Bachelor’s degree in Physics and a Master’s degree in Machine Learning.

Irene Marban Alvarez is a Generative AI Specialist Solutions Architect at Amazon Web Services (AWS), working with customers in the United Kingdom and Ireland. With a background in Biomedical Engineering and a Master’s in Artificial Intelligence, her work focuses on helping organizations leverage the latest AI technologies to accelerate their business. In her spare time, she loves reading and cooking for her friends.
