On this put up, we present you the way Amazon Search optimized GPU occasion utilization by leveraging AWS Batch for SageMaker Coaching jobs. This managed answer enabled us to orchestrate machine studying (ML) coaching workloads on GPU-accelerated occasion households like P5, P4, and others. We will even present a step-by-step walkthrough of the use case implementation.

Machine studying at Amazon Search

At Amazon Search, we use tons of of GPU-accelerated situations to coach and consider ML fashions that assist our prospects uncover merchandise they love. Scientists sometimes prepare multiple mannequin at a time to seek out the optimum set of options, mannequin structure, and hyperparameter settings that optimize the mannequin’s efficiency. We beforehand leveraged a first-in-first-out (FIFO) queue to coordinate mannequin coaching and analysis jobs. Nonetheless, we wanted to make use of a extra nuanced standards to prioritize which jobs ought to run in what order. Manufacturing fashions wanted to run with excessive precedence, exploratory analysis as medium precedence, and hyperparameter sweeps and batch inference as low precedence. We additionally wanted a system that would deal with interruptions. Ought to a job fail, or a given occasion sort change into saturated, we wanted the job to run on different accessible appropriate occasion sorts whereas respecting the general prioritization standards. Lastly, we wished a managed answer so we may focus extra on mannequin growth as a substitute of managing infrastructure.

After evaluating a number of choices, we selected AWS Batch for Amazon SageMaker Coaching jobs as a result of it greatest met our necessities. This answer seamlessly built-in AWS Batch with Amazon SageMaker and allowed us to run jobs per our prioritization standards. This enables utilized scientists to submit a number of concurrent jobs with out handbook useful resource administration. By leveraging AWS Batch options reminiscent of superior prioritization by way of fair-share scheduling, we elevated peak utilization of GPU-accelerated situations from 40% to over 80%.

Amazon Search: AWS Batch for SageMaker Coaching Job implementation

We leveraged three AWS applied sciences to arrange our job queue. We used Service Environments to configure the SageMaker AI parameters that AWS Batch makes use of to submit and handle SageMaker Coaching jobs. We used Share Identifiers to prioritize our workloads. Lastly, we used Amazon CloudWatch to observe and the supply of alerting functionality for important occasions or deviations from anticipated habits. Let’s dive deep into these constructs.

Service environments. We arrange service environments to symbolize the full GPU capability accessible for every occasion household, reminiscent of P5s and P4s. Every service surroundings was configured with fastened limits based mostly on our crew’s reserved capability in AWS Batch. Be aware that for groups utilizing SageMaker Coaching Plans, these limits could be set to the variety of reserved situations, making capability planning extra simple. By defining these boundaries, we established how the full GPU occasion capability inside a service surroundings was distributed throughout totally different manufacturing jobs. Every manufacturing experiment was allotted a portion of this capability by way of Share Identifiers.

Determine 1 gives a real-world instance of how we used AWS Batch’s fair-share scheduling to divide 100 GPU occasion between ShareIDs. We allotted 60 situations to ProdExp1, and 40 to ProdExp2. When ProdExp2 used solely 25 GPU situations, the remaining 15 might be borrowed by ProdExp1, permitting it to scale as much as 75 GPU situations. When ProdExp2 later wanted its full 40 GPU situations, the scheduler preempted jobs from ProdExp1 to revive steadiness. This instance used the P4 occasion household, however the identical strategy may apply to any SageMaker-supported EC2 occasion household. This ensured that manufacturing workloads have assured entry to their assigned capability, whereas exploratory or ad-hoc experiments may nonetheless make use of any idle GPU situations. This design safeguarded important workloads and improved total occasion utilization by making certain that no reserved capability went unused.

Determine 1: AWS Batch fair-share scheduling

Share Identifiers. We used Share Identifiers to allocate fractions of a service surroundings’s capability to manufacturing experiments. Share Identifiers are string tags utilized at job submission time. AWS Batch used these tags to trace utilization and implement fair-share scheduling. For initiatives that required devoted capability, we outlined preset Share Identifiers with quotas in AWS Batch. This reserved capability for manufacturing tracks. These quotas acted as equity targets quite than laborious limits. Idle capability may nonetheless be borrowed, however underneath rivalry, AWS Batch enforced equity by preempting assets from overused identifiers and reassigned them to underused ones.

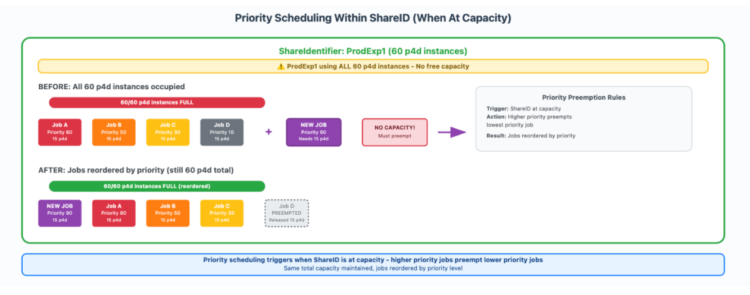

Inside every Share Identifier, job priorities starting from 0 to 99 decided execution order, however priority-based preemption solely triggered when the ShareIdentifier reached its allotted capability restrict. Determine 2 illustrates how we setup and used our share identifiers. ProdExp1 had 60 p4d situations and ran jobs at varied priorities. Job A had a precedence of 80, Job B was set to 50, Job C was set to at 30, and Job D had a precedence 10. When all 60 situations have been occupied and a brand new high-priority job (precedence 90) requiring 15 situations was submitted, the system preempted the bottom precedence working job (Job D) to make room, whereas sustaining the full of 60 situations for that Share Identifier.

Determine 2: Precedence scheduling inside a Share ID

Amazon CloudWatch. We used Amazon CloudWatch to instrument our SageMaker coaching jobs. SageMaker routinely publishes metrics on job progress and useful resource utilization, whereas AWS Batch gives detailed info on job scheduling and execution. With AWS Batch, we queried the standing of every job by way of the AWS Batch APIs. This made it potential to trace jobs as they transitioned by way of states reminiscent of SUBMITTED, PENDING, RUNNABLE, STARTING, RUNNING, SUCCEEDED, and FAILED. We revealed these metrics and job states to CloudWatch and configured dashboards and alarms to alert anytime we encountered prolonged wait occasions, sudden failures, or underutilized assets. This built-in integration offered each real-time visibility and historic pattern evaluation, which helped our crew preserve operational effectivity throughout GPU clusters with out constructing customized monitoring methods.

Operational influence on crew efficiency

By adopting AWS Batch for SageMaker Coaching jobs, we enabled experiments to run with out issues about useful resource availability or rivalry. Researchers may submit jobs with out ready for handbook scheduling, which elevated the variety of experiments that might be run in parallel. This led to shorter queue occasions, greater GPU utilization, and sooner turnaround of coaching outcomes, straight bettering each analysis throughput and supply timelines.

Methods to arrange AWS Batch for SageMaker Coaching jobs

To arrange the same surroundings, you may comply with this tutorial, which reveals you learn how to orchestrate a number of GPU massive language mannequin (LLM) fine-tuning jobs utilizing a number of GPU-powered situations. The answer can also be accessible on GitHub.

Stipulations

To orchestrate a number of SageMaker Coaching jobs with AWS Batch, first it’s essential to full the next stipulations:

Clone the GitHub repository with the property for this deployment. This repository consists of notebooks that reference property:

Create AWS Batch assets

To create the required assets to handle SageMaker Coaching job queues with AWS Batch, we offer utility capabilities within the instance to automate the creation of the Service Setting, Scheduling Coverage, and Job Queue.

The service surroundings represents the Amazon SageMaker AI capability limits accessible to schedule, expressed by most variety of situations. The scheduling coverage signifies how useful resource computes are allotted in a job queue between customers or workloads. The job queue is the scheduler interface that researchers work together with to submit jobs and interrogate job standing. AWS Batch gives two totally different queues we are able to function with:

- FIFO queues – Queues by which no scheduling insurance policies are required

- Truthful-share queues – Queues by which a scheduling coverage Amazon Useful resource Title (ARN) is required to orchestrate the submitted jobs

We advocate creating devoted service environments for every job queue in a 1:1 ratio. FIFO queues present primary message supply, whereas fair-share scheduling (FSS) queues present extra subtle scheduling, balancing utilization inside a Share Identifier, share weights, and job precedence. For purchasers who don’t want a number of shares however would really like the flexibility to assign a precedence on job submission, we advocate creating an FSS queue and utilizing a single share inside it for all submissions.To create the assets, execute the next instructions:

You may navigate the AWS Batch Dashboard, proven within the following screenshot, to discover the created assets.

This automation script created two queues:

ml-c5-xlarge-queue– A FIFO queue with precedence 2 used for CPU workloadsml-g6-12xlarge-queue– A good-share queue with precedence 1 used for GPU workloads

The related scheduling coverage for the queue ml-g6-12xlarge-queue is with share attributes reminiscent of Excessive precedence (HIGHPRI), Medium precedence (MIDPRI) and Low precedence (LOWPRI) together with the queue weights. Customers can submit jobs and assign them to one in all three shares: HIGHPRI, MIDPRI, or LOWPRI and assign weights reminiscent of 1 for prime precedence and three for medium and 5 for low precedence. Beneath is the screenshot displaying the scheduling coverage particulars:

For directions on learn how to arrange the service surroundings and a job queue, check with the Getting began part in Introducing AWS Batch assist for SageMaker Coaching Jobs weblog.

Run LLM fine-tuning jobs on SageMaker AI

We run the pocket book pocket book.ipynb to begin submitting SageMaker Coaching jobs with AWS Batch. The pocket book incorporates the code to organize the info used for the workload, add on Amazon Easy Storage Service (Amazon S3), and outline the hyperparameters required by the job to be executed.

To run the fine-tuning workload utilizing SageMaker Coaching jobs, this instance makes use of the ModelTrainer class. The ModelTrainer class is a more moderen and extra intuitive strategy to mannequin coaching that considerably enhances consumer expertise. It helps distributed coaching, construct your personal container (BYOC), and recipes.

For added details about ModelTrainer, you may check with Speed up your ML lifecycle utilizing the brand new and improved Amazon SageMaker Python SDK – Half 1: ModelTrainer.

To arrange the fine-tuning workload, full the next steps:

- Choose the occasion sort, the container picture for the coaching job, and outline the checkpoint path the place the mannequin shall be saved:

- Create the ModelTrainer operate to encapsulate the coaching setup. The ModelTrainer class simplifies the expertise by encapsulating code and coaching setup. On this instance:

SourceCode– The supply code configuration. That is used to configure the supply code for working the coaching job through the use of your native python scripts.Compute– The compute configuration. That is used to specify the compute assets for the coaching job.

- Arrange the enter channels for ModelTrainer by creating InputData objects from the offered S3 bucket paths for the coaching and validation datasets:

Queue SageMaker Coaching jobs

This part and the next are meant for use interactively so to discover learn how to use the Amazon SageMaker Python SDK to submit jobs to your Batch queues. Observe these steps:

- Choose the queue to make use of:

- Within the subsequent cell, submit two coaching jobs within the queue:

LOW PRIORITYMEDIUM PRIORITY

- Use the API

submitto submit all the roles:

Show the standing of working and in queue jobs

We will use the job queue checklist and job queue snapshot APIs to programmatically view a snapshot of the roles that the queue will run subsequent. For fair-share queues, this ordering is dynamic and infrequently must be refreshed as a result of new jobs are submitted to the queue or as share utilization adjustments over time.

The next screenshot reveals the roles submitted with low precedence and medium precedence within the Runnable State and within the queue.

You can even check with the AWS Batch Dashboard, proven within the following screenshot, to investigate the standing of the roles.

As proven within the following screenshot, the primary job executed with the SageMaker Coaching job is the MEDIUM PRIORITY one, by respecting the scheduling coverage guidelines outlined beforehand.

You may discover the working coaching job within the SageMaker AI console, as proven within the following screenshot.

Submit an extra job

Now you can submit an extra SageMaker Coaching job with HIGH PRIORITY to the queue:

You may discover the standing from the dashboard, as proven within the following screenshot.

The HIGH PRIORITY job, regardless of being submitted later within the queue, shall be executed earlier than the opposite runnable jobs by respecting the scheduling coverage guidelines, as proven within the following screenshot.

Because the scheduling coverage within the screenshot reveals, the LOWPRI share has the next weight issue (5) than the MIDPRI share (3). Since a decrease weight signifies greater precedence, a LOWPRI job shall be executed after a MIDPRI job, even when they’re submitted on the identical time.

Clear up

To wash up your assets to keep away from incurring future fees, comply with these steps:

- Confirm that your coaching job isn’t working anymore. To take action, in your SageMaker console, select Coaching and test Coaching jobs.

- Delete AWS Batch assets through the use of the command

python create_resources.py --cleanfrom the GitHub instance or by manually deleting them from the AWS Administration Console.

Conclusion

On this put up, we demonstrated how Amazon Search used AWS Batch for SageMaker Coaching Jobs to optimize GPU useful resource utilization and coaching job administration. The answer remodeled their coaching infrastructure by implementing subtle queue administration and fair proportion scheduling, growing peak GPU utilization from 40% to over 80%.We advocate that organizations going through comparable ML coaching infrastructure challenges discover AWS Batch integration with SageMaker, which gives built-in queue administration capabilities and priority-based scheduling. The answer eliminates handbook useful resource coordination whereas offering workloads with applicable prioritization by way of configurable scheduling insurance policies.

To start implementing AWS Batch with SageMaker Coaching jobs, you may entry our pattern code and implementation information within the amazon-sagemaker-examples repository on GitHub. The instance demonstrates learn how to arrange AWS Identification and Entry Administration (IAM) permissions, create AWS Batch assets, and orchestrate a number of GPU-powered coaching jobs utilizing ModelTrainer class.

The authors wish to thank Charles Thompson and Kanwaljit Khurmi for his or her collaboration.

In regards to the authors