This post is cowritten with Gayathri Rengarajan and Harshit Kumar Nyati from PowerSchool.

PowerSchool is a leading provider of cloud-based software for K-12 education, serving over 60 million students in more than 90 countries and over 18,000 customers, including more than 90 of the top 100 districts by student enrollment in the United States. When we launched PowerBuddy™, our AI assistant integrated across our multiple educational platforms, we faced a critical challenge: implementing content filtering sophisticated enough to distinguish between legitimate academic discussions and harmful content in educational contexts.

In this post, we demonstrate how we built and deployed a custom content filtering solution using Amazon SageMaker AI that achieved better accuracy while maintaining low false positive rates. We walk through our technical approach to fine-tuning Llama 3.1 8B, our deployment architecture, and the performance results from internal validations.

PowerSchool’s PowerBuddy

PowerBuddy is an AI assistant that provides personalized insights, fosters engagement, and offers support throughout the educational journey. Educational leaders benefit from PowerBuddy being brought to their data and their users’ most common workflows within the PowerSchool ecosystem (such as Schoology Learning, Naviance CCLR, PowerSchool SIS, Performance Matters, and more) to ensure a consistent experience for students and their network of support providers at school and at home.

The PowerBuddy suite includes several AI solutions: PowerBuddy for Learning functions as a virtual tutor; PowerBuddy for College and Career provides insights for career exploration; PowerBuddy for Community simplifies access to district and school information, and others. The solution includes built-in accessibility features such as speech-to-text and text-to-speech functionality.

Content filtering for PowerBuddy

As an education technology provider serving millions of students, many of whom are minors, student safety is our highest priority. National data shows that approximately 20% of students ages 12–17 experience bullying, and 16% of high school students have reported seriously considering suicide. With PowerBuddy’s widespread adoption across K-12 schools, we needed robust guardrails specifically calibrated for educational environments.

The out-of-the-box content filtering and safety guardrail solutions available on the market didn’t fully meet PowerBuddy’s requirements, primarily because of the need for domain-specific awareness and fine-tuning within the education context. For example, when a high school student is learning about sensitive historical topics such as World War II or the Holocaust, it’s important that educational discussions aren’t mistakenly flagged for violent content. At the same time, the system must be able to detect and immediately alert school administrators to indications of potential harm or threats. Achieving this nuanced balance requires deep contextual understanding, which can only be enabled through targeted fine-tuning.

We needed to implement a sophisticated content filtering system that could intelligently differentiate between legitimate academic inquiries and truly harmful content, detecting and blocking prompts indicating bullying, self-harm, hate speech, inappropriate sexual content, violence, or harmful material not suitable for educational settings. Our challenge was finding a cloud solution to train and host a custom model that could reliably protect students while maintaining the educational functionality of PowerBuddy.

After evaluating multiple AI providers and cloud services that allow model customization and fine-tuning, we selected Amazon SageMaker AI as the most suitable platform based on these critical requirements:

- Platform stability: As a mission-critical service supporting millions of students daily, we require an enterprise-grade infrastructure with high availability and reliability.

- Autoscaling capabilities: Student usage patterns in education are highly cyclical, with significant traffic spikes during school hours. Our solution needed to handle these fluctuations without degrading performance.

- Control of model weights after fine-tuning: We needed control over our fine-tuned models to enable continuous refinement of our safety guardrails, so that we can quickly respond to new types of harmful content that might emerge in educational settings.

- Incremental training capability: The ability to continually improve our content filtering model with new examples of problematic content was essential.

- Cost-effectiveness: We needed a solution that would allow us to protect students without creating prohibitive costs that would limit schools’ access to our educational tools.

- Granular control and transparency: Student safety demands visibility into how our filtering decisions are made, requiring a solution that isn’t a black box but provides transparency into model behavior and performance.

- Mature managed service: Our team needed to focus on educational applications rather than infrastructure management, making a comprehensive managed service with production-ready capabilities essential.

Solution overview

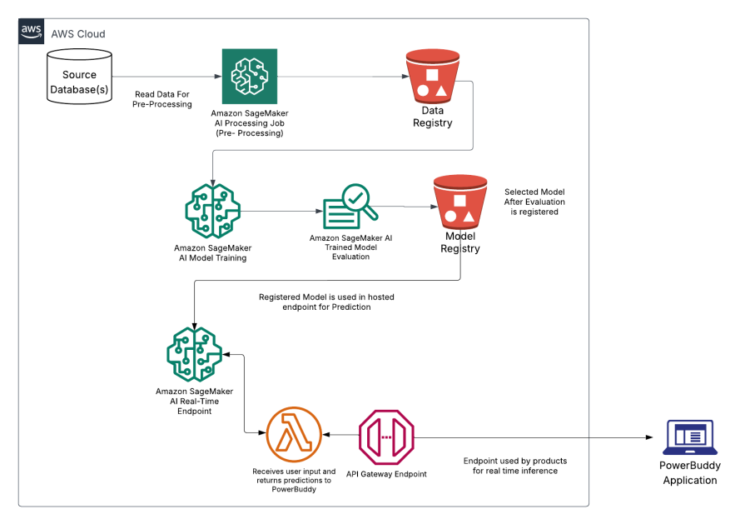

Our content filtering system architecture, shown in the preceding figure, consists of several key components:

- Data preparation pipeline:

  - Curated datasets of safe and unsafe content examples specific to educational contexts

  - Data preprocessing and augmentation to ensure robust model training

  - Secure storage in Amazon S3 buckets with appropriate encryption and access controls

  Note: All training data was fully anonymized and didn’t include personally identifiable student information.

- Model training infrastructure:

  - SageMaker training jobs for fine-tuning Llama 3.1 8B

- Inference architecture:

  - Deployment on SageMaker managed endpoints with auto-scaling configured

  - Integration with PowerBuddy through Amazon API Gateway for real-time content filtering

  - Monitoring and logging through Amazon CloudWatch for continuous quality assessment

- Continuous improvement loop:

  - Feedback collection mechanism for false positives and false negatives

  - Scheduled retraining cycles to incorporate new data and improve performance

  - A/B testing framework to evaluate model improvements before full deployment

Development process

After exploring multiple approaches to content filtering, we decided to fine-tune Llama 3.1 8B using Amazon SageMaker JumpStart. This decision followed our initial attempts to develop a content filtering model from scratch, which proved challenging to optimize for consistency across various types of harmful content.

SageMaker JumpStart significantly accelerated our development process by providing pre-configured environments and optimized hyperparameters for fine-tuning foundation models. The platform’s streamlined workflow allowed our team to focus on curating high-quality training data specific to educational safety concerns rather than spending time on infrastructure setup and hyperparameter tuning.

We fine-tuned the Llama 3.1 8B model using the Low-Rank Adaptation (LoRA) technique on Amazon SageMaker AI training jobs, which allowed us to maintain full control over the training process.
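For readers less familiar with LoRA, the core idea fits in one equation. This is the general formulation of the technique, not a description of our specific configuration; the rank $r$ and scaling factor $\alpha$ are hyperparameters whose values are not stated in this post.

```latex
W = W_0 + \Delta W = W_0 + \frac{\alpha}{r}\, B A,
\qquad B \in \mathbb{R}^{d \times r},\quad A \in \mathbb{R}^{r \times k},\quad r \ll \min(d, k)
```

Only $A$ and $B$ are trained while the pretrained weight $W_0$ stays frozen, so a $d \times k$ layer contributes $r(d + k)$ trainable parameters instead of $dk$. With illustrative values $d = k = 4096$ and $r = 8$, that is 65,536 trainable parameters against roughly 16.8 million in the frozen matrix.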

After fine-tuning was complete, we deployed the model on a SageMaker AI managed endpoint and integrated it as a critical safety component within our PowerBuddy architecture.
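As a rough illustration of what calling such an endpoint can look like, the following sketch builds a request, parses the response, and treats anything unexpected as unsafe. The instruction wording, the `safe`/`unsafe` labels, the payload shape (`inputs`/`parameters`), and the `generated_text` response field are assumptions made for this example and may not match our production prompt template or serving container.

```python
import json

# Hypothetical labels; this post does not state the model's output vocabulary.
SAFE_LABEL = "safe"
UNSAFE_LABEL = "unsafe"


def build_payload(user_prompt: str, max_new_tokens: int = 8) -> str:
    """Serialize a request body in a common text-generation format (assumed)."""
    instruction = (
        "Classify the following student message as 'safe' or 'unsafe' "
        "for a K-12 educational setting.\n\nMessage: "
    )
    return json.dumps({
        "inputs": instruction + user_prompt + "\n\nLabel:",
        "parameters": {"max_new_tokens": max_new_tokens, "temperature": 0.1},
    })


def parse_label(response_body: str) -> str:
    """Map generated text to a label, failing closed on anything unexpected."""
    data = json.loads(response_body)
    if isinstance(data, list):
        data = data[0] if data else {}
    if not isinstance(data, dict):
        return UNSAFE_LABEL
    text = str(data.get("generated_text", "")).strip().lower()
    if text.startswith(UNSAFE_LABEL):
        return UNSAFE_LABEL
    if text.startswith(SAFE_LABEL):
        return SAFE_LABEL
    return UNSAFE_LABEL


def classify(user_prompt: str, endpoint_name: str) -> str:
    """Call the endpoint; needs boto3 and AWS credentials at call time."""
    import boto3  # lazy import so the helpers above work without AWS access

    runtime = boto3.client("sagemaker-runtime")
    response = runtime.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=build_payload(user_prompt),
    )
    return parse_label(response["Body"].read().decode("utf-8"))
```

Failing closed in `parse_label` is a design choice for this sketch: when the model's output cannot be interpreted, the message is blocked rather than let through.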

For our production deployment, we selected NVIDIA A10G GPUs available through ml.g5.12xlarge instances, which offered the best balance of performance and cost-effectiveness for our model size. The AWS team provided crucial guidance on selecting the optimal model serving configuration for our use case. This advice helped us optimize both performance and cost by making sure we weren’t over-provisioning resources.

Technical implementation

Below is the code snippet to fine-tune the model on the pre-processed dataset. The instruction tuning dataset is first converted into the domain adaptation dataset format, and the scripts use Fully Sharded Data Parallel (FSDP) as well as the Low-Rank Adaptation (LoRA) method for fine-tuning the model.
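To make the dataset step more concrete, here is a minimal sketch of preparing an instruction-tuning directory in the layout that JumpStart text-generation fine-tuning commonly expects: a JSON Lines file of examples plus a `template.json` with `prompt` and `completion` keys. The field names `message` and `label`, the prompt wording, and the helper name are hypothetical, chosen only for illustration.

```python
import json
from pathlib import Path


def write_instruction_dataset(examples, out_dir):
    """Write train.jsonl and template.json for instruction tuning.

    `examples` is an iterable of dicts with hypothetical keys
    'message' (the student prompt) and 'label' ('safe' or 'unsafe').
    """
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)

    # The template tells the training script how to turn each record
    # into a prompt/completion pair; placeholders match the record keys.
    template = {
        "prompt": (
            "Classify the student message below as safe or unsafe for a "
            "K-12 educational setting.\n\n### Message:\n{message}\n\n"
        ),
        "completion": "{label}",
    }
    (out / "template.json").write_text(json.dumps(template, indent=2))

    # One JSON object per line.
    with (out / "train.jsonl").open("w") as f:
        for ex in examples:
            record = {"message": ex["message"], "label": ex["label"]}
            f.write(json.dumps(record) + "\n")
    return out
```

The resulting directory would then be uploaded to Amazon S3 and its location passed to the training job.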

We define an estimator object first. By default, these models train through domain adaptation, so you must indicate instruction tuning by setting the instruction_tuned hyperparameter to True.
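A minimal sketch of what defining the estimator can look like with the SageMaker Python SDK follows. The model ID, the training instance type, and the epoch and input-length values are assumptions for illustration; this post only states that instruction_tuned is set to True, and the training configuration we actually used may differ.

```python
def build_hyperparameters(epochs: int = 5, max_input_length: int = 1024) -> dict:
    """Hyperparameters as strings, as JumpStart training scripts expect.

    Only instruction_tuned="True" comes from this post; the other
    values are illustrative placeholders.
    """
    return {
        "instruction_tuned": "True",
        "epoch": str(epochs),
        "max_input_length": str(max_input_length),
    }


def make_estimator(
    model_id: str = "meta-textgeneration-llama-3-1-8b",  # assumed JumpStart model ID
    instance_type: str = "ml.g5.12xlarge",               # assumed training instance
):
    """Create a JumpStart estimator; needs the sagemaker SDK and an AWS role."""
    # Imported lazily so build_hyperparameters() is usable without the SDK.
    from sagemaker.jumpstart.estimator import JumpStartEstimator

    estimator = JumpStartEstimator(
        model_id=model_id,
        environment={"accept_eula": "true"},  # Llama models require accepting the EULA
        instance_type=instance_type,
    )
    estimator.set_hyperparameters(**build_hyperparameters())
    return estimator
```

Calling `estimator = make_estimator()` yields the object that the training call uses.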

After we define the estimator, we’re ready to start training:

estimator.fit({"training": train_data_location})

After training, we created a model using the artifacts stored in S3 and deployed the model to a real-time endpoint for evaluation. We tested the model using our test dataset, which covers key scenarios, to validate performance and behavior. We calculated recall, F1, and the confusion matrix, and inspected misclassifications. Where needed, we adjusted the hyperparameters or prompt template and retrained; otherwise, we proceeded with production deployment.
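The evaluation metrics mentioned above can be computed with a few lines of plain Python. This is a generic sketch rather than our evaluation code; it treats `unsafe` as the positive class, and the label strings and function names are assumptions.

```python
from collections import Counter


def confusion_counts(y_true, y_pred, positive="unsafe"):
    """Count true/false positives and negatives for a binary classifier."""
    counts = Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == positive:
            counts["tp" if pred == positive else "fn"] += 1
        else:
            counts["fp" if pred == positive else "tn"] += 1
    return counts


def summarize(counts):
    """Derive accuracy, precision, recall, F1, and false positive rate."""
    tp, fp, fn, tn = (counts[k] for k in ("tp", "fp", "fn", "tn"))
    total = tp + fp + fn + tn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
    }


def misclassified(prompts, y_true, y_pred):
    """Return (prompt, expected, predicted) triples for manual inspection."""
    return [(p, t, y) for p, t, y in zip(prompts, y_true, y_pred) if t != y]
```

Reviewing the output of `misclassified` by hand is what reveals whether errors cluster around particular topics, such as historical violence in a classroom context.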

You can also check out the sample notebook for fine-tuning Llama 3 models on SageMaker JumpStart in SageMaker examples.

We used the Faster autoscaling on Amazon SageMaker realtime endpoints notebook to set up autoscaling on SageMaker AI endpoints.
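For orientation, endpoint autoscaling is configured through Application Auto Scaling by registering the endpoint variant as a scalable target and attaching a scaling policy. The sketch below uses the long-standing invocations-per-instance metric with placeholder capacities, target value, and cooldowns; the referenced notebook may use different metrics and settings, and none of these numbers reflect our production configuration.

```python
def _resource_id(endpoint_name: str, variant_name: str) -> str:
    return f"endpoint/{endpoint_name}/variant/{variant_name}"


def scalable_target(endpoint_name, variant_name="AllTraffic",
                    min_capacity=1, max_capacity=4):
    """Arguments for register_scalable_target (capacities are placeholders)."""
    return {
        "ServiceNamespace": "sagemaker",
        "ResourceId": _resource_id(endpoint_name, variant_name),
        "ScalableDimension": "sagemaker:variant:DesiredInstanceCount",
        "MinCapacity": min_capacity,
        "MaxCapacity": max_capacity,
    }


def target_tracking_policy(endpoint_name, variant_name="AllTraffic",
                           invocations_per_instance=50.0):
    """Arguments for put_scaling_policy (target and cooldowns are placeholders)."""
    return {
        "PolicyName": f"{endpoint_name}-target-tracking",
        "ServiceNamespace": "sagemaker",
        "ResourceId": _resource_id(endpoint_name, variant_name),
        "ScalableDimension": "sagemaker:variant:DesiredInstanceCount",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": invocations_per_instance,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance",
            },
            "ScaleInCooldown": 300,   # scale in slowly after the school day ends
            "ScaleOutCooldown": 60,   # scale out quickly when traffic spikes
        },
    }


def apply_autoscaling(endpoint_name: str) -> None:
    """Apply both settings; needs boto3 and AWS credentials at call time."""
    import boto3  # lazy import so the builders above work without AWS access

    client = boto3.client("application-autoscaling")
    client.register_scalable_target(**scalable_target(endpoint_name))
    client.put_scaling_policy(**target_tracking_policy(endpoint_name))
```

A shorter scale-out cooldown than scale-in cooldown suits cyclical school-hours traffic, where under-provisioning at the start of a class period is costlier than briefly holding extra capacity.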

Validation of solution

To validate our content filtering solution, we conducted extensive testing across multiple dimensions:

- Accuracy testing: In our internal validation testing, the model achieved ~93% accuracy in identifying harmful content across a diverse test set representing various forms of inappropriate material.

- False positive analysis: We worked to minimize instances where legitimate educational content was incorrectly flagged as harmful, achieving a false positive rate of less than 3.75% in test environments; results may vary by school context.

- Performance testing: Our solution maintained response times averaging 1.5 seconds. Even during peak usage periods simulating real classroom environments, the system consistently delivered a seamless user experience with no failed transactions.

- Scalability and reliability validation:

  - Comprehensive load testing achieved a 100% transaction success rate with consistent performance distribution, validating system reliability under sustained educational workload conditions.

  - Transactions completed successfully without degradation in performance or accuracy, demonstrating the system’s ability to scale effectively for classroom-sized concurrent usage scenarios.

- Production deployment: Initial rollout to a select group of schools showed consistent performance in real-world educational environments.

- Student safety outcomes: Schools reported a significant reduction in reported incidents of AI-enabled bullying or inappropriate content generation compared to other AI systems without specialized content filtering.

Fine-tuned model metrics compared to out-of-the-box content filtering solutions

The fine-tuned content filtering model demonstrated higher performance than generic, out-of-the-box filtering solutions in key safety metrics. It achieved a higher accuracy (0.93 compared to 0.89), and better F1-scores for both the safe (0.95 compared to 0.91) and unsafe (0.90 compared to 0.87) classes. The fine-tuned model also demonstrated a more balanced trade-off between precision and recall, indicating more consistent performance across classes. Importantly, it makes fewer false positive errors, misclassifying only 6 safe cases as unsafe compared to 19 for the original out-of-the-box responses in a test set of 160, a significant advantage in safety-sensitive applications. Overall, our fine-tuned content filtering model proved to be more reliable and effective.
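The false positive counts above can be put on a common scale with simple arithmetic. The sketch below expresses each count as a share of the 160-example test set, reading the 19 as the out-of-the-box baseline's count. Note that a strict false positive rate would divide by the number of safe examples only, which this post does not report, so these percentages are shares of the whole test set.

```python
TEST_SET_SIZE = 160
FALSE_POSITIVES = {"fine_tuned": 6, "out_of_the_box": 19}


def share_of_test_set(count: int, total: int = TEST_SET_SIZE) -> float:
    """Percentage of all test examples that were safe but flagged unsafe."""
    return 100.0 * count / total


shares = {name: share_of_test_set(n) for name, n in FALSE_POSITIVES.items()}
for name, pct in shares.items():
    print(f"{name}: {pct:.3f}% of the test set flagged incorrectly")
# fine_tuned: 3.750% of the test set flagged incorrectly
# out_of_the_box: 11.875% of the test set flagged incorrectly
```

The 3.75% share for the fine-tuned model appears consistent with the false positive figure reported in the validation results.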

Future plans

As the PowerBuddy suite evolves and is integrated into other PowerSchool products and agent flows, the content filter model will be continually adapted and improved with fine-tuning for other products with specific needs.

We plan to implement additional specialized adapters using the SageMaker AI multi-adapter inference feature alongside our content filtering model, subject to feasibility and compliance considerations. The idea is to deploy fine-tuned small language models (SLMs) for specific problem solving in cases where large language models (LLMs) are too large and generic to meet the needs of narrower problem domains. For example:

- Decision-making agents specific to the education domain

- Data domain identification in cases of text-to-SQL queries

This approach will deliver significant cost savings by eliminating the need for separate model deployments while maintaining the specialized performance of each adapter.

The goal is to create an AI learning environment that isn’t only safe but also inclusive and responsive to diverse student needs across our global implementations, ultimately empowering students to learn effectively while being protected from harmful content.

Conclusion

The implementation of our specialized content filtering system on Amazon SageMaker AI has been transformative for PowerSchool’s ability to deliver safe AI experiences in educational settings. By building robust guardrails, we’ve addressed one of the primary concerns educators and parents have about introducing AI into classrooms, helping to ensure student safety.

As Shivani Stumpf, our Chief Product Officer, explains: “We’re now tracking around 500 school districts who’ve either purchased PowerBuddy or activated included features, reaching over 4.2 million students approximately. Our content filtering technology ensures students can benefit from AI-powered learning support without exposure to harmful content, creating a safe space for academic growth and exploration.”

The impact extends beyond just blocking harmful content. By establishing trust in our AI systems, we’ve enabled schools to embrace PowerBuddy as a valuable educational tool. Teachers report spending less time monitoring student interactions with technology and more time on personalized instruction. Students benefit from 24/7 learning support without the risks that might otherwise come with AI access.

For organizations requiring domain-specific safety guardrails, consider how the fine-tuning capabilities and managed endpoints of SageMaker AI can be adapted to your use case.

As we continue to expand PowerBuddy’s capabilities with the multi-adapter inference of SageMaker, we remain committed to maintaining the right balance between educational innovation and student safety, helping to ensure that AI becomes a positive force in education that parents, teachers, and students can trust.

About the authors

Gayathri Rengarajan is the Associate Director of Data Science at PowerSchool, leading the PowerBuddy initiative. Known for bridging deep technical expertise with strategic business needs, Gayathri has a proven track record of delivering enterprise-grade generative AI solutions from concept to production.

Harshit Kumar Nyati is a Lead Software Engineer at PowerSchool with 10+ years of experience in software engineering and analytics. He specializes in building enterprise-grade Generative AI applications using Amazon SageMaker AI, Amazon Bedrock, and other cloud services. His expertise includes fine-tuning LLMs, training ML models, hosting them in production, and designing MLOps pipelines to support the full lifecycle of AI applications.

Anjali Vijayakumar is a Senior Solutions Architect at AWS with over 9 years of experience helping customers build reliable and scalable cloud solutions. Based in Seattle, she specializes in architectural guidance for EdTech solutions, working closely with Education Technology companies to transform learning experiences through cloud innovation. Outside of work, Anjali enjoys exploring the Pacific Northwest through hiking.

Dmitry Soldatkin is a Senior AI/ML Solutions Architect at Amazon Web Services (AWS), helping customers design and build AI/ML solutions. Dmitry’s work covers a wide range of ML use cases, with a primary interest in Generative AI, deep learning, and scaling ML across the enterprise. He has helped companies in many industries, including insurance, financial services, utilities, and telecommunications. You can connect with Dmitry on LinkedIn.

Karan Jain is a Senior Machine Learning Specialist at AWS, where he leads the worldwide Go-To-Market strategy for Amazon SageMaker Inference. He helps customers accelerate their generative AI and ML journey on AWS by providing guidance on deployment, cost-optimization, and GTM strategy. He has led product, marketing, and business development efforts across industries for over 10 years, and is passionate about mapping complex service features to customer solutions.