Today, we’re excited to announce the Amazon Bedrock AgentCore Model Context Protocol (MCP) Server. With built-in support for runtime, gateway integration, identity management, and agent memory, the AgentCore MCP Server is purpose-built to speed up the creation of components compatible with Bedrock AgentCore. You can use the AgentCore MCP server for rapid prototyping, production AI solutions, or to scale your agent infrastructure for your enterprise.

Agentic IDEs like Kiro, Amazon Q Developer for CLI, Claude Code, GitHub Copilot, and Cursor, along with sophisticated MCP servers, are transforming how developers build AI agents. Some work typically takes significant time and effort: learning about Bedrock AgentCore services, integrating Runtime and Tools Gateway, managing security configurations, and deploying to production. That work can now be completed in minutes through conversational commands with your coding assistant.

In this post, we introduce the new AgentCore MCP server and walk through the installation steps so you can get started.

AgentCore MCP server capabilities

The AgentCore MCP server brings a new agentic development experience to AWS. It provides specialized tools that automate the complete agent lifecycle, remove the steep learning curve, and reduce development friction that can slow innovation cycles. To address specific agent development challenges, the AgentCore MCP server:

- Transforms agents for AgentCore Runtime integration while preserving your existing agent logic and Strands Agents features. It gives your coding assistant guidance on the minimal functionality changes needed: adding Runtime library imports, updating dependencies, initializing apps with BedrockAgentCoreApp(), converting entrypoints to decorators, and changing direct agent calls to payload handling.

- Automates development environment provisioning by handling the complete setup process through your coding assistant. This includes:
  - installing required dependencies (bedrock-agentcore SDK, bedrock-agentcore-starter-toolkit CLI helpers, strands-agents SDK)
  - configuring AWS credentials and AWS Regions
  - defining execution roles with Bedrock AgentCore permissions
  - setting up ECR repositories
  - creating .bedrock_agentcore.yaml configuration files

- Simplifies tool integration with Bedrock AgentCore Gateway for seamless agent-to-tool communication in the cloud environment.

- Enables simple agent invocation and testing. Through your coding assistant, you can use natural language commands to invoke provisioned agents on AgentCore Runtime and verify the complete workflow, including calls to AgentCore Gateway tools when applicable.
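The Runtime transformation in the first bullet can be sketched as follows. This is a minimal sketch under three assumptions:
- the bedrock-agentcore SDK exposes BedrockAgentCoreApp in bedrock_agentcore.runtime, with an entrypoint decorator and a run() method;
- strands-agents provides the Agent class;
- requests carry the prompt under a "prompt" key. This key and the handle_payload helper are illustrative choices, not something the MCP server mandates.

```python
"""Sketch: a Strands agent adapted for AgentCore Runtime.

Assumes the bedrock-agentcore and strands-agents packages. Their imports are
deferred into main() so handle_payload() can be exercised without them.
"""
import importlib.util
from typing import Any, Callable, Dict


def handle_payload(payload: Dict[str, Any], agent: Callable[[str], Any]) -> Dict[str, Any]:
    """Payload handling: read the prompt from the request instead of hard-coding it.

    The "prompt" key is an assumed request shape, not a fixed contract.
    """
    prompt = payload.get("prompt", "Hello")
    result = agent(prompt)  # the existing agent call is preserved as-is
    return {"result": str(result)}


def main() -> None:
    # Runtime library imports added by the transformation.
    from bedrock_agentcore.runtime import BedrockAgentCoreApp
    from strands import Agent

    app = BedrockAgentCoreApp()  # initialize the Runtime app
    agent = Agent()  # existing agent construction, unchanged

    @app.entrypoint  # the old entrypoint becomes a decorated handler
    def invoke(payload: Dict[str, Any]) -> Dict[str, Any]:
        return handle_payload(payload, agent)

    app.run()  # start serving requests


if __name__ == "__main__":
    if importlib.util.find_spec("bedrock_agentcore") and importlib.util.find_spec("strands"):
        main()
    else:
        print("Install bedrock-agentcore and strands-agents to run this entrypoint.")
```

The agent call sits behind handle_payload, so the request-handling logic can be unit-tested with any callable standing in for the agent. The decorated invoke stays a thin adapter.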

Layered approach

When using the AgentCore MCP server with your favorite client, we encourage you to consider a layered architecture designed to provide comprehensive AI agent development support:

- Layer 1: Agentic IDE or client – Use Kiro, Amazon Q Developer for CLI, Claude Code, Cursor, VS Code extensions, or another natural language interface for developers. For very simple tasks, agentic IDEs are equipped with the right tools to look up documentation and perform tasks specific to Bedrock AgentCore. However, with this layer alone, developers may observe sub-optimal performance across AgentCore developer paths.

- Layer 2: AWS service documentation – Install the AWS Documentation MCP Server for comprehensive AWS service documentation, including context about Bedrock AgentCore.

- Layer 3: Framework documentation – Install the Strands, LangGraph, or other framework documentation MCP servers, or use the llms.txt for framework-specific context.

- Layer 4: SDK documentation – Install the MCP server or use the llms.txt for the Agent Framework SDK and the Bedrock AgentCore SDK. Together these form a combined documentation layer covering the Strands Agents SDK documentation and Bedrock AgentCore API references.

- Layer 5: Steering files – Task-specific guidance for more complex and repeated workflows. Each IDE has a different approach to using steering files (for example, see Steering in the Kiro documentation).

Each layer builds on the previous one and provides increasingly specific context. With all five in place, your coding assistant can handle everything from basic AWS operations to complex agent transformations and deployments.

Installation

To get started with the Amazon Bedrock AgentCore MCP server, you can use the one-click install on the GitHub repository.

Each IDE integrates with an MCP server differently through the mcp.json file. Review the MCP documentation for your IDE (for example, Kiro, Cursor, Amazon Q Developer for CLI, or Claude Code) to find where its mcp.json file is located.

Use the following in your mcp.json:
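The Python sketch below adds an AgentCore entry to an IDE configuration without touching the other servers already listed. It rests on two assumptions:
- the server is launched with uvx from a package named awslabs.amazon-bedrock-agentcore-mcp-server, following the convention of the other AWS Labs MCP servers;
- the IDE reads the disabled and autoApprove fields, as Kiro and Amazon Q Developer do.

Confirm the exact entry against the GitHub repository before relying on it.

```python
"""Sketch: merge an AgentCore MCP server entry into an IDE's mcp.json."""
import copy
import json
from pathlib import Path
from typing import Any, Dict

# Assumed entry; verify the package name, args, and env in the GitHub repository.
SERVER_NAME = "awslabs.amazon-bedrock-agentcore-mcp-server"
SERVER_ENTRY: Dict[str, Any] = {
    "command": "uvx",
    "args": ["awslabs.amazon-bedrock-agentcore-mcp-server@latest"],
    "env": {"FASTMCP_LOG_LEVEL": "ERROR"},
    "disabled": False,  # honored by some IDEs, ignored by others
    "autoApprove": [],
}


def merge_agentcore_server(config: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of config with the AgentCore entry added or replaced."""
    merged = copy.deepcopy(config)
    merged.setdefault("mcpServers", {})[SERVER_NAME] = copy.deepcopy(SERVER_ENTRY)
    return merged


def add_agentcore_server(config_path: Path) -> Dict[str, Any]:
    """Read mcp.json (if present), merge the entry, and write the file back."""
    config: Dict[str, Any] = {}
    if config_path.exists():
        config = json.loads(config_path.read_text(encoding="utf-8") or "{}")
    merged = merge_agentcore_server(config)
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(merged, indent=2) + "\n", encoding="utf-8")
    return merged
```

For a Kiro workspace, the call would look something like add_agentcore_server(Path(".kiro/settings/mcp.json")). The path is an assumption that differs per IDE, so take it from your IDE's MCP documentation.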

For example, here is what the IDE looks like in Kiro, with the AgentCore MCP server connected and its two tools, search_agentcore_docs and fetch_agentcore_doc, available:
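Under the hood, the IDE talks to the server over MCP, which exchanges JSON-RPC 2.0 messages; over the stdio transport each message is one line of JSON. The sketch below builds the messages a client would send to call search_agentcore_docs, in order:
1. an initialize request
2. the initialized notification
3. a tools/list request
4. a tools/call request

It makes three assumptions: the "query" argument name, the client name, and the protocol version string. A real client reads the tool's inputSchema from the tools/list response, and waits for each reply, before calling it.

```python
"""Sketch: the JSON-RPC messages an MCP client sends to call a docs tool."""
import json
from typing import Any, Dict, List, Optional

PROTOCOL_VERSION = "2024-11-05"  # assumed; client and server negotiate the version


def request(msg_id: int, method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """A JSON-RPC 2.0 request: it carries an id, so the server sends a reply."""
    msg: Dict[str, Any] = {"jsonrpc": "2.0", "id": msg_id, "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def notification(method: str) -> Dict[str, Any]:
    """A JSON-RPC 2.0 notification: no id, so no reply is expected."""
    return {"jsonrpc": "2.0", "method": method}


def search_docs_session(query: str) -> List[str]:
    """Newline-delimited stdio messages in send order.

    A real client reads the server's reply to each request before continuing.
    """
    messages = [
        request(1, "initialize", {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {},
            "clientInfo": {"name": "example-client", "version": "0.1"},  # hypothetical client
        }),
        notification("notifications/initialized"),
        request(2, "tools/list"),
        # "query" is an assumed argument name; check the tool's inputSchema first.
        request(3, "tools/call", {"name": "search_agentcore_docs", "arguments": {"query": query}}),
    ]
    return [json.dumps(m) for m in messages]


if __name__ == "__main__":
    for line in search_docs_session("How do I deploy an agent to AgentCore Runtime?"):
        print(line)
```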

Using the AgentCore MCP server for agent development

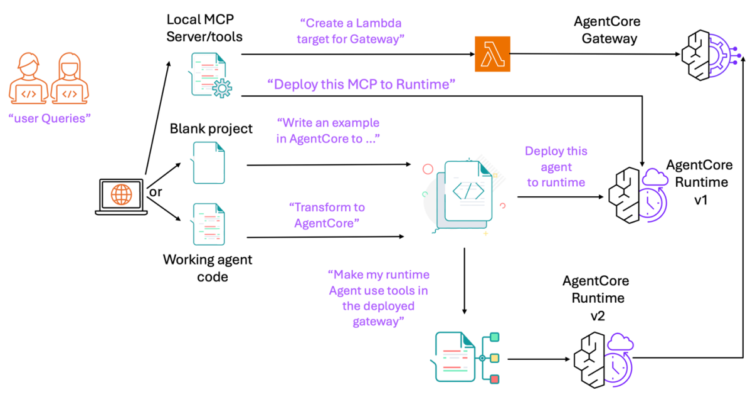

While we show demos for various use cases below using the Kiro IDE, the AgentCore MCP server has also been tested with Claude Code, Amazon Q CLI, Cursor, and the VS Code Q plugin. First, let’s look at a typical agent development lifecycle using AgentCore services. This is only one example of what the available tools can do, and you are free to explore other use cases simply by instructing the agent in your favorite agentic IDE.

The agent development lifecycle follows these steps:

- The user takes a local set of tools or MCP servers and either:

  - Creates a Lambda target for AgentCore Gateway; or

  - Deploys the MCP server as-is on AgentCore Runtime

- The user prepares the actual agent code using a preferred framework like Strands Agents or LangGraph. The user can either:

  - Start from scratch (the server can fetch docs from the Strands Agents or LangGraph documentation)

  - Start from fully or partially working agent code

- The user asks the agent to transform the code into a format compatible with AgentCore Runtime, so that the agent can be deployed later. This causes the agent to:

  - Write an appropriate requirements.txt file

  - Import the necessary libraries, including bedrock_agentcore

  - Decorate the main handler (or create one) to access the core agent calling logic or input handler

- The user may then ask the agent to deploy to AgentCore Runtime. The agent can look up documentation and can use the AgentCore CLI to deploy the agent code to Runtime.

- The user can test the agent by asking the agent to do so. The client writes and executes the AgentCore CLI command required for this.

- The user then asks the agent to modify the code to use the deployed AgentCore Gateway MCP server inside this AgentCore Runtime agent. The agent then:

  - Modifies the original code to add an MCP client that can call the deployed gateway

  - Deploys a new version (v2) of the agent to Runtime

  - Tests this integration with a new prompt
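The first step in the lifecycle turns local tools into a Lambda target for AgentCore Gateway. A target function of that kind can be sketched as follows. The sketch assumes Gateway passes:
- the tool's arguments as the Lambda event;
- the invoked tool's name in the Lambda client context under bedrockAgentCoreToolName, prefixed with the target name and a triple-underscore delimiter.

Treat that contract as an assumption to verify against the current Gateway documentation. The get_weather tool, its "city" argument, and its response are hypothetical placeholders.

```python
"""Sketch: a Lambda function serving as an AgentCore Gateway tool target.

Assumption: Gateway sends the tool arguments as the event and the tool name in
context.client_context.custom["bedrockAgentCoreToolName"], formatted as
"<target-name>___<tool-name>". Verify against the current Gateway docs.
"""
from typing import Any, Callable, Dict

DELIMITER = "___"


def get_weather(args: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical tool; replace with a call to a real weather service."""
    city = args.get("city", "unknown")
    return {"city": city, "forecast": "sunny"}  # placeholder data


TOOLS: Dict[str, Callable[[Dict[str, Any]], Dict[str, Any]]] = {"get_weather": get_weather}


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    # Tolerate a missing client context (for example, a manual test invocation).
    custom = getattr(getattr(context, "client_context", None), "custom", None) or {}
    qualified_name = custom.get("bedrockAgentCoreToolName", "")
    # Strip the "<target-name>___" prefix to get the bare tool name.
    tool_name = qualified_name.split(DELIMITER, 1)[-1]
    tool = TOOLS.get(tool_name)
    if tool is None:
        return {"error": f"Unknown tool: {qualified_name!r}"}
    return tool(event)
```

A single handler that dispatches on the tool name lets one Lambda target back several Gateway tools. Adding a tool then means adding an entry to TOOLS (and to the target's tool schema).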

Here’s a demo of the MCP server working with the Cursor IDE. We see the agent perform the following steps:

- Transform weather_agent.py to be compatible with AgentCore Runtime

- Use the AgentCore CLI to deploy the agent

- Test the deployed agent with a successful prompt
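The deploy-and-test loop in this demo maps onto the agentcore CLI from the starter toolkit. The sketch below prints the configure, launch, and invoke commands in order, and runs them when given --run. The subcommands and the --entrypoint flag follow the bedrock-agentcore-starter-toolkit documentation at the time of writing and may change, so check agentcore --help. The weather_agent.py filename comes from the demo; the prompt text and its "prompt" key are placeholders that must match your handler.

```python
"""Sketch: the AgentCore CLI steps for deploying and testing an agent."""
import json
import shlex
import subprocess
import sys
from typing import List


def deploy_commands(entrypoint: str, prompt: str) -> List[List[str]]:
    """Commands in execution order (assumed starter-toolkit syntax)."""
    return [
        ["agentcore", "configure", "--entrypoint", entrypoint],  # writes .bedrock_agentcore.yaml
        ["agentcore", "launch"],  # deploys the configured agent to AgentCore Runtime
        ["agentcore", "invoke", json.dumps({"prompt": prompt})],  # tests the deployed agent
    ]


def main(argv: List[str]) -> None:
    commands = deploy_commands("weather_agent.py", "What's the weather in Seattle?")
    for cmd in commands:
        print(shlex.join(cmd))  # dry run by default: show what would execute
        if "--run" in argv:
            subprocess.run(cmd, check=True)  # stop at the first failing step


if __name__ == "__main__":
    main(sys.argv[1:])
```

Defaulting to a dry run keeps the script safe to read and share. Passing --run executes the same steps a coding assistant would otherwise issue on your behalf.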

Here’s another example: the Cursor IDE deploys a LangGraph agent to AgentCore Runtime, following steps similar to those above.

Clean up

If you’d like to uninstall the MCP server, follow the MCP documentation for your IDE (for example, Kiro, Amazon Q Developer for CLI, Cursor, or Claude Code).
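Where an IDE manages servers only through mcp.json, uninstalling typically amounts to deleting the server's entry from that file. A minimal sketch, assuming the entry is keyed as awslabs.amazon-bedrock-agentcore-mcp-server; use whatever key you chose at install time. An IDE's own MCP management commands, where they exist, remain the safer route.

```python
"""Sketch: remove an MCP server entry from an mcp.json file."""
import json
from pathlib import Path
from typing import Any, Dict

SERVER_NAME = "awslabs.amazon-bedrock-agentcore-mcp-server"  # assumed key


def remove_server(config: Dict[str, Any], name: str = SERVER_NAME) -> bool:
    """Delete the entry in place; return True if something was removed."""
    servers = config.get("mcpServers", {})
    if name in servers:
        del servers[name]
        return True
    return False


def uninstall(config_path: Path) -> bool:
    """Rewrite mcp.json without the entry; leave the file untouched otherwise."""
    config = json.loads(config_path.read_text(encoding="utf-8"))
    removed = remove_server(config)
    if removed:
        config_path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return removed
```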

Conclusion

In this post, we showed how you can use the AgentCore MCP server with your agentic IDE of choice to speed up your development workflows.

We encourage you to review the GitHub repository, and to read through and use the following resources in your development:

Try out the AgentCore MCP server, and share any feedback through issues in our GitHub repository.

About the authors