This post is co-written with Bogdan Arsenie and Nick Mattei from PerformLine.

PerformLine operates within the marketing compliance industry, a specialized subset of the broader compliance software market, which includes various compliance solutions like anti-money laundering (AML), know your customer (KYC), and others. Specifically, marketing compliance refers to adhering to regulations and guidelines set by government agencies that make sure a company’s marketing, advertising, and sales content and communications are truthful, accurate, and not misleading for consumers. PerformLine is the leading service providing comprehensive compliance oversight across marketing, sales, and partner channels. As pioneers of the marketing compliance industry, PerformLine has conducted over 1.1 billion compliance observations over the past 10+ years, automating the entire compliance process, from pre-publication review of materials to continuous monitoring of consumer-facing channels such as websites, emails, and social media. Trusted by consumer finance brands and global organizations, PerformLine uses AI-driven solutions to protect brands and their consumers, transforming compliance efforts into a competitive advantage.

“Discover. Monitor. Act. This isn’t just our tagline; it’s the foundation of our innovation at PerformLine,” says PerformLine’s CTO Bogdan Arsenie. PerformLine’s engineering team brings these principles to life by developing AI-powered technology solutions. In this post, PerformLine and AWS explore how PerformLine used Amazon Bedrock to accelerate compliance processes, generate actionable insights, and provide contextual data, delivering the speed and accuracy essential for large-scale oversight.

The problem

One of PerformLine’s enterprise customers needed a more efficient process for running compliance checks on newly launched product pages, particularly those that integrate multiple products within the same visual and textual framework. These complex pages often feature overlapping content that can apply to one product, several products, or even all of them at once, necessitating a context-aware interpretation that mirrors how a typical consumer would view and interact with the content. By adopting AWS and the architecture discussed in this post, PerformLine can retrieve and analyze these intricate pages through AI-driven processing, generating detailed insights and contextual data that capture the nuanced interplay between various product elements. After the relevant information is extracted and structured, it’s fed directly into their rules engine, enabling robust compliance checks. This creates a seamless flow, from data ingestion to rules-based analysis. It not only preserves the depth of each product’s presentation but also delivers the speed and accuracy critical to large-scale oversight.

Monitoring millions of webpages daily for compliance demands a system that can intelligently parse, extract, and analyze content at scale, much like the approach PerformLine has developed for their enterprise customers. In this dynamic landscape, the ever-evolving nature of web content challenges traditional static parsing, requiring a context-aware and adaptive solution. This architecture not only processes bulk data offline but also delivers near real-time performance for one-time requests, dynamically scaling to manage the varied complexity of each page. By using AI-powered inference, PerformLine provides comprehensive coverage of every product and marketing element across the web, while striking a careful balance between accuracy, performance, and cost.

Solution overview

With this flexible, adaptable solution, PerformLine can tackle even the most challenging webpages, providing comprehensive coverage when extracting and analyzing web content with multiple products. At the same time, by combining consistency with the adaptability of foundation models (FMs), PerformLine can maintain reliable performance across the diverse range of products and websites their customers monitor. This dual focus on agility and operational consistency makes sure their customers benefit from robust compliance checks and data integrity, without sacrificing the speed or scale needed to remain competitive.

PerformLine’s upstream ingestion pipeline efficiently collects millions of web pages and their associated metadata in a batch process. Downstream, assets are submitted to PerformLine’s rules engine and compliance review processes. It was critical that this solution not disrupt these processes or introduce cascading changes.

PerformLine decided to use generative AI and Amazon Bedrock to address their core challenges. Amazon Bedrock offers a broad selection of models, including Amazon Nova, and is continuously expanding its feature set for using FMs at scale. This provides a reliable foundation on which to build a highly available and efficient content processing system.

PerformLine’s solution incorporates the following key components:

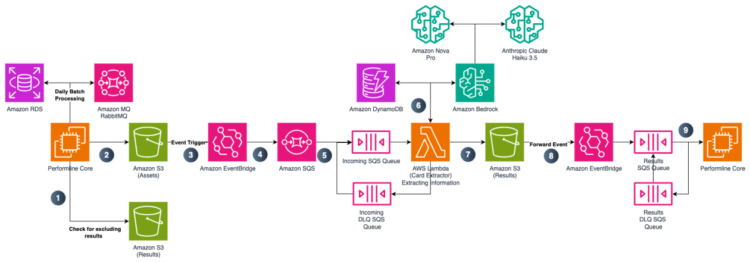

PerformLine implemented a scalable, serverless, event-driven architecture (shown in the following diagram) that seamlessly integrates with their existing system, requiring less than a day to develop and deploy. This made it possible to focus on prompt optimization, evaluation, and cost management rather than infrastructure overhead. This architecture allows PerformLine to dynamically parse, extract, and analyze web content with high reliability, flexibility, and cost-efficiency.

The system implements multiple queue types (Incoming, DLQ, Results) and includes error handling mechanisms. Data flows through various AWS services, including:

- Amazon RDS for initial data storage

- Amazon MQ (RabbitMQ) for message handling

- Amazon S3 for asset storage

- Amazon EventBridge for event management

- Amazon SQS for queue management

- AWS Lambda for serverless processing

- Amazon DynamoDB for NoSQL data storage

PerformLine’s process consists of several steps, including processing (Step 1), event trigger and storage (Steps 2–6), structured output and storage (Step 7), and downstream processing and compliance checks (Steps 8–9):

1. Millions of pages are processed by an upstream extract, transform, and load (ETL) process from PerformLine’s core systems running on the AWS Cloud.

2. When a page is retrieved, it triggers an event in the compliance check system.

3. Amazon S3 stores the data from a page, organized according to metadata.

4. EventBridge uses event-driven processing to route Amazon S3 events to Amazon SQS.

5. Amazon SQS queues messages for processing and enables messages to be retried on failure.

6. A Lambda function consumes SQS messages and scales dynamically to handle even unpredictable workloads:

   - This function uses Amazon Bedrock to perform extraction and generative AI analysis of the content from Amazon SQS. Amazon Bedrock offers the flexibility to choose the right model for the job. For PerformLine’s use case, Amazon Nova Pro was best suited for complex requests that require a powerful model while still offering a high performance-to-cost ratio. Anthropic’s Claude Haiku model allows for optimized quick calls, where a fast response is paramount for additional processing if needed. Amazon Bedrock features, including Amazon Bedrock Prompt Management and inference profiles, are used to increase input code variability without affecting output and to reduce the complexity of using FMs through Amazon Bedrock.

   - The function stores customer-defined product schemas in Amazon DynamoDB, enabling dynamic large language model (LLM) targeting and schema-driven output generation.

7. Amazon S3 stores the extracted data, which is formatted as structured JSON adhering to the target schema.

8. EventBridge forwards Amazon S3 events to Amazon SQS, making extracted data available for downstream processing.

9. Compliance checks and business rules, running on other PerformLine systems, are applied to validate and enforce regulatory requirements.
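The hand-off from Amazon SQS to Lambda in the steps above can be sketched as follows. This is a minimal illustration, not PerformLine’s actual code: it assumes each SQS message body is an Amazon S3 event delivered through EventBridge (with the bucket and key under `detail`), and that the SQS event source mapping has partial batch responses (`ReportBatchItemFailures`) enabled. The `process_page` callable is a hypothetical stand-in for the work that fetches the page and calls Amazon Bedrock.

```python
import json


def extract_s3_location(sqs_record):
    """Return (bucket, key) from an SQS record whose body is an EventBridge S3 event."""
    event = json.loads(sqs_record["body"])
    detail = event["detail"]
    return detail["bucket"]["name"], detail["object"]["key"]


def handler(event, context, process_page=None):
    """Process each SQS record and report failures per message.

    process_page is a hypothetical callable (bucket, key) -> None that would
    fetch the page from Amazon S3 and run the Amazon Bedrock analysis.
    """
    failures = []
    for record in event.get("Records", []):
        try:
            bucket, key = extract_s3_location(record)
            if process_page is not None:
                process_page(bucket, key)
        except Exception:
            # Report only this message as failed so SQS retries it alone;
            # the other messages in the batch are treated as successful.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

Returning failures per message means one malformed page does not force the whole batch back onto the queue, which fits the retry behavior described for the queue and its DLQ.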

Cost optimizations

The solution offers several cost optimizations, including change data capture (CDC) on the web and strategic multi-pass inference. After a page’s content has been analyzed and formatted, it’s written back to a partition that includes a metadata hash of the asset. This allows upstream processes to determine whether a page has already been processed and whether its content has changed. The key benefits of this approach include:

- Alleviating redundant processing of the same pages, contributing to a 15% workload reduction in human evaluation tasks at PerformLine. This frees time for human evaluators and allows them to focus on critical pages rather than all pages.

- Avoiding reprocessing of unchanged pages, dynamically reducing PerformLine’s analysts’ workload by over 50% in addition to the deduplication gains.
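The hash-based change detection described above can be illustrated with a short sketch. The post does not specify which fields feed the hash or how the partition is laid out, so the SHA-256 digest of the page bytes, the key format, and the `existing_keys` set (standing in for a lookup against Amazon S3) are all assumptions made for illustration.

```python
import hashlib


def content_hash(page_bytes):
    """Return a stable fingerprint of the page content."""
    return hashlib.sha256(page_bytes).hexdigest()


def output_key(page_id, page_bytes, prefix="extracted"):
    """Build a hypothetical storage key that embeds the content hash."""
    return f"{prefix}/{page_id}/{content_hash(page_bytes)}.json"


def needs_processing(page_id, page_bytes, existing_keys):
    """A page is skipped only if output for this exact content already exists."""
    return output_key(page_id, page_bytes) not in existing_keys
```

Because the hash is part of the key, an unchanged page maps to an existing key and is skipped, while any change in content produces a new key and triggers reprocessing.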

LLM inference costs can escalate at scale, but context and carefully structured prompts are critical for accuracy. To optimize costs while maintaining precision, PerformLine implemented a multi-pass approach using Amazon Bedrock:

- Initial filtering with Amazon Nova Micro – This lightweight model efficiently identifies relevant products at minimal cost.

- Targeted extraction with Amazon Nova Lite – Identified products are batched into smaller groups and passed to Amazon Nova Lite for deeper analysis. This keeps PerformLine within token limits while improving extraction accuracy.

- Increased accuracy through context-aware processing – By first identifying the target content and then processing it in smaller batches, PerformLine significantly improved accuracy while minimizing token consumption.
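The multi-pass pattern above can be sketched without tying it to a specific model. In this illustration, `identify_products` and `extract_details` are hypothetical callables standing in for the lightweight first pass and the deeper second pass, and the batch size of 5 is an arbitrary placeholder rather than a value from PerformLine.

```python
def chunked(items, size):
    """Yield consecutive slices of items with at most size elements each."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(items), size):
        yield items[start:start + size]


def multi_pass_extract(page_text, identify_products, extract_details, batch_size=5):
    """Run a cheap identification pass, then a batched extraction pass.

    identify_products(page_text) -> list of product names (first pass).
    extract_details(page_text, batch) -> list of dicts (second pass).
    """
    product_names = identify_products(page_text)
    results = []
    for batch in chunked(product_names, batch_size):
        # Each call sees only a small group of products, which keeps the
        # prompt and the expected output within token limits.
        results.extend(extract_details(page_text, batch))
    return results
```

Keeping the two passes behind plain callables makes it straightforward to swap the model used for either pass and compare cost and accuracy.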

Use of Amazon Bedrock

During initial testing, PerformLine quickly realized the need for a more scalable approach to prompt management. Manually tracking multiple prompt versions and templates became inefficient as PerformLine iterated and collaborated.

Amazon Bedrock Prompt Management provided a centralized solution, enabling them to version, manage, and seamlessly deploy prompts to production. After the prompts are deployed, they can be dynamically referenced in AWS Lambda, allowing for flexible configuration. Additionally, by using Amazon Bedrock application inference profile endpoints, PerformLine can dynamically adjust the models the Lambda function invokes, track cost per invocation, and attribute costs to specific application instances by setting up cost tags.

To streamline model interactions, PerformLine chose the Amazon Bedrock Converse API, which provides a developer-friendly, standardized interface for model invocation. When combined with inference endpoints and prompt management, a Lambda function using the Amazon Bedrock Converse API becomes highly configurable: PerformLine developers can rapidly test new models and prompts, evaluate results, and iterate without needing to rebuild or redeploy. The simplification of prompt management and the ability to deploy various models through Amazon Bedrock is shown in the following diagram.
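A configuration-driven call through the Converse API might look like the following sketch. The `client` argument is expected to be a Bedrock Runtime client (for example, one created with `boto3.client("bedrock-runtime")`); it is passed in so the function can be exercised without AWS access. The `BEDROCK_MODEL_ID` environment variable is a hypothetical name for wherever the model ID or inference profile ARN is configured, and the inference settings are placeholders.

```python
import os


def build_converse_request(model_id, prompt_text, max_tokens=1024, temperature=0.0):
    """Assemble keyword arguments for the Bedrock Runtime converse call."""
    return {
        "modelId": model_id,  # a model ID or an inference profile ARN
        "messages": [{"role": "user", "content": [{"text": prompt_text}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }


def invoke_model(client, prompt_text, model_id=None):
    """Call converse and return the text of the first content block."""
    # BEDROCK_MODEL_ID is an assumed variable name, read only when no
    # model_id is passed explicitly.
    model_id = model_id or os.environ["BEDROCK_MODEL_ID"]
    response = client.converse(**build_converse_request(model_id, prompt_text))
    return response["output"]["message"]["content"][0]["text"]
```

Because the model identifier comes from configuration rather than code, switching models or profiles becomes a configuration change instead of a redeployment, which is the property the paragraph above describes.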

The diagram shows a model configuration architecture with three main components:

- Inference system: model ID integration, profile configuration, content management, and inference settings

- Prompt management: version control (V1 and Draft versions), publish ID tracking, Model A specifications, and store configurations

- Environment control: separate PROD and DEV paths, environment-specific parameter stores, invoke ID management, and engineering iteration tracking

Future plans and enhancements

PerformLine is excited to dive into additional Amazon Bedrock features, including prompt caching and Amazon Bedrock Flows.

With prompt caching, users can checkpoint prompt tokens, effectively caching context for reuse in subsequent API calls. Prompt caching on Amazon Bedrock offers up to 85% latency improvement and 90% cost reduction compared to calls without prompt caching. PerformLine sees prompt caching as a feature that will become the standard moving forward. They have numerous use cases for their data, and being able to apply further analysis on the same content at a lower cost creates new opportunities for feature expansion and development.
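As a rough sketch of how a cache checkpoint could be expressed in a Converse request, the example below places a `cachePoint` block after the shared context in the `system` field, so that follow-up questions about the same page can reuse it. This is an assumption about how PerformLine might adopt the feature, not something the post describes; prompt caching is supported only on certain models and applies only above a minimum prompt length, so check the Amazon Bedrock documentation for the model in use.

```python
def build_cached_request(model_id, shared_context, question):
    """Build converse arguments with a cache checkpoint after the shared context."""
    return {
        "modelId": model_id,
        "system": [
            {"text": shared_context},
            # Content before this marker is eligible for caching and reuse.
            {"cachePoint": {"type": "default"}},
        ],
        "messages": [{"role": "user", "content": [{"text": question}]}],
    }
```

The resulting dictionary would be passed to a Bedrock Runtime client as `client.converse(**request)`, with only the `question` changing between calls on the same page content.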

Amazon Bedrock Flows is a visual workflow builder that enables users to orchestrate multi-step generative AI tasks by connecting FMs and APIs without extensive coding. Amazon Bedrock Flows is a next step in simplifying PerformLine’s orchestration of knowledge bases, prompt caching, and even Amazon Bedrock Agents in the future. Creating flows can help reduce time to feature deployment and maintenance.

Summary

PerformLine has implemented a highly scalable, serverless, AI-driven architecture that enhances efficiency, cost-effectiveness, and compliance in the web content processing pipeline. By using Amazon Bedrock, EventBridge, Amazon SQS, Lambda, and DynamoDB, they have built a solution that can dynamically scale, optimize AI inference costs, and reduce redundant processing, all while maintaining operational flexibility and compliance integrity. Based on their current volume and workflow, PerformLine is projected to process between 1.5 and 2 million pages daily, from which they expect to extract approximately 400,000 to 500,000 products. Additionally, PerformLine anticipates applying rules to each asset, resulting in about 500,000 rule observations that will require review each day.

Throughout the design process, PerformLine made sure their solution remains as simple as possible while still delivering operational flexibility and integrity. This approach minimizes complexity, enhances maintainability, and accelerates deployment, empowering them to adapt quickly to evolving business needs without unnecessary overhead.

By using a serverless AI-driven architecture built on Amazon Bedrock, PerformLine helps their customers tackle even the most complex, multi-product webpages with unparalleled accuracy and efficiency. This holistic approach interprets visual and textual elements as a typical consumer would, verifying that every product variant is accurately assessed for compliance. The resulting insights are then fed directly into a rules engine, enabling rapid, data-driven decisions. For PerformLine’s customers, this means less redundant processing, lower operational costs, and a dramatically simplified compliance workflow, all without compromising on speed or accuracy. By reducing the overhead of large-scale data analysis and streamlining compliance checks, PerformLine’s solution ultimately frees teams to focus on driving innovation and delivering value.

About the authors

Bogdan Arsenie is the Chief Technology Officer at PerformLine, with over two decades of experience leading technological innovation across digital advertising, big data, mobile gaming, and social engagement. Bogdan began programming at age 13, customizing bulletin board software to fund his passion for Star Trek memorabilia. He served as PerformLine’s founding CTO from 2007–2009, pioneering their initial compliance platform. Later, as CTO at the Rumie Initiative, he helped scale a global education initiative recognized by Google’s Impact Challenge.

Nick Mattei is a Senior Software Engineer at PerformLine. He is focused on solutions architecture and distributed application development in AWS. Outside of work, Nick is an avid cyclist and skier, always looking for the next great climb or powder day.

Shervin Suresh is a Generative AI Solutions Architect at AWS. He supports generative AI adoption both internally at AWS and externally with fast-growing startup customers. He is passionate about using technology to help improve the lives of people in all aspects. Outside of work, Shervin loves to cook, build LEGO, and collaborate with people on things they are passionate about.

Medha Aiyah is a Solutions Architect at AWS. She graduated from the University of Texas at Dallas with an MS in Computer Science, with a focus on AI/ML. She supports ISV customers in a wide variety of industries by empowering customers to use AWS optimally to achieve their business goals. She is especially interested in guiding customers on ways to implement AI/ML solutions and use generative AI. Outside of work, Medha enjoys hiking, traveling, and dancing.

Michael Zhang is a generalist Solutions Architect at AWS working with small to medium businesses. He has been with Amazon for over 3 years and uses his background in computer science and machine learning to support customers on AWS. In his free time, Michael loves to hike and explore other cultures.
