Organizations need direct answers to their business questions without the complexity of writing SQL queries or navigating through business intelligence (BI) dashboards to extract data from structured data stores. Examples of structured data include tables, databases, and data warehouses that conform to a predefined schema. Large language model (LLM)-powered natural language query systems transform how we interact with data, so you can ask questions like “Which region has the highest revenue?” and receive immediate, insightful responses. Implementing these capabilities requires careful consideration of your specific needs: whether you need to integrate knowledge from other systems (for example, unstructured sources like documents), serve internal or external users, handle the analytical complexity of questions, or customize responses for business appropriateness, among other factors.

In this post, we discuss LLM-powered structured data query patterns in AWS. We provide a decision framework to help you select the best pattern for your specific use case.

Business challenge: Making structured data accessible

Organizations have vast amounts of structured data but struggle to make it effectively accessible to non-technical users for several reasons:

- Business users lack the technical knowledge (like SQL) needed to query data

- Employees rely on BI teams or data scientists for analysis, limiting self-service capabilities

- Gaining insights often involves time delays that impact decision-making

- Predefined dashboards constrain spontaneous exploration of data

- Users might not know what questions are possible or where relevant data resides

Solution overview

An effective solution should provide the following:

- A conversational interface that allows employees to query structured data sources without technical expertise

- The ability to ask questions in everyday language and receive accurate, trustworthy answers

- Automatic generation of visualizations and explanations to clearly communicate insights

- Integration of information from different data sources (both structured and unstructured) presented in a unified manner

- Ease of integration with existing investments and rapid deployment capabilities

- Access restriction based on identities, roles, and permissions

In the following sections, we explore five patterns that can address these needs, highlighting the architecture, ideal use cases, benefits, considerations, and implementation resources for each approach.

Pattern 1: Direct conversational interface using an enterprise assistant

This pattern uses Amazon Q Business, a generative AI-powered assistant, to provide a chat interface on data sources with native connectors. When users ask questions in natural language, Amazon Q Business connects to the data source, interprets the question, and retrieves relevant information without requiring intermediate services. The following diagram illustrates this workflow.

This approach is ideal for internal enterprise assistants that need to answer business user-facing questions from both structured and unstructured data sources in a unified experience. For example, HR personnel can ask “What’s our parental leave policy and how many employees used it last quarter?” and receive answers drawn from both leave policy documentation and employee databases together in one interaction. With this pattern, you can benefit from the following:

- Simplified connectivity through the extensive Amazon Q Business library of built-in connectors

- Streamlined implementation with a single service to configure and manage

- Unified search experience for accessing both structured and unstructured information

- Built-in understanding of, and respect for, existing identities, roles, and permissions

You can define the scope of data to be pulled in the form of a SQL query. Amazon Q Business pre-indexes database content based on the defined SQL queries and uses this index when responding to user questions. Similarly, you can define the sync mode and schedule to determine how often you want to update your index. Amazon Q Business does the heavy lifting of indexing the data using a Retrieval Augmented Generation (RAG) approach and using an LLM to generate well-written answers. For more details on how to set up Amazon Q Business with an Amazon Aurora PostgreSQL-Compatible Edition connector, see Discover insights from your Amazon Aurora PostgreSQL database using the Amazon Q Business connector. You can also refer to the complete list of supported data source connectors.

Pattern 2: Enhancing BI tool with natural language querying capabilities

This pattern uses Amazon Q in QuickSight to process natural language queries against datasets that have been previously configured in Amazon QuickSight. Users can ask questions in everyday language within the QuickSight interface and get visualized answers without writing SQL. This approach works with QuickSight (Enterprise or Q edition) and supports various data sources, including Amazon Relational Database Service (Amazon RDS), Amazon Redshift, Amazon Athena, and others. The architecture is depicted in the following diagram.

This pattern is well-suited for internal BI and analytics use cases. Business analysts, executives, and other employees can ask ad-hoc questions to get immediate visualized insights in the form of dashboards. For example, executives can ask questions like “What were our top 5 regions by revenue last quarter?” and immediately see responsive charts, reducing dependency on analytics teams. The benefits of this pattern are as follows:

- It enables natural language queries that produce rich visualizations and charts

- No coding or machine learning (ML) experience is required; the heavy lifting, such as natural language interpretation and SQL generation, is managed by Amazon Q in QuickSight

- It integrates seamlessly within the familiar QuickSight dashboard environment

Existing QuickSight users might find this the most straightforward way to take advantage of generative AI benefits. You can optimize this pattern for higher-quality results by configuring topics with curated fields, synonyms, and expected question phrasing. This pattern pulls data only from a specific configured data source in QuickSight to produce a dashboard as an output. For more details, check out QuickSight DemoCentral to view a demo in QuickSight, see the generative BI learning dashboard, and view guided instructions to create dashboards with Amazon Q. Also refer to the list of supported data sources.

Pattern 3: Combining BI visualization with conversational AI for a seamless experience

This pattern merges BI visualization capabilities with conversational AI to create a seamless knowledge experience. By integrating Amazon Q in QuickSight with Amazon Q Business (with the QuickSight plugin enabled), organizations can provide users with a unified conversational interface that draws on both unstructured and structured data. The following diagram illustrates the architecture.

This is ideal for enterprises that want an internal AI assistant to answer a variety of questions, whether it’s a metric from a database or knowledge from a document. For example, executives can ask “What was our Q4 revenue growth?” and see visualized results from data warehouses in Amazon Redshift through QuickSight, then immediately follow up with “What is our company vacation policy?” to access HR documentation, all within the same conversation flow. This pattern offers the following benefits:

- It unifies answers from structured data (databases and warehouses) and unstructured data (documents, wikis, emails) in a single application

- It delivers rich visualizations alongside conversational responses in a seamless experience with real-time analysis in chat

- There is no duplication of work: if your BI team has already built datasets and topics in QuickSight for analytics, you can reuse them in Amazon Q Business

- It maintains conversational context when switching between data and document-based inquiries

For more details, see Query structured data from Amazon Q Business using Amazon QuickSight integration and Amazon Q Business now provides insights from your databases and data warehouses (preview).

Another variation of this pattern is recommended for BI users who want to expose unified data through rich visuals in QuickSight, as illustrated in the following diagram.

For more details, see Integrate unstructured data into Amazon QuickSight using Amazon Q Business.

Pattern 4: Building knowledge bases from structured data using managed text-to-SQL

This pattern uses Amazon Bedrock Knowledge Bases to enable structured data retrieval. The service provides a fully managed text-to-SQL module that alleviates common challenges in developing natural language query applications for structured data. This implementation uses Amazon Bedrock (Amazon Bedrock Agents and Amazon Bedrock Knowledge Bases) along with your choice of data warehouse, such as Amazon Redshift or Amazon SageMaker Lakehouse. The following diagram illustrates the workflow.

For example, a seller can use this capability embedded into an ecommerce application to ask a complex query like “Give me top 5 products whose sales increased by 50% last year as compared to previous year? Also group the results by product category.” The system automatically generates the appropriate SQL, executes it against the data sources, and delivers results or a summarized narrative. This pattern features the following benefits:

- It provides fully managed text-to-SQL capabilities without requiring model training

- It enables direct querying of data from the source without data movement

- It supports complex analytical queries on warehouse data

- It offers flexibility in foundation model (FM) selection through Amazon Bedrock

- API connectivity, personalization options, and context-aware chat features make it better suited for customer-facing applications
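As a rough illustration of the API connectivity mentioned above, the following Python sketch shows how an application might send a natural language question to a knowledge base through the `RetrieveAndGenerate` operation of the `bedrock-agent-runtime` client in boto3. The function names, the knowledge base ID, and the model ARN are placeholders introduced for this example, not values from this post, and the sketch assumes the knowledge base has already been connected to a structured data store.

```python
from typing import Any


def build_kb_request(question: str, knowledge_base_id: str, model_arn: str) -> dict[str, Any]:
    """Build keyword arguments for the RetrieveAndGenerate operation.

    knowledge_base_id and model_arn are placeholders supplied by the caller.
    """
    if not question.strip():
        raise ValueError("question must not be empty")
    return {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
            },
        },
    }


def ask_knowledge_base(
    question: str, knowledge_base_id: str, model_arn: str, client: Any = None
) -> str:
    """Send the question to the knowledge base and return the generated answer text."""
    if client is None:
        # Imported lazily so the request builder can be used without the AWS SDK.
        import boto3

        client = boto3.client("bedrock-agent-runtime")
    response = client.retrieve_and_generate(
        **build_kb_request(question, knowledge_base_id, model_arn)
    )
    return response["output"]["text"]
```

Calling `ask_knowledge_base("Which region has the highest revenue?", "<your-kb-id>", "<your-model-arn>")` requires AWS credentials and an existing knowledge base. Passing your own `client` object makes the function straightforward to exercise offline.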

Choose this pattern when you need a flexible, developer-oriented solution. This approach works well for applications (internal or external) where you control the UI design. Default outputs are primarily text or structured data. However, executing arbitrary SQL queries can be a security risk for text-to-SQL applications. We recommend that you take precautions as needed, such as using restricted roles, read-only databases, and sandboxing. For more information on how to build this pattern, see Empower financial analytics by creating structured knowledge bases using Amazon Bedrock and Amazon Redshift. For a list of supported structured data stores, refer to Create a knowledge base by connecting to a structured data store.
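In addition to the database-level precautions above, an application can apply a coarse filter to generated SQL before running it. The following Python sketch is one possible pre-execution check, not part of any AWS service. The function name and keyword list are assumptions made for this example, and the check is deliberately conservative: it can reject valid queries, for instance when a string literal contains a semicolon or a blocked keyword.

```python
import re

# Keywords that modify data, schema, or permissions. The list is illustrative,
# not exhaustive; extend it for your database engine.
FORBIDDEN = re.compile(
    r"\b(insert|update|delete|merge|drop|alter|create|truncate|grant|revoke|copy|call|execute)\b",
    re.IGNORECASE,
)


def is_read_only_sql(sql: str) -> bool:
    """Return True if sql looks like a single SELECT (or WITH ... SELECT) statement."""
    # Remove line and block comments so keywords cannot hide inside them.
    stripped = re.sub(r"--[^\n]*|/\*.*?\*/", " ", sql, flags=re.DOTALL).strip()
    # Drop trailing semicolons, then reject anything that still chains statements.
    stripped = stripped.rstrip(";").strip()
    if not stripped or ";" in stripped:
        return False
    # Only statements that begin with SELECT or WITH are considered.
    if not re.match(r"(?i)^(select|with)\b", stripped):
        return False
    # Reject data-modifying keywords anywhere, including inside CTEs.
    return FORBIDDEN.search(stripped) is None
```

A filter like this is a second line of defense only. The restricted role or read-only database remains the control that actually prevents writes.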

Pattern 5: Custom text-to-SQL implementation with flexible model selection

This pattern represents a build-your-own solution using FMs to convert natural language to SQL, execute queries on data warehouses, and return results. Choose Amazon Bedrock when you want to quickly integrate this capability without deep ML expertise; it offers a fully managed service with ready-to-use FMs through a unified API, handling infrastructure needs with pay-as-you-go pricing. Alternatively, select Amazon SageMaker AI when you require extensive model customization to meet specialized needs; it provides complete ML lifecycle tools for data scientists and ML engineers to build, train, and deploy custom models with greater control. For more information, refer to our Amazon Bedrock or Amazon SageMaker AI decision guide. The following diagram illustrates the architecture.

Use this pattern if your use case requires specific open-weight models, or you want to fine-tune models on your domain-specific data. For example, if you need highly accurate results for your queries, you can use this pattern to fine-tune models on specific schema structures, while maintaining the flexibility to integrate with existing workflows and multi-cloud environments. This pattern offers the following benefits:

- It provides maximum customization in model selection, fine-tuning, and system design

- It supports complex logic across multiple data sources

- It offers full control over security and deployment in your virtual private cloud (VPC)

- It enables flexible interface implementation (Slack bots, custom web UIs, notebook plugins)

- You can implement it for external user-facing solutions

For more information on steps to build this pattern, see Build a robust text-to-SQL solution generating complex queries, self-correcting, and querying diverse data sources.
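To make the build-your-own flow more concrete, the following Python sketch shows one way to structure the generate, execute, and self-correct loop. It is a minimal illustration under stated assumptions, not the implementation from the referenced post: the model is abstracted as any callable that maps a prompt to SQL text (in practice it would wrap an FM invocation), SQLite stands in for the data warehouse, and all function names are hypothetical.

```python
import sqlite3
from typing import Callable, Optional

# Hypothetical model interface: takes a prompt, returns SQL text.
GenerateSql = Callable[[str], str]


def build_prompt(schema: str, question: str, error: Optional[str] = None) -> str:
    """Combine schema, question, and any previous error into a single prompt."""
    prompt = (
        f"Schema:\n{schema}\n\n"
        f"Question: {question}\n"
        "Return one SQLite SELECT statement."
    )
    if error:
        # Feed the database error back so the model can repair its query.
        prompt += f"\nThe previous attempt failed with: {error}\nFix the query."
    return prompt


def answer_question(
    conn: sqlite3.Connection,
    schema: str,
    question: str,
    generate_sql: GenerateSql,
    max_attempts: int = 3,
) -> list:
    """Generate SQL, run it, and retry with the error message if execution fails."""
    error: Optional[str] = None
    for _ in range(max_attempts):
        sql = generate_sql(build_prompt(schema, question, error))
        try:
            return conn.execute(sql).fetchall()
        except sqlite3.Error as exc:
            error = str(exc)
    raise RuntimeError(f"no valid SQL after {max_attempts} attempts: {error}")
```

In a real deployment, the connection would use a read-only role, and the generated SQL would typically pass a validation step before execution. Keeping the model behind a plain callable also makes it easier to swap between a managed FM and a fine-tuned model.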

Pattern comparison: Making the right choice

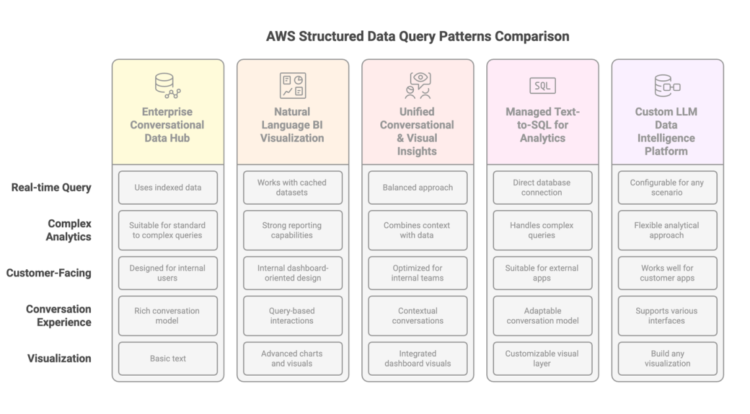

To make effective decisions, let’s compare these patterns across key criteria.

Data workload suitability

Different out-of-the-box patterns handle transactional (operational) and analytical (historical or aggregated) data with varying degrees of effectiveness. Patterns 1 and 3, which use Amazon Q Business, work with indexed data and are optimized for lookup-style queries against previously indexed content rather than real-time transactional database queries. Pattern 2, which uses Amazon Q in QuickSight, produces visual output from transactional data for ad-hoc analysis. Pattern 4, which uses Amazon Bedrock structured data retrieval, is specifically designed for analytical systems and data warehouses, excelling at complex queries on large datasets. Pattern 5 is a self-managed text-to-SQL option that can be built to support both the transactional and analytical needs of users.

Target audience

Architectures highlighted in Patterns 1, 2, and 3 (using Amazon Q Business, Amazon Q in QuickSight, or a combination) are best suited for internal enterprise use. However, you can use Amazon QuickSight Embedded to embed data visuals, dashboards, and natural language queries into both internal and customer-facing applications. Amazon Q Business serves as an enterprise AI assistant for organizational knowledge; it uses subscription-based pricing tiers and is designed for internal employees. Pattern 4 (using Amazon Bedrock) can be used to build both internal and customer-facing applications. This is because, unlike the subscription-based model of Amazon Q Business, Amazon Bedrock provides API-driven services that alleviate per-user costs and identity management overhead for external customer scenarios. This makes it well-suited for customer-facing experiences where you need to serve potentially thousands of external users. The custom LLM solutions in Pattern 5 can similarly be tailored to external application requirements.

Interface and output format

Different patterns deliver answers through different interaction models:

- Conversational experiences – Patterns 1 and 3 (using Amazon Q Business) provide chat-based interfaces. Pattern 4 (using Amazon Bedrock Knowledge Bases for structured data retrieval) naturally supports AI assistant integration, and Pattern 5 (a custom text-to-SQL solution) can be designed for a variety of interaction models.

- Visualization-focused output – Pattern 2 (using Amazon Q in QuickSight) specializes in generating on-the-fly visualizations such as charts and tables in response to user questions.

- API integration – For embedding capabilities into existing applications, Patterns 4 and 5 offer the most flexible API-based integration options.

The following figure is a comparison matrix of AWS structured data query patterns.

Conclusion

Among these patterns, your optimal choice depends on the following key factors:

- Data location and characteristics – Is your data in operational databases, already in a data warehouse, or distributed across various sources?

- User profile and interaction model – Are you supporting internal or external users? Do they prefer conversational or visualization-focused interfaces?

- Available resources and expertise – Do you have ML specialists available, or do you need a fully managed solution?

- Accuracy and governance requirements – Do you need strictly controlled semantics and curation, or is broader query flexibility acceptable with monitoring?

By understanding these patterns and their trade-offs, you can architect solutions that align with your business objectives.
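As a rough aid, the comparison in this post can be condensed into a small rule-based helper. The following Python sketch is a simplification for illustration only: the field names, the rule order, and the fallback to Pattern 4 are assumptions made for this example, and real decisions will also weigh cost, governance, and existing investments.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirements:
    """Hypothetical summary of a use case, loosely following the factors above."""

    external_users: bool = False        # customer-facing rather than internal
    needs_custom_models: bool = False   # fine-tuning or specific open-weight models
    needs_unstructured: bool = False    # documents alongside structured data
    needs_visuals: bool = False         # charts and dashboards as primary output


def recommend_pattern(req: Requirements) -> int:
    """Return the pattern number (1-5) that most directly matches the requirements."""
    if req.needs_custom_models:
        return 5  # custom text-to-SQL with flexible model selection
    if req.external_users:
        return 4  # API-driven managed text-to-SQL
    if req.needs_unstructured and req.needs_visuals:
        return 3  # Amazon Q Business combined with Amazon Q in QuickSight
    if req.needs_unstructured:
        return 1  # Amazon Q Business with native connectors
    if req.needs_visuals:
        return 2  # Amazon Q in QuickSight
    return 4  # assumed default: managed text-to-SQL for analytical queries
```

For example, `recommend_pattern(Requirements(needs_unstructured=True, needs_visuals=True))` returns `3`, matching the internal assistant scenario that combines documents with BI visuals.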

About the authors

Akshara Shah is a Senior Solutions Architect at Amazon Web Services. She helps commercial customers build cloud-based generative AI services to meet their business needs. She has been designing, developing, and implementing solutions that leverage AI and ML technologies for more than 10 years. Outside of work, she loves painting, exercising, and spending time with family.

Sanghwa Na is a Generative AI Specialist Solutions Architect at Amazon Web Services. Based in San Francisco, he works with customers to design and build generative AI solutions using large language models and foundation models on AWS. He focuses on helping organizations adopt AI technologies that drive real business value.
