Conferences play an important function in decision-making, venture coordination, and collaboration, and distant conferences are widespread throughout many organizations. Nonetheless, capturing and structuring key takeaways from these conversations is usually inefficient and inconsistent. Manually summarizing conferences or extracting motion gadgets requires important effort and is susceptible to omissions or misinterpretations.

Massive language fashions (LLMs) provide a extra sturdy resolution by remodeling unstructured assembly transcripts into structured summaries and motion gadgets. This functionality is particularly helpful for venture administration, buyer assist and gross sales calls, authorized and compliance, and enterprise data administration.

On this submit, we current a benchmark of various understanding fashions from the Amazon Nova household obtainable on Amazon Bedrock, to supply insights on how one can select the perfect mannequin for a gathering summarization process.

LLMs to generate assembly insights

Trendy LLMs are extremely efficient for summarization and motion merchandise extraction on account of their capability to know context, infer subject relationships, and generate structured outputs. In these use circumstances, immediate engineering supplies a extra environment friendly and scalable strategy in comparison with conventional mannequin fine-tuning or customization. Slightly than modifying the underlying mannequin structure or coaching on massive labeled datasets, immediate engineering makes use of fastidiously crafted enter queries to information the mannequin’s conduct, straight influencing the output format and content material. This methodology permits for fast, domain-specific customization with out the necessity for resource-intensive retraining processes. For duties resembling assembly summarization and motion merchandise extraction, immediate engineering permits exact management over the generated outputs, ensuring they meet particular enterprise necessities. It permits for the versatile adjustment of prompts to swimsuit evolving use circumstances, making it a super resolution for dynamic environments the place mannequin behaviors must be shortly reoriented with out the overhead of mannequin fine-tuning.

Amazon Nova fashions and Amazon Bedrock

Amazon Nova fashions, unveiled at AWS re:Invent in December 2024, are constructed to ship frontier intelligence at industry-leading worth efficiency. They’re among the many quickest and most cost-effective fashions of their respective intelligence tiers, and are optimized to energy enterprise generative AI functions in a dependable, safe, and cost-effective method.

The understanding mannequin household has 4 tiers of fashions: Nova Micro (text-only, ultra-efficient for edge use), Nova Lite (multimodal, balanced for versatility), Nova Professional (multimodal, stability of pace and intelligence, very best for many enterprise wants) and Nova Premier (multimodal, probably the most succesful Nova mannequin for complicated duties and instructor for mannequin distillation). Amazon Nova fashions can be utilized for a wide range of duties, from summarization to structured textual content era. With Amazon Bedrock Mannequin Distillation, clients can even deliver the intelligence of Nova Premier to a sooner and less expensive mannequin resembling Nova Professional or Nova Lite for his or her use case or area. This may be achieved via the Amazon Bedrock console and APIs such because the Converse API and Invoke API.

Answer overview

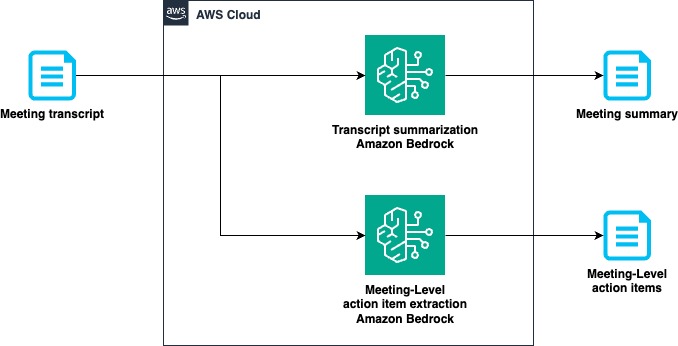

This submit demonstrates the way to use Amazon Nova understanding fashions, obtainable via Amazon Bedrock, for automated perception extraction utilizing immediate engineering. We give attention to two key outputs:

- Assembly summarization – A high-level abstractive abstract that distills key dialogue factors, choices made, and demanding updates from the assembly transcript

- Motion gadgets – A structured record of actionable duties derived from the assembly dialog that apply to your entire crew or venture

The next diagram illustrates the answer workflow.

Stipulations

To observe together with this submit, familiarity with calling LLMs utilizing Amazon Bedrock is predicted. For detailed steps on utilizing Amazon Bedrock for textual content summarization duties, check with Construct an AI textual content summarizer app with Amazon Bedrock. For added details about calling LLMs, check with the Invoke API and Utilizing the Converse API reference documentation.

Answer parts

We developed the 2 core options of the answer—assembly summarization and motion merchandise extraction—through the use of common fashions obtainable via Amazon Bedrock. Within the following sections, we have a look at the prompts that had been used for these key duties.

For the assembly summarization process, we used a persona task, prompting the LLM to generate a abstract in

For the motion merchandise extraction process, we gave particular directions on producing motion gadgets within the prompts and used chain-of-thought to enhance the standard of the generated motion gadgets. Within the assistant message, the prefix

Completely different mannequin households reply to the identical prompts otherwise, and it’s essential to observe the prompting information outlined for the actual mannequin. For extra info on finest practices for Amazon Nova prompting, check with Prompting finest practices for Amazon Nova understanding fashions.

Dataset

To judge the answer, we used the samples for the general public QMSum dataset. The QMSum dataset is a benchmark for assembly summarization, that includes English language transcripts from educational, enterprise, and governance discussions with manually annotated summaries. It evaluates LLMs on producing structured, coherent summaries from complicated and multi-speaker conversations, making it a worthwhile useful resource for abstractive summarization and discourse understanding. For testing, we used 30 randomly sampled conferences from the QMSum dataset. Every assembly contained 2–5 topic-wise transcripts and contained roughly 8,600 tokens for every transcript in common.

Analysis framework

Reaching high-quality outputs from LLMs in assembly summarization and motion merchandise extraction is usually a difficult process. Conventional analysis metrics resembling ROUGE, BLEU, and METEOR give attention to surface-level similarity between generated textual content and reference summaries, however they usually fail to seize nuances resembling factual correctness, coherence, and actionability. Human analysis is the gold commonplace however is dear, time-consuming, and never scalable. To deal with these challenges, you need to use LLM-as-a-judge, the place one other LLM is used to systematically assess the standard of generated outputs based mostly on well-defined standards. This strategy presents a scalable and cost-effective solution to automate analysis whereas sustaining excessive accuracy. On this instance, we used Anthropic’s Claude 3.5 Sonnet v1 because the choose mannequin as a result of we discovered it to be most aligned with human judgment. We used the LLM choose to attain the generated responses on three most important metrics: faithfulness, summarization, and query answering (QA).

The faithfulness rating measures the faithfulness of a generated abstract by measuring the portion of the parsed statements in a abstract which can be supported by given context (for instance, a gathering transcript) with respect to the full variety of statements.

The summarization rating is the mix of the QA rating and the conciseness rating with the identical weight (0.5). The QA rating measures the protection of a generated abstract from a gathering transcript. It first generates an inventory of query and reply pairs from a gathering transcript and measures the portion of the questions which can be requested appropriately when the abstract is used as a context as a substitute of a gathering transcript. The QA rating is complimentary to the faithfulness rating as a result of the faithfulness rating doesn’t measure the protection of a generated abstract. We solely used the QA rating to measure the standard of a generated abstract as a result of the motion gadgets aren’t purported to cowl all features of a gathering transcript. The conciseness rating measures the ratio of the size of a generated abstract divided by the size of the full assembly transcript.

We used a modified model of the faithfulness rating and the summarization rating that had a lot decrease latency than the unique implementation.

Outcomes

Our analysis of Amazon Nova fashions throughout assembly summarization and motion merchandise extraction duties revealed clear performance-latency patterns. For summarization, Nova Premier achieved the very best faithfulness rating (1.0) with a processing time of 5.34s, whereas Nova Professional delivered 0.94 faithfulness in 2.9s. The smaller Nova Lite and Nova Micro fashions offered faithfulness scores of 0.86 and 0.83 respectively, with sooner processing occasions of two.13s and 1.52s. In motion merchandise extraction, Nova Premier once more led in faithfulness (0.83) with 4.94s processing time, adopted by Nova Professional (0.8 faithfulness, 2.03s). Curiously, Nova Micro (0.7 faithfulness, 1.43s) outperformed Nova Lite (0.63 faithfulness, 1.53s) on this specific process regardless of its smaller measurement. These measurements present worthwhile insights into the performance-speed traits throughout the Amazon Nova mannequin household for text-processing functions. The next graphs present these outcomes. The next screenshot exhibits a pattern output for our summarization process, together with the LLM-generated assembly abstract and an inventory of motion gadgets.

Conclusion

On this submit, we confirmed how you need to use prompting to generate assembly insights resembling assembly summaries and motion gadgets utilizing Amazon Nova fashions obtainable via Amazon Bedrock. For giant-scale AI-driven assembly summarization, optimizing latency, price, and accuracy is crucial. The Amazon Nova household of understanding fashions (Nova Micro, Nova Lite, Nova Professional, and Nova Premier) presents a sensible various to high-end fashions, considerably enhancing inference pace whereas lowering operational prices. These components make Amazon Nova a sexy selection for enterprises dealing with massive volumes of assembly knowledge at scale.

For extra info on Amazon Bedrock and the most recent Amazon Nova fashions, check with the Amazon Bedrock Consumer Information and Amazon Nova Consumer Information, respectively. The AWS Generative AI Innovation Heart has a bunch of AWS science and technique consultants with complete experience spanning the generative AI journey, serving to clients prioritize use circumstances, construct a roadmap, and transfer options into manufacturing. Try the Generative AI Innovation Heart for our newest work and buyer success tales.

In regards to the Authors

Baishali Chaudhury is an Utilized Scientist on the Generative AI Innovation Heart at AWS, the place she focuses on advancing Generative AI options for real-world functions. She has a powerful background in laptop imaginative and prescient, machine studying, and AI for healthcare. Baishali holds a PhD in Laptop Science from College of South Florida and PostDoc from Moffitt Most cancers Centre.

Baishali Chaudhury is an Utilized Scientist on the Generative AI Innovation Heart at AWS, the place she focuses on advancing Generative AI options for real-world functions. She has a powerful background in laptop imaginative and prescient, machine studying, and AI for healthcare. Baishali holds a PhD in Laptop Science from College of South Florida and PostDoc from Moffitt Most cancers Centre.

Sungmin Hong is a Senior Utilized Scientist at Amazon Generative AI Innovation Heart the place he helps expedite the number of use circumstances of AWS clients. Earlier than becoming a member of Amazon, Sungmin was a postdoctoral analysis fellow at Harvard Medical College. He holds Ph.D. in Laptop Science from New York College. Outdoors of labor, he prides himself on conserving his indoor vegetation alive for 3+ years.

Sungmin Hong is a Senior Utilized Scientist at Amazon Generative AI Innovation Heart the place he helps expedite the number of use circumstances of AWS clients. Earlier than becoming a member of Amazon, Sungmin was a postdoctoral analysis fellow at Harvard Medical College. He holds Ph.D. in Laptop Science from New York College. Outdoors of labor, he prides himself on conserving his indoor vegetation alive for 3+ years.

Mengdie (Flora) Wang is a Knowledge Scientist at AWS Generative AI Innovation Heart, the place she works with clients to architect and implement scalable Generative AI options that handle their distinctive enterprise challenges. She makes a speciality of mannequin customization methods and agent-based AI programs, serving to organizations harness the complete potential of generative AI expertise. Previous to AWS, Flora earned her Grasp’s diploma in Laptop Science from the College of Minnesota, the place she developed her experience in machine studying and synthetic intelligence.

Mengdie (Flora) Wang is a Knowledge Scientist at AWS Generative AI Innovation Heart, the place she works with clients to architect and implement scalable Generative AI options that handle their distinctive enterprise challenges. She makes a speciality of mannequin customization methods and agent-based AI programs, serving to organizations harness the complete potential of generative AI expertise. Previous to AWS, Flora earned her Grasp’s diploma in Laptop Science from the College of Minnesota, the place she developed her experience in machine studying and synthetic intelligence.

Anila Joshi has greater than a decade of expertise constructing AI options. As a AWSI Geo Chief at AWS Generative AI Innovation Heart, Anila pioneers modern functions of AI that push the boundaries of risk and speed up the adoption of AWS companies with clients by serving to clients ideate, establish, and implement safe generative AI options.

Anila Joshi has greater than a decade of expertise constructing AI options. As a AWSI Geo Chief at AWS Generative AI Innovation Heart, Anila pioneers modern functions of AI that push the boundaries of risk and speed up the adoption of AWS companies with clients by serving to clients ideate, establish, and implement safe generative AI options.