According to the World Health Organization, more than 2.2 billion people globally have a vision impairment. To comply with disability legislation, such as the Americans with Disabilities Act (ADA) in the United States, media in visual formats like television shows or movies are required to provide accessibility to visually impaired people. This often comes in the form of audio description tracks that narrate the visual elements of the film or show. According to the International Documentary Association, creating audio descriptions can cost $25 per minute (or more) when using third parties. Building audio descriptions internally can also take significant effort for businesses in the media industry. According to the American Council of the Blind (ACB), it requires content creators, audio description writers, description narrators, audio engineers, delivery vendors, and more. This leads to a natural question: can you automate this process with the help of generative AI offerings in Amazon Web Services (AWS)?

Newly announced in December at re:Invent 2024, the Amazon Nova Foundation Models family is available through Amazon Bedrock and includes three multimodal foundation models (FMs):

- Amazon Nova Lite (GA) – A low-cost multimodal model that’s lightning-fast for processing image, video, and text inputs

- Amazon Nova Pro (GA) – A highly capable multimodal model with a balanced combination of accuracy, speed, and cost for a wide range of tasks

- Amazon Nova Premier (GA) – Our most capable model for complex tasks and a teacher for model distillation

In this post, we demonstrate how you can use services like Amazon Nova, Amazon Rekognition, and Amazon Polly to automate the creation of accessible audio descriptions for video content. This approach can significantly reduce the time and cost required to make videos accessible for visually impaired audiences. However, this post doesn’t provide a complete, deployment-ready solution. We share pseudocode snippets and guidance in sequential order, together with detailed explanations and links to resources. For a complete script, you can use additional resources, such as Amazon Q Developer, to build a fully functional system. The automated workflow described in the post involves analyzing video content, generating text descriptions, and narrating them using AI voice generation. In short, although the approach is powerful, it requires careful integration and testing to deploy effectively. By the end of this post, you’ll understand the key steps, but some additional work is required to create a production-ready solution for your specific use case.

Solution overview

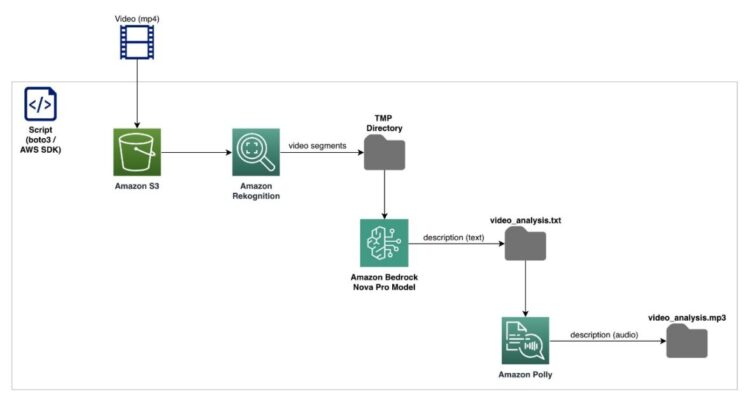

The architecture diagram for this solution shows the end-to-end workflow. We describe each component in depth in later sections of this post, but note that you can define the logic within a single script. You can then run your script on an Amazon Elastic Compute Cloud (Amazon EC2) instance or on your local computer. For this post, we assume that you run the script in an Amazon SageMaker notebook.

Services used

The services shown in the architecture diagram include:

- Amazon S3 – Amazon Simple Storage Service (Amazon S3) is an object storage service that provides scalable, durable, and highly available storage. In this example, we use Amazon S3 to store the video files (input) as well as the scene descriptions (text files) and audio descriptions (MP3 files) that the solution generates as output. The script starts by fetching the source video from an S3 bucket.

- Amazon Rekognition – Amazon Rekognition is a computer vision service that can detect and extract video segments or scenes by identifying technical cues such as shot boundaries, black frames, and other visual elements. To yield higher accuracy for the generated video descriptions, you use Amazon Rekognition to segment the source video into smaller chunks before passing them to Amazon Nova. These video segments can be stored in a temporary directory on your compute machine.

- Amazon Bedrock – Amazon Bedrock is a managed service that provides access to large, pre-trained AI models such as the Amazon Nova Pro model, which this solution uses to analyze the content of each video segment and generate detailed scene descriptions. You can store these text descriptions in a text file (for example, `video_analysis.txt`).

- Amazon Polly – Amazon Polly is a text-to-speech service that converts the text descriptions generated by the Amazon Nova Pro model into high-quality audio, delivered as an MP3 file.

Prerequisites

To follow along with the solution outlined in this post, you should have the following in place:

You can use the AWS SDK to create, configure, and manage AWS services. If you use Boto3, the AWS SDK for Python, import it at the top of your script: `import boto3`

Additionally, you need a mechanism to split videos. If you’re using Python, we recommend the moviepy library: `import moviepy  # pip install moviepy`

Solution walkthrough

The solution consists of the following basic steps, which you can use as a starting structure and customize or expand to fit your use case.

- Define the requirements for the AWS environment, including the use of the Amazon Nova Pro model for its visual understanding support and the AWS Region you’re working in. For optimal throughput, we recommend using inference profiles when configuring Amazon Bedrock to invoke the Amazon Nova Pro model. Initialize a client for Amazon Rekognition, which you use for its segmentation support.

- Define a function for detecting segments in the video. Amazon Rekognition supports segmentation, which means you can detect and extract different segments or scenes within a video. By using the Amazon Rekognition Segment API, you can do the following:

- Detect technical cues such as black frames, color bars, opening and end credits, and studio logos in a video.

- Detect shot boundaries to identify the start, end, and duration of individual shots within the video.
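The environment setup from the first step might look like the following minimal sketch, assuming Python with boto3. The `AudioDescriptionConfig` class, the bucket name, and the `us-east-1` Region are hypothetical placeholders. `us.amazon.nova-pro-v1:0` is, as far as we know, the US cross-Region inference profile ID for Amazon Nova Pro, so confirm the profile ID available in your account and Region on the Amazon Bedrock console.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AudioDescriptionConfig:
    """Settings shared by every step of the workflow (example values only)."""
    region: str = "us-east-1"
    # Cross-Region inference profile for Amazon Nova Pro (verify in your account).
    nova_model_id: str = "us.amazon.nova-pro-v1:0"
    bucket: str = "my-audio-description-bucket"  # hypothetical bucket name


def create_clients(config: AudioDescriptionConfig) -> dict:
    """Create the boto3 clients used by the workflow, keyed by service.

    boto3 is imported here rather than at module level so the other helpers
    can be imported and unit-tested on a machine without the AWS SDK.
    """
    import boto3

    session = boto3.Session(region_name=config.region)
    return {
        "s3": session.client("s3"),
        "rekognition": session.client("rekognition"),
        "bedrock_runtime": session.client("bedrock-runtime"),
        "polly": session.client("polly"),
    }
```

For example, `clients = create_clients(AudioDescriptionConfig(bucket="your-bucket"))` gives you one client per service, all in the same Region.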

The solution uses Amazon Rekognition to partition the video into multiple segments and performs Amazon Nova Pro-based inference on each segment. Finally, you can piece together the inference output of each segment to return a comprehensive audio description for the entire video.

In the preceding image, there are two scenes: a screenshot of one scene on the left, followed by the scene that immediately follows it on the right. With the Amazon Rekognition segmentation API, you can identify that the scene has changed (the content displayed on screen is different) and therefore that you need to generate a new scene description.
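Because segment detection runs asynchronously, the detection function has to wait for the job and then read back its results. The following sketch assumes a boto3 Rekognition client and a job ID returned by `StartSegmentDetection`; the function name `get_shot_segments` and the 5-second polling interval are our own choices, not part of the API. It polls `GetSegmentDetection` until the job finishes, follows `NextToken` pagination, and keeps only `SHOT` segments as (start, end) pairs in seconds.

```python
import time


def get_shot_segments(rekognition, job_id, poll_seconds=5.0, sleep=time.sleep):
    """Wait for a segment detection job and return its shots.

    Returns a list of (start_seconds, end_seconds) tuples, one per SHOT
    segment, in the order Amazon Rekognition reports them.
    """
    # Poll until the asynchronous job leaves the IN_PROGRESS state.
    while True:
        response = rekognition.get_segment_detection(JobId=job_id)
        status = response["JobStatus"]
        if status != "IN_PROGRESS":
            break
        sleep(poll_seconds)
    if status != "SUCCEEDED":
        message = response.get("StatusMessage", "no message")
        raise RuntimeError(f"Segment detection job {job_id} ended {status}: {message}")

    shots = []
    # Results are paginated; follow NextToken until every page is read.
    while True:
        for segment in response.get("Segments", []):
            if segment["Type"] == "SHOT":
                shots.append((segment["StartTimestampMillis"] / 1000,
                              segment["EndTimestampMillis"] / 1000))
        token = response.get("NextToken")
        if not token:
            break
        response = rekognition.get_segment_detection(JobId=job_id, NextToken=token)
    return shots
```

In production, you might prefer the Amazon SNS completion notification that `StartSegmentDetection` can publish over polling; polling simply keeps the sketch self-contained.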

- Create the segmentation job:

- Upload the video file for which you want to create an audio description to Amazon S3.

- Start the job using that video.

Setting `SegmentTypes=['SHOT']` detects shots, identifying the start, end, and duration of each scene. Additionally, `MinSegmentConfidence` (set in the `ShotFilter` of the job’s `Filters`) sets the minimum confidence Amazon Rekognition must have to return a detected segment, with 0 being the lowest confidence and 100 the highest.
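A minimal sketch of this step, assuming boto3 clients created with `boto3.client("s3")` and `boto3.client("rekognition")`. The function name `start_shot_detection` and the default confidence of 80 are illustrative assumptions; the request shape (`Video`, `SegmentTypes`, and `Filters` with a `ShotFilter`) follows the `StartSegmentDetection` API.

```python
def start_shot_detection(s3, rekognition, local_path, bucket, key,
                         min_confidence=80.0):
    """Upload a video to S3 and start an asynchronous shot detection job.

    Returns the Rekognition JobId to pass to GetSegmentDetection.
    """
    # Rekognition Video reads the source video directly from S3.
    s3.upload_file(local_path, bucket, key)
    response = rekognition.start_segment_detection(
        Video={"S3Object": {"Bucket": bucket, "Name": key}},
        SegmentTypes=["SHOT"],  # shot boundaries only, no technical cues
        Filters={"ShotFilter": {"MinSegmentConfidence": min_confidence}},
    )
    return response["JobId"]
```

Raising `min_confidence` returns fewer, more certain shot boundaries; lowering it splits the video more aggressively and produces more segments for Amazon Nova Pro to describe.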

- Use the `analyze_chunk` function. This function defines the main logic of the audio description solution. Some items to note about `analyze_chunk`:

- For this example, we sent a video scene to Amazon Nova Pro for an analysis of its contents using the prompt “Describe what is happening in this video in detail.” This prompt is relatively straightforward, and we encourage you to experiment with it or customize it for your use case. Amazon Nova Pro then returned the text description for our video scene.

- For longer videos with many scenes, you might encounter throttling. You can resolve this by implementing a retry mechanism. For details on throttling and quotas for Amazon Bedrock, see Quotas for Amazon Bedrock.

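The following sketch shows one way `analyze_chunk` could be written with the Amazon Bedrock Converse API, which accepts a `video` content block. It assumes a boto3 `bedrock-runtime` client and MP4 segments small enough to send inline as bytes (larger files can be referenced from Amazon S3 instead). The `maxTokens`, `temperature`, and backoff values are illustrative. Throttling is recognized by the `ThrottlingException` error code that botocore attaches to the `ClientError` it raises.

```python
import random
import time

DEFAULT_PROMPT = "Describe what is happening in this video in detail."


def analyze_chunk(bedrock_runtime, model_id, video_bytes, prompt=DEFAULT_PROMPT,
                  max_attempts=5, base_delay=2.0, sleep=time.sleep):
    """Send one video segment to Amazon Nova Pro and return its description.

    Retries with exponential backoff and jitter when Bedrock throttles.
    """
    messages = [{
        "role": "user",
        "content": [
            {"video": {"format": "mp4", "source": {"bytes": video_bytes}}},
            {"text": prompt},
        ],
    }]
    for attempt in range(1, max_attempts + 1):
        try:
            response = bedrock_runtime.converse(
                modelId=model_id,
                messages=messages,
                inferenceConfig={"maxTokens": 1024, "temperature": 0.3},
            )
            return response["output"]["message"]["content"][0]["text"]
        except Exception as err:  # botocore's ClientError carries .response
            code = getattr(err, "response", {}).get("Error", {}).get("Code")
            if code != "ThrottlingException" or attempt == max_attempts:
                raise
            # Exponential backoff: 2 s, 4 s, 8 s, ... plus up to 1 s of jitter.
            sleep(base_delay * 2 ** (attempt - 1) + random.uniform(0, 1))
```

As an alternative or complement, botocore’s built-in retry modes (for example, `botocore.config.Config(retries={"mode": "adaptive"})` passed when creating the client) can absorb some throttling without custom code.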

In effect, the raw scenes are converted into rich, descriptive text. Using this text, you can generate a complete scene-by-scene walkthrough of the video and send it to Amazon Polly for audio.

- Orchestrate the process:

- Initiate the detection of the various segments by using Amazon Rekognition.

- Process each segment through a flow of:

- Extraction.

- Analysis using Amazon Nova Pro.

- Compiling the analysis into a `video_analysis.txt` file.

- The `analyze_video` function brings together all the components and produces a text file that contains the complete, scene-by-scene analysis of the video contents, with timestamps.
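The orchestration can be sketched as follows. To keep the example testable, `analyze_video` here takes the shot list plus two callables: one that cuts a segment out of the source video and one that describes it (for example, a thin wrapper around an `analyze_chunk` function). The `moviepy_extractor` helper, the temporary-file naming, and the `Scene N [start - end]` line format are our own assumptions. The extractor tries the moviepy 2.x API (`subclipped`) and falls back to 1.x (`subclip`).

```python
import os


def format_timestamp(seconds):
    """Format a time in seconds as HH:MM:SS.mmm."""
    millis = int(round(seconds * 1000))
    hours, rem = divmod(millis, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, millis = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{millis:03d}"


def moviepy_extractor(video_path, tmp_dir):
    """Return an extract_segment(start, end) -> bytes callable backed by moviepy."""
    try:  # moviepy 2.x exposes VideoFileClip at the package level
        from moviepy import VideoFileClip
    except ImportError:  # moviepy 1.x
        from moviepy.editor import VideoFileClip

    def extract_segment(start, end):
        out_path = os.path.join(tmp_dir, f"segment_{start:.3f}_{end:.3f}.mp4")
        with VideoFileClip(video_path) as clip:
            if hasattr(clip, "subclipped"):  # moviepy 2.x
                segment = clip.subclipped(start, end)
            else:  # moviepy 1.x
                segment = clip.subclip(start, end)
            # Audio is dropped; only the visual track is sent to the model.
            segment.write_videofile(out_path, audio=False, logger=None)
        with open(out_path, "rb") as f:
            return f.read()

    return extract_segment


def analyze_video(shots, extract_segment, describe_segment,
                  output_path="video_analysis.txt"):
    """Describe every shot and write a timestamped, scene-by-scene text file.

    shots: (start_seconds, end_seconds) pairs from Amazon Rekognition.
    extract_segment(start, end) -> bytes of that segment as a video file.
    describe_segment(video_bytes) -> text description from Amazon Nova Pro.
    """
    lines = []
    for index, (start, end) in enumerate(shots, start=1):
        description = describe_segment(extract_segment(start, end))
        lines.append(f"Scene {index} [{format_timestamp(start)} - "
                     f"{format_timestamp(end)}]: {description.strip()}")
    text = "\n".join(lines) + "\n"
    with open(output_path, "w", encoding="utf-8") as f:
        f.write(text)
    return text
```

Reopening the source file for every segment keeps the extractor simple but is slow for long videos; opening the clip once and reusing it is a reasonable optimization.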

If you refer back to the earlier screenshot, the output, without any additional refinement, looks like the following image.

The following screenshot shows a more extensive look at the `video_analysis.txt` file for the `espresso.mp4` video:

- Send the contents of the text file to Amazon Polly. Amazon Polly gives the text a voice, completing the workflow of the audio description solution.

For a list of the voices that you can use in Amazon Polly, see Available voices in the Amazon Polly Developer Guide.
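Amazon Polly’s `SynthesizeSpeech` operation limits how much text a single request can carry (3,000 billed characters at the time of writing; check the current Polly quotas), so a long `video_analysis.txt` usually has to be sent in pieces. The following sketch assumes a boto3 Polly client. It splits the text at sentence boundaries, synthesizes each piece with the `Joanna` neural voice (substitute any voice that supports your chosen engine), and appends the MP3 bytes to one file. The helper names and the 2,500-character chunk size are our own choices.

```python
import re

MAX_CHARS = 2500  # our choice; keeps each request under Polly's text limit


def split_for_polly(text, max_chars=MAX_CHARS):
    """Split text into chunks of at most max_chars, preferring sentence breaks."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks, current = [], ""
    for sentence in sentences:
        # Hard-split any single sentence that is longer than the limit.
        while len(sentence) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(sentence[:max_chars])
            sentence = sentence[max_chars:]
        candidate = f"{current} {sentence}".strip()
        if len(candidate) <= max_chars:
            current = candidate
        else:
            chunks.append(current)
            current = sentence
    if current:
        chunks.append(current)
    return chunks


def synthesize_to_mp3(polly, text, output_path, voice_id="Joanna", engine="neural"):
    """Narrate text with Amazon Polly and write the audio to one MP3 file."""
    with open(output_path, "wb") as out:
        for chunk in split_for_polly(text):
            response = polly.synthesize_speech(
                Text=chunk, OutputFormat="mp3", VoiceId=voice_id, Engine=engine)
            # AudioStream is a streaming body; append each response's MP3 bytes.
            out.write(response["AudioStream"].read())
    return output_path
```

Appending MP3 responses back to back generally plays correctly. For very long narrations, you might instead use the asynchronous `StartSpeechSynthesisTask` operation, which writes the audio directly to an S3 bucket.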

Your final output with Amazon Polly should sound something like this:

Clean up

It’s a best practice to delete the resources you provisioned for this solution. If you used an EC2 instance or a SageMaker notebook instance, stop or terminate it. Remember to delete unused files from your S3 bucket (for example, `video_analysis.txt` and `video_analysis.mp3`).
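If you want to script the S3 part of the cleanup, the following sketch assumes a boto3 S3 client and uses `DeleteObjects`, which accepts up to 1,000 keys per request. The function name `clean_up_outputs` is hypothetical, and the keys you pass depend on where your script wrote its input and output files.

```python
def clean_up_outputs(s3, bucket, keys):
    """Delete the listed objects from the bucket and return the keys removed."""
    removed = []
    # DeleteObjects accepts at most 1,000 keys per request, so batch them.
    for start in range(0, len(keys), 1000):
        batch = keys[start:start + 1000]
        response = s3.delete_objects(
            Bucket=bucket,
            Delete={"Objects": [{"Key": key} for key in batch], "Quiet": False},
        )
        # With Quiet=False, S3 lists every successfully deleted key.
        removed.extend(item["Key"] for item in response.get("Deleted", []))
    return removed
```

Keys that S3 could not delete appear under `Errors` in each response rather than `Deleted`, so comparing the returned list with the input is a simple way to spot leftovers.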

Conclusion

To recap the solution at a high level, in this post you used:

- Amazon S3 to store the original video, intermediate files, and the final audio description artifacts

- Amazon Rekognition to partition the video file into time-stamped scenes

- Computer vision capabilities from Amazon Nova Pro (available through Amazon Bedrock) to analyze the contents of each scene

We showed you how to use Amazon Polly to create an MP3 audio file from the final scene description text file; this audio file is what audience members consume. The solution outlined in this post demonstrates how to automate the process of creating audio descriptions for video content to improve accessibility. By using Amazon Rekognition for video segmentation, the Amazon Nova Pro model for scene analysis, and Amazon Polly for text-to-speech, you can generate a comprehensive audio description track that narrates the key visual elements of a video. This end-to-end automation can significantly reduce the time and cost required to make video content accessible for visually impaired audiences, helping businesses and organizations meet their accessibility goals. With the power of AWS AI services, this solution provides a scalable and efficient way to improve accessibility and inclusion for video-based media.

This solution isn’t limited to TV shows and movies. Any visual media that requires accessibility can be a candidate! For more information about the new Amazon Nova model family and the amazing things these models can do, see Introducing Amazon Nova foundation models: Frontier intelligence and industry leading price performance.

In addition to the steps described in this post, you might need to take the following actions:

- Remove the introductory text from each video segment analysis returned by Amazon Nova. When Amazon Nova returns a response, it might begin with “In this video…” or something similar. You probably want only the video description itself, without this introductory text. If your scene descriptions contain introductory text, Amazon Polly speaks it aloud, which degrades the quality of your audio descriptions. You can account for this in a few ways:

- Before sending the scene descriptions to Amazon Polly, modify them by programmatically removing that type of text.

- Alternatively, use prompt engineering to request that Amazon Bedrock return only the scene descriptions, in a structured format or without any additional commentary.

- As a third option, define and use a tool when performing inference on Amazon Bedrock. This is a more comprehensive way of defining the format of the output that you want Amazon Bedrock to return. Using tools to shape model output is known as function calling. For more information, see Use a tool to complete an Amazon Bedrock model response.

- Be mindful of the architectural aspects of the solution. In a production environment, consider potential scaling, security, and storage requirements, because the architecture might come to resemble something more complex than the basic solution architecture diagram that this post began with.
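The first and third options for removing introductory text can be sketched as follows. `strip_intro` removes a leading phrase with a regular expression; the phrases in `INTRO_PATTERN` are a hypothetical starting point that you would extend after looking at real Amazon Nova output. `SCENE_TOOL` is an example `toolConfig` for the Converse API that asks the model to return the narration as a tool argument, and `description_from_tool_use` reads that argument back from the response. The tool name and schema are our own illustrations.

```python
import re

# Hypothetical lead-in phrases; extend after inspecting real model output.
INTRO_PATTERN = re.compile(
    r"^\s*(?:in this (?:video|clip)(?: segment)?"
    r"|(?:this|the) video (?:shows|depicts|features))\s*[,:]?\s*",
    re.IGNORECASE,
)


def strip_intro(description):
    """Remove a leading phrase such as 'In this video,' and re-capitalize."""
    cleaned = INTRO_PATTERN.sub("", description, count=1).strip()
    return cleaned[:1].upper() + cleaned[1:]


# Example toolConfig for bedrock_runtime.converse(..., toolConfig=SCENE_TOOL).
SCENE_TOOL = {
    "tools": [{
        "toolSpec": {
            "name": "record_scene_description",  # hypothetical tool name
            "description": "Record the narration for one video scene.",
            "inputSchema": {"json": {
                "type": "object",
                "properties": {"description": {"type": "string"}},
                "required": ["description"],
            }},
        }
    }]
}


def description_from_tool_use(response):
    """Return the 'description' argument of the first toolUse block, or None."""
    for block in response["output"]["message"]["content"]:
        if "toolUse" in block:
            return block["toolUse"]["input"]["description"]
    return None
```

A model can still answer in plain text even when a tool is offered, so keeping `strip_intro` as a fallback for responses without a `toolUse` block is a reasonable design choice.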

About the Authors

Dylan Martin is an AWS Solutions Architect, working primarily in the generative AI space, helping AWS Technical Field teams build AI/ML workloads on AWS. He brings his experience as both a security solutions architect and a software engineer. Outside of work, he enjoys motorcycling, the French Riviera, and studying languages.


Ankit Patel is an AWS Solutions Developer, part of the Prototyping And Customer Engineering (PACE) team. Ankit helps customers bring their innovative ideas to life through rapid prototyping, using the AWS platform to build, orchestrate, and manage custom applications.
