AI agents are transforming the landscape of customer support by bridging the gap between large language models (LLMs) and real-world applications. These intelligent, autonomous systems are poised to revolutionize customer service across industries, ushering in a new era of human-AI collaboration and problem-solving. By harnessing the power of LLMs and integrating them with specialized tools and APIs, agents can tackle complex, multistep customer support tasks that were previously beyond the reach of traditional AI systems. As we look to the future, AI agents will play a crucial role in the following areas:

- Enhancing decision-making – Providing deeper, context-aware insights to improve customer support outcomes

- Automating workflows – Streamlining customer service processes, from initial contact to resolution, across various channels

- Human-AI interactions – Enabling more natural and intuitive interactions between customers and AI systems

- Innovation and knowledge integration – Generating new solutions by combining diverse data sources and specialized knowledge to address customer queries more effectively

- Ethical AI practices – Helping provide more transparent and explainable AI systems to address customer concerns and build trust

Building and deploying AI agent systems for customer support is a step toward unlocking the full potential of generative AI in this domain. As these systems evolve, they will transform customer service, expand what is possible, and open new doors for AI in enhancing customer experiences.

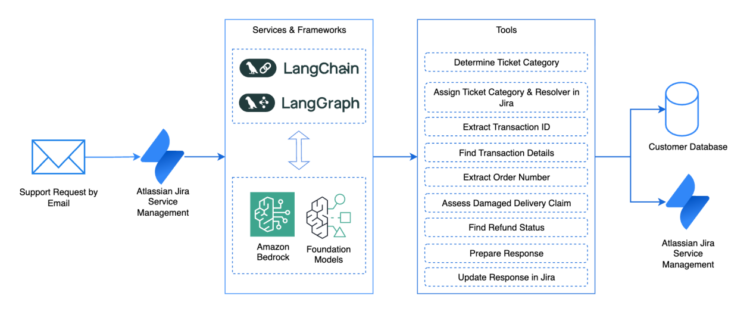

In this post, we demonstrate how to use Amazon Bedrock and LangGraph to build a personalized customer support experience for an ecommerce retailer. By integrating the Mistral Large 2 and Pixtral Large models, we guide you through automating key customer support workflows such as ticket categorization, order details extraction, damage assessment, and contextual response generation. These principles apply across industries, but we use the ecommerce domain as our primary example to showcase the end-to-end implementation and best practices. This post provides a comprehensive technical walkthrough to help you enhance your customer service capabilities and explore the latest advancements in LLMs and multimodal AI.

LangGraph is a powerful framework built on top of LangChain that enables the creation of cyclical, stateful graphs for complex AI agent workflows. It uses a directed graph structure in which nodes represent individual processing steps (like calling an LLM or using a tool), edges define transitions between steps, and state is maintained and passed between nodes during execution. This architecture is particularly valuable for customer support automation, which typically involves multistep workflows with branching logic. LangGraph’s advantages include built-in visualization, logging (traces), human-in-the-loop capabilities, and the ability to organize complex workflows in a more maintainable way than traditional Python code. This post provides details on how to do the following:

- Use Amazon Bedrock and LangGraph to build intelligent, context-aware customer support workflows

- Integrate data from a helpdesk tool, like Jira, into the LangChain workflow

- Use LLMs and vision language models (VLMs) in the workflow to perform context-specific tasks

- Extract information from images to aid in decision-making

- Compare images to assess product damage claims

- Generate responses for the customer support tickets

Solution overview

In this solution, customers initiate support requests through email, which are automatically converted into new support tickets in Atlassian Jira Service Management. The customer support automation solution then takes over, identifying the intent behind each query, categorizing the tickets, and assigning them to a bot user for further processing. The solution uses LangGraph to orchestrate a workflow of AI agents that extract key identifiers, such as transaction IDs and order numbers, from the support ticket. It analyzes the query and uses these identifiers to call relevant tools, retrieving additional information from the database to generate a comprehensive, context-aware response. After the response is prepared, it’s written back to Jira so that human support agents can review it before sending it to the customer. This process is illustrated in the following figure. The solution can extract information not only from the ticket title and body but also from attached images, such as screenshots, and from external databases.
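In the solution, the LLM performs identifier extraction. One common pattern, shown here as a minimal sketch under assumptions, is to ask the model to reply with JSON and then parse that reply defensively, because models sometimes wrap JSON in extra prose. The prompt wording, the `transaction_id`/`order_no` keys, and the `parse_extracted_ids` helper are hypothetical and are not taken from the solution’s repository.

```python
import json
import re
from typing import Optional

# Hypothetical prompt: ask the model to return the identifiers as JSON only.
# Doubled braces survive str.format() as literal braces.
EXTRACTION_PROMPT = (
    "Extract the transaction ID and order number from the support ticket below. "
    'Reply with JSON only, for example {{"transaction_id": null, "order_no": "..."}}.\n\n'
    "Ticket:\n{ticket_text}"
)

EXPECTED_KEYS = ("transaction_id", "order_no")


def parse_extracted_ids(llm_reply: str) -> dict[str, Optional[str]]:
    """Pull the first flat JSON object out of a model reply and keep only the expected keys."""
    empty = {key: None for key in EXPECTED_KEYS}
    match = re.search(r"\{.*?\}", llm_reply, flags=re.DOTALL)
    if match is None:
        return empty
    try:
        data = json.loads(match.group(0))
    except json.JSONDecodeError:
        return empty
    if not isinstance(data, dict):
        return empty
    result: dict[str, Optional[str]] = {}
    for key in EXPECTED_KEYS:
        value = data.get(key)
        # Treat missing values and blank strings alike as "not found".
        text = str(value).strip() if value is not None else ""
        result[key] = text or None
    return result
```

The non-greedy regex assumes a flat JSON object, which is sufficient for a handful of scalar identifiers. Nested output would need a real JSON scanner.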

The solution uses two foundation models (FMs) from Amazon Bedrock, each selected for its specific capabilities and the complexity of the tasks involved. For instance, the Pixtral Large model handles vision-related tasks such as image comparison and ID extraction, whereas the Mistral Large 2 model handles tasks such as ticket categorization, response generation, and tool calling. The solution also includes fraud detection and prevention capabilities. It can identify fraudulent product returns by comparing the stock product image with the image of the returned product, verifying whether they match and assessing whether the returned product is genuinely damaged. Combining these models with automation tools improves the efficiency and reliability of the customer support process, enabling timely resolutions and protection against fraudulent activity.

LangGraph provides a framework for orchestrating the flow of information between agents, with built-in state management and checkpointing to maintain process continuity. With this functionality, the initial ticket summary and description are placed in the State object, and subsequent workflow steps append more information to it. Because this evolving context is maintained, LangGraph enables LLMs to generate context-aware responses. See the following code:
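Here is a minimal sketch of such a State object, assuming hypothetical field names (the repository’s definition may differ). It uses the `Annotated[type, reducer]` convention, which LangGraph reads to decide how a node’s update is merged into a key. The `merge_state` helper only imitates that behavior with the standard library so that the sketch runs without LangGraph installed.

```python
import operator
from typing import Annotated, Optional, TypedDict, get_type_hints


class State(TypedDict, total=False):
    """Illustrative workflow state; field names are assumptions, not the repository's."""
    ticket_key: str
    summary: str
    description: str
    attachments: list[str]
    category: Optional[str]
    # Annotated with a reducer: updates to this key are appended, not overwritten.
    context: Annotated[list[str], operator.add]
    response: Optional[str]


def merge_state(state: State, update: dict) -> State:
    """Apply a node's partial update, honoring Annotated reducers (mimics LangGraph's merge)."""
    hints = get_type_hints(State, include_extras=True)
    merged = dict(state)
    for key, value in update.items():
        metadata = getattr(hints.get(key), "__metadata__", ())
        if metadata and key in merged:
            reducer = metadata[0]  # e.g. operator.add concatenates lists
            merged[key] = reducer(merged[key], value)
        else:
            merged[key] = value  # plain keys: last write wins
    return merged  # type: ignore[return-value]
```

Each node returns only the keys that it changes, so context that earlier steps gathered (for example, the ticket summary) remains available when the response is generated.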

The framework integrates readily with Amazon Bedrock and its LLMs. Different tasks can be routed to different models, so that simpler tasks run on more cost-effective models, which also lowers the risk of exceeding any single model’s quotas. In addition, LangGraph offers conditional routing to adjust the workflow dynamically based on intermediate results, and its modular design makes it straightforward to add or remove agents to extend the system’s capabilities.
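Task-specific model selection can be as simple as a lookup table. This minimal sketch uses the two model IDs from this post’s prerequisites. The task names, token limits, and temperatures are hypothetical, and a smaller, cheaper model could be added for simple tasks such as categorization.

```python
from dataclasses import dataclass

# Model IDs as listed in the post's prerequisites.
MISTRAL_LARGE_2 = "mistral.mistral-large-2407-v1:0"
PIXTRAL_LARGE = "us.mistral.pixtral-large-2502-v1:0"


@dataclass(frozen=True)
class ModelChoice:
    model_id: str
    max_tokens: int
    temperature: float


# Hypothetical routing table: text tasks go to Mistral Large 2, image tasks to
# Pixtral Large. Token limits and temperatures are illustrative assumptions.
TASK_MODELS = {
    "categorize": ModelChoice(MISTRAL_LARGE_2, 50, 0.0),
    "tool_calling": ModelChoice(MISTRAL_LARGE_2, 512, 0.0),
    "respond": ModelChoice(MISTRAL_LARGE_2, 1024, 0.3),
    "extract_ids_from_image": ModelChoice(PIXTRAL_LARGE, 256, 0.0),
    "compare_images": ModelChoice(PIXTRAL_LARGE, 512, 0.0),
}


def model_for_task(task: str) -> ModelChoice:
    """Return the configured model for a task; fail loudly on unknown tasks."""
    try:
        return TASK_MODELS[task]
    except KeyError:
        raise ValueError(f"No model configured for task {task!r}") from None
```

Raising on unknown tasks, instead of silently falling back to a default model, makes misconfigured nodes visible during testing.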

Responsible AI

Customer support automation applications must validate inputs and make sure that LLM outputs are safe and responsible. Amazon Bedrock Guardrails can significantly strengthen these applications by providing configurable safeguards that monitor and filter both user inputs and AI-generated responses, keeping interactions safe, relevant, and aligned with organizational policies. Content filters detect and block harmful categories such as hate speech, insults, sexual content, and violence, and denied topics help prevent discussions of sensitive or restricted subjects (for example, legal or medical advice). With these features, customer support applications can avoid generating or amplifying inappropriate or harmful information. Guardrails can also help redact personally identifiable information (PII) from conversation transcripts, protecting user privacy and fostering trust. These measures reduce the risk of reputational harm and regulatory violations, and they create a more positive and secure experience for customers, so support teams can focus on resolving issues efficiently while maintaining high standards of safety and responsibility.
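A guardrail can also be evaluated independently of a model call through the Amazon Bedrock Runtime `ApplyGuardrail` API, for example to mask PII in a ticket before it is stored. This sketch only builds the request and interprets a response dictionary, so it runs without AWS credentials. The guardrail ID and version are placeholders, and the helper names are hypothetical.

```python
from typing import Any


def build_apply_guardrail_request(
    guardrail_id: str, version: str, text: str, source: str = "INPUT"
) -> dict[str, Any]:
    """Keyword arguments for bedrock-runtime apply_guardrail (source is INPUT or OUTPUT)."""
    if source not in ("INPUT", "OUTPUT"):
        raise ValueError("source must be 'INPUT' or 'OUTPUT'")
    return {
        "guardrailIdentifier": guardrail_id,
        "guardrailVersion": version,
        "source": source,
        "content": [{"text": {"text": text}}],
    }


def guarded_text(response: dict[str, Any], original: str) -> tuple[bool, str]:
    """Return (intervened, text_to_use).

    When the guardrail intervened, its outputs hold either the masked text
    (for example, PII replaced by placeholders) or the configured blocked message.
    """
    if response.get("action") == "GUARDRAIL_INTERVENED":
        outputs = response.get("outputs") or []
        return True, "".join(item.get("text", "") for item in outputs)
    return False, original
```

With credentials configured, the call looks like this: `boto3.client("bedrock-runtime", region_name="us-west-2").apply_guardrail(**build_apply_guardrail_request(guardrail_id, "DRAFT", ticket_text))`.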

The following diagram illustrates this architecture.

Observability

Along with responsible AI, observability is vital for customer support applications. It provides deep, real-time visibility into model performance, usage patterns, and operational health, so that teams can detect and resolve issues proactively. With comprehensive observability, you can monitor key metrics such as latency and token consumption, and you can track and analyze input prompts and outputs for quality and compliance. This level of insight helps you identify and mitigate risks such as hallucinations, prompt injections, toxic language, and PII leakage, helping ensure that customer interactions remain safe, reliable, and aligned with regulatory requirements.
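Token consumption and latency are returned with every Amazon Bedrock Converse API response, in `usage` (`inputTokens`, `outputTokens`) and `metrics` (`latencyMs`). This minimal sketch collects those fields per call and aggregates them. The `CallMetrics` and `summarize` names are hypothetical, and the test uses a hand-built response dictionary instead of a live call.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class CallMetrics:
    model_id: str
    input_tokens: int
    output_tokens: int
    latency_ms: int


def metrics_from_converse(model_id: str, response: dict[str, Any]) -> CallMetrics:
    """Read token usage and latency from a Bedrock Converse API response."""
    usage = response.get("usage", {})
    return CallMetrics(
        model_id=model_id,
        input_tokens=usage.get("inputTokens", 0),
        output_tokens=usage.get("outputTokens", 0),
        latency_ms=response.get("metrics", {}).get("latencyMs", 0),
    )


def summarize(calls: list[CallMetrics]) -> dict[str, Any]:
    """Aggregate per-call metrics, for example for a dashboard or a periodic log line."""
    if not calls:
        return {"calls": 0, "total_tokens": 0, "max_latency_ms": 0}
    return {
        "calls": len(calls),
        "total_tokens": sum(c.input_tokens + c.output_tokens for c in calls),
        "max_latency_ms": max(c.latency_ms for c in calls),
    }
```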

Prerequisites

In this post, we use Atlassian Jira Service Management as an example. You can use the same general approach to integrate with other service management tools that provide APIs for programmatic access. The configuration required in Jira includes:

- A Jira Service Management project with an API token to enable programmatic access

- The following custom fields:

  - Name: Category, Type: Select List (multiple choices)

  - Name: Response, Type: Text Field (multi-line)

- A bot user to assign tickets to

The following code shows a sample Jira configuration:
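To illustrate how such settings might be consumed, this minimal sketch reads Jira connection details from a `.env` file into a typed object. The variable names (`JIRA_INSTANCE_URL`, `JIRA_USERNAME`, `JIRA_API_TOKEN`, `JIRA_BOT_ACCOUNT_ID`) are hypothetical. The hand-rolled parser covers only simple `KEY=VALUE` lines, and in practice the `python-dotenv` package’s `load_dotenv()` does the same job.

```python
import os
from dataclasses import dataclass
from pathlib import Path


@dataclass(frozen=True)
class JiraConfig:
    instance_url: str
    username: str
    api_token: str
    bot_user_account_id: str


def load_env_file(path: Path) -> None:
    """Minimal .env parser: KEY=VALUE lines and '#' comments; existing env vars win."""
    for raw in path.read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        os.environ.setdefault(key.strip(), value.strip().strip('"').strip("'"))


def jira_config_from_env() -> JiraConfig:
    """Read the (hypothetical) Jira variables and fail if any is missing."""
    names = {
        "instance_url": "JIRA_INSTANCE_URL",
        "username": "JIRA_USERNAME",
        "api_token": "JIRA_API_TOKEN",
        "bot_user_account_id": "JIRA_BOT_ACCOUNT_ID",
    }
    missing = [env for env in names.values() if not os.environ.get(env)]
    if missing:
        raise KeyError(f"Missing Jira settings: {', '.join(missing)}")
    return JiraConfig(**{field: os.environ[env] for field, env in names.items()})
```

Keep the `.env` file out of version control, because it holds the API token.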

In addition to Jira, the following services and Python packages are required:

- A valid AWS account.

- An AWS Identity and Access Management (IAM) role in the account that has sufficient permissions to create the necessary resources.

- Access to the following models hosted on Amazon Bedrock:

  - Mistral Large 2 (model ID: mistral.mistral-large-2407-v1:0)

  - Pixtral Large (model ID: us.mistral.pixtral-large-2502-v1:0). The Pixtral Large model is available in Amazon Bedrock through cross-Region inference profiles.

- A LangGraph application up and running locally. For instructions, see Quickstart: Launch Local LangGraph Server.

For this post, we use the us-west-2 AWS Region. For details on available Regions, see Amazon Bedrock endpoints and quotas.
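The image-comparison step used for damage claims can be expressed as a single Converse API request that contains two image blocks and a text instruction. This minimal sketch only builds the request, using the Pixtral Large inference profile ID listed above. The prompt text and the `same_product`/`damaged` reply format are hypothetical.

```python
from typing import Any

PIXTRAL_LARGE = "us.mistral.pixtral-large-2502-v1:0"  # cross-Region inference profile from the post

SUPPORTED_FORMATS = {"png", "jpeg", "gif", "webp"}  # image formats accepted by the Converse API


def image_block(data: bytes, fmt: str) -> dict[str, Any]:
    """Build a Converse API image content block from raw bytes."""
    if fmt not in SUPPORTED_FORMATS:
        raise ValueError(f"Unsupported image format: {fmt}")
    return {"image": {"format": fmt, "source": {"bytes": data}}}


def build_compare_request(stock_image: bytes, returned_image: bytes, fmt: str = "jpeg") -> dict[str, Any]:
    """Keyword arguments for bedrock-runtime converse: stock photo vs. returned-item photo."""
    prompt = (
        "The first image is the catalog photo of a product. The second image shows the item a "
        "customer returned. Is it the same product, and is it visibly damaged? "
        "Reply as JSON with boolean keys same_product and damaged."
    )
    return {
        "modelId": PIXTRAL_LARGE,
        "messages": [{
            "role": "user",
            "content": [image_block(stock_image, fmt), image_block(returned_image, fmt), {"text": prompt}],
        }],
        "inferenceConfig": {"maxTokens": 300, "temperature": 0.0},
    }
```

With credentials configured, pass the result to `boto3.client("bedrock-runtime", region_name="us-west-2").converse(**request)` and read the answer from `response["output"]["message"]["content"][0]["text"]`.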

The source code of this solution is available in the GitHub repository. This is example code; you should conduct your own due diligence and adhere to the principle of least privilege.

Implementation with LangGraph

At the core of the customer support automation is a set of specialized tools and functions designed to collect, analyze, and integrate data from service management systems and a SQLite database. These tools form the foundation of the system and enable it to deliver context-aware responses. In this section, we walk through the essential components that power the system.

BedrockClient class

The BedrockClient class is implemented in the cs_bedrock.py file. It provides a wrapper for interacting with Amazon Bedrock, specifically for managing language models and content safety guardrails in customer support applications. It simplifies initializing language models with appropriate configurations and managing guardrails. LangChain and LangGraph use this class to invoke LLMs on Amazon Bedrock.
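As a rough illustration of what such a wrapper centralizes, this minimal sketch holds the Region, default inference settings, and an optional guardrail, and then produces keyword arguments for the Converse API. `BedrockSettings` and `converse_kwargs` are hypothetical names, not the interface of the repository’s BedrockClient.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class BedrockSettings:
    """Hypothetical settings holder standing in for a BedrockClient-style wrapper."""
    region: str = "us-west-2"
    guardrail_id: Optional[str] = None
    guardrail_version: str = "DRAFT"
    defaults: dict[str, Any] = field(default_factory=lambda: {"maxTokens": 1024, "temperature": 0.0})

    def converse_kwargs(
        self, model_id: str, prompt: str, system: Optional[str] = None, **overrides: Any
    ) -> dict[str, Any]:
        """Build Converse API arguments, attaching the guardrail when one is configured."""
        kwargs: dict[str, Any] = {
            "modelId": model_id,
            "messages": [{"role": "user", "content": [{"text": prompt}]}],
            # Per-call overrides (e.g. temperature=0.2) replace the defaults.
            "inferenceConfig": {**self.defaults, **overrides},
        }
        if system:
            kwargs["system"] = [{"text": system}]
        if self.guardrail_id:
            kwargs["guardrailConfig"] = {
                "guardrailIdentifier": self.guardrail_id,
                "guardrailVersion": self.guardrail_version,
            }
        return kwargs
```

Usage: `boto3.client("bedrock-runtime", region_name=settings.region).converse(**settings.converse_kwargs(model_id, prompt))`. Centralizing the guardrail here means that no call site can forget to apply it.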

This class also provides methods to create guardrails for a responsible AI implementation. The following Amazon Bedrock Guardrails policy filters sexual content, violence, hate, insults, misconduct, and prompt attacks, and it helps prevent models from generating stock and investment advice, profanity, and hateful, violent, or sexual content. By mitigating prompt attacks, it also helps keep model vulnerabilities from being exploited.
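A policy along these lines can be expressed as arguments to the Amazon Bedrock control-plane `create_guardrail` call. This minimal sketch only builds the arguments. The topic name, definition, example, and blocked-message strings are hypothetical. Note that the `PROMPT_ATTACK` filter applies to inputs only, so its output strength must be `NONE`.

```python
from typing import Any

# Content filter categories named in the post.
FILTER_TYPES = ["SEXUAL", "VIOLENCE", "HATE", "INSULTS", "MISCONDUCT", "PROMPT_ATTACK"]


def build_guardrail_request(name: str) -> dict[str, Any]:
    """Keyword arguments for the bedrock (control-plane) create_guardrail call; values are illustrative."""
    filters = [
        {
            "type": filter_type,
            "inputStrength": "HIGH",
            # Prompt-attack detection only evaluates inputs.
            "outputStrength": "NONE" if filter_type == "PROMPT_ATTACK" else "HIGH",
        }
        for filter_type in FILTER_TYPES
    ]
    return {
        "name": name,
        "description": "Guardrail for customer support automation",
        "contentPolicyConfig": {"filtersConfig": filters},
        "topicPolicyConfig": {
            "topicsConfig": [{
                "name": "InvestmentAdvice",
                "definition": "Recommendations about stocks, funds, or other investments.",
                "examples": ["Which stocks should I buy?"],
                "type": "DENY",
            }]
        },
        "wordPolicyConfig": {"managedWordListsConfig": [{"type": "PROFANITY"}]},
        "blockedInputMessaging": "Sorry, I can't help with that request.",
        "blockedOutputsMessaging": "Sorry, I can't provide that response.",
    }
```

Usage: `boto3.client("bedrock", region_name="us-west-2").create_guardrail(**build_guardrail_request("cs-guardrail"))` returns the new `guardrailId` and `version`, which runtime calls then reference.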

Database class

The Database class is defined in the cs_db.py file. This class facilitates interactions with a SQLite database. It creates a local SQLite database and imports synthetic data related to customers, orders, refunds, and transactions, so that the necessary data is readily available to the workflow. The class also includes convenience wrapper functions that simplify querying the database.
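This minimal sketch shows the same create-seed-query pattern with Python’s built-in `sqlite3` module. The schema, the `SupportDB` class, and its methods are hypothetical, and the repository’s tables and columns may differ. Lookups use bound parameters, which matters here because identifiers come from customer-written tickets.

```python
import sqlite3
from typing import Any, Optional

# Hypothetical schema for illustration only.
SCHEMA = """
CREATE TABLE customers (customer_id TEXT PRIMARY KEY, name TEXT, email TEXT);
CREATE TABLE orders (order_no TEXT PRIMARY KEY, customer_id TEXT REFERENCES customers, status TEXT, amount REAL);
CREATE TABLE transactions (transaction_id TEXT PRIMARY KEY, order_no TEXT REFERENCES orders, status TEXT);
"""
TABLES = {"customers", "orders", "transactions"}


class SupportDB:
    """Minimal stand-in for a Database-style class: create, seed, and query SQLite."""

    def __init__(self, path: str = ":memory:") -> None:
        self.conn = sqlite3.connect(path)
        self.conn.row_factory = sqlite3.Row  # rows behave like mappings
        self.conn.executescript(SCHEMA)

    def seed(self, table: str, rows: list[tuple]) -> None:
        """Insert synthetic rows; table names can't be bound as parameters, so whitelist them."""
        if table not in TABLES:
            raise ValueError(f"Unknown table {table!r}")
        if not rows:
            return
        placeholders = ",".join("?" * len(rows[0]))
        with self.conn:  # commits on success, rolls back on error
            self.conn.executemany(f"INSERT INTO {table} VALUES ({placeholders})", rows)

    def get_order(self, order_no: str) -> Optional[dict[str, Any]]:
        """Look up one order with its customer, using a bound parameter."""
        row = self.conn.execute(
            "SELECT o.order_no, o.status, o.amount, c.name, c.email "
            "FROM orders o JOIN customers c ON c.customer_id = o.customer_id "
            "WHERE o.order_no = ?",
            (order_no,),
        ).fetchone()
        return dict(row) if row else None
```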

JiraSM class

The JiraSM class is implemented in the cs_jira_sm.py file. It serves as the interface to Jira Service Management. It connects to Jira by using the API token, user name, and instance URL, all of which are configured in the .env file, which keeps credentials out of the code and makes the target instance easy to change. The class handles ticket operations, including reading tickets and assigning them to a preconfigured bot user. It also supports downloading attachments from tickets and updating custom fields as needed.
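This minimal sketch shows the kind of Jira Cloud REST calls that such a class wraps: reading an issue, assigning it, and updating the Category and Response custom fields. It builds `urllib.request.Request` objects without sending them. It assumes REST API v2, where multi-line text fields accept plain strings (v3 expects Atlassian Document Format). Custom field IDs such as `customfield_10001` are instance-specific placeholders, and the helper names are hypothetical.

```python
import base64
import json
import urllib.request
from typing import Any, Optional


def _jira_request(base_url: str, user: str, token: str, method: str, path: str,
                  body: Optional[Any] = None) -> urllib.request.Request:
    """Build (but don't send) a Jira Cloud REST request using basic auth with an API token."""
    auth = base64.b64encode(f"{user}:{token}".encode()).decode()
    return urllib.request.Request(
        url=base_url.rstrip("/") + path,
        data=json.dumps(body).encode() if body is not None else None,
        method=method,
        headers={"Authorization": f"Basic {auth}", "Content-Type": "application/json",
                 "Accept": "application/json"},
    )


def get_issue_request(base_url: str, user: str, token: str, issue_key: str) -> urllib.request.Request:
    return _jira_request(base_url, user, token, "GET", f"/rest/api/2/issue/{issue_key}")


def assign_request(base_url: str, user: str, token: str, issue_key: str,
                   account_id: str) -> urllib.request.Request:
    return _jira_request(base_url, user, token, "PUT", f"/rest/api/2/issue/{issue_key}/assignee",
                         {"accountId": account_id})


def update_fields_request(base_url: str, user: str, token: str, issue_key: str,
                          category_field: str, category: str,
                          response_field: str, response_text: str) -> urllib.request.Request:
    """Set a multi-select Category field and a multi-line Response field."""
    fields = {category_field: [{"value": category}], response_field: response_text}
    return _jira_request(base_url, user, token, "PUT", f"/rest/api/2/issue/{issue_key}", {"fields": fields})
```

Send a request with `urllib.request.urlopen(req)`. Wrapping the calls in one class keeps authentication and field IDs in a single place.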

CustomerSupport class

The CustomerSupport class is implemented in the cs_cust_support_flow.py file. This class encapsulates the customer support processing logic and uses LangGraph and Amazon Bedrock. It orchestrates the customer support workflow through LangGraph nodes and tools. The workflow first determines the ticket’s category by analyzing its content and classifying it as related to transactions, deliveries, refunds, or other issues, and it updates the support ticket with the detected category. Next, the workflow extracts pertinent information such as transaction IDs or order numbers, which might involve analyzing both text and images, and queries the database for relevant details. It then generates a response that is context-aware, adheres to content safety guidelines, and maintains a professional tone. Finally, the workflow updates Jira by setting the category, writing the response, and managing attachments as needed.
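The categorization step needs the model’s free-form reply mapped onto a fixed set of labels before it can be written to the Jira Category field. This minimal sketch uses the four categories named above. The prompt text and helper names are hypothetical, and the simple substring match is an assumption that a production version might replace with constrained output or tool calling.

```python
from typing import Iterable

# Categories named in the post; "Other" is the fallback bucket.
CATEGORIES = ("Transactions", "Deliveries", "Refunds", "Other")


def categorization_prompt(summary: str, description: str,
                          categories: Iterable[str] = CATEGORIES) -> str:
    """Hypothetical prompt asking the model for exactly one category label."""
    options = ", ".join(categories)
    return (
        f"Classify the support ticket into exactly one of: {options}.\n"
        "Reply with the category name only.\n\n"
        f"Summary: {summary}\nDescription: {description}"
    )


def normalize_category(llm_reply: str, categories: Iterable[str] = CATEGORIES) -> str:
    """Map a model reply such as ' refunds.' onto a known category, defaulting to 'Other'."""
    reply = llm_reply.strip().lower()
    for category in categories:
        if category.lower() in reply:
            return category
    return "Other"
```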

The LangGraph orchestration is implemented in the build_graph function, as illustrated in the following code. This function also generates a Mermaid graph as a visual representation of the workflow. Together, the graph definition and its diagram give a structured, inspectable view of how customer support tasks are handled.
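This dependency-free outline has the same shape as a LangGraph workflow: nodes that return partial state updates, fixed edges, and a conditional edge chosen from the current state. All node names and node logic are hypothetical placeholders, not the repository’s build_graph. In LangGraph itself, this wiring is expressed with `StateGraph(State)`, `add_node`, `add_edge`, `add_conditional_edges`, and `compile()`, and the compiled graph’s `get_graph().draw_mermaid()` returns the Mermaid source for the diagram.

```python
from typing import Any, Callable

State = dict[str, Any]  # simplified; a TypedDict would be used in practice


# Hypothetical nodes: each takes the current state and returns a partial update.
def categorize(state: State) -> State:
    text = (state["summary"] + " " + state["description"]).lower()
    return {"category": "Refunds" if "refund" in text else "Other"}  # stand-in for an LLM call


def extract_order(state: State) -> State:
    return {"order_no": "O-1"}  # stand-in for LLM/VLM extraction plus a database lookup


def generate_response(state: State) -> State:
    return {"response": f"Re {state['ticket_key']}: category {state['category']}."}


NODES: dict[str, Callable[[State], State]] = {
    "categorize": categorize,
    "extract_order": extract_order,
    "generate_response": generate_response,
}
EDGES = {"extract_order": "generate_response", "generate_response": "END"}  # fixed edges


def route_after_categorize(state: State) -> str:
    """Conditional edge: only order-related categories need the extraction step."""
    needs_lookup = state["category"] in {"Refunds", "Deliveries", "Transactions"}
    return "extract_order" if needs_lookup else "generate_response"


def run(state: State, start: str = "categorize") -> State:
    """Walk the graph from start to END, merging each node's update (last write wins)."""
    node = start
    while node != "END":
        state = {**state, **NODES[node](state)}
        node = route_after_categorize(state) if node == "categorize" else EDGES[node]
    return state
```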

LangGraph generates the following Mermaid diagram to visually represent the workflow.

Utility class

The Utility class, implemented in the cs_util.py file, provides helper functions that support the customer support automation, including logging, file handling, usage metric tracking, and image processing. It serves as a central hub for these helper methods, which streamlines common tasks across the application and promotes code reuse and maintainability.

A key feature of this class is its logging. It provides methods to log informational messages, errors, and significant events to the cs_logs.log file. It also tracks Amazon Bedrock LLM token usage and latency metrics for detailed performance monitoring, and it logs the application-generated prompts and the LLM-generated responses to aid troubleshooting and debugging. Standard log shipping agents can pick up these log files and forward them automatically to your preferred log monitoring system, so that system activity is monitored and quickly accessible for analysis.
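This minimal sketch shows file-based logging in the same spirit, using Python’s standard `logging` module. It writes one line per event, with LLM usage serialized as JSON so that downstream log tools can parse it. The logger name, format string, and `log_llm_usage` helper are hypothetical.

```python
import json
import logging
import os
from pathlib import Path


def get_logger(log_path: Path, name: str = "cs_automation") -> logging.Logger:
    """Return a logger that appends to log_path; avoids adding a duplicate file handler."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    target = os.path.abspath(log_path)
    if not any(isinstance(h, logging.FileHandler) and h.baseFilename == target for h in logger.handlers):
        handler = logging.FileHandler(log_path, encoding="utf-8")
        handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(name)s %(message)s"))
        logger.addHandler(handler)
    return logger


def log_llm_usage(logger: logging.Logger, model_id: str, input_tokens: int,
                  output_tokens: int, latency_ms: int) -> None:
    """Emit token usage and latency as a JSON payload on a single log line."""
    logger.info("llm_usage %s", json.dumps({
        "model_id": model_id,
        "input_tokens": input_tokens,
        "output_tokens": output_tokens,
        "latency_ms": latency_ms,
    }))
```

Usage: `log_llm_usage(get_logger(Path("cs_logs.log")), model_id, 120, 30, 900)`. Because each event is a single line, the file is easy for log shipping agents to tail.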

Run the agentic workflow

Now that the customer support workflow is defined, it can be run for various ticket types. The following functions use the provided ticket key to fetch the corresponding Jira ticket and download any attachments. They then initialize the State object with details such as the ticket key, summary, description, attachment file path, and a system prompt for the LLM. This State object is used throughout the workflow execution.
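This minimal sketch shows the state initialization. It assumes that the ticket was fetched as Jira REST API v2 issue JSON (where `fields.description` is plain text, whereas v3 returns Atlassian Document Format) and that attachments were already downloaded into a local folder. The `initial_state` helper and the `SYSTEM_PROMPT` text are hypothetical.

```python
from pathlib import Path
from typing import Any

# Hypothetical system prompt for the response-generation model.
SYSTEM_PROMPT = "You are a courteous customer support assistant for an ecommerce store."


def initial_state(issue: dict[str, Any], attachment_dir: Path) -> dict[str, Any]:
    """Build the workflow's starting state from a Jira issue payload."""
    fields = issue.get("fields", {})
    attachments = fields.get("attachment") or []
    return {
        "ticket_key": issue["key"],
        "summary": fields.get("summary") or "",
        "description": fields.get("description") or "",  # description can be null in Jira
        "attachments": [str(attachment_dir / a["filename"]) for a in attachments],
        "system_prompt": SYSTEM_PROMPT,
    }
```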

The following code snippet invokes the workflow for the Jira ticket with key AS-6:

The following screenshot shows the Jira ticket before processing. Notice that the Response and Category fields are empty and the ticket is unassigned.

The following screenshot shows the Jira ticket after processing. The Category field is set to Refunds, and the Response field contains the AI-generated content.

The run logs LLM usage information as follows:

Clean up

Delete any IAM roles and policies that you created specifically for this post. Delete the local copy of this post’s code.

If you no longer need access to an Amazon Bedrock FM, you can remove access to it. For instructions, see Add or remove access to Amazon Bedrock foundation models.

Delete the temporary files and guardrails used in this post with the following code:
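This minimal sketch performs a cleanup of that kind. It removes a local temporary folder and, if given a boto3 `bedrock` (control-plane) client and a guardrail ID, deletes the guardrail with `delete_guardrail`. The `clean_up` helper and the folder layout are hypothetical, and the test substitutes a fake client for a real one.

```python
import shutil
from pathlib import Path
from typing import Any, Optional


def clean_up(temp_dir: Path, bedrock_client: Optional[Any] = None,
             guardrail_id: Optional[str] = None) -> list[str]:
    """Remove local temporary files and, if requested, delete the guardrail.

    Returns a list of the actions that were taken.
    """
    actions: list[str] = []
    if temp_dir.exists():
        shutil.rmtree(temp_dir)
        actions.append(f"removed {temp_dir}")
    if bedrock_client is not None and guardrail_id:
        # Control-plane operation on a boto3 "bedrock" client (not "bedrock-runtime").
        bedrock_client.delete_guardrail(guardrailIdentifier=guardrail_id)
        actions.append(f"deleted guardrail {guardrail_id}")
    return actions
```

Usage: `clean_up(Path("attachments"), boto3.client("bedrock", region_name="us-west-2"), guardrail_id)`.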

Conclusion

In this post, we built an AI-driven customer support solution using Amazon Bedrock, LangGraph, and Mistral models. This agent-based workflow handles diverse customer queries by integrating multiple data sources and extracting relevant information from tickets and screenshots. It also evaluates damage claims to help mitigate fraudulent returns. The solution is designed to be flexible, so you can add new scenarios and data sources as business needs evolve. With this multi-agent approach, you can build robust, scalable, and intelligent systems that expand what generative AI can do in customer support.

Want to explore further? Check out the following GitHub repo, where you can see the code in action and experiment with the solution yourself. The repository includes step-by-step instructions for setting up and running the multi-agent system, along with code for interacting with data sources and agents, routing data, and visualizing workflows.

About the authors

Deepesh Dhapola is a Senior Solutions Architect at AWS India, specializing in helping financial services and fintech clients optimize and scale their applications on the AWS Cloud. With a strong focus on trending AI technologies, including generative AI, AI agents, and the Model Context Protocol (MCP), Deepesh uses his expertise in machine learning to design innovative, scalable, and secure solutions. Passionate about the transformative potential of AI, he actively explores cutting-edge advancements to drive efficiency and innovation for AWS customers. Outside of work, Deepesh enjoys spending quality time with his family and experimenting with diverse culinary creations.
