In my previous articles, I’ve explored and compared many AI tools, for example, Google’s Data Science Agent, ChatGPT vs. Claude vs. Gemini for Data Science, DeepSeek V3, etc. However, this is only a small subset of all the AI tools available for Data Science. Just to name a few that I use at work:

- OpenAI API: I use it to categorize and summarize customer feedback and surface product pain points (see my tutorial article).

- ChatGPT and Gemini: They help me draft Slack messages and emails, write analysis reports, and even draft performance reviews.

- Glean AI: I use Glean AI to quickly find answers across internal documentation and communications.

- Cursor and Copilot: I enjoy just pressing tab-tab to auto-complete code and comments.

- Hex Magic: I use Hex for collaborative data notebooks at work. They also offer a feature called Hex Magic to write code and fix bugs using conversational AI.

- Snowflake Cortex: Cortex AI allows users to call LLM endpoints and build RAG and text-to-SQL services using data in Snowflake.

I’m sure you can add even more to this list, and new AI tools are being launched every day. It’s almost impossible to compile a complete list at this point. Therefore, in this article, I want to take one step back and focus on a bigger question: what do we really need as data professionals, and how can AI help?

In the section below, I’ll focus on two main directions: eliminating low-value tasks and accelerating high-value work.

1. Eliminating Low-Value Tasks

I became a data scientist because I really enjoy uncovering business insights from complex data and driving business decisions. However, having worked in the industry for over seven years now, I have to admit that not all the work is as exciting as I had hoped. Before conducting advanced analyses or building machine learning models, there are many low-value work streams that are unavoidable every day, and in many cases, it’s because we don’t have the right tooling to empower our stakeholders for self-serve analytics. Let’s look at where we are today and the ideal state:

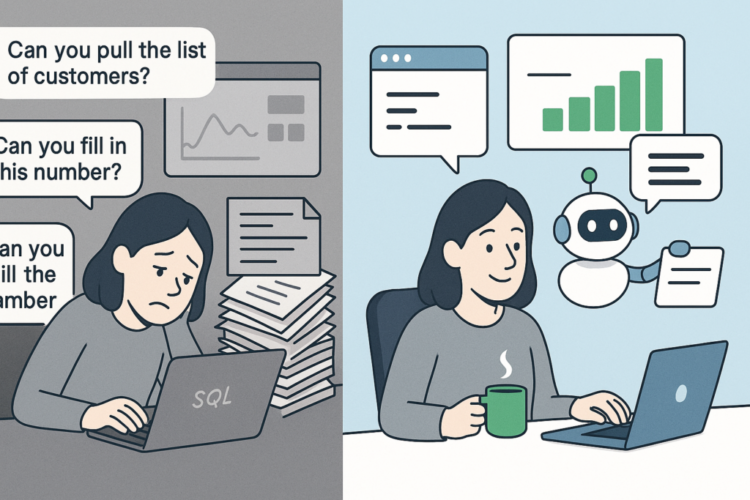

Current state: We work as data interpreters and gatekeepers (sometimes “SQL monkeys”)

- Simple data pull requests come to me and my team on Slack every week asking, “What was the GMV last month?” “Can you pull the list of customers who meet these criteria?” “Can you help me fill in this number on the deck that I need to present tomorrow?”

- BI tools don’t support self-service use cases well. We adopted BI tools like Looker and Tableau so stakeholders can explore the data and monitor the metrics easily. But the reality is that there’s always a trade-off between simplicity and self-serviceability. Sometimes we make the dashboards easy to understand with a few metrics, but then they can only serve a few use cases. Meanwhile, if we make the tool very customizable, with the capability to explore the metrics and underlying data freely, stakeholders might find it confusing and lack the confidence to use it, and in the worst case, the data is pulled and interpreted the wrong way.

- Documentation is sparse or outdated. This is a common situation, but it can have different causes: maybe we move fast and focus on delivering results, or there aren’t good data documentation and governance policies in place. As a result, tribal knowledge becomes the bottleneck for people outside the data team who want to use the data.

Ideal state: Empower stakeholders to self-serve so we can minimize low-value work

- Stakeholders can do simple data pulls and answer basic data questions easily and confidently.

- Data teams spend less time on repetitive reporting or one-off basic queries.

- Dashboards are discoverable, interpretable, and actionable without hand-holding.

So, to get closer to the ideal state, what role can AI play here? From what I’ve observed, these are the common directions in which AI tools are closing the gap:

- Query data with natural language (Text-to-SQL): One way to lower the technical barrier is to enable stakeholders to query the data with natural language. There are lots of Text-to-SQL efforts in the industry:

- For example, Snowflake is one company that has made a lot of progress in Text2SQL models and has started integrating the capability into its product.

- Many companies (including mine) have also explored in-house Text2SQL solutions. For example, Uber shared their journey with Uber’s QueryGPT to make data querying more accessible for their Operations team. The article explained in detail how Uber designed a multi-agent architecture for query generation. It also surfaced major challenges in this area, including accurately interpreting user intent, handling large table schemas, and avoiding hallucinations.

- Honestly, the bar for making Text-to-SQL work is very high, because the queries have to be accurate: even if the tool fails just once, it can destroy trust, and eventually stakeholders will come back to you to validate the queries (then you have to read and rewrite the queries, which almost doubles the work 🙁). So far, I haven’t found a Text-to-SQL model or tool that works perfectly. I only see it as achievable when you are querying a very small subset of well-documented core datasets for specific and standardized use cases, and it is very hard to scale to all the available data and different business scenarios.

- But of course, given the large amount of investment in this area and the rapid development of AI, I’m sure we will get closer and closer to accurate and scalable Text-to-SQL solutions.

- Chat-based BI assistant: Another common way to improve stakeholders’ experience with BI tools is the chat-based BI assistant. This actually goes one step further than Text-to-SQL: instead of generating a SQL query based on a user prompt, it responds with a visualization plus a text summary.

- Gemini in Looker is an example here. Looker is owned by Google, so it is very natural for them to integrate with Gemini. Another advantage Looker has in building its AI features is that data fields are already documented in the LookML semantic layer, with common joins defined and common metrics built into dashboards. Therefore, it has a lot of good data to learn from. Gemini allows users to adjust Looker dashboards, ask questions about the data, and even build custom data agents for Conversational Analytics. However, based on my limited experimentation with the tool, it sometimes times out and fails to answer simple questions. Let me know if you have had a different experience and made it work…

- Tableau also launched a similar feature, Tableau AI. I haven’t used it myself, but based on the demo, it helps the data team prepare data and build dashboards quickly using natural language, and it summarizes data insights into “Tableau Pulse” so stakeholders can easily spot metric changes and abnormal trends.

- Data Catalog Tools: AI can also help with the challenge of sparse or outdated data documentation.

- During one internal hackathon, I remember one project from our data engineers was to use an LLM to increase table documentation coverage. AI is able to read the codebase and describe the columns accordingly, with high accuracy in most cases, so it can help improve documentation quickly with limited human validation and adjustments.

- Similarly, when my team creates new tables, we have started asking Cursor to write the table documentation YAML files, which saves us time and produces high-quality output.

- There are also many data catalog and governance tools that have integrated AI. When I google “ai data catalog”, I see the logos of data catalog tools like Atlan, Alation, Collibra, Informatica, etc. (disclaimer: I’ve used none of them). This is clearly an industry trend.
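Going back to Text-to-SQL: if it only works reliably on a small subset of well-documented core datasets, a natural guardrail is to check every generated query against that curated subset before results reach a stakeholder. Below is a minimal sketch of such a check, assuming the model’s output is a single SQL string and using an in-memory SQLite database. The schema, table names, and `ALLOWED_TABLES` are hypothetical. It does not generate SQL and cannot prove a query is semantically right. It only rejects queries that are not plain SELECTs, touch tables outside the curated set, or reference tables or columns that don’t exist (a common form of hallucination).

```python
import re
import sqlite3

# Hypothetical curated subset of well-documented core tables.
ALLOWED_TABLES = {"orders", "customers"}

# Hypothetical schema for those tables (SQLite dialect).
SCHEMA_DDL = """
CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, region TEXT);
CREATE TABLE orders (
    order_id INTEGER PRIMARY KEY,
    customer_id INTEGER REFERENCES customers (customer_id),
    order_month TEXT,
    gmv REAL
);
"""

TABLE_PATTERN = re.compile(r"\b(?:from|join)\s+([A-Za-z_][A-Za-z0-9_]*)", re.IGNORECASE)


def referenced_tables(sql: str) -> set[str]:
    """Crude extraction of identifiers that follow FROM or JOIN (no CTE or subquery handling)."""
    return {name.lower() for name in TABLE_PATTERN.findall(sql)}


def validate_generated_sql(sql: str, conn: sqlite3.Connection) -> list[str]:
    """Return the problems found in an LLM-generated query; an empty list means it passed."""
    statement = sql.strip().rstrip(";").strip()
    if not statement.lower().startswith("select"):
        return ["only read-only SELECT statements are allowed"]
    if ";" in statement:
        return ["only a single statement is allowed"]

    problems = []
    unknown = referenced_tables(statement) - ALLOWED_TABLES
    if unknown:
        problems.append(f"uses tables outside the curated set: {sorted(unknown)}")
    try:
        # EXPLAIN compiles the query against the schema without reading any rows,
        # so misspelled or hallucinated table and column names raise an error here.
        conn.execute(f"EXPLAIN {statement}")
    except sqlite3.Error as exc:
        problems.append(f"does not compile: {exc}")
    return problems


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA_DDL)
    for candidate in [
        "SELECT SUM(gmv) FROM orders WHERE order_month = '2024-05';",
        "SELECT SUM(revenue) FROM orders",
        "DELETE FROM orders",
    ]:
        print(candidate, "->", validate_generated_sql(candidate, conn) or "OK")
```

The table-name extraction is deliberately crude (a regex over FROM/JOIN). A real implementation would use a proper SQL parser for the warehouse’s dialect and run the compile check against the warehouse itself.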

2. Accelerating High-Value Work

Now that we’ve talked about how AI can help eliminate low-value tasks, let’s discuss how it can accelerate high-value data projects. Here, high-value work refers to data projects that combine technical excellence with business context and drive meaningful impact through cross-functional collaboration. For example, a deep-dive analysis that uncovers product usage patterns and leads to product changes, or a churn prediction model that identifies churn-risk customers and results in churn-prevention initiatives. Let’s compare the current state and the ideal future:

Current state: Productivity bottlenecks exist in everyday workflows

- EDA is time-consuming. This step is critical for getting an initial understanding of the data, but it can take a long time to conduct all the univariate and multivariate analyses.

- Time lost to coding and debugging. Let’s be honest: no one can remember all the numpy and pandas syntax and sklearn model parameters. We constantly need to look up documentation while coding.

- Rich unstructured data is not fully utilized. Businesses generate a lot of text data every day from surveys, support tickets, and reviews. But extracting insights from it at scale remains a challenge.

Ideal state: Data scientists focus on deep thinking, not syntax

- Writing code feels faster without the interruption of looking up syntax.

- Analysts spend more time interpreting results and less time wrangling data.

- Unstructured data is no longer a blocker and can be analyzed quickly.

Seeing the ideal state, I’m sure you already have some AI tool candidates in mind. Let’s see how AI can make, or is already making, a difference:

- AI coding and debugging assistants. I think this is by far the most widely adopted type of AI tool for anyone who codes. And we are already seeing it iterate.

- When LLM chatbots like ChatGPT and Claude came out, engineers realized they could just throw their syntax questions or error messages at the chatbot and get high-accuracy answers. This is still an interruption to the coding workflow, but much better than clicking through a dozen StackOverflow tabs, which already feels like last century.

- Later, we saw more and more integrated AI coding tools pop up: GitHub Copilot and Cursor integrate with your code editor and can read through your codebase to proactively suggest code completions and debug issues within your IDE.

- As I briefly mentioned at the beginning, data tools like Snowflake and Hex have also started to embed AI coding assistants to help data analysts and data scientists write code easily.

- AI for EDA and analysis. This is somewhat similar to the chat-based BI assistant tools I mentioned above, but the goal is more ambitious: these tools start with the raw datasets and aim to automate the whole analysis cycle of data cleaning, pre-processing, exploratory analysis, and sometimes even modeling. These are the tools usually marketed as “replacing data analysts” (but are they?).

- Google Data Science Agent is a very impressive new tool that can generate a whole Jupyter Notebook from a simple prompt. I recently wrote an article showing what it can and cannot do. In short, it can quickly spin up a well-structured and functioning Jupyter Notebook based on a customizable execution plan. However, it cannot modify the notebook based on follow-up questions, it still requires someone with solid data science knowledge to audit the methods and make manual iterations, and it needs a clear data problem statement with clean and well-documented datasets. Therefore, I view it as a great tool for saving us some time on starter code, rather than a threat to our jobs.

- ChatGPT’s Data Analyst tool also falls into this category. It allows users to upload a dataset and chat with it to get their analysis done, visualizations generated, and questions answered. You can find my prior article discussing its capabilities here. It faces similar challenges and works better as an EDA helper than as a replacement for data analysts.

- Easy-to-use and scalable NLP capabilities. LLMs are great at understanding and producing language. As a result, NLP work has become dramatically easier with LLMs today.

- My company hosts an internal hackathon every year. I remember my hackathon project three years ago was to try BERT and other traditional topic modeling methods to analyze NPS survey responses, which was fun but honestly very hard to make accurate and meaningful for the business. Then two years ago, during the hackathon, we tried the OpenAI API to categorize and summarize that same feedback data. It worked like magic: you can do high-accuracy topic modeling, sentiment analysis, and feedback categorization all in a single API call, and the outputs fit well into our business context thanks to the system prompt. We later built an internal pipeline that scaled easily to text data across survey responses, support tickets, Sales calls, user research notes, etc. It has become the centralized customer feedback hub and has informed our product roadmap. You can find more in this tech blog.

- There are also many new companies building packaged AI tools for customer feedback analysis, product review analysis, customer service assistance, etc. The idea is the same in all of them: leverage the ability of LLMs to understand text in context and hold conversations to create specialized AI agents for text analytics.
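The single-call pattern described above (one request that returns a category, a sentiment, and a summary, steered by a system prompt) can be sketched roughly as follows. This is a minimal illustration, not the pipeline from the tech blog. The category list, the prompt wording, and the `complete` callable are all hypothetical. `complete` stands in for whatever LLM client you use, for example a thin wrapper around the OpenAI chat completions endpoint that returns the reply text. The validation step matters at scale: a model can occasionally return malformed JSON or a label outside the taxonomy, and those rows should be flagged for review rather than silently counted.

```python
import json
from typing import Callable

# Hypothetical taxonomy; a real one would reflect your own product areas.
CATEGORIES = ("pricing", "performance", "onboarding", "integrations", "other")
SENTIMENTS = ("positive", "neutral", "negative")

# The system prompt carries the business context and fixes the output format.
SYSTEM_PROMPT = (
    "You analyze customer feedback for a B2B software product. "
    f"Pick exactly one category from: {', '.join(CATEGORIES)}. "
    f"Pick one sentiment from: {', '.join(SENTIMENTS)}. "
    "Write a one-sentence summary. "
    'Reply with JSON only, e.g. {"category": "pricing", "sentiment": "negative", "summary": "..."}'
)


def build_messages(feedback: str) -> list[dict]:
    """Chat-style messages: system prompt first, then the raw feedback as the user turn."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": feedback},
    ]


def _flag_for_review() -> dict:
    return {"category": None, "sentiment": None, "summary": None, "needs_review": True}


def categorize(feedback: str, complete: Callable[[list[dict]], str]) -> dict:
    """One model call returns category, sentiment, and summary; the reply is validated before use."""
    raw = complete(build_messages(feedback))
    try:
        parsed = json.loads(raw)
    except json.JSONDecodeError:
        return _flag_for_review()
    if not (
        isinstance(parsed, dict)
        and parsed.get("category") in CATEGORIES
        and parsed.get("sentiment") in SENTIMENTS
        and isinstance(parsed.get("summary"), str)
    ):
        return _flag_for_review()
    return {
        "category": parsed["category"],
        "sentiment": parsed["sentiment"],
        "summary": parsed["summary"].strip(),
        "needs_review": False,
    }
```

Scaling to survey responses, tickets, and call notes is then mostly a loop (or a thread pool) over records, with the `needs_review` rows routed to a human.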

Conclusion

It’s easy to get caught up chasing the latest AI tools. But at the end of the day, what matters most is using AI to eliminate what slows us down and accelerate what moves us forward. The key is to stay pragmatic: adopt what works today, stay curious about what’s emerging, and never lose sight of the core purpose of data science, which is to drive better decisions through better understanding.