Generative AI is quickly remodeling the trendy office, providing unprecedented capabilities that increase how we work together with textual content and information. At Amazon Internet Providers (AWS), we acknowledge that a lot of our clients depend on the acquainted Microsoft Workplace suite of purposes, together with Phrase, Excel, and Outlook, because the spine of their every day workflows. On this weblog put up, we showcase a robust resolution that seamlessly integrates AWS generative AI capabilities within the type of massive language fashions (LLMs) primarily based on Amazon Bedrock into the Workplace expertise. By harnessing the most recent developments in generative AI, we empower staff to unlock new ranges of effectivity and creativity throughout the instruments they already use each day. Whether or not it’s drafting compelling textual content, analyzing advanced datasets, or gaining extra in-depth insights from info, integrating generative AI with Workplace suite transforms the best way groups strategy their important work. Be part of us as we discover how your group can leverage this transformative expertise to drive innovation and enhance worker productiveness.

Answer overview

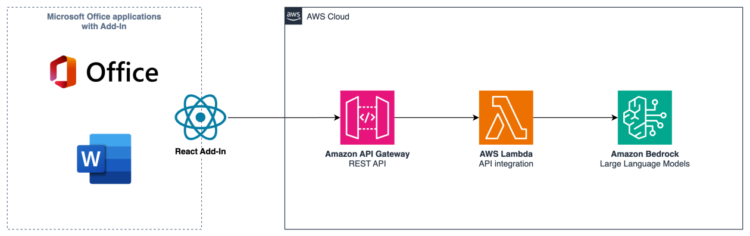

Determine 1: Answer structure overview

The answer structure in Determine 1 exhibits how Workplace purposes work together with a serverless backend hosted on the AWS Cloud by means of an Add-In. This structure permits customers to leverage Amazon Bedrock’s generative AI capabilities instantly from throughout the Workplace suite, enabling enhanced productiveness and insights inside their current workflows.

Parts deep-dive

Workplace Add-ins

Workplace Add-ins enable extending Workplace merchandise with customized extensions constructed on normal internet applied sciences. Utilizing AWS, organizations can host and serve Workplace Add-ins for customers worldwide with minimal infrastructure overhead.

An Workplace Add-in consists of two components:

The code snippet beneath demonstrates a part of a perform that might run each time a consumer invokes the plugin, performing the next actions:

- Provoke a request to the generative AI backend, offering the consumer immediate and accessible context within the request physique

- Combine the outcomes from the backend response into the Phrase doc utilizing Microsoft’s Workplace JavaScript APIs. Word that these APIs use objects as namespaces, assuaging the necessity for express imports. As an alternative, we use the globally accessible namespaces, comparable to

Phrase, to instantly entry related APIs, as proven in following instance snippet.

Generative AI backend infrastructure

The AWS Cloud backend consists of three parts:

- Amazon API Gateway acts as an entry level, receiving requests from the Workplace purposes’ Add-in. API Gateway helps a number of mechanisms for controlling and managing entry to an API.

- AWS Lambda handles the REST API integration, processing the requests and invoking the suitable AWS providers.

- Amazon Bedrock is a completely managed service that makes basis fashions (FMs) from main AI startups and Amazon accessible by way of an API, so you’ll be able to select from a variety of FMs to seek out the mannequin that’s greatest suited on your use case. With Bedrock’s serverless expertise, you may get began shortly, privately customise FMs with your individual information, and shortly combine and deploy them into your purposes utilizing the AWS instruments with out having to handle infrastructure.

LLM prompting

Amazon Bedrock lets you select from a broad collection of basis fashions for prompting. Right here, we use Anthropic’s Claude 3.5 Sonnet on Amazon Bedrock for completions. The system immediate we used on this instance is as follows:

You might be an workplace assistant serving to people to write down textual content for his or her paperwork.

[When preparing the answer, take into account the following text: {context} ]

Earlier than answering the query, assume by means of it step-by-step throughout the Within the immediate, we first give the LLM a persona, indicating that it’s an workplace assistant serving to people. The second, non-compulsory line incorporates textual content that has been chosen by the consumer within the doc and is offered as context to the LLM. We particularly instruct the LLM to first mimic a step-by-step thought course of for arriving on the reply (chain-of-thought reasoning), an efficient measure of prompt-engineering to enhance the output high quality. Subsequent, we instruct it to detect the consumer’s language from their query so we are able to later seek advice from it. Lastly, we instruct the LLM to develop its reply utilizing the beforehand detected consumer language inside response tags, that are used as the ultimate response. Whereas right here, we use the default configuration for inference parameters comparable to temperature, that may shortly be configured with each LLM immediate. The consumer enter is then added as a consumer message to the immediate and despatched by way of the Amazon Bedrock Messages API to the LLM.

Implementation particulars and demo setup in an AWS account

As a prerequisite, we have to ensure that we’re working in an AWS Area with Amazon Bedrock help for the inspiration mannequin (right here, we use Anthropic’s Claude 3.5 Sonnet). Additionally, entry to the required related Amazon Bedrock basis fashions must be added. For this demo setup, we describe the handbook steps taken within the AWS console. If required, this setup will also be outlined in Infrastructure as Code.

To arrange the combination, comply with these steps:

- Create an AWS Lambda perform with Python runtime and beneath code to be the backend for the API. Be sure that we’ve got Powertools for AWS Lambda (Python) accessible in our runtime, for instance, by attaching aLambda layer to our perform. Be sure that the Lambda perform’s IAM position gives entry to the required FM, for instance:

The next code block exhibits a pattern implementation for the REST API Lambda integration primarily based on a Powertools for AWS Lambda (Python) REST API occasion handler:

- Create an API Gateway REST API with a Lambda proxy integration to reveal the Lambda perform by way of a REST API. You possibly can comply with this tutorial for making a REST API for the Lambda perform through the use of the API Gateway console. By making a Lambda proxy integration with a proxy useful resource, we are able to route requests to the sources to the Lambda perform. Observe the tutorial to deploy the API and be aware of the API’s invoke URL. Ensure to configure satisfactory entry management for the REST API.

We will now invoke and take a look at our perform by way of the API’s invoke URL. The next instance makes use of curl to ship a request (make certain to interchange all placeholders in curly braces as required), and the response generated by the LLM:

If required, the created sources might be cleaned up by 1) deleting the API Gateway REST API, and a pair of) deleting the REST API Lambda perform and related IAM position.

Instance use circumstances

To create an interactive expertise, the Workplace Add-in integrates with the cloud back-end that implements conversational capabilities with help for added context retrieved from the Workplace JavaScript API.

Subsequent, we exhibit two completely different use circumstances supported by the proposed resolution, textual content era and textual content refinement.

Textual content era

Determine 2: Textual content era use-case demo

Within the demo in Determine 2, we present how the plug-in is prompting the LLM to supply a textual content from scratch. The consumer enters their question with some context into the Add-In textual content enter space. Upon sending, the backend will immediate the LLM to generate respective textual content, and return it again to the frontend. From the Add-in, it’s inserted into the Phrase doc on the cursor place utilizing the Workplace JavaScript API.

Textual content refinement

Determine 3: Textual content refinement use-case demo

In Determine 3, the consumer highlighted a textual content phase within the work space and entered a immediate into the Add-In textual content enter space to rephrase the textual content phase. Once more, the consumer enter and highlighted textual content are processed by the backend and returned to the Add-In, thereby changing the beforehand highlighted textual content.

Conclusion

This weblog put up showcases how the transformative energy of generative AI might be included into Workplace processes. We described an end-to-end pattern of integrating Workplace merchandise with an Add-in for textual content era and manipulation with the ability of LLMs. In our instance, we used managed LLMs on Amazon Bedrock for textual content era. The backend is hosted as a completely serverless software on the AWS cloud.

Textual content era with LLMs in Workplace helps staff by streamlining their writing course of and boosting productiveness. Workers can leverage the ability of generative AI to generate and edit high-quality content material shortly, releasing up time for different duties. Moreover, the combination with a well-known software like Phrase gives a seamless consumer expertise, minimizing disruptions to current workflows.

To study extra about boosting productiveness, constructing differentiated experiences, and innovating sooner with AWS go to the Generative AI on AWS web page.

In regards to the Authors

Martin Maritsch is a Generative AI Architect at AWS ProServe specializing in Generative AI and MLOps. He helps enterprise clients to attain enterprise outcomes by unlocking the complete potential of AI/ML providers on the AWS Cloud.

Martin Maritsch is a Generative AI Architect at AWS ProServe specializing in Generative AI and MLOps. He helps enterprise clients to attain enterprise outcomes by unlocking the complete potential of AI/ML providers on the AWS Cloud.

Miguel Pestana is a Cloud Utility Architect within the AWS Skilled Providers workforce with over 4 years of expertise within the automotive trade delivering cloud native options. Exterior of labor Miguel enjoys spending its days on the seaside or with a padel racket in a single hand and a glass of sangria on the opposite.

Miguel Pestana is a Cloud Utility Architect within the AWS Skilled Providers workforce with over 4 years of expertise within the automotive trade delivering cloud native options. Exterior of labor Miguel enjoys spending its days on the seaside or with a padel racket in a single hand and a glass of sangria on the opposite.

Carlos Antonio Perea Gomez is a Builder with AWS Skilled Providers. He allows clients to develop into AWSome throughout their journey to the cloud. When not up within the cloud he enjoys scuba diving deep within the waters.

Carlos Antonio Perea Gomez is a Builder with AWS Skilled Providers. He allows clients to develop into AWSome throughout their journey to the cloud. When not up within the cloud he enjoys scuba diving deep within the waters.