Amazon Bedrock has emerged as the preferred choice for tens of thousands of customers seeking to build their generative AI strategy. It offers a straightforward, fast, and secure way to develop advanced generative AI applications and experiences to drive innovation.

With the comprehensive capabilities of Amazon Bedrock, you have access to a diverse range of high-performing foundation models (FMs), empowering you to select the best option for your specific needs, customize the model privately with your own data using techniques such as fine-tuning and Retrieval Augmented Generation (RAG), and create managed agents that run complex business tasks.

Fine-tuning pre-trained language models allows organizations to customize and optimize the models for their specific use cases, providing better performance and more accurate outputs tailored to their unique data and requirements. By using fine-tuning capabilities, businesses can unlock the full potential of generative AI while maintaining control over the model’s behavior and aligning it with their goals and values.

In this post, we delve into the essential security best practices that organizations should consider when fine-tuning generative AI models.

Security in Amazon Bedrock

Cloud security at AWS is the highest priority. Amazon Bedrock prioritizes security through a comprehensive approach to protect customer data and AI workloads.

Amazon Bedrock is built with security at its core, offering several features to protect your data and models. The main aspects of its security framework include:

- Access control – This includes features such as:

- Data encryption – Amazon Bedrock offers the following encryption:

- Network security – Amazon Bedrock offers several security options, including:

  - Support for AWS PrivateLink to establish private connectivity between your virtual private cloud (VPC) and Amazon Bedrock

  - VPC endpoints for secure communication within your AWS environment

- Compliance – Amazon Bedrock is in alignment with various industry standards and regulations, including HIPAA, SOC, and PCI DSS

Solution overview

Model customization is the process of providing training data to a model to improve its performance for specific use cases. Amazon Bedrock currently offers the following customization methods:

- Continued pre-training – Enables tailoring an FM’s capabilities to specific domains by fine-tuning its parameters with unlabeled, proprietary data, allowing continuous improvement as more relevant data becomes available.

- Fine-tuning – Involves providing labeled data to train a model on specific tasks, enabling it to learn the appropriate outputs for given inputs. This process adjusts the model’s parameters, enhancing its performance on the tasks represented by the labeled training dataset.

- Distillation – The process of transferring knowledge from a larger, more intelligent model (known as the teacher) to a smaller, faster, cost-efficient model (known as the student).

Model customization in Amazon Bedrock involves the following actions:

- Create training and validation datasets.

- Set up IAM permissions for data access.

- Configure a KMS key and VPC.

- Create a fine-tuning or pre-training job with hyperparameter tuning.

- Analyze results through metrics and evaluation.

- Purchase provisioned throughput for the custom model.

- Use the custom model for tasks like inference.

On this put up, we clarify these steps in relation to fine-tuning. Nevertheless, you’ll be able to apply the identical ideas for continued pre-training as nicely.

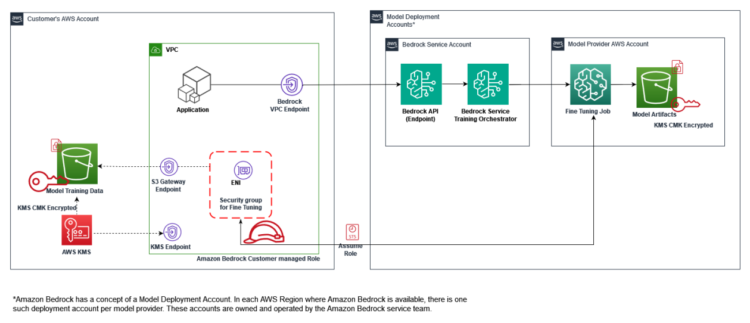

The next structure diagram explains the workflow of Amazon Bedrock mannequin fine-tuning.

The workflow steps are as follows:

- The user submits an Amazon Bedrock fine-tuning job within their AWS account, using IAM for resource access.

- The fine-tuning job initiates a training job in the model deployment accounts.

- To access training data in your Amazon Simple Storage Service (Amazon S3) bucket, the job uses AWS Security Token Service (AWS STS) to assume role permissions for authentication and authorization.

- Network access to S3 data is facilitated through a VPC network interface, using the VPC and subnet details provided during job submission.

- The VPC is equipped with private endpoints for Amazon S3 and AWS KMS access, enhancing overall security.

- The fine-tuning process generates model artifacts, which are stored in the model provider AWS account and encrypted using the customer-provided KMS key.

This workflow provides secure data handling across multiple AWS accounts while maintaining customer control over sensitive information using customer managed encryption keys.

The customer is in control of the data. Model providers don’t have access to a customer’s inference data or customization training datasets, so that data is not available to them for improving their base models. Your data is also unavailable to the Amazon Bedrock service team.

In the following sections, we go through the steps of fine-tuning and deploying the Meta Llama 3.1 8B Instruct model in Amazon Bedrock using the Amazon Bedrock console.

Prerequisites

Before you get started, make sure you have the following prerequisites:

- An AWS account

- An IAM federation role with access to do the following:

  - Create, edit, view, and delete VPC network and security resources

  - Create, edit, view, and delete KMS keys

  - Create, edit, view, and delete IAM roles and policies for model customization

  - Create, upload, view, and delete S3 buckets to access training and validation data, and permission to write output data to Amazon S3

  - List the base FMs that will be used for fine-tuning

  - Create a custom training job for the Amazon Bedrock FM

  - Create provisioned model throughput

  - List custom models and invoke the fine-tuned model

- Model access, which you can request through the Amazon Bedrock console

For this post, we use the us-west-2 AWS Region. For instructions on assigning permissions to the IAM role, refer to Identity-based policy examples for Amazon Bedrock and How Amazon Bedrock works with IAM.

Prepare your data

To fine-tune a text-to-text model like Meta Llama 3.1 8B Instruct, prepare a training dataset and an optional validation dataset by creating a JSONL file with multiple JSON lines.

Each JSON line is a sample containing a prompt field and a completion field. In JSONL format, each record is one text line, so every sample used as input for fine-tuning Meta Llama 3.1 8B Instruct in Amazon Bedrock occupies exactly one line.
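As a minimal sketch of this layout, the following Python serializes a few prompt/completion records as JSONL, one record per line. The sample records and the `train.jsonl` file name are hypothetical placeholders, not entries from the dataset used in this post.

```python
import json

# Hypothetical sample records; replace them with your own labeled data.
samples = [
    {"prompt": "What is Amazon Bedrock?",
     "completion": "A managed service for building generative AI applications."},
    {"prompt": "Summarize: The meeting moved to Friday.",
     "completion": "The meeting is now on Friday."},
]


def to_jsonl(records):
    """Serialize records as JSONL: one JSON object per text line."""
    lines = []
    for record in records:
        # Each sample carries exactly a prompt field and a completion field.
        if set(record) != {"prompt", "completion"}:
            raise ValueError(f"unexpected fields: {sorted(record)}")
        # json.dumps escapes embedded newlines, so a record never spans lines.
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


jsonl_text = to_jsonl(samples)

if __name__ == "__main__":
    # Hypothetical output file name.
    with open("train.jsonl", "w", encoding="utf-8") as f:
        f.write(jsonl_text)
```

Validating the field names before writing helps catch malformed samples locally, before the file is uploaded to Amazon S3.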

Create a KMS symmetric key

When uploading your training data to Amazon S3, you can use server-side encryption with AWS KMS. You can create KMS keys on the AWS Management Console, with the AWS Command Line Interface (AWS CLI) and SDKs, or with an AWS CloudFormation template. Complete the following steps to create a KMS key on the console:

- On the AWS KMS console, choose Customer managed keys in the navigation pane.

- Choose Create key.

- Create a symmetric key. For instructions, see Create a KMS key.

Create an S3 bucket and configure encryption

Complete the following steps to create an S3 bucket and configure encryption:

- On the Amazon S3 console, choose Buckets in the navigation pane.

- Choose Create bucket.

- For Bucket name, enter a unique name for your bucket.

- For Encryption type, select Server-side encryption with AWS Key Management Service keys.

- For AWS KMS key, select Choose from your AWS KMS keys and choose the key you created.

- Complete the bucket creation with default settings or customize as needed.

Upload the training data

Complete the following steps to upload the training data:

- On the Amazon S3 console, navigate to your bucket.

- Create the folders fine-tuning-datasets and outputs, and keep the bucket encryption settings as server-side encryption.

- Choose Upload and upload your training data file.

Create a VPC

To create a VPC using Amazon Virtual Private Cloud (Amazon VPC), complete the following steps:

- On the Amazon VPC console, choose Create VPC.

- Create a VPC with private subnets in all Availability Zones.

Create an Amazon S3 VPC gateway endpoint

You can further secure your VPC by setting up an Amazon S3 VPC endpoint and using resource-based IAM policies to restrict access to the S3 bucket containing the model customization data.

Let’s create an Amazon S3 gateway endpoint and attach it to the VPC with a custom IAM resource-based policy to more tightly control access to your Amazon S3 files.

The resource policy on the endpoint should reference the name of the bucket you created earlier.
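As an illustration, a resource policy of this kind could be generated as follows. This is a sketch under assumptions, not the exact policy from the original walkthrough: the statement ID, the action list, and the bucket name `amzn-s3-demo-bucket` are placeholders to adapt to your environment.

```python
import json


def s3_endpoint_policy(bucket_name):
    """Build a gateway endpoint policy limited to a single bucket."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "RestrictAccessToTrainingBucket",  # hypothetical Sid
                "Effect": "Allow",
                "Principal": "*",
                # Assumed action set: read training data, write outputs.
                "Action": ["s3:GetObject", "s3:PutObject", "s3:ListBucket"],
                # The bucket ARN covers ListBucket; the /* ARN covers objects.
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
            }
        ],
    }


# Placeholder bucket name; use the bucket you created earlier.
endpoint_policy_json = json.dumps(s3_endpoint_policy("amzn-s3-demo-bucket"), indent=2)
```

Because the policy lists only one bucket, requests through the endpoint to any other bucket are not allowed by it.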

Create a security group for the AWS KMS VPC interface endpoint

A security group acts as a virtual firewall that controls inbound and outbound traffic. This VPC endpoint security group only allows traffic originating from the security group attached to your VPC private subnets, adding a layer of protection. Complete the following steps to create the security group:

- On the Amazon VPC console, choose Security groups in the navigation pane.

- Choose Create security group.

- For Security group name, enter a name (for example, bedrock-kms-interface-sg).

- For Description, enter a description.

- For VPC, choose your VPC.

- Add an inbound rule to allow HTTPS traffic from the VPC CIDR block.
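If you prefer to script the inbound rule instead of using the console, the rule can be sketched as the `IpPermissions` structure accepted by the Amazon EC2 `authorize_security_group_ingress` API. The CIDR block `10.0.0.0/16` is an assumed example, and `apply_rule` needs boto3 and AWS credentials, so it is defined but not called here.

```python
import ipaddress


def https_ingress_rule(vpc_cidr):
    """Return IpPermissions allowing TCP 443 from an IPv4 VPC CIDR block."""
    # Validate early; raises ValueError for a malformed CIDR block.
    network = ipaddress.IPv4Network(vpc_cidr)
    return [
        {
            "IpProtocol": "tcp",
            "FromPort": 443,
            "ToPort": 443,
            "IpRanges": [
                {"CidrIp": str(network), "Description": "HTTPS from VPC"}
            ],
        }
    ]


def apply_rule(group_id, vpc_cidr, region="us-west-2"):
    """Attach the rule to a security group (requires boto3 and credentials)."""
    import boto3  # imported lazily so the builder works without boto3

    ec2 = boto3.client("ec2", region_name=region)
    return ec2.authorize_security_group_ingress(
        GroupId=group_id, IpPermissions=https_ingress_rule(vpc_cidr)
    )


# Assumed example CIDR; use your VPC's CIDR block.
rule = https_ingress_rule("10.0.0.0/16")
```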

Create a security group for the Amazon Bedrock custom fine-tuning job

Now you can create a security group to establish rules for controlling Amazon Bedrock custom fine-tuning job access to the VPC resources. You use this security group later during model customization job creation. Complete the following steps:

- On the Amazon VPC console, choose Security groups in the navigation pane.

- Choose Create security group.

- For Security group name, enter a name (for example, bedrock-fine-tuning-custom-job-sg).

- For Description, enter a description.

- For VPC, choose your VPC.

- Add an inbound rule to allow traffic from the security group.

Create an AWS KMS VPC interface endpoint

Now you can create an interface VPC endpoint (PrivateLink) to establish a private connection between the VPC and AWS KMS.

For the security group, use the KMS interface endpoint security group you created earlier (for example, bedrock-kms-interface-sg).

Attach a VPC endpoint policy that controls access to resources through the VPC endpoint. In the policy, use the Amazon Resource Name (ARN) of the KMS key you created earlier.

You have now created the endpoints needed for private communication.
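A VPC endpoint policy scoped to a single key could look like the following sketch. The action list is an assumption about what a customization job typically needs, and the key ARN is a placeholder. Compare it with Encryption of model customization jobs and artifacts before using it.

```python
def kms_endpoint_policy(key_arn):
    """Build an interface endpoint policy limited to one KMS key."""
    if not key_arn.startswith("arn:aws:kms:") or ":key/" not in key_arn:
        raise ValueError(f"not a KMS key ARN: {key_arn}")
    return {
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": "*",
                # Assumed action set for encrypting and decrypting job data.
                "Action": [
                    "kms:Decrypt",
                    "kms:DescribeKey",
                    "kms:GenerateDataKey",
                    "kms:CreateGrant",
                ],
                # Only this key is reachable through the endpoint.
                "Resource": key_arn,
            }
        ]
    }


# Placeholder ARN; use the ARN of the key you created earlier.
kms_policy = kms_endpoint_policy(
    "arn:aws:kms:us-west-2:111122223333:key/1234abcd-12ab-34cd-56ef-1234567890ab"
)
```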

Create a service role for model customization

Let’s create a service role for model customization with the following permissions:

- A trust relationship for Amazon Bedrock to assume the role and carry out the model customization job

- Permissions to access your training and validation data in Amazon S3 and to write your output data to Amazon S3

- If you encrypt any of the following resources with a KMS key, permissions to decrypt the key (see Encryption of model customization jobs and artifacts):

  - A model customization job or the resulting custom model

  - The training, validation, or output data for the model customization job

- Permission to access the VPC

Let’s first create the required IAM policies:

- On the IAM console, choose Policies in the navigation pane.

- Choose Create policy.

- Under Specify permissions, provide a JSON policy that grants access to the S3 buckets, VPC, and KMS keys. Supply your account, bucket name, and VPC settings.

Create one IAM permissions policy for the VPC permissions and another for the Amazon S3 permissions.
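The two permissions policies can be templated along the following lines. This is a simplified sketch. The action lists follow the general pattern documented for Amazon Bedrock model customization in a VPC, but the condition keys that the documentation recommends are omitted, KMS permissions are not included, and all IDs are placeholders.

```python
def s3_permissions_policy(bucket_name):
    """Allow reading training data and writing output in one bucket."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:ListBucket"],
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
            }
        ],
    }


def vpc_permissions_policy(region, account_id, subnet_ids, security_group_ids):
    """Allow describing the VPC and managing the job's network interfaces."""
    prefix = f"arn:aws:ec2:{region}:{account_id}"
    eni_resources = (
        [f"{prefix}:network-interface/*"]
        + [f"{prefix}:subnet/{s}" for s in subnet_ids]
        + [f"{prefix}:security-group/{g}" for g in security_group_ids]
    )
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Describe calls do not support resource-level scoping.
                "Effect": "Allow",
                "Action": [
                    "ec2:DescribeNetworkInterfaces",
                    "ec2:DescribeVpcs",
                    "ec2:DescribeDhcpOptions",
                    "ec2:DescribeSubnets",
                    "ec2:DescribeSecurityGroups",
                ],
                "Resource": "*",
            },
            {
                # ENI management limited to the chosen subnets and groups.
                "Effect": "Allow",
                "Action": [
                    "ec2:CreateNetworkInterface",
                    "ec2:CreateNetworkInterfacePermission",
                    "ec2:DeleteNetworkInterface",
                    "ec2:DeleteNetworkInterfacePermission",
                ],
                "Resource": eni_resources,
            },
        ],
    }
```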

Now let’s create the IAM role.

- On the IAM console, choose Roles in the navigation pane.

- Choose Create role.

- Create a role with a custom trust policy that allows Amazon Bedrock to assume it (provide your AWS account ID).

- Attach your custom VPC and S3 bucket access policies.

- Give your role a name and choose Create role.
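For the trust policy step above, the following sketch builds a trust relationship that lets the Amazon Bedrock service principal assume the role only for model customization jobs in your account. The account ID is a placeholder, and the Region defaults to us-west-2 as used in this post.

```python
def bedrock_trust_policy(account_id, region="us-west-2"):
    """Build a trust policy for an Amazon Bedrock model customization role."""
    if not (account_id.isdigit() and len(account_id) == 12):
        raise ValueError("AWS account IDs are 12 digits")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "bedrock.amazonaws.com"},
                "Action": "sts:AssumeRole",
                # The conditions guard against the confused deputy problem
                # by tying the role to your account's customization jobs.
                "Condition": {
                    "StringEquals": {"aws:SourceAccount": account_id},
                    "ArnEquals": {
                        "aws:SourceArn": (
                            f"arn:aws:bedrock:{region}:{account_id}"
                            ":model-customization-job/*"
                        )
                    },
                },
            }
        ],
    }


# Placeholder account ID.
trust_policy = bedrock_trust_policy("111122223333")
```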

Update the KMS key policy with the IAM role

In the KMS key you created in the previous steps, update the key policy to include the ARN of the IAM role so that the role is allowed to use the key.

For more details, refer to Encryption of model customization jobs and artifacts.
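One way to picture the key policy update is as adding a statement for the role to the existing policy document. The sketch below does this on a plain dictionary. The statement ID and action list are assumptions, and the role ARN is a placeholder.

```python
import copy

ROLE_SID = "AllowModelCustomizationRole"  # hypothetical statement ID


def add_role_to_key_policy(key_policy, role_arn):
    """Return a copy of key_policy that lets role_arn use the key."""
    updated = copy.deepcopy(key_policy)  # leave the caller's policy untouched
    statement = {
        "Sid": ROLE_SID,
        "Effect": "Allow",
        "Principal": {"AWS": role_arn},
        # Assumed action set; align it with the Amazon Bedrock documentation.
        "Action": [
            "kms:Decrypt",
            "kms:GenerateDataKey",
            "kms:DescribeKey",
            "kms:CreateGrant",
        ],
        # In a key policy, "*" refers to the key the policy is attached to.
        "Resource": "*",
    }
    # Drop any earlier copy of the statement so repeated calls are idempotent.
    others = [s for s in updated.get("Statement", []) if s.get("Sid") != ROLE_SID]
    updated["Statement"] = others + [statement]
    return updated
```

Returning a new document instead of editing in place makes it easier to review the change before you save it on the key.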

Initiate the fine-tuning job

Complete the following steps to set up your fine-tuning job:

- On the Amazon Bedrock console, choose Custom models in the navigation pane.

- In the Models section, choose Customize model and Create fine-tuning job.

- Under Model details, choose Select model.

- Choose Llama 3.1 8B Instruct as the base model and choose Apply.

- For Fine-tuned model name, enter a name for your custom model.

- Select Model encryption to add a KMS key and choose the KMS key you created earlier.

- For Job name, enter a name for the training job.

- Optionally, expand the Tags section to add tags for tracking.

- Under VPC Settings, choose the VPC, subnets, and security group you created as part of previous steps.

When you specify the VPC subnets and security groups for a job, Amazon Bedrock creates elastic network interfaces (ENIs) that are associated with your security groups in one of the subnets. ENIs allow the Amazon Bedrock job to connect to resources in your VPC.

We recommend that you provide at least one subnet in each Availability Zone.

- Under Input data, specify the S3 locations for your training and validation datasets.

- Under Hyperparameters, set the values for Epochs, Batch size, Learning rate, and Learning rate warm up steps for your fine-tuning job.

Refer to Custom model hyperparameters for additional details.

- Under Output data, for S3 location, enter the S3 path for the bucket storing fine-tuning metrics.

- Under Service access, select a method to authorize Amazon Bedrock. You can select Use an existing service role and use the role you created earlier.

- Choose Create Fine-tuning job.
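The console steps above map onto the Amazon Bedrock `CreateModelCustomizationJob` API. The following sketch assembles an equivalent request for boto3. The base model identifier is passed in because the exact ID for your Region should be looked up, the hyperparameter key names in the usage note are assumptions to verify against Custom model hyperparameters, and the `train.jsonl` object name is hypothetical. `submit_job` requires boto3 and credentials and is not called here.

```python
def build_job_request(job_name, model_name, role_arn, base_model_id,
                      kms_key_arn, bucket, subnet_ids, security_group_ids,
                      hyperparameters):
    """Assemble keyword arguments for create_model_customization_job."""
    return {
        "jobName": job_name,
        "customModelName": model_name,
        "roleArn": role_arn,
        "baseModelIdentifier": base_model_id,
        "customizationType": "FINE_TUNING",
        # Encrypts the resulting custom model with your KMS key.
        "customModelKmsKeyId": kms_key_arn,
        # Folder names match the ones created in the upload step.
        "trainingDataConfig": {
            "s3Uri": f"s3://{bucket}/fine-tuning-datasets/train.jsonl"
        },
        "outputDataConfig": {"s3Uri": f"s3://{bucket}/outputs/"},
        # The API expects hyperparameter values as strings.
        "hyperParameters": {k: str(v) for k, v in hyperparameters.items()},
        "vpcConfig": {
            "subnetIds": list(subnet_ids),
            "securityGroupIds": list(security_group_ids),
        },
    }


def submit_job(request, region="us-west-2"):
    """Submit the job (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder works without boto3

    client = boto3.client("bedrock", region_name=region)
    return client.create_model_customization_job(**request)
```

For example, you might call `build_job_request(...)` with `hyperparameters={"epochCount": 2, "batchSize": 1, "learningRate": 0.0001}`, where the key names are the assumed ones, and pass the result to `submit_job`.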

Monitor the job

On the Amazon Bedrock console, choose Custom models in the navigation pane and locate your job.

You can monitor the job on the job details page.
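Outside the console, job status can also be polled programmatically. The sketch below shows a simple polling loop. The status source is injected as a callable so the loop can be exercised without AWS access, and `bedrock_status_getter` shows how it might be wired to the `GetModelCustomizationJob` API through boto3. The polling interval and limit are arbitrary choices.

```python
import time

# Statuses after which the job will not change any further.
TERMINAL_STATUSES = {"Completed", "Failed", "Stopped"}


def wait_for_job(get_status, poll_seconds=60, max_polls=1000, sleep=time.sleep):
    """Poll get_status() until the job reaches a terminal status."""
    for _ in range(max_polls):
        status = get_status()
        if status in TERMINAL_STATUSES:
            return status
        sleep(poll_seconds)
    raise TimeoutError("job did not finish within the polling limit")


def bedrock_status_getter(job_arn, region="us-west-2"):
    """Return a callable that fetches the job status (requires boto3)."""
    import boto3  # imported lazily so wait_for_job works without boto3

    client = boto3.client("bedrock", region_name=region)
    return lambda: client.get_model_customization_job(
        jobIdentifier=job_arn
    )["status"]
```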

Purchase provisioned throughput

After fine-tuning is complete, you can use the custom model for inference. However, before you can use a customized model, you need to purchase provisioned throughput for it.

Complete the following steps:

- On the Amazon Bedrock console, under Foundation models in the navigation pane, choose Custom models.

- On the Models tab, select your model and choose Purchase provisioned throughput.

- For Provisioned throughput name, enter a name.

- Under Select model, make sure the model is the same as the custom model you selected earlier.

- Under Commitment term & model units, configure your commitment term and model units. Refer to Increase model invocation capacity with Provisioned Throughput in Amazon Bedrock for additional insights. For this post, we choose No commitment and use 1 model unit.

- Under Estimated purchase summary, review the estimated cost and choose Purchase provisioned throughput.

After the provisioned throughput is in service, you can use the model for inference.
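The same purchase can be expressed as a request to the `CreateProvisionedModelThroughput` API. In the sketch below, leaving out `commitmentDuration` corresponds to the No commitment option chosen in this post. The throughput name and model ARN are placeholders, and `purchase` requires boto3 and credentials, so it is not called here.

```python
VALID_COMMITMENTS = (None, "OneMonth", "SixMonths")


def build_throughput_request(name, custom_model_arn, model_units=1,
                             commitment=None):
    """Assemble keyword arguments for create_provisioned_model_throughput."""
    if model_units < 1:
        raise ValueError("model_units must be at least 1")
    if commitment not in VALID_COMMITMENTS:
        raise ValueError(f"unsupported commitment: {commitment}")
    request = {
        "provisionedModelName": name,
        "modelId": custom_model_arn,
        "modelUnits": model_units,
    }
    # Omitting commitmentDuration means no commitment term.
    if commitment is not None:
        request["commitmentDuration"] = commitment
    return request


def purchase(request, region="us-west-2"):
    """Purchase the throughput (requires boto3; incurs charges)."""
    import boto3  # imported lazily so the builder works without boto3

    client = boto3.client("bedrock", region_name=region)
    return client.create_provisioned_model_throughput(**request)
```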

Use the model

Now you’re ready to use your model for inference.

- On the Amazon Bedrock console, under Playgrounds in the navigation pane, choose Chat/text.

- Choose Select model.

- For Category, choose Custom models under Custom & self-hosted models.

- For Model, choose the model you just trained.

- For Throughput, choose the provisioned throughput you just purchased.

- Choose Apply.

Now you can ask sample questions in the playground.
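Beyond the playground, an application can call the fine-tuned model through the Amazon Bedrock Runtime `InvokeModel` API, passing the provisioned throughput ARN as the model ID. The following sketch builds a request body in the format used by Meta Llama models on Amazon Bedrock and reads the generated text from the response. The default parameter values are arbitrary, the prompt is sent without a chat template for brevity, and `invoke` requires boto3 and credentials, so it is not called here.

```python
import json


def build_llama_body(prompt, max_gen_len=512, temperature=0.5, top_p=0.9):
    """Serialize an InvokeModel request body for a Meta Llama model."""
    return json.dumps({
        "prompt": prompt,
        "max_gen_len": max_gen_len,
        "temperature": temperature,
        "top_p": top_p,
    })


def extract_generation(response_body):
    """Pull the generated text out of a Llama response body (str or bytes)."""
    return json.loads(response_body)["generation"]


def invoke(provisioned_model_arn, prompt, region="us-west-2"):
    """Invoke the custom model (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the helpers work without boto3

    runtime = boto3.client("bedrock-runtime", region_name=region)
    response = runtime.invoke_model(
        modelId=provisioned_model_arn,
        body=build_llama_body(prompt),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream; read it before parsing.
    return extract_generation(response["body"].read())
```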

Implementing these procedures allows you to follow security best practices when you deploy and use your fine-tuned model within Amazon Bedrock for inference tasks.

When developing a generative AI application that requires access to this fine-tuned model, you have the option to configure it within a VPC. By using a VPC interface endpoint, you can make sure communication between your VPC and the Amazon Bedrock API endpoint occurs through a PrivateLink connection, rather than through the public internet.

This approach further enhances security and privacy. For more information on this setup, refer to Use interface VPC endpoints (AWS PrivateLink) to create a private connection between your VPC and Amazon Bedrock.

Clean up

Delete the following AWS resources created for this demonstration to avoid incurring future charges:

- Amazon Bedrock model provisioned throughput

- VPC endpoints

- VPC and associated security groups

- KMS key

- IAM roles and policies

- S3 bucket and objects

Conclusion

In this post, we implemented secure fine-tuning jobs in Amazon Bedrock, which is crucial for protecting sensitive data and maintaining the integrity of your AI models.

By following the best practices outlined in this post, including proper IAM role configuration, encryption at rest and in transit, and network isolation, you can significantly enhance the security posture of your fine-tuning processes.

By prioritizing security in your Amazon Bedrock workflows, you not only safeguard your data and models, but also build trust with your stakeholders and end-users, enabling responsible and secure AI development.

As a next step, try the solution out in your account and share your feedback.

About the Authors

Vishal Naik is a Sr. Solutions Architect at Amazon Web Services (AWS). He is a builder who enjoys helping customers accomplish their business needs and solve complex challenges with AWS solutions and best practices. His core area of focus includes Generative AI and Machine Learning. In his spare time, Vishal loves making short films on time travel and alternate universe themes.


Sumeet Tripathi is an Enterprise Support Lead (TAM) at AWS in North Carolina. He has over 17 years of experience in technology across various roles. He is passionate about helping customers reduce operational challenges and friction. His focus area is AI/ML and the Energy & Utilities segment. Outside work, he enjoys traveling with family, watching cricket, and movies.
