This post was co-written with Ben Doughton, Head of Product Operations – LCH, Iulia Midus, Site Reliability Engineer – LCH, and Maurizio Morabito, Software and AI specialist – LCH (part of London Stock Exchange Group, LSEG).

In the financial industry, quick and reliable access to information is essential, but searching for data or dealing with unclear communication can slow things down. An AI-powered assistant can change that. By instantly providing answers and helping staff navigate complex systems, such assistants can make sure that key information is always within reach, improving efficiency and reducing the risk of miscommunication. Amazon Q Business is a generative AI-powered assistant that can answer questions, provide summaries, generate content, and securely complete tasks based on data and information in your enterprise systems. Amazon Q Business enables employees to become more creative, data-driven, efficient, organized, and productive.

In this blog post, we explore a client services agent assistant application developed by the London Stock Exchange Group (LSEG) using Amazon Q Business. We’ll discuss how Amazon Q Business saved time in generating answers, including summarizing documents, retrieving answers to complex Member enquiries, and combining information from different data sources (while providing in-text citations to the data sources used for each answer).

The challenge

The London Clearing House (LCH) Group of companies consists of leading multi-asset class clearing houses that are part of the Markets division of LSEG PLC (LSEG Markets). LCH provides proven risk management capabilities across a range of asset classes, including over-the-counter (OTC) and listed interest rates, fixed income, foreign exchange (FX), credit default swap (CDS), equities, and commodities.

As the LCH business continues to grow, the LCH team has been continuously exploring ways to improve their support to customers (members) and to increase LSEG’s impact on customer success. As part of LSEG’s multi-stage AI strategy, LCH has been exploring the role that generative AI services can play in this space. One of the key capabilities that LCH is interested in is a managed conversational assistant that requires minimal technical knowledge to build and maintain. In addition, LCH has been looking for a solution that is focused on its knowledge base and that can be kept up to date quickly. For this reason, LCH was keen to explore techniques such as Retrieval Augmented Generation (RAG). Following a review of available solutions, the LCH team decided to build a proof of concept around Amazon Q Business.

Business use case

Realizing value from generative AI relies on a solid business use case. LCH has a broad base of customers raising queries to their client services (CS) team across a diverse and complex range of asset classes and products. Example queries include: “What is the eligible collateral at LCH?” and “Can members clear NIBOR IRS at LCH?” Answering them requires CS team members to refer to detailed service and policy documentation to provide accurate advice to their members.

Historically, the CS team has relied on producing product FAQs for LCH members to refer to and, where required, an in-house knowledge center for CS team members to consult when answering complex customer queries. To improve the customer experience and boost employee productivity, the CS team set out to investigate whether generative AI could help answer questions from individual members, thus reducing the number of customer queries. The goal was to increase the speed and accuracy of information retrieval within the CS workflows when responding to the queries that inevitably come through from customers.

Project workflow

The CS use case was developed through close collaboration between LCH and Amazon Web Services (AWS) and involved the following steps:

- Ideation: The LCH team carried out a series of cross-functional workshops to examine different large language model (LLM) approaches, including prompt engineering, RAG, and custom model fine-tuning and pre-training. They considered different technologies such as Amazon SageMaker and Amazon SageMaker JumpStart and evaluated trade-offs between development effort and model customization. Amazon Q Business was selected because of its built-in enterprise search web crawler capability and its ease of deployment without the need to deploy an LLM. Another attractive feature was the ability to clearly provide source attribution and citations. This enhanced the reliability of the responses, allowing users to verify facts and explore topics in greater depth (important aspects for increasing their overall trust in the responses received).

- Knowledge base creation: The CS team built data source connectors for the LCH website, FAQs, customer relationship management (CRM) software, and internal knowledge repositories, and included the Amazon Q Business built-in index and retriever in the build.

- Integration and testing: The application was secured using a third-party identity provider (IdP) to manage users through their enterprise identity, and AWS Identity and Access Management (IAM) was used to authenticate users when they signed in to Amazon Q Business. Testing was carried out to verify the factual accuracy of responses and to evaluate the performance and quality of the AI-generated answers; the results showed that the system had achieved a high level of factual accuracy. Wider improvements in business performance were also demonstrated, including faster response times, with responses delivered within a few seconds. Tests were run with both unstructured and structured data within the documents.

- Phased rollout: The CS AI assistant was rolled out in phases to provide thorough, high-quality answers. In the future, there are plans to integrate the Amazon Q Business application with existing email and CRM interfaces, and to expand its use to additional use cases and capabilities within LSEG.
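When one of these connectors is an Amazon S3 bucket of documents, keeping the knowledge base current can come down to uploading revised files and asking Amazon Q Business to re-sync that data source. The following Python sketch shows this pattern using boto3’s S3 `upload_file` and the `qbusiness` client’s `start_data_source_sync_job` call. It is a minimal illustration under stated assumptions, not LCH’s implementation: the `faqs/` prefix, the helper names, and the bucket and ID values are hypothetical, and the clients are passed in so the logic can be exercised without AWS access.

```python
from pathlib import Path
from typing import Any, Iterable, List, Tuple


def upload_documents(s3_client: Any, bucket: str, paths: Iterable[str],
                     prefix: str = "faqs/") -> List[str]:
    """Upload local files to S3 under a prefix and return the object keys written."""
    keys: List[str] = []
    for path in paths:
        # Hypothetical layout: one flat prefix, keyed by file name.
        key = f"{prefix}{Path(path).name}"
        s3_client.upload_file(path, bucket, key)
        keys.append(key)
    return keys


def start_sync(qbusiness_client: Any, application_id: str, index_id: str,
               data_source_id: str) -> str:
    """Ask Amazon Q Business to re-crawl the data source; returns the sync execution ID."""
    response = qbusiness_client.start_data_source_sync_job(
        applicationId=application_id,
        indexId=index_id,
        dataSourceId=data_source_id,
    )
    return response["executionId"]


def refresh_knowledge_base(s3_client: Any, qbusiness_client: Any, bucket: str,
                           paths: Iterable[str], application_id: str, index_id: str,
                           data_source_id: str) -> Tuple[List[str], str]:
    """Upload revised documents, then trigger one sync so the index picks them up."""
    keys = upload_documents(s3_client, bucket, paths)
    execution_id = start_sync(qbusiness_client, application_id, index_id, data_source_id)
    return keys, execution_id
```

In a deployment, the clients would be `boto3.client("s3")` and `boto3.client("qbusiness")`. Because a sync of a large document set can take a while, logging the returned execution ID makes it easier to check on progress later.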

Solution overview

In this solution overview, we’ll explore the LCH-built Amazon Q Business application.

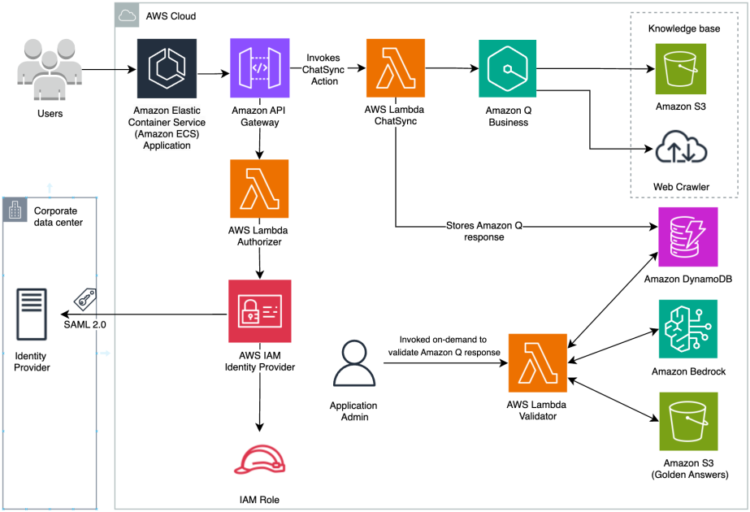

The LCH admin team developed a web-based interface that serves as a gateway for their internal client services team to interact with the Amazon Q Business API and other AWS services (Amazon Elastic Container Service (Amazon ECS), Amazon API Gateway, AWS Lambda, Amazon DynamoDB, Amazon Simple Storage Service (Amazon S3), and Amazon Bedrock). The interface is secured using SAML 2.0 IAM federation, which maintains secure access to the chat interface, and it is used to retrieve answers from a pre-indexed knowledge base and to validate the responses using Anthropic’s Claude v2 LLM.
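With SAML 2.0 IAM federation, the browser typically passes the SAML assertion issued by the enterprise IdP to the backend, which exchanges it for short-lived AWS credentials through the AWS STS `AssumeRoleWithSAML` operation. A minimal Python sketch of an authorizer-style Lambda function doing that exchange follows. The environment variable names, the `samlResponse` request field, and the 15-minute session length are assumptions for illustration; the post does not describe LCH’s actual authorizer code.

```python
import json
import os
from datetime import datetime
from typing import Any, Dict, Optional

# Hypothetical configuration; the post does not name these settings.
ROLE_ARN_ENV = "QBUSINESS_ROLE_ARN"
PROVIDER_ARN_ENV = "SAML_PROVIDER_ARN"
SESSION_SECONDS = 900  # 15 minutes, the shortest session STS allows


def exchange_saml_assertion(sts_client: Any, role_arn: str, provider_arn: str,
                            saml_assertion: str) -> Dict[str, str]:
    """Trade a base64-encoded SAML response for temporary AWS credentials."""
    response = sts_client.assume_role_with_saml(
        RoleArn=role_arn,
        PrincipalArn=provider_arn,
        SAMLAssertion=saml_assertion,
        DurationSeconds=SESSION_SECONDS,
    )
    creds = response["Credentials"]
    expiration = creds["Expiration"]
    return {
        "accessKeyId": creds["AccessKeyId"],
        "secretAccessKey": creds["SecretAccessKey"],
        "sessionToken": creds["SessionToken"],
        # boto3 returns a datetime here; convert it so the body is valid JSON.
        "expiration": expiration.isoformat() if isinstance(expiration, datetime) else str(expiration),
    }


def authorizer_handler(event: Dict[str, Any], context: Any,
                       sts_client: Optional[Any] = None) -> Dict[str, Any]:
    """API Gateway proxy-style handler; sts_client is injectable for testing."""
    if sts_client is None:
        import boto3  # imported lazily so the module loads without boto3
        sts_client = boto3.client("sts")
    body = json.loads(event.get("body") or "{}")
    assertion = body.get("samlResponse")
    if not assertion:
        return {"statusCode": 400, "body": json.dumps({"error": "missing samlResponse"})}
    creds = exchange_saml_assertion(
        sts_client, os.environ[ROLE_ARN_ENV], os.environ[PROVIDER_ARN_ENV], assertion
    )
    return {"statusCode": 200, "body": json.dumps(creds)}
```

Returning credentials to a browser means a leak exposes whatever the role allows, so the assumed role in a setup like this would normally be scoped to only the Amazon Q Business actions the chat interface needs, and the short session length limits how long leaked credentials stay valid.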

The following figure illustrates the architecture for the LCH client services application.

The workflow consists of the following steps:

- The LCH team set up the Amazon Q Business application using a SAML 2.0 IAM IdP. (The example in the blog post shows connecting with Okta as the IdP for Amazon Q Business. However, the LCH team built the application using a third-party solution as the IdP instead of Okta.) This architecture allows LCH users to sign in using their existing identity credentials from their enterprise IdP, while LCH maintains control over which users have access to the Amazon Q Business application.

- The application had two data sources as part of its Amazon Q Business configuration:

- An S3 bucket to store and index their internal LCH documents. This allows the Amazon Q Business application to access and search through their internal product FAQ PDF documents when responding to user queries. Storing the documents in Amazon S3 and indexing them makes them readily available for the application to retrieve relevant information.

- In addition to internal documents, the team also set up their public-facing LCH website as a data source using a web crawler that can index and extract information from their rulebooks.

- The LCH team opted for a custom user interface (UI) instead of the built-in web experience provided by Amazon Q Business, to have more control over the frontend by accessing the Amazon Q Business API directly. The application’s frontend was developed using an open source application framework and hosted on Amazon ECS. The frontend application calls an Amazon API Gateway REST API endpoint to interact with the business logic written in AWS Lambda.

- The chat path of the architecture uses two Lambda functions:

- An authorizer Lambda function is responsible for authorizing the frontend application to access the Amazon Q Business API by generating temporary AWS credentials.

- A ChatSync Lambda function is responsible for calling the Amazon Q Business ChatSync API to start an Amazon Q Business conversation.

- The architecture also includes a Validator Lambda function, which the admin uses to validate the accuracy of the responses generated by the Amazon Q Business application.

- The LCH team has stored a golden answer knowledge base in an S3 bucket, consisting of approximately 100 questions and answers about their product FAQs and rulebooks collected from their live agents. This knowledge base serves as a benchmark for the accuracy and reliability of the AI-generated responses.

- By comparing the Amazon Q Business chat responses against their golden answers, LCH can verify that the AI-powered assistant is providing accurate and consistent information to their customers.

- The Validator Lambda function retrieves data from a DynamoDB table and sends it to Amazon Bedrock. Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs); you can use it to quickly experiment with and evaluate top FMs for a given use case, privately customize the FMs with your existing data using techniques such as fine-tuning and RAG, and build agents that execute tasks using your enterprise systems and data sources.

- Amazon Bedrock uses Anthropic’s Claude v2 model to validate the Amazon Q Business application’s queries and responses against the golden answers stored in the S3 bucket.

- Anthropic’s Claude v2 model returns a score for each question and answer, in addition to a total score, which is then provided to the application admin for review.

- The Amazon Q Business application returned answers within a few seconds for each question. The overall expectation is that Amazon Q Business saves each live agent time on every question by providing quick and correct responses.
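The validation loop described in these steps can be sketched in Python as follows. This is one possible shape under stated assumptions, not LCH’s Validator Lambda function: each DynamoDB item is assumed to hold `question`, `q_answer` (the Amazon Q Business response), and `golden_answer` attributes, the table name comes from a hypothetical `GOLDEN_TABLE_NAME` environment variable, the grading prompt and its `SCORE:` convention are invented for illustration, and the total and mean are simple aggregates of the per-question scores. The Amazon Bedrock call uses the Claude v2 text-completion request format (`prompt`, `max_tokens_to_sample`, `temperature`) with model ID `anthropic.claude-v2`.

```python
import json
import os
import re
from typing import Any, Dict, Iterable, List, Optional

MODEL_ID = "anthropic.claude-v2"
# Hypothetical convention: the grader ends its reply with "SCORE: <0-10>".
SCORE_PATTERN = re.compile(r"SCORE:\s*(\d+(?:\.\d+)?)")


def load_items(table: Any) -> List[Dict[str, Any]]:
    """Read every item from a DynamoDB Table resource, following scan pagination."""
    items: List[Dict[str, Any]] = []
    scan_kwargs: Dict[str, Any] = {}
    while True:
        page = table.scan(**scan_kwargs)
        items.extend(page.get("Items", []))
        if "LastEvaluatedKey" not in page:
            return items
        scan_kwargs["ExclusiveStartKey"] = page["LastEvaluatedKey"]


def build_prompt(question: str, candidate: str, golden: str) -> str:
    """Claude v2 text-completion prompt comparing a candidate with the golden answer."""
    return (
        "\n\nHuman: Compare the candidate answer with the reference answer for the "
        "question below. Rate their factual agreement from 0 to 10, then end your "
        "reply with a line of the form 'SCORE: <number>'.\n"
        f"<question>{question}</question>\n"
        f"<reference>{golden}</reference>\n"
        f"<candidate>{candidate}</candidate>"
        "\n\nAssistant:"
    )


def score_item(bedrock_client: Any, item: Dict[str, Any]) -> float:
    """Grade one Amazon Q Business answer; replies without a parseable score get 0."""
    body = json.dumps({
        "prompt": build_prompt(item["question"], item["q_answer"], item["golden_answer"]),
        "max_tokens_to_sample": 300,
        "temperature": 0,
    })
    response = bedrock_client.invoke_model(
        modelId=MODEL_ID, body=body, contentType="application/json", accept="application/json"
    )
    completion = json.loads(response["body"].read())["completion"]
    matches = SCORE_PATTERN.findall(completion)
    # Use the last match, since the prompt asks for the score at the end.
    return min(float(matches[-1]), 10.0) if matches else 0.0


def validate(bedrock_client: Any, items: Iterable[Dict[str, Any]]) -> Dict[str, Any]:
    """Per-question scores plus a total and mean for the admin to review."""
    scores = [{"question": it["question"], "score": score_item(bedrock_client, it)} for it in items]
    total = sum(s["score"] for s in scores)
    return {"scores": scores, "total": total, "mean": total / len(scores) if scores else 0.0}


def validator_handler(event: Dict[str, Any], context: Any, table: Optional[Any] = None,
                      bedrock_client: Optional[Any] = None) -> Dict[str, Any]:
    """Lambda entry point; table and client are injectable for testing."""
    if table is None or bedrock_client is None:
        import boto3  # imported lazily so the module loads without boto3
        if table is None:
            table = boto3.resource("dynamodb").Table(os.environ["GOLDEN_TABLE_NAME"])
        if bedrock_client is None:
            bedrock_client = boto3.client("bedrock-runtime")
    return validate(bedrock_client, load_items(table))
```

Setting `temperature` to 0 keeps grading as repeatable as the model allows, and scoring an unparseable reply as zero makes it stand out in the admin’s review instead of silently passing.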

This validation process helped LCH build trust and confidence in the capabilities of Amazon Q Business, enhancing the overall customer experience.
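For the ChatSync Lambda function in the workflow, a minimal Python sketch might look like the following. It forwards a question from the frontend to the Amazon Q Business ChatSync API through boto3’s `chat_sync`, carries `conversationId` and `parentMessageId` forward so follow-up questions stay in the same conversation, and returns the answer together with its source attributions so the UI can show citations. The frontend request and response field names, the `QBUSINESS_APPLICATION_ID` environment variable, and the function names are hypothetical, and this is not LCH’s actual code.

```python
import json
import os
from typing import Any, Dict, List, Optional

APP_ID_ENV = "QBUSINESS_APPLICATION_ID"  # hypothetical variable name


def build_chat_request(application_id: str, question: str,
                       conversation_id: Optional[str] = None,
                       parent_message_id: Optional[str] = None) -> Dict[str, Any]:
    """Assemble keyword arguments for the qbusiness chat_sync call."""
    request: Dict[str, Any] = {"applicationId": application_id, "userMessage": question}
    # A follow-up turn continues an existing conversation from the last system message.
    if conversation_id and parent_message_id:
        request["conversationId"] = conversation_id
        request["parentMessageId"] = parent_message_id
    return request


def summarize_response(response: Dict[str, Any]) -> Dict[str, Any]:
    """Keep the answer, conversation state, and citations the UI needs."""
    citations: List[Dict[str, Any]] = [
        {"number": a.get("citationNumber"), "title": a.get("title"), "url": a.get("url")}
        for a in response.get("sourceAttributions", [])
    ]
    return {
        "answer": response.get("systemMessage", ""),
        "conversationId": response.get("conversationId"),
        # The next turn's parentMessageId is this turn's system message ID.
        "parentMessageId": response.get("systemMessageId"),
        "citations": citations,
    }


def chat_handler(event: Dict[str, Any], context: Any,
                 qbusiness_client: Optional[Any] = None) -> Dict[str, Any]:
    """API Gateway proxy-style handler; the client is injectable for testing."""
    if qbusiness_client is None:
        import boto3  # imported lazily so the module loads without boto3
        qbusiness_client = boto3.client("qbusiness")
    body = json.loads(event.get("body") or "{}")
    question = (body.get("question") or "").strip()
    if not question:
        return {"statusCode": 400, "body": json.dumps({"error": "missing question"})}
    request = build_chat_request(os.environ[APP_ID_ENV], question,
                                 body.get("conversationId"), body.get("parentMessageId"))
    response = qbusiness_client.chat_sync(**request)
    return {"statusCode": 200, "body": json.dumps(summarize_response(response))}
```

Because the application uses IAM federation, the `qbusiness` client would normally be built from the signed-in user’s temporary credentials (for example, those issued by the authorizer function) rather than from the Lambda function’s own execution role, so that Amazon Q Business can apply that user’s access permissions.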

Conclusion

This post provides an overview of LSEG’s experience in adopting Amazon Q Business to support LCH client services agents with B2B query handling. This specific use case was built by working backward from a business goal to improve customer experience and staff productivity in a complex, highly technical area of the trading life cycle (post-trade). The variety and large size of enterprise data sources, and the regulated environment that LSEG operates in, make this post particularly relevant to customer service operations dealing with complex query handling. Managed, straightforward-to-use RAG is a key capability within a wider vision of providing technical and business users with an environment, tools, and services to use generative AI across providers and LLMs. You can get started with this tool by creating a sample Amazon Q Business application.

About the Authors

Ben Doughton is a Senior Product Manager at LSEG with over 20 years of experience in Financial Services. He leads product operations, focusing on product discovery initiatives, data-informed decision-making, and innovation. He is passionate about machine learning and generative AI as well as agile, lean, and continuous delivery practices.

Maurizio Morabito is a Software and AI specialist at LCH. He was one of the early adopters of Neural Networks in 1990–1992, before a long hiatus working for technology and finance companies in Asia and Europe, and finally returned to Machine Learning in 2021. Maurizio is now leading the way in implementing AI in LSEG Markets, following the motto “Tackling the Long and the Boring”.

Iulia Midus is a recent IT Management graduate currently working in Post-trade. Iulia’s work so far has focused on data analysis and AI, and how to implement these across the business.

Magnus Schoeman is a Principal Customer Solutions Manager at AWS. He has 25 years of experience across the private and public sectors, where he has held leadership roles in transformation programs, business development, and strategic alliances. Over the last 10 years, Magnus has led technology-driven transformations in regulated financial services operations (across Payments, Wealth Management, Capital Markets, and Life & Pensions).

Sudha Arumugam is an Enterprise Solutions Architect at AWS, advising large Financial Services organizations. She has over 13 years of experience in creating reliable software solutions to complex problems and has extensive experience in serverless event-driven architecture and technologies. She is passionate about machine learning and AI, and enjoys developing mobile and web applications.

Elias Bedmar is a Senior Customer Solutions Manager at AWS. He is a technical and business program manager helping customers be successful on AWS. He supports large migration and modernization programs, cloud maturity initiatives, and adoption of new services. Elias has experience in migration delivery, DevOps engineering, and cloud infrastructure.

Marcin Czelej is a Machine Learning Engineer at AWS Generative AI Innovation and Delivery. He combines over 7 years of experience in C/C++ and assembler programming with extensive knowledge of machine learning and data science. This unique skill set allows him to deliver optimized and customized solutions across various industries. Marcin has successfully implemented AI advancements in sectors such as e-commerce, telecommunications, automotive, and the public sector, consistently creating value for customers.

Zmnako Awrahman, Ph.D., is a generative AI Practice Manager at AWS Generative AI Innovation and Delivery with extensive experience in helping enterprise customers build data, ML, and generative AI strategies. With a strong background in technology-driven transformations, particularly in regulated industries, Zmnako has a deep understanding of the challenges and opportunities that come with implementing cutting-edge solutions in complex environments.
