According to the National Cancer Institute, a cancer biomarker is a “biological molecule found in blood, other body fluids, or tissues that is a sign of a normal or abnormal process, or of a condition or disease such as cancer.” Biomarkers typically differentiate an affected patient from a person without the disease. Well-known cancer biomarkers include EGFR for lung cancer, HER2 for breast cancer, and PSA for prostate cancer. The BEST (Biomarkers, EndpointS, and other Tools) resource categorizes biomarkers into several types, such as diagnostic, prognostic, and predictive biomarkers, which can be measured with various techniques, including molecular, imaging, and physiological measurements.

A study published in Nature Reviews Drug Discovery reports that the overall success rate for oncology drugs from Phase I to approval is only around 5%. Biomarkers play a crucial role in the success of clinical development: they improve patient stratification for trials, expedite drug development, reduce costs and risks, and enable personalized medicine. For example, a study of 1,079 oncology drugs found that the success rate for drugs developed with a biomarker was 24%, compared with 6% for compounds developed without biomarkers.

Research scientists and real-world evidence (RWE) experts face numerous challenges when they analyze biomarkers and validate hypotheses for biomarker discovery with their current set of tools. Most notably, these include manual and time-consuming steps for search, summarization, and insight generation across diverse biomedical literature (for example, PubMed), public scientific databases (for example, Protein Data Bank), commercial data banks, and internal proprietary enterprise data. They want to quickly use, modify, or develop the tools necessary for biomarker identification and correlation across modalities, indications, drug exposures and treatments, and associated endpoint outcomes such as survival. Each experiment might employ a different combination of data, tools, and visualizations. Evidence in the scientific literature should be simple to identify and cite with relevant context.

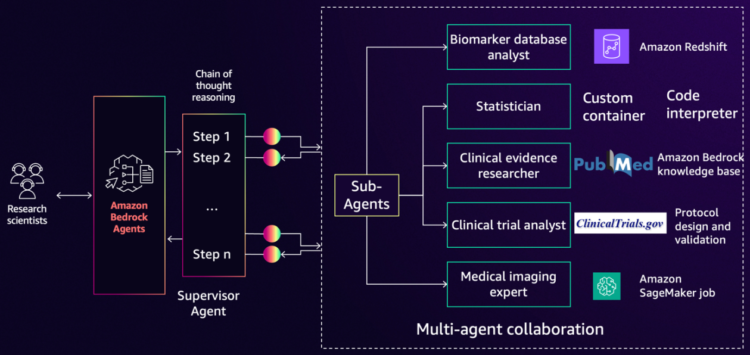

Amazon Bedrock Agents enables generative AI applications to automate multistep tasks by seamlessly connecting with company systems, APIs, and data sources. Amazon Bedrock multi-agent collaboration enables developers to build, deploy, and manage multiple specialized agents that work together to address increasingly complex business workflows. In this post, we show you how agentic workflows with Amazon Bedrock Agents can help accelerate this journey for research scientists through a natural language interface. We define an example analysis pipeline, specifically for lung cancer survival, with clinical, genomic, and imaging modalities of biomarkers. We showcase a variety of specialized agents, including a biomarker database analyst, a statistician, a clinical evidence researcher, and a medical imaging expert, working in collaboration with a supervisor agent. We demonstrate advanced agent capabilities for self-review and planning that help build trust with end users by breaking down complex tasks into a series of steps and showing the chain of thought used to generate the final answer. The code for this solution is available on GitHub.

Multi-modal biomarker analysis workflow

Some example scientific requirements from research scientists analyzing multi-modal patient biomarkers include:

- What are the top 5 biomarkers associated with overall survival? Show me a Kaplan-Meier plot for high- and low-risk patients.

- According to literature evidence, what properties of the tumor are associated with metagene X activity and the EGFR pathway?

- Can you compute the imaging biomarkers for the patient cohort with low gene X expression? Show me the tumor segmentation and the sphericity and elongation values.

To answer the preceding questions, research scientists typically run a survival analysis pipeline (as shown in the following illustration) with multimodal data, including clinical, genomic, and computed tomography (CT) imaging data.

They might need to:

- Programmatically preprocess a diverse set of structured and unstructured input data, and extract biomarkers (radiomic, genomic, clinical, and others).

- Conduct statistical survival analyses, such as fitting a Cox proportional hazards model, and generate visuals such as Kaplan-Meier curves for interpretation.

- Conduct gene set enrichment analysis (GSEA) to identify significant genes.

- Research relevant literature to validate initial findings.

- Associate findings with radiogenomic biomarkers.
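The Kaplan-Meier curves mentioned above estimate the probability of surviving past time t as a running product of (1 - deaths / patients at risk) over each observed death time; censored patients leave the risk set without lowering the curve. The solution performs this with the lifelines library in a custom container. The following dependency-free sketch, with a function name (`kaplan_meier`) of our own choosing and toy inputs, only illustrates the calculation.

```python
from typing import List, Tuple


def kaplan_meier(durations: List[float], events: List[int]) -> List[Tuple[float, float]]:
    """Return the (time, survival probability) steps of the Kaplan-Meier estimator.

    durations: follow-up time for each patient
    events: 1 if death was observed at that time, 0 if the patient was censored
    """
    if len(durations) != len(events):
        raise ValueError("durations and events must have the same length")
    data = sorted(zip(durations, events))  # walk forward in time
    n_at_risk = len(data)
    survival = 1.0
    steps: List[Tuple[float, float]] = [(0.0, 1.0)]
    i = 0
    while i < len(data):
        t = data[i][0]
        deaths = removed = 0
        # Gather every patient whose follow-up ends at time t (handles ties)
        while i < len(data) and data[i][0] == t:
            deaths += data[i][1]
            removed += 1
            i += 1
        if deaths:
            # Product-limit update: S(t) = S(t-) * (1 - d_t / n_t)
            survival *= 1.0 - deaths / n_at_risk
            steps.append((t, survival))
        # Deaths and censored patients both leave the risk set after time t
        n_at_risk -= removed
    return steps


if __name__ == "__main__":
    # Toy data: six patients, two of them censored
    print(kaplan_meier([5, 6, 6, 2, 4, 4], [1, 0, 1, 1, 1, 0]))
```

Plotting these steps separately for a high-risk and a low-risk group gives the kind of comparison requested in the first example question.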

Solution overview

We propose a framework based on large language model (LLM) agents to augment and accelerate the preceding analysis pipeline. Design patterns for LLM agents, as described in Agentic Design Patterns Part 1 by Andrew Ng, include the capabilities for reflection, tool use, planning, and multi-agent collaboration. An agent helps users complete actions based on both proprietary and public data and user input. Agents orchestrate interactions between foundation models (FMs), data sources, software applications, and user conversations. In addition, agents automatically call APIs to take actions and search knowledge bases to supplement information for these actions.

As shown in the preceding figure, our solution includes planning and reasoning with multiple sub-agents:

- Biomarker database analyst: Converts natural language questions to SQL statements and executes them on an Amazon Redshift database of biomarkers.

- Statistician: Uses a custom container with the lifelines library to build survival regression models and visualizations, such as Kaplan-Meier charts, for survival analysis.

- Clinical evidence researcher: Uses PubMed APIs to search biomedical literature for external evidence, and uses Amazon Bedrock Knowledge Bases for Retrieval Augmented Generation (RAG) to deliver responses from internal literature evidence.

- Clinical trial analyst: Uses ClinicalTrials.gov APIs to search past clinical trial studies.

- Medical imaging expert: Uses Amazon SageMaker jobs to give agents the capability to trigger asynchronous jobs on an ephemeral cluster to process CT scan images.
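In the solution, the supervisor's planning and delegation are performed by the foundation model through Amazon Bedrock multi-agent collaboration, not by hand-written code. To make the delegation pattern concrete, here is a toy sketch in plain Python. The `Step` and `run_plan` names and the lambda sub-agents are hypothetical stand-ins, and each step simply receives the previous step's output as context.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# A sub-agent is modeled as a function of (task, context) -> output
SubAgent = Callable[[str, str], str]


@dataclass
class Step:
    agent: str  # name of the sub-agent to delegate to
    task: str   # natural language sub-task from the plan


def run_plan(plan: List[Step], agents: Dict[str, SubAgent]) -> List[str]:
    """Run plan steps in order, passing each step the previous step's output."""
    outputs: List[str] = []
    context = ""
    for step in plan:
        if step.agent not in agents:
            raise KeyError(f"no sub-agent registered as {step.agent!r}")
        context = agents[step.agent](step.task, context)
        outputs.append(context)
    return outputs


if __name__ == "__main__":
    # Hypothetical stand-ins; real sub-agents would call tools and FMs
    agents: Dict[str, SubAgent] = {
        "biomarker_database_analyst": lambda task, ctx: "s3://example-bucket/cohort.csv",
        "statistician": lambda task, ctx: f"survival model fitted on {ctx}",
    }
    plan = [
        Step("biomarker_database_analyst", "Query patients who underwent chemotherapy"),
        Step("statistician", "Fit a survival model and rank biomarkers by p-value"),
    ]
    print(run_plan(plan, agents)[-1])  # survival model fitted on s3://example-bucket/cohort.csv
```

A real supervisor also chooses the plan, can revise it after reflecting on intermediate results, and combines the outputs into a final answer; this sketch covers only the sequential hand-off.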

Dataset description

The non-small cell lung cancer (NSCLC) radiogenomic dataset comprises medical imaging, clinical, and genomic data collected from a cohort of early-stage NSCLC patients referred for surgical treatment. Each data modality presents a different view of a patient. The clinical data reflects electronic health records (EHR), such as age, gender, weight, ethnicity, smoking status, tumor node metastasis (TNM) stage, histopathological grade, and survival outcome. The genomic data contains gene mutation and RNA sequencing data from samples of surgically excised tumor tissue. The imaging data includes CT and positron emission tomography (PET)/CT images, semantic annotations of the tumors as observed on the medical images using a controlled vocabulary, segmentation maps of tumors in the CT scans, and quantitative values obtained from the PET/CT scans.

We reuse the data pipelines described in this blog post.

Clinical data

The data is stored in CSV format, as shown in the following table. Each row corresponds to the clinical records of a patient.

| Case ID | Survival status | Age at histological diagnosis | Weight (lbs) | Smoking status | Pack years | Quit smoking year | Chemotherapy | Adjuvant treatment | EGFR mutation status |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| R01-005 | Dead | 84 | 145 | Former | 20 | 1951 | No | No | Wildtype |
| R01-006 | Alive | 62 | Not collected | Former | Not collected | nan | No | No | Wildtype |
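Before survival analysis, rows like these need consistent types: the sample uses both `Not collected` and `nan` for missing values, and survival status is stored as text. The following sketch assumes the CSV header matches the table columns above and that the treatment columns use `Yes`/`No` values; it converts survival status to an event indicator (1 = death observed) and missing markers to `None`. The function names are illustrative, not taken from the repository. Note that a survival model also needs a follow-up time column, which this excerpt doesn't show.

```python
import csv
import io
from typing import Dict, List, Optional

# Markers that denote missing values in the sample rows
MISSING = {"Not collected", "nan", ""}


def to_float(value: str) -> Optional[float]:
    """Parse a numeric cell, returning None for missing-value markers."""
    value = value.strip()
    return None if value in MISSING else float(value)


def load_clinical(csv_text: str) -> List[Dict[str, object]]:
    """Normalize clinical rows; assumes the CSV header matches the table columns."""
    rows: List[Dict[str, object]] = []
    for record in csv.DictReader(io.StringIO(csv_text)):
        rows.append({
            "case_id": record["Case ID"],
            # Event indicator for survival analysis: 1 = death observed
            "event": 1 if record["Survival status"] == "Dead" else 0,
            "age": to_float(record["Age at histological diagnosis"]),
            "weight_lbs": to_float(record["Weight (lbs)"]),
            "pack_years": to_float(record["Pack years"]),
            # Assumes "Yes"/"No" values, as in the sample
            "chemotherapy": record["Chemotherapy"] == "Yes",
        })
    return rows


SAMPLE = """Case ID,Survival status,Age at histological diagnosis,Weight (lbs),Smoking status,Pack years,Quit smoking year,Chemotherapy,Adjuvant treatment,EGFR mutation status
R01-005,Dead,84,145,Former,20,1951,No,No,Wildtype
R01-006,Alive,62,Not collected,Former,Not collected,nan,No,No,Wildtype
"""

if __name__ == "__main__":
    for row in load_clinical(SAMPLE):
        print(row)
```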

Genomics data

The following table shows the tabular representation of the gene expression data. Each row corresponds to a patient, and the columns represent a subset of genes selected for demonstration. Each value denotes the expression level of a gene for a patient; a higher value means the corresponding gene is more highly expressed in that specific tumor sample.

| Case_ID | LRIG1 | HPGD | GDF15 | CDH2 | POSTN |
| --- | --- | --- | --- | --- | --- |
| R01-024 | 26.7037 | 3.12635 | 13.0269 | 0 | 36.4332 |
| R01-153 | 15.2133 | 5.0693 | 0.90866 | 0 | 32.8595 |
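Questions such as "the patient cohort with low gene X expression" or a Kaplan-Meier plot for "high- and low-risk patients" require grouping patients by expression level. A median split is one common convention, and we assume it here; the solution may use a different cutoff. This sketch (the `split_by_median` name is ours) groups the two sample rows from the preceding table by GDF15 expression.

```python
from statistics import median
from typing import Dict, List, Tuple


def split_by_median(expression: Dict[str, float]) -> Tuple[List[str], List[str]]:
    """Split case IDs into high and low expression groups at the cohort median.

    Values equal to the median go to the high group (an arbitrary choice).
    """
    cutoff = median(expression.values())
    high = sorted(case_id for case_id, v in expression.items() if v >= cutoff)
    low = sorted(case_id for case_id, v in expression.items() if v < cutoff)
    return high, low


# GDF15 values for the two sample rows in the genomics table
gdf15 = {"R01-024": 13.0269, "R01-153": 0.90866}

if __name__ == "__main__":
    print(split_by_median(gdf15))  # (['R01-024'], ['R01-153'])
```

With a real cohort, the low group would be the patient list handed to downstream steps such as imaging analysis.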

Medical imaging data

The following image is an example overlay of a tumor segmentation onto a lung CT scan (case R01-093 in the dataset).

Deployment and getting started

Follow the deployment instructions described in the GitHub repo.

Full deployment takes approximately 10–15 minutes. After deployment, you can access the sample UI to test the agent with the sample questions available in the UI or with the chain of thought reasoning example.

The stack can also be launched in the us-east-1 or us-west-2 AWS Regions by choosing Launch Stack in the following table:

| Region | codepipeline.yaml |
| --- | --- |
| us-east-1 | |
| us-west-2 | |

Amazon Bedrock Agents deep dive

The following diagram describes the key components of the agent that interacts with users through a web application.

Large language models

LLMs, such as Anthropic’s Claude or Amazon Titan models, can understand and generate human-like text. They enable agents to comprehend user queries, generate appropriate responses, and perform complex reasoning tasks. In the deployment, we use Anthropic’s Claude 3 Sonnet model.

Prompt templates

Prompt templates are pre-designed structures that guide the LLM’s responses and behaviors. These templates help shape the agent’s persona, tone, and specific capabilities, such as understanding scientific terminology. By carefully crafting prompt templates, you can help make sure that agents maintain consistency in their interactions and adhere to specific guidelines or brand voice. Amazon Bedrock Agents provides default prompt templates for pre-processing user queries, orchestration, knowledge base response generation, and post-processing.

Instructions

In addition to the prompt templates, instructions describe what the agent is designed to do and how it can interact with users. You can use instructions to define the role of a specific agent and how it can use the available set of actions under different conditions. Instructions are combined with the prompt templates as context for each invocation of the agent. You can find how we define our agent instructions in agent_build.yaml.

User input

User input is the starting point for an interaction with an agent. The agent processes this input, understands the user’s intent and context, and then formulates an appropriate chain of thought. The agent determines whether it has the information required to answer the user’s question or needs to request more information from the user; in the latter case, it formulates a follow-up question to ask for it. Amazon Bedrock Agents is designed to handle a wide range of user inputs, from simple queries to complex, multi-turn conversations.
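The sample UI sends user input to the agent for you. To call the agent programmatically instead, the following sketch uses the `invoke_agent` operation of the boto3 `bedrock-agent-runtime` client. The agent ID and alias ID are placeholders for the values from your deployment, the helper names are ours, and running `ask_agent` requires boto3 and AWS credentials. Reusing the same `sessionId` across calls is what lets the agent keep context through multi-turn conversations, including its own follow-up questions.

```python
import uuid
from typing import Any, Iterable, Mapping


def collect_completion(events: Iterable[Mapping[str, Any]]) -> str:
    """Concatenate the text chunks from an InvokeAgent completion event stream."""
    parts = []
    for event in events:
        chunk = event.get("chunk")
        if chunk is not None:
            parts.append(chunk["bytes"].decode("utf-8"))
        # Events with a "trace" key carry the agent's reasoning steps when
        # enableTrace=True; this sketch ignores them.
    return "".join(parts)


def ask_agent(agent_id: str, alias_id: str, question: str, session_id: str = "") -> str:
    """Send one user turn to a Bedrock agent and return its final text answer."""
    import boto3  # imported lazily so collect_completion works without AWS

    client = boto3.client("bedrock-agent-runtime")
    response = client.invoke_agent(
        agentId=agent_id,
        agentAliasId=alias_id,
        # Pass the same session_id on later calls to continue the conversation
        sessionId=session_id or str(uuid.uuid4()),
        inputText=question,
        enableTrace=True,
    )
    return collect_completion(response["completion"])
```

Printing the `trace` events instead of skipping them is one way to surface the agent's chain of thought in a custom UI.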

Amazon Bedrock Knowledge Bases

An Amazon Bedrock knowledge base is a repository of information that has been vectorized from source data and that the agent can access to supplement its responses. By integrating an Amazon Bedrock knowledge base, agents can provide more accurate and contextually appropriate answers, especially for domain-specific queries that might not be covered by the LLM’s general knowledge. In this solution, we include literature on non-small cell lung cancer to represent a customer’s internal evidence.
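To make "vectorized" concrete: each chunk of the source documents is stored as an embedding vector, and a query is answered by retrieving the chunks whose vectors are closest to the query's embedding. Amazon Bedrock Knowledge Bases handles embedding, storage, and search for you. The sketch below only illustrates the idea with cosine similarity over made-up three-dimensional vectors and hypothetical chunk names; real embeddings have hundreds or thousands of dimensions, and the underlying vector store may use a different similarity metric.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: Sequence[float], index: Dict[str, Sequence[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Return the k stored chunks most similar to the query embedding."""
    scored = [(chunk_id, cosine(query, vec)) for chunk_id, vec in index.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:k]


# Made-up embeddings for three hypothetical document chunks
INDEX = {
    "egfr_review_chunk": [0.9, 0.1, 0.0],
    "radiomics_paper_chunk": [0.1, 0.9, 0.1],
    "kras_note_chunk": [0.2, 0.1, 0.9],
}

if __name__ == "__main__":
    # A made-up query embedding that points mostly along the first axis
    print(top_k([1.0, 0.0, 0.0], INDEX))
```

In RAG, the text of the top-ranked chunks is then placed in the prompt so the model can ground its answer and cite the sources.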

Action groups

Action groups are collections of specific functions or API calls that Amazon Bedrock Agents can perform. By defining action groups, you can extend the agent’s capabilities beyond conversation, enabling it to perform practical, real-world tasks. The following tools are made available to the agent through action groups in the solution. The source code can be found in the ActionGroups folder in the repository.

- Text2SQL and Redshift database invocation: The Text2SQL action group allows the agent to get the relevant schema of the Redshift database, generate a SQL query for a particular sub-question, review and refine the SQL query with an additional LLM invocation, and finally execute the SQL query to retrieve the relevant results from the Redshift database. The action group contains an OpenAPI schema for these actions. If the query execution returns a result larger than the allowed Lambda return payload size, the action group writes the data to an intermediate Amazon Simple Storage Service (Amazon S3) location instead.

- Scientific analysis with a custom container: The scientific analysis action group allows the agent to use a custom container to perform scientific analysis with specific libraries and APIs. In this solution, these include tasks such as fitting survival regression models and generating Kaplan-Meier plots for survival analysis. The custom container lets users verify that results are repeatable, without deviations in library versions or algorithmic logic. This action group defines functions with specific parameters for each of the required tasks. The Kaplan-Meier plot is written to Amazon S3.

- Biomedical literature evidence with PubMed: The PubMed action group allows the agent to interact with the PubMed Entrez Programming Utilities (E-utilities) API to fetch biomedical literature. The action group contains an OpenAPI schema that accepts user queries to search PubMed for articles. The Lambda function provides a convenient way to search for and retrieve scientific articles from the PubMed database. It lets users run searches with specific queries and retrieve article metadata, while it handles the complexities of API interactions. Overall, through this action group the agent serves as a bridge between a researcher’s query and the PubMed database, simplifying the process of accessing and processing biomedical research information.

- Medical imaging with SageMaker jobs: The medical imaging action group allows the agent to process CT scan images of specific patient groups by triggering a SageMaker processing job. We reuse the medical imaging component from this previous blog post.

The action group creates patient-level three-dimensional radiomic features that describe the size, shape, and visual attributes of the tumors observed in the CT scans, and stores them in Amazon S3. For each patient study, the following steps are performed, as shown in the figure that follows:

- Read the 2D DICOM slice files for both the CT scan and the tumor segmentation, combine them into 3D volumes, and save the volumes in NIfTI format.

- Align the CT volume and the tumor segmentation so that the computation can focus on the inside of the tumor.

- Compute radiomic features describing the tumor region using the pyradiomics library. It extracts 120 radiomic features from eight classes, such as statistical representations of the distribution and co-occurrence of intensity within the tumorous region of interest, and shape-based measurements describing the tumor morphologically.
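Two of the shape features requested in the example questions, sphericity and elongation, have compact definitions in the pyradiomics documentation. Sphericity is (36πV²)^(1/3) / A for tumor volume V and surface area A. Elongation is the square root of the ratio of the second-largest to the largest principal-component eigenvalue of the tumor region. In the solution, pyradiomics computes both from the segmentation (for example, as `original_shape_Sphericity` and `original_shape_Elongation` in the output of `RadiomicsFeatureExtractor.execute`). The sketch below only evaluates the formulas on idealized shapes to show how to read the values; the function names are ours.

```python
import math


def sphericity(volume: float, surface_area: float) -> float:
    """(36 * pi * V^2)^(1/3) / A: 1.0 for a perfect sphere, smaller for less compact shapes."""
    return (36.0 * math.pi * volume ** 2) ** (1.0 / 3.0) / surface_area


def elongation(lambda_major: float, lambda_minor: float) -> float:
    """sqrt(lambda_minor / lambda_major) from the two largest principal-component eigenvalues.

    1.0 means the region is equally extended along both axes; smaller values mean more elongated.
    """
    return math.sqrt(lambda_minor / lambda_major)


if __name__ == "__main__":
    r = 10.0
    print(round(sphericity(4.0 / 3.0 * math.pi * r ** 3, 4.0 * math.pi * r ** 2), 6))  # 1.0
    # A 10 x 10 x 10 cube is less spherical: (pi / 6) ** (1 / 3), about 0.81
    print(sphericity(1000.0, 600.0))
    print(elongation(4.0, 1.0))  # 0.5
```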

Chain of thought reasoning and responses

Let’s look at a few examples of chain of thought reasoning and execution with the supervisor agent. The supervisor agent interprets the user question, generates a sequence of steps, and executes them with the relevant sub-agents.

To respond to the following questions, the agent sets up the following orchestration workflows with the available sub-agents.

Question: What is the best gene expression biomarker (lowest p-value) associated with overall survival for patients who have undergone chemotherapy? Show me a bar chart with the top 5 biomarkers.

- I’ll generate a plan:
  - Query the biomarker database for patients’ data
  - Run survival analysis to find p-values
  - Get the top 5 biomarkers with the lowest p-values and create a visualization

- I’ll ask the Biomarker database analyst:
  - Get DB schema and generate SQL
  - Review and refine the SQL with self-evaluation
  - Execute the SQL query and store the results in Amazon S3

- I’ll ask the Statistician:
  - Retrieve the generated dataset from the database analyst
  - Fit a survival regression model with the custom container API
  - Retrieve the model fit summary results and sort the top 5 biomarkers by lowest p-value
  - Generate a bar chart with the top 5 biomarkers

Supervisor response: Gene biomarker ‘gdf15’ has the lowest p-value, 0.02; therefore, gdf15 is the best gene biomarker. The bar chart shows the top 5 biomarkers and their corresponding p-values.
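In this workflow, the Statistician ranks genes by p-value from a survival regression fitted with lifelines. As a dependency-free illustration of the same ranking step, the following sketch swaps in a simpler technique: a two-group log-rank test on a median split of each gene's expression, with genes sorted by the resulting p-value. The function names are ours, the median cutoff is an assumption, and the p-values it produces won't match those of a regression model.

```python
import math
from statistics import median
from typing import Dict, List, Tuple


def logrank_p(durations: List[float], events: List[int], group: List[int]) -> float:
    """Two-sided p-value of the two-group log-rank test (group labels are 0 or 1)."""
    death_times = sorted({t for t, e in zip(durations, events) if e})
    observed_minus_expected = 0.0
    variance = 0.0
    for t in death_times:
        # Patients still under observation just before time t
        at_risk = [g for d, g in zip(durations, group) if d >= t]
        n, n1 = len(at_risk), sum(at_risk)
        deaths = sum(1 for d, e in zip(durations, events) if d == t and e)
        deaths1 = sum(1 for d, e, g in zip(durations, events, group) if d == t and e and g)
        observed_minus_expected += deaths1 - deaths * n1 / n
        if n > 1:
            # Hypergeometric variance of the group-1 death count at time t
            variance += deaths * (n1 / n) * (1 - n1 / n) * (n - deaths) / (n - 1)
    if variance == 0:
        return 1.0  # no information to compare the two groups
    chi2 = observed_minus_expected ** 2 / variance
    # Survival function of a chi-square variable with 1 degree of freedom
    return math.erfc(math.sqrt(chi2 / 2))


def rank_biomarkers(durations: List[float], events: List[int],
                    expression: Dict[str, List[float]], top: int = 5) -> List[Tuple[str, float]]:
    """Rank genes by log-rank p-value after splitting patients at each gene's median."""
    results = []
    for gene, values in expression.items():
        cutoff = median(values)
        group = [1 if v >= cutoff else 0 for v in values]
        results.append((gene, logrank_p(durations, events, group)))
    return sorted(results, key=lambda item: item[1])[:top]
```

The sorted `(gene, p-value)` pairs are exactly what a bar chart of the top 5 biomarkers needs.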

Question: According to literature evidence, what properties of the tumor are associated with metagene X activity and the EGFR pathway?

- I’ll generate a plan:
  - Route the request to the Clinical evidence researcher

- I’ll ask the Clinical evidence researcher:
  - Query the internal knowledge base for internal evidence
  - Query PubMed for external literature
  - Summarize the results with citations

Supervisor response: Based on internal and external evidence, imaging properties, molecular characteristics, drug resistance and therapeutic approaches, signaling pathways and mechanisms, emerging therapeutic strategies, and cellular properties are relevant for the EGFR pathway.
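This workflow's external-evidence step searches PubMed through E-utilities. The sketch below, whose function names are ours rather than the repository's, builds an ESearch request against the public endpoint and extracts PubMed IDs from the JSON response. A full implementation would then fetch titles and abstracts for those IDs (for example, with the EFetch or ESummary utilities) and handle errors and rate limits.

```python
import json
from typing import List
from urllib.parse import urlencode
from urllib.request import urlopen

EUTILS = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils"


def esearch_url(term: str, retmax: int = 5) -> str:
    """Build an ESearch URL that returns matching PubMed IDs as JSON."""
    params = {"db": "pubmed", "term": term, "retmax": retmax, "retmode": "json"}
    return f"{EUTILS}/esearch.fcgi?{urlencode(params)}"


def parse_pmids(payload: str) -> List[str]:
    """Extract the list of PubMed IDs from an ESearch JSON response."""
    return json.loads(payload)["esearchresult"]["idlist"]


def search_pubmed(term: str, retmax: int = 5) -> List[str]:
    # Network call: without an API key, NCBI allows about 3 requests per second
    with urlopen(esearch_url(term, retmax), timeout=30) as resp:
        return parse_pmids(resp.read().decode("utf-8"))
```

For example, `search_pubmed("EGFR AND radiogenomics")` would return up to five PubMed IDs that the agent could cite.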

Question: Can you compute the imaging biomarkers for the patient cohort with low gene X expression? Show me the tumor segmentation and the sphericity and elongation values.

- I’ll generate a plan:
  - Query the biomarker database to find the patient cohort with the lowest gdf15 values
  - Trigger medical imaging analysis jobs to process CT scan images of the cohort
  - Summarize the findings of the imaging jobs

- I’ll ask the Biomarker database analyst:
  - Get DB schema and generate SQL
  - Review and refine the SQL with self-evaluation
  - Execute the SQL query and retrieve patient IDs

- I’ll ask the Medical imaging expert:
  - Retrieve the patient cohort from the database analyst
  - Compute imaging biomarkers for each patient in the cohort with a SageMaker medical imaging job
  - On job completion notification, retrieve and analyze the results
  - Extract the required biomarkers and visualize the tumor segmentation output images

Supervisor response: The imaging biomarker computation job that processes the patients’ medical images was triggered and is now complete. The following patients were selected based on their gene X expression values. The elongation and sphericity values for the patients’ tumors are displayed along with the corresponding tumor segmentation images.
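The first and third workflows both begin with the database analyst executing SQL. As described for the Text2SQL action group, results that exceed the Lambda return payload size are written to Amazon S3 instead of being returned inline. The following sketch shows that decision in a Lambda response for an OpenAPI-based action group. The `build_action_response` name, the `write_to_s3` callable (which in a real function might wrap an S3 `put_object` call), the `/run-query` path in the usage example, and the 20,000-byte threshold are all assumptions; check the current Amazon Bedrock Agents quotas for the actual response size limit.

```python
import json
from typing import Any, Callable, Dict, List


def build_action_response(event: Dict[str, Any], rows: List[Dict[str, Any]],
                          write_to_s3: Callable[[str], str],
                          max_bytes: int = 20_000) -> Dict[str, Any]:
    """Return query rows inline, or an S3 pointer when they are too large."""
    body = json.dumps({"rows": rows})
    # Assumed threshold; the real limit comes from the Bedrock Agents quotas
    if len(body.encode("utf-8")) > max_bytes:
        uri = write_to_s3(body)  # hypothetical helper that persists the rows
        body = json.dumps({"result_location": uri, "row_count": len(rows)})
    return {
        "messageVersion": "1.0",
        "response": {
            "actionGroup": event["actionGroup"],
            "apiPath": event["apiPath"],
            "httpMethod": event["httpMethod"],
            "httpStatusCode": 200,
            "responseBody": {"application/json": {"body": body}},
        },
    }


if __name__ == "__main__":
    ev = {"actionGroup": "text2sql", "apiPath": "/run-query", "httpMethod": "POST"}
    rows = [{"case_id": f"R01-{i:03d}"} for i in range(1000)]
    print(build_action_response(ev, rows, lambda b: "s3://example-bucket/result.json"))
```

Returning a pointer keeps the agent's context small; the next sub-agent, such as the Statistician, reads the full result from S3.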

Conclusion

Research scientists face significant challenges in identifying and validating biomarkers that are specific to cancer subtypes and relevant to interventions and patient outcomes. Current tools often require extensive manual steps to search, summarize, and generate insights across diverse data sources. This post demonstrated how Amazon Bedrock Agents can offer a flexible framework, with multi-agent collaboration and relevant tools, to help accelerate this critical discovery process.

By providing an example analysis pipeline for lung cancer survival, we showcased how these agentic workflows use a natural language interface, database retrieval, statistical modeling, literature search, and medical image processing to transform complex research queries into actionable insights. The agent used advanced capabilities such as self-review and planning, breaking down tasks into step-by-step analyses and transparently displaying the chain of thought behind its final answers. Although the potential impact of this technology on pharmaceutical research and clinical trial outcomes remains to be fully realized, solutions like this can help automate data analysis and hypothesis validation tasks.

The code for this solution is available on GitHub, and we encourage you to explore and build upon this template. For examples to get started with Amazon Bedrock Agents, check out the Amazon Bedrock Agents GitHub repository.

About the authors

Hasan Poonawala is a Senior AI/ML Solutions Architect at AWS, working with Healthcare and Life Sciences customers. Hasan helps design, deploy, and scale generative AI and machine learning applications on AWS. He has over 15 years of combined work experience in machine learning, software development, and data science on the cloud. In his spare time, Hasan loves to explore nature and spend time with friends and family.

Michael Hsieh is a Principal AI/ML Specialist Solutions Architect. He works with HCLS customers to advance their ML journey with AWS technologies and his expertise in medical imaging. As a Seattle transplant, he loves exploring the great mother nature the city has to offer, such as the hiking trails, scenic kayaking in SLU, and the sunset at Shilshole Bay.

Nihir Chadderwala is a Senior AI/ML Solutions Architect in the Global Healthcare and Life Sciences team. His background is building big data and AI-powered solutions to customer problems in a variety of domains, such as software, media, automotive, and healthcare. In his spare time, he enjoys playing tennis, and watching and reading about Cosmos.

Zeek Granston is an Associate AI/ML Solutions Architect focused on building effective artificial intelligence and machine learning solutions. He stays current with industry trends to deliver practical outcomes for clients. Outside of work, Zeek enjoys building AI applications and playing basketball.
